The bright spark in AMD's Q3 2022 financials, released last week, is the performance of its datacentre division. At a time when major technology companies are warning of soft demand and lower revenue, AMD posted datacentre revenue 45 per cent higher than a year earlier.

Most of that gain is attributed to the continued momentum of Epyc processors. Where there is yin, there is yang, and AMD's prospering datacentre fortunes contrast sharply with those of chief rival Intel, whose datacentre segment revenue fell by 27 per cent in the same timeframe and, more worryingly, whose operating income was eviscerated from $2.29bn in Q3 2021 to practically nothing in Q3 this year.

It's against this backdrop of progress that AMD keeps its foot to the floor and introduces 4th Generation Epyc processors, previously known by the codename Genoa. Harnessing up to 50 per cent more cores and threads at the top end than the previous Milan generation, and using the nascent Zen 4 architecture to its fullest, the latest Epyc chips are a serious force to be reckoned with.

AMD Epyc 9654

$11,805

Pros

- Super performance

- Cutting-edge platform

- Improved TCO

- CXL connectivity

Cons

- No HBM technology

Genoa is primed to extend AMD's lead in compute, density and energy efficiency over in-market Intel 3rd Generation Ice Lake Xeon, and it pre-empts 4th Generation Xeon Sapphire Rapids CPUs arriving January 10, 2023. There are myriad reasons for AMD to be bullish about Genoa's prospects of claiming a growing share of the lucrative server market.

Firm Foundations

Understanding the latest Genoa Epyc processors requires context. AMD introduced first-generation Naples Epyc back in June 2017. Built using the clean-sheet Zen architecture, which also debuted on desktop Ryzen that year, the 32-core, 64-thread Epyc proved a good test case of high-performance server potential. August 2019 brought along second-generation Rome Epyc processors, this time doubling core and thread counts to 64 and 128, respectively.

Hitting execution milestones with regularity, at precisely the serendipitous time when Intel floundered with subsequent Xeon launches, has given 3rd Generation Milan Epyc the perfect springboard for further incursion into the x86 server market. The latest cache-enriched entrant, Milan-X, opens previously inaccessible revenue opportunities in segments such as technical computing.

“Datacentre is AMD’s most important division”

Forrest Norrod, Senior Vice President and General Manager, Data Center Solutions Business Group, AMD

Depending on which market research notes you read, AMD currently enjoys a 20 per cent x86 server market share, up from practically nothing five years ago. 4th Generation Epyc, encompassing multiple swim lanes, is the precious key to unlocking greater opportunity.

Genoa Is Not Unique

Which brings us nicely on to the ever-expanding Epyc portfolio. AMD has historically used one design per generation. Recognising that Epyc now has traction with big-name players who have watched it develop over time, AMD is broadening the product stack with four variants, each tackling a different segment of the large server market.

| 4th Generation Epyc | Release Year | Maximum Cores / Threads | Architecture |
|---|---|---|---|
| Genoa | 2022 | 96 / 192 | Zen 4 |
| Genoa-X | 2023 H1 | 96 / 192 | Zen 4 |
| Bergamo | 2023 H1 | 128 / 256 | Zen 4c |
| Siena | 2023 H2 | 64 / 128 | Zen 4 |

The initial 4th Generation thrust is provided by standard Genoa, which uses the Zen 4 architecture and scales up to 96 cores and 192 threads. Early 2023 will see a cache-enlarged version known as Genoa-X, housing up to 1,152MB of L3, much in the same way as Milan is augmented by Milan-X. The third Epyc swim lane is Bergamo, which uses a density-optimised architecture known as Zen 4c. The purpose is to fit the same number of cores and threads into half the die space (caches will have to be smaller than on regular Zen 4, one imagines), providing the opportunity to push up to 128C/256T per socket. Finally, for cost-sensitive environments where value is a larger play than all-out performance, think edge computing, Siena comes to the fore next year. Get the feeling Epyc is about to enter prime time?

Though other 4th Generation Epyc processors are vigorous enough in their own right, the focus of today's review is straight-up Genoa. Key callouts over last-gen Epyc are legion: more cores and threads, a new socket, a better architecture, additional memory channels, DDR5, PCIe 5.0, CXL, and so forth. Let's take each in turn before benchmarking a 2P Titanite server featuring best-in-class Epyc 9654 chips with 192 threads each.

More Cores And Threads

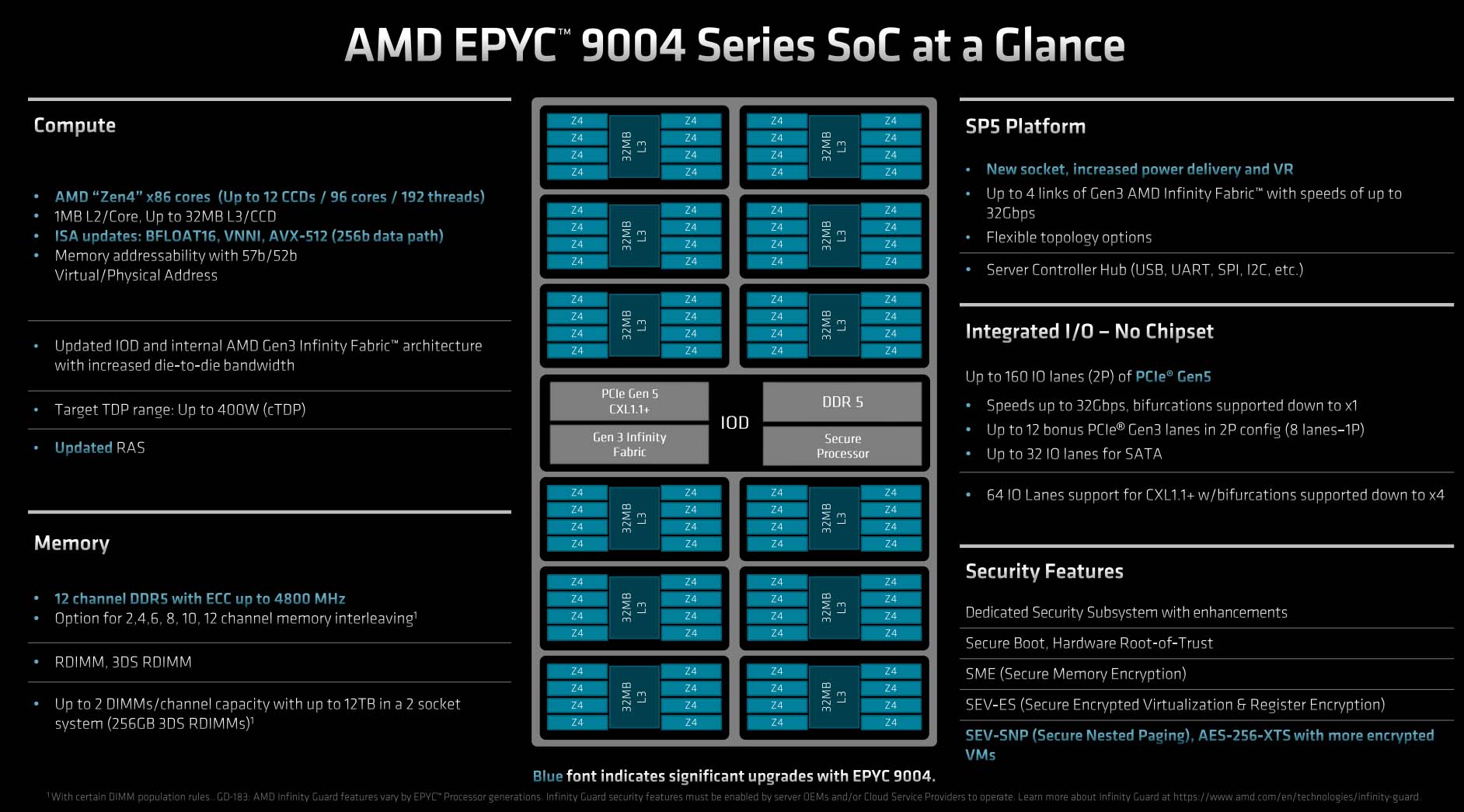

Having been stuck at 64 cores and 128 threads since 2nd Generation Epyc, AMD finally ups the maximum count by 50 per cent, to 96/192. The chief reason per-socket density increases is the fabrication move from Milan's 7nm compute dies and 14nm IOD to 5nm and 6nm, respectively, for Genoa. Leveraging TSMC's smaller, more energy-efficient processes gives AMD the green light to scale higher on cores and frequency.

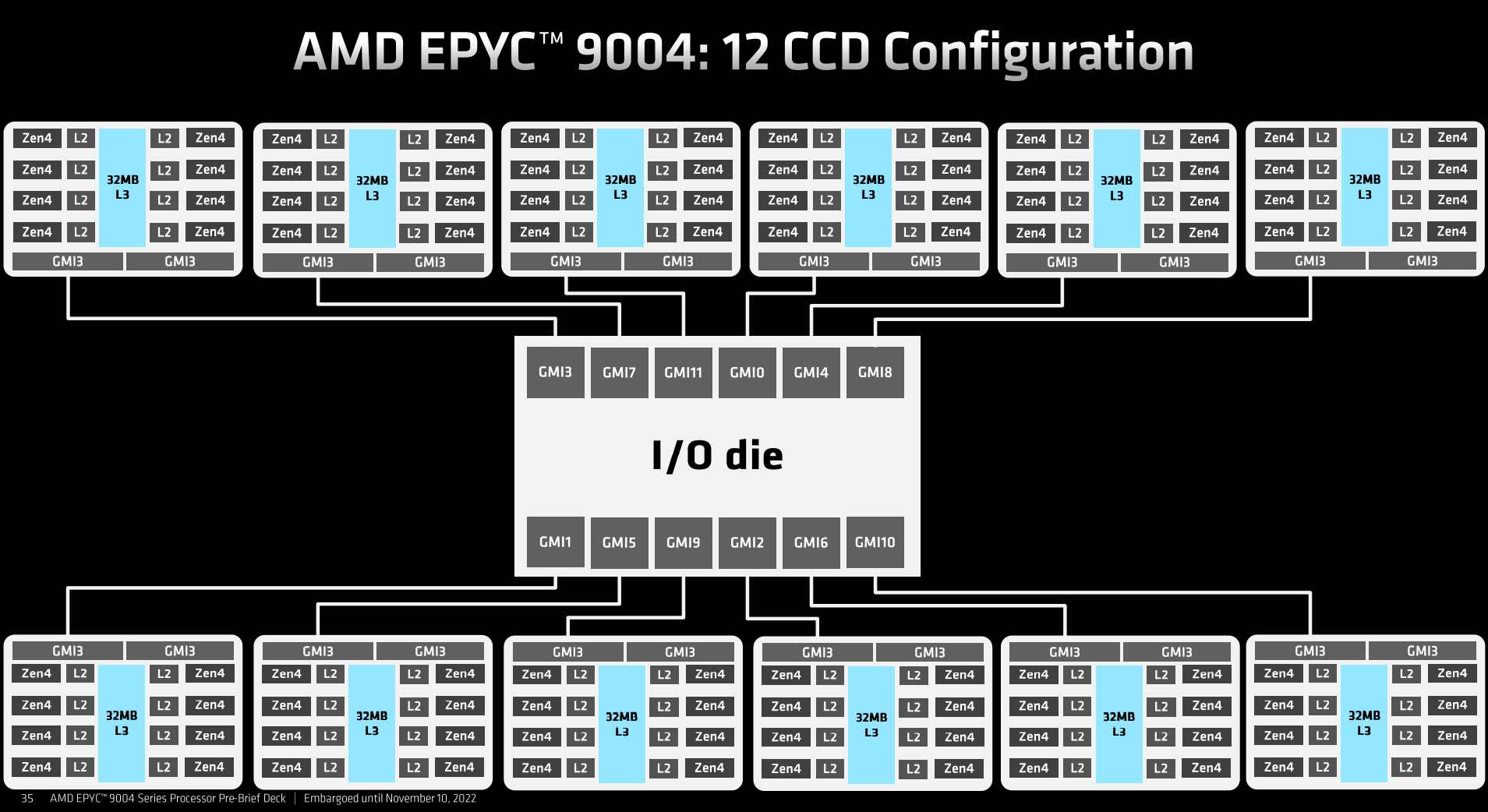

The pioneering chiplet design strategy introduced with Epyc/Ryzen back in 2017 pays clear dividends with high-core-count processors. AMD keeps eight cores per chiplet but increases the number of chiplets from last-gen's eight (7nm) to 12 CCDs (5nm), the simplest method of boosting core and thread count by a substantial 50 per cent. As on desktop Ryzen 7000 Series CPUs, AMD doubles per-core L2 to 1MB compared to the previous generation but keeps per-CCD L3 at 32MB.

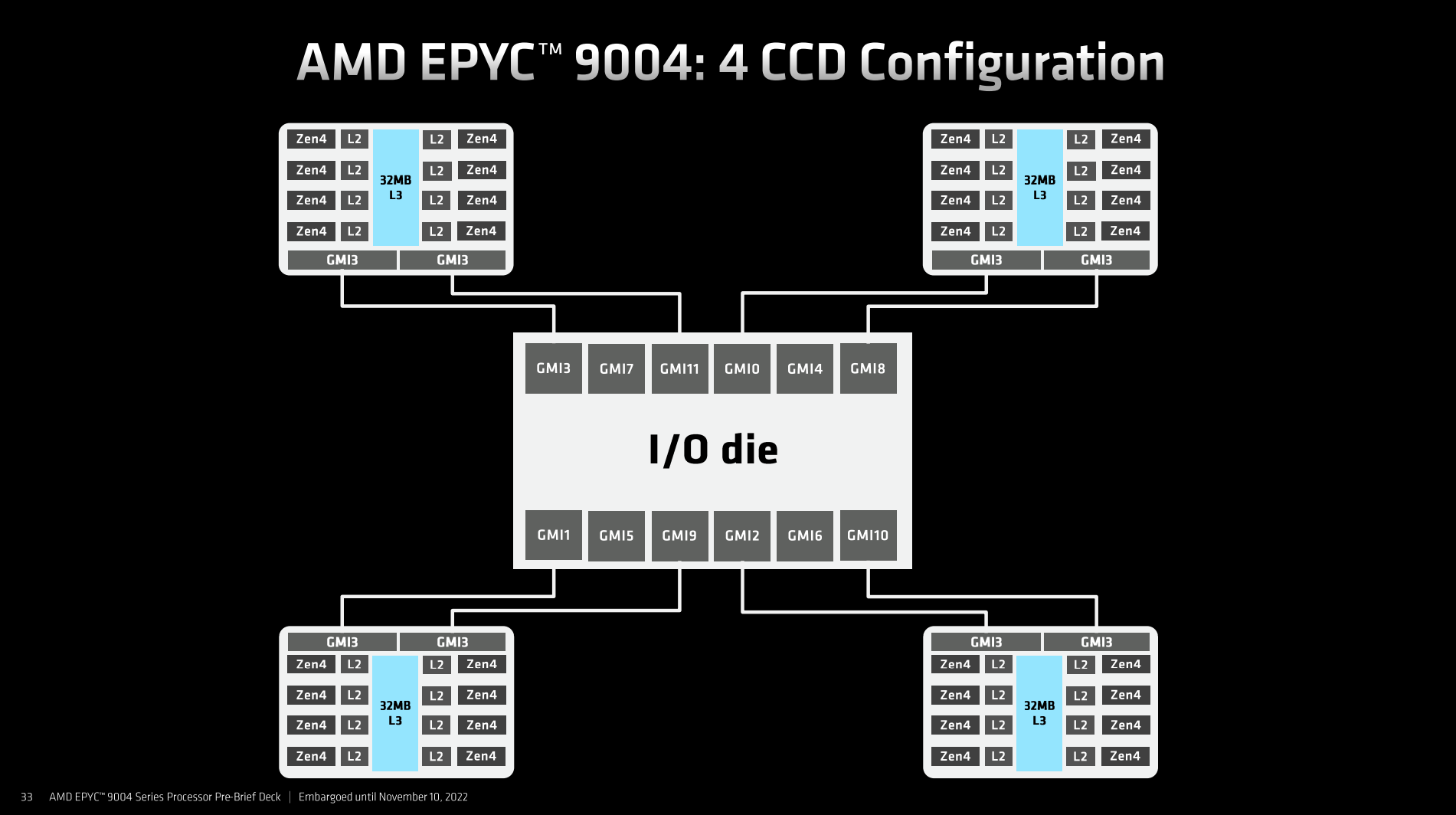

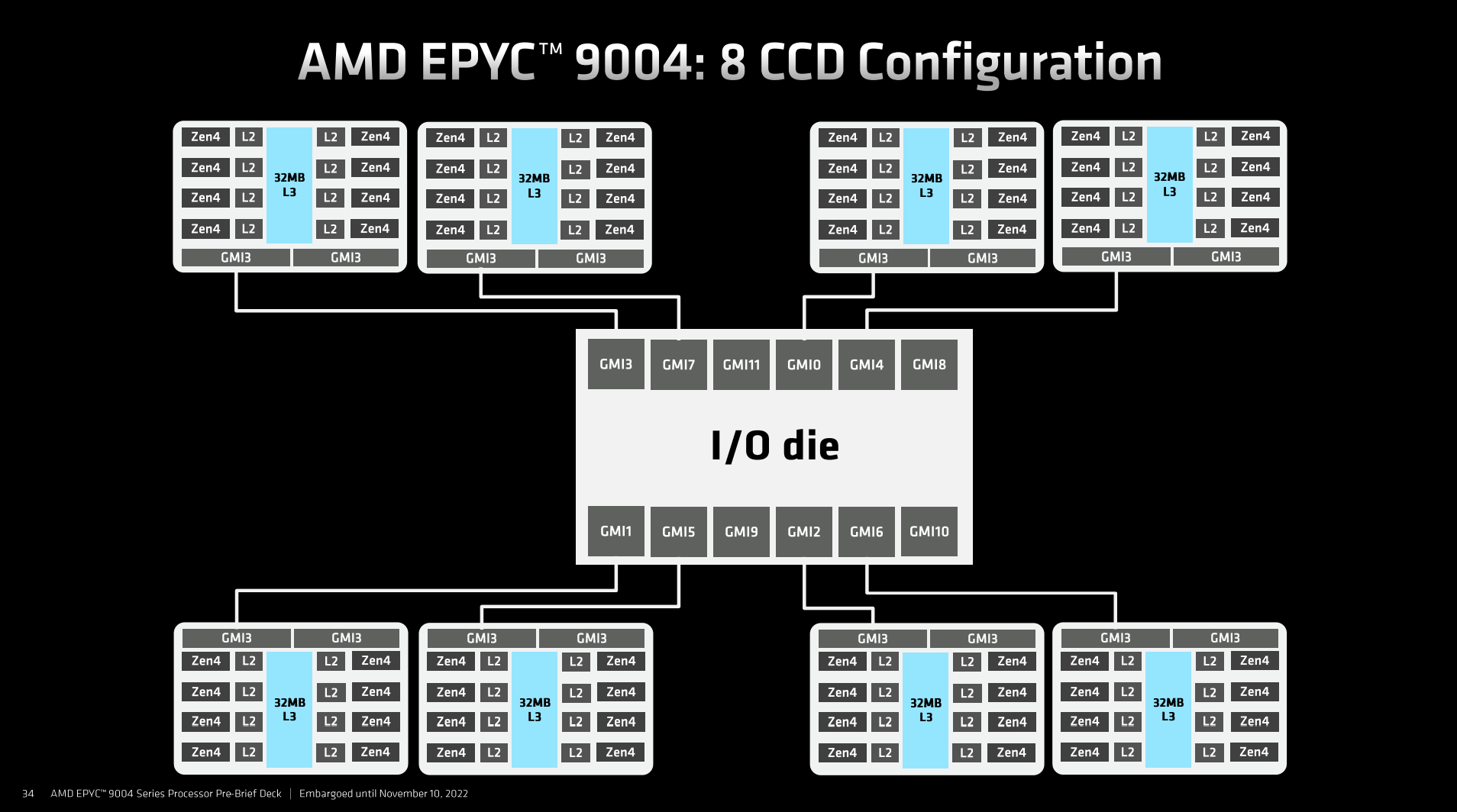

Up to 12 CCDs, depending upon model, continue to connect to a central I/O die, conveniently known as the IOD. AMD can build various core and L3 cache configurations by massaging the number of CCDs. Simple maths informs us that the top-bin 96-core chips use all 12 CCDs and carry 384MB of L3.
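Those totals fall straight out of the per-CCD figures, so they are easy to script. The following is a back-of-envelope sketch, not anything AMD publishes: it assumes only the numbers quoted above (eight cores per CCD, 1MB of L2 per core, 32MB of L3 per CCD) plus two threads per core. The `stacked_l3_per_ccd_mb` parameter is a hypothetical knob; 64MB per CCD is simply our inference of what Genoa-X's quoted 1,152MB implies across 12 CCDs if the base 32MB stays put.

```python
from dataclasses import dataclass

# Per-CCD figures quoted for Genoa (Zen 4)
CORES_PER_CCD = 8
THREADS_PER_CORE = 2   # SMT2
L2_PER_CORE_MB = 1
L3_PER_CCD_MB = 32


@dataclass(frozen=True)
class Package:
    """A fully enabled Genoa package with `ccds` compute dies."""
    ccds: int
    stacked_l3_per_ccd_mb: int = 0  # hypothetical: extra stacked L3 per CCD

    @property
    def cores(self) -> int:
        return self.ccds * CORES_PER_CCD

    @property
    def threads(self) -> int:
        return self.cores * THREADS_PER_CORE

    @property
    def l2_mb(self) -> int:
        return self.cores * L2_PER_CORE_MB

    @property
    def l3_mb(self) -> int:
        return self.ccds * (L3_PER_CCD_MB + self.stacked_l3_per_ccd_mb)


for n in (4, 8, 12):
    p = Package(n)
    print(f"{n:>2} CCDs: {p.cores}C/{p.threads}T, {p.l2_mb}MB L2, {p.l3_mb}MB L3")

# Assumed Genoa-X-style part: 32MB base plus 64MB stacked per CCD
print(Package(12, stacked_l3_per_ccd_mb=64).l3_mb)
```

The 12-CCD row reproduces the 96C/192T, 384MB top-bin figure; SKUs with fewer cores or less cache lit up sit below these per-package ceilings.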

There are three distinct Genoa packages on offer: a four-CCD, an eight-CCD, and a top-stack 12-CCD configuration. If AMD wants a lot of L3 cache and relatively few cores, then either the 8- or 12-CCD package is used; remember, each CCD holds 32MB of L3. One can, for example, theoretically build a 32-core Epyc with any of the three configurations. In that instance, the first would be fully populated, the second half populated, and the third would use only one-third of its resources. All would have varying levels of L3 cache, too. Generally speaking, CCD count rises in concert with required cores.
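To make the 32-core example concrete, here is a small sketch that reports what fraction of each package's cores a given core count would use, plus the most L3 that package could carry. It is illustrative only: it assumes the package's full 32MB per CCD stays available, which real SKUs do not always do, and it says nothing about how AMD actually distributes enabled cores across CCDs.

```python
from fractions import Fraction

CORES_PER_CCD = 8
L3_PER_CCD_MB = 32
PACKAGES = (4, 8, 12)  # CCD counts of the three Genoa packages


def share_enabled(cores: int, ccds: int):
    """Fraction of a package's cores that `cores` would use,
    or None if the package cannot host that many."""
    capacity = ccds * CORES_PER_CCD
    if not 1 <= cores <= capacity:
        return None
    return Fraction(cores, capacity)


def options(cores: int):
    """List (ccds, share_enabled, max_l3_mb) for every package that fits."""
    result = []
    for ccds in PACKAGES:
        share = share_enabled(cores, ccds)
        if share is not None:
            # Upper bound: assumes every CCD keeps its full 32MB of L3
            result.append((ccds, share, ccds * L3_PER_CCD_MB))
    return result


for ccds, share, l3 in options(32):
    print(f"{ccds:>2}-CCD package: {share} of cores enabled, up to {l3}MB L3")
```

For 32 cores this gives shares of 1, 1/2 and 1/3 for the four-, eight- and 12-CCD packages, matching the fully, half and one-third populated cases described above.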

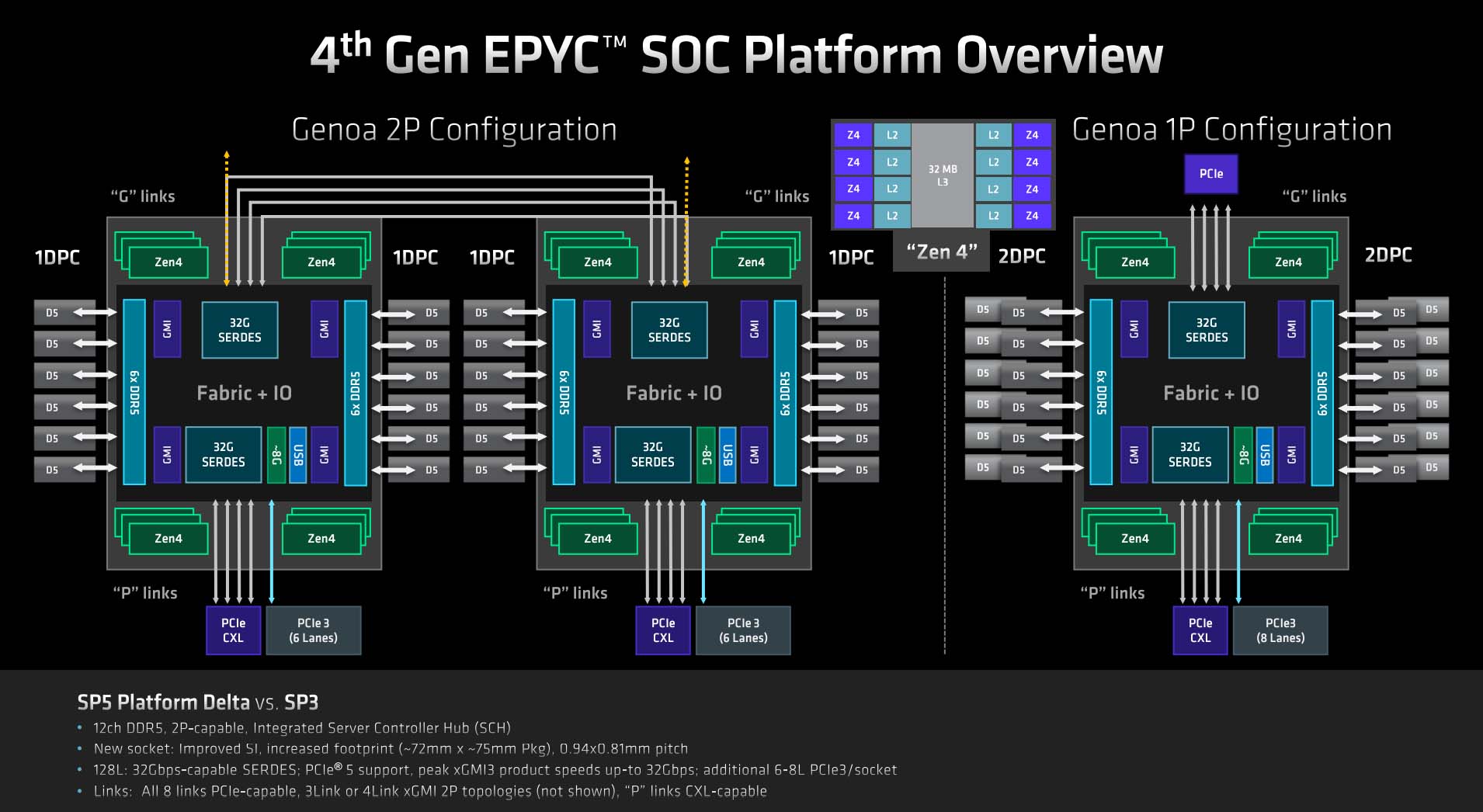

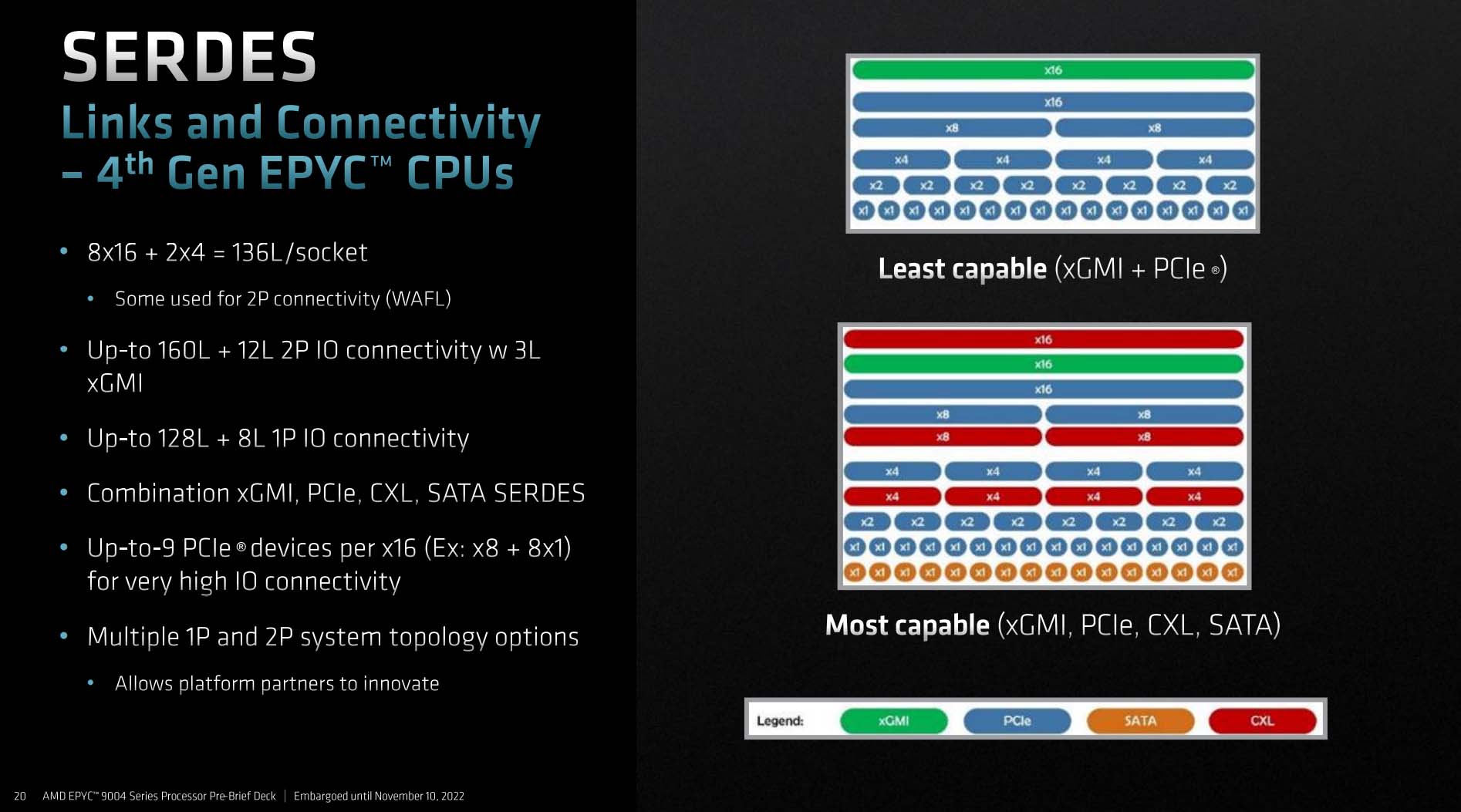

PCIe 5.0 – Double The Speed

These complicated slides detail how Genoa's expansion (SerDes, GMI, etc.) is plumbed out of the IOD. The total number of PCIe lanes doesn't change between generations: there are 128 available in a single-processor environment, rising to 160 in a 2P solution, with the remainder used to join the chips together. Far more useful in a datacentre processor is the doubling of per-lane throughput that comes with the upgrade from PCIe 4.0 to PCIe 5.0, which is also why SerDes links increase from 25G on Milan to 32G on Genoa.
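What that doubling means per link can be worked out from the signalling rate. The sketch assumes the standard 16 GT/s (PCIe 4.0) and 32 GT/s (PCIe 5.0) per-lane rates with 128b/130b line coding, and deliberately ignores packet and protocol overhead, so real-world transfers land somewhat lower.

```python
# Per-lane signalling rate in gigatransfers per second (GT/s)
RATES_GT_S = {"PCIe 4.0": 16, "PCIe 5.0": 32}
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line coding, used by both generations


def link_bandwidth_gb_s(gen: str, lanes: int = 16) -> float:
    """Approximate bandwidth in GB/s per direction after line coding.

    Ignores packet headers and other protocol overhead.
    """
    bits_per_s = RATES_GT_S[gen] * 1e9 * ENCODING_EFFICIENCY * lanes
    return bits_per_s / 8 / 1e9


for gen in RATES_GT_S:
    print(f"{gen} x16: {link_bandwidth_gb_s(gen):.1f} GB/s per direction")

# All 128 single-socket lanes at PCIe 5.0 rates
print(f"128 lanes: {link_bandwidth_gb_s('PCIe 5.0', 128):.0f} GB/s per direction")
```

An x16 link moves from roughly 31.5GB/s to 63GB/s in each direction, with the lane count unchanged.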

The flexibility in how lanes are apportioned enables turnkey system providers to differentiate their products. There can be masses of PCIe devices hanging off the lanes – up to nine per x16 link – plenty of SATA, xGMI for graphics, or CXL for memory expansion (more on that later).

Socket To Me

AMD has put great store by maintaining motherboard socket compatibility through multiple generations of desktop and server processors, thus enabling hassle-free upgrading over time. It's arguable AMD could have kept the original SP3 socket that's been in service for the previous three generations, even with today's 12-CCD SoCs, but it has chosen not to. Shifting over to a brand-new SP5 socket is considered necessary due to the attendant requirements for increased power delivery, expanded memory channels, Infinity Fabric speed, PCIe 5.0, et al.

Given the longevity and scope of incumbent SP3, we have no qualms about AMD’s platform-wide upgrade for this generation, especially as it covers multiple 4th Gen Epyc product lines.

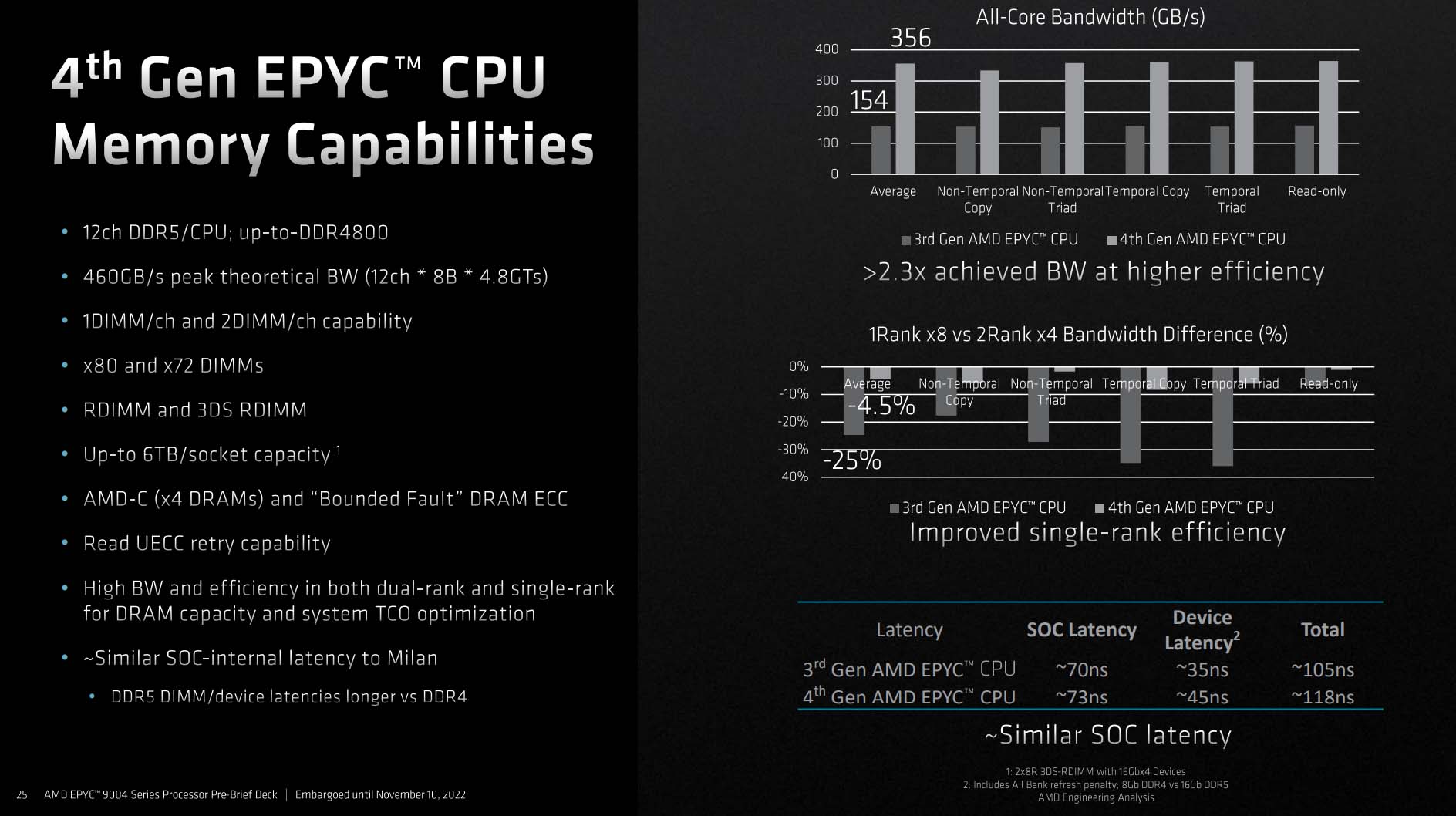

A well-thought-out server platform strikes a good balance between compute throughput, caches, and discrete memory capacity and bandwidth. This is why Genoa moves from the eight-channel DDR4 present on Milan to 12-channel DDR5. Peak bandwidth increases from last-gen's 204.8GB/s (DDR4-3200, 8ch) to 460.8GB/s (DDR5-4800, 12ch), a considerable 2.25x hike.

In practice, AMD measures 2.3x higher bandwidth when comparing generations. More impressive is the improved efficiency when running single-rank memory rather than the preferred dual-rank. Single-rank was an Achilles heel for 3rd Gen Epyc, where performance dropped by as much as 25 per cent, but Genoa maintains much higher efficiency this time around.
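The theoretical peaks follow from transfer rate, bus width and channel count. This sketch reproduces them under the usual assumption of a 64-bit (8-byte) path per channel; it gives a generational ratio of 2.25x and shows that the peak share per core still rises at the top of the stack despite 50 per cent more cores. Measured figures, AMD's included, will differ from these paper numbers.

```python
def peak_bandwidth_gb_s(mt_s: int, channels: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak = transfers per second x bytes per transfer x channels.

    Each DDR4 channel, and each DDR5 channel as counted here (a DIMM's two
    32-bit subchannels), moves 64 bits, or 8 bytes, per transfer.
    """
    return mt_s * 1e6 * bytes_per_transfer * channels / 1e9


milan = peak_bandwidth_gb_s(3200, 8)    # DDR4-3200, eight channels
genoa = peak_bandwidth_gb_s(4800, 12)   # DDR5-4800, 12 channels

print(f"Milan {milan:.1f} GB/s, Genoa {genoa:.1f} GB/s, ratio {genoa / milan:.2f}x")
# Peak share per core for the top 64-core and 96-core parts
print(f"Per core: {milan / 64:.1f} vs {genoa / 96:.1f} GB/s")
```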

New memory types usually arrive at the cost of latency. DDR5 is no exception, though total latency isn't too far off that of DDR4-based Epycs. On the plus side, having more memory channels paves the way for higher capacities per socket and box… and that's without taking new-fangled CXL into account.

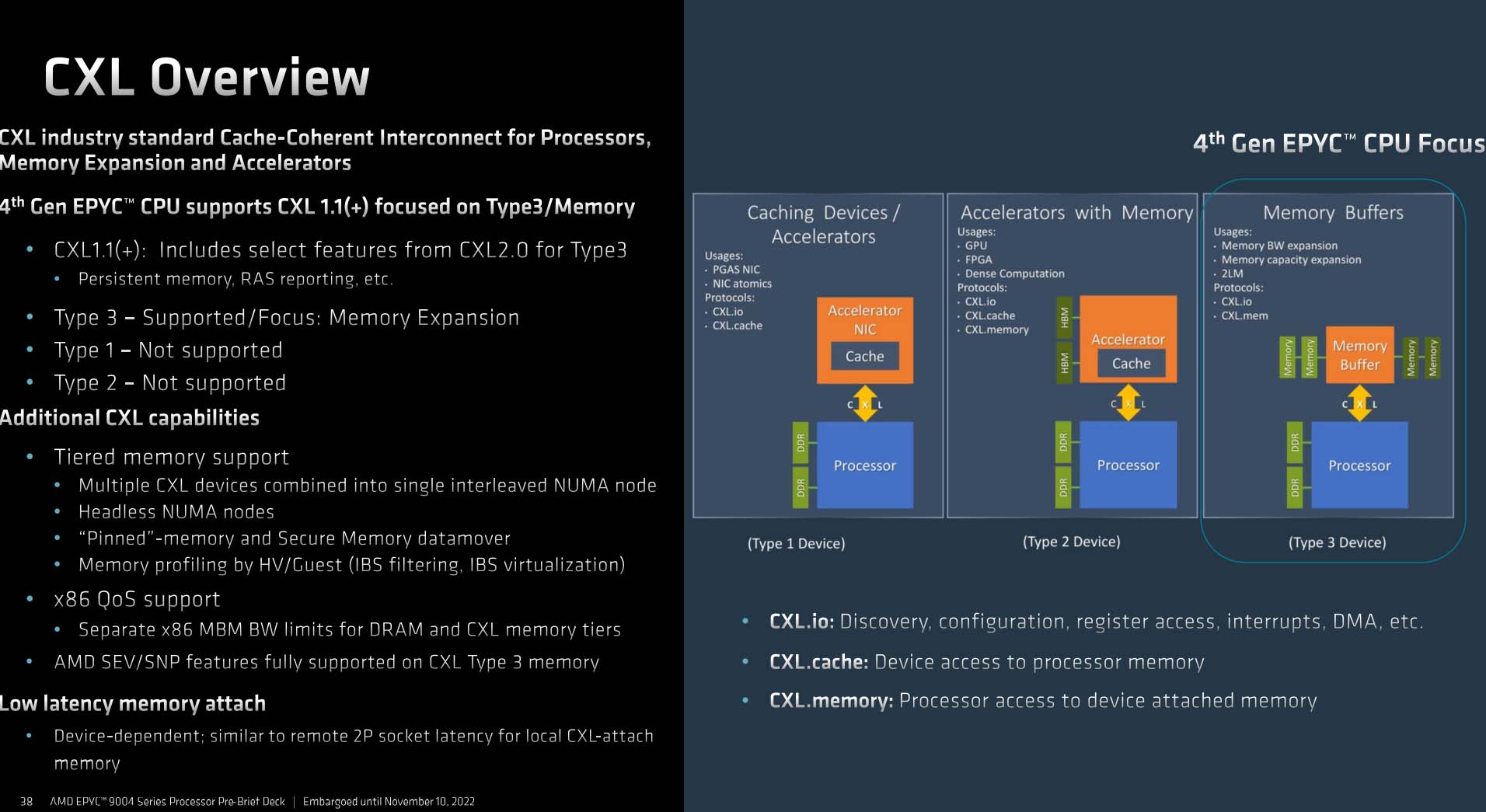

CXL – A Much-Needed Feature

Features are integrated into silicon when chip architects deem it most prudent to do so. 4th Generation Epyc was not originally designed to support Intel-developed Compute Express Link (CXL) technology. According to CTO Mark Papermaster, AMD was impressed with the open standard and, with an eye on future developments, decided late in the game to integrate CXL into Genoa.

Though Genoa is rich in memory footprint and speed, the emerging CXL technology offers a simple means to add even more RAM to the system via the PCIe-riding interface. The faster the interface, the better the memory performance, and use of PCIe 5.0 pays dividends.

“Late in the game, we decided CXL had to be in Genoa”

Mark Papermaster, Chief Technology Officer and Executive Vice President, AMD

AMD's 64-lane allowance is focussed on memory expansion (Type 3) rather than specialised accelerators (Type 1) or general-purpose accelerators (Type 2), which is ostensibly the same level of CXL support as upcoming Intel Sapphire Rapids.
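For readers new to the type numbering, the CXL specification classifies devices by which of its three sub-protocols they use: CXL.io, CXL.cache and CXL.mem. The sketch below encodes that mapping. Modelling a host's support as a simple set of protocols is our own simplification, and `memory_focused_host` is a hypothetical stand-in for memory-expansion-only support, not a description of how Genoa reports its capabilities.

```python
from enum import Enum, Flag, auto


class Protocol(Flag):
    IO = auto()     # CXL.io: PCIe-based discovery, configuration and DMA
    CACHE = auto()  # CXL.cache: device coherently caches host memory
    MEM = auto()    # CXL.mem: host loads/stores to device-attached memory


class DeviceType(Enum):
    TYPE1 = Protocol.IO | Protocol.CACHE                 # caching accelerators without host-visible memory
    TYPE2 = Protocol.IO | Protocol.CACHE | Protocol.MEM  # accelerators with their own memory
    TYPE3 = Protocol.IO | Protocol.MEM                   # memory expanders


def supported(device: DeviceType, host: Protocol) -> bool:
    """True if the host handles every protocol the device type needs."""
    return (device.value & host) == device.value


# Hypothetical host that only handles what memory expansion requires
memory_focused_host = Protocol.IO | Protocol.MEM

for device in DeviceType:
    print(device.name, supported(device, memory_focused_host))
```

Under this model only Type 3 devices are served, which is the memory-expander niche Samsung's modules fill.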

Adding extra memory to an already-full system remains useful in segments where the working dataset is large – think databases and machine learning – but there is a latency penalty, albeit small, when traversing another bus. CXL is in its infancy and compatible memory vendors are still thin on the ground. The good news is that Samsung has already announced a CXL Type 3 memory expander offering 512GB modules.

CXL becomes truly interesting when as-yet-unsupported Type 1 and Type 2 products become available and are implemented in next-gen hardware. The protocol opens up the tantalising opportunity of truly disaggregated computing, linking cores to peripherals and memory via multiple CXL interfaces. Less reliance on future IODs, perhaps?

For now, though, AMD's support lays the framework for having weird and wonderful amounts of memory populated in a server. As it also grabs features from CXL 2.0 Type 3, namely memory pooling and persistent memory support, we look forward to seeing how adventurous partners build out future boxes.

Zen 4 Smarts

Alongside more cores at the premium end of the stack and higher frequencies made possible by 5nm core production, AMD continues throwing the kitchen sink at Genoa by using the latest Zen 4 architecture.

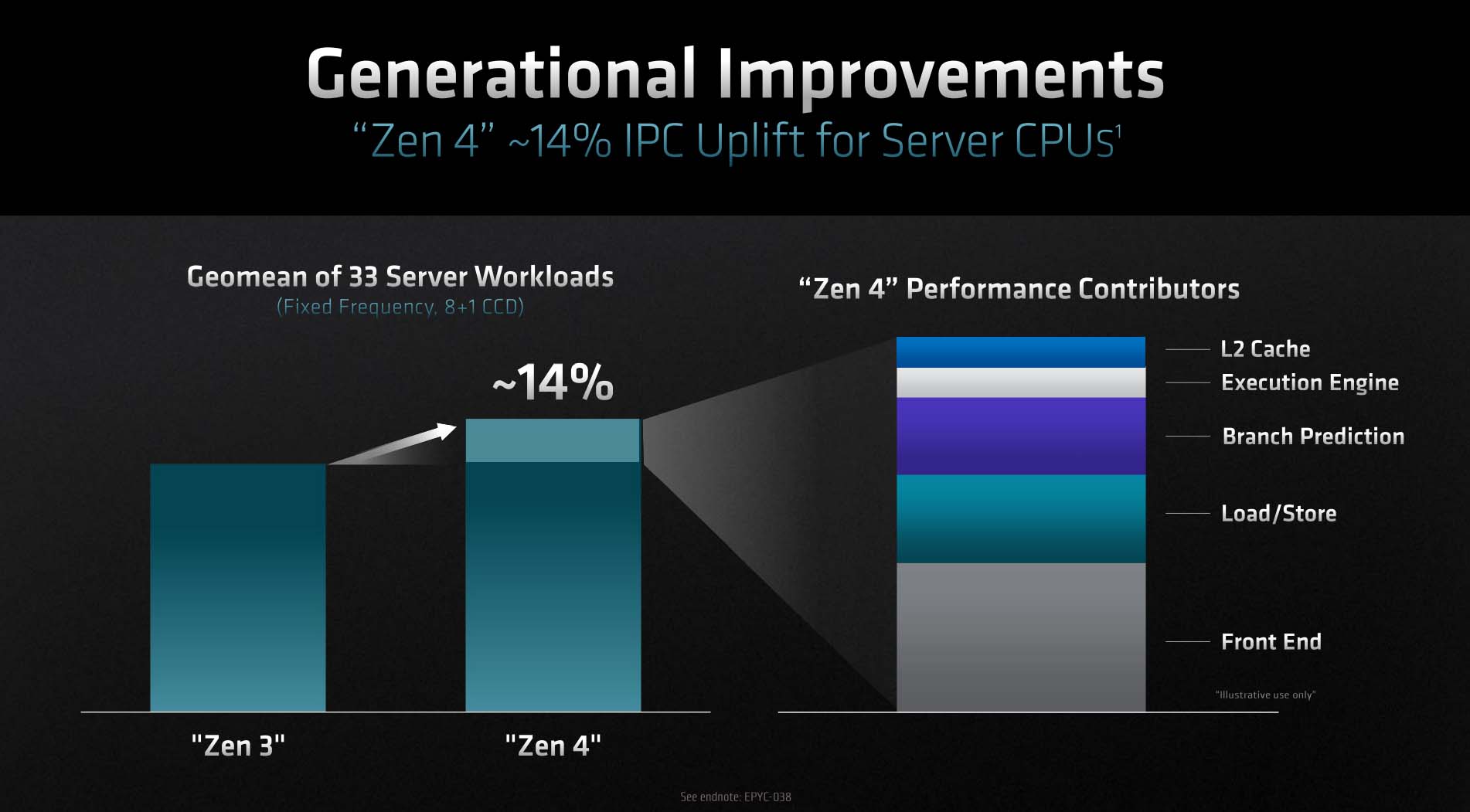

Replicating IPC improvements seen on the desktop iteration of Zen 4, AMD claims a 14 per cent uplift over Zen 3 at identical frequencies.

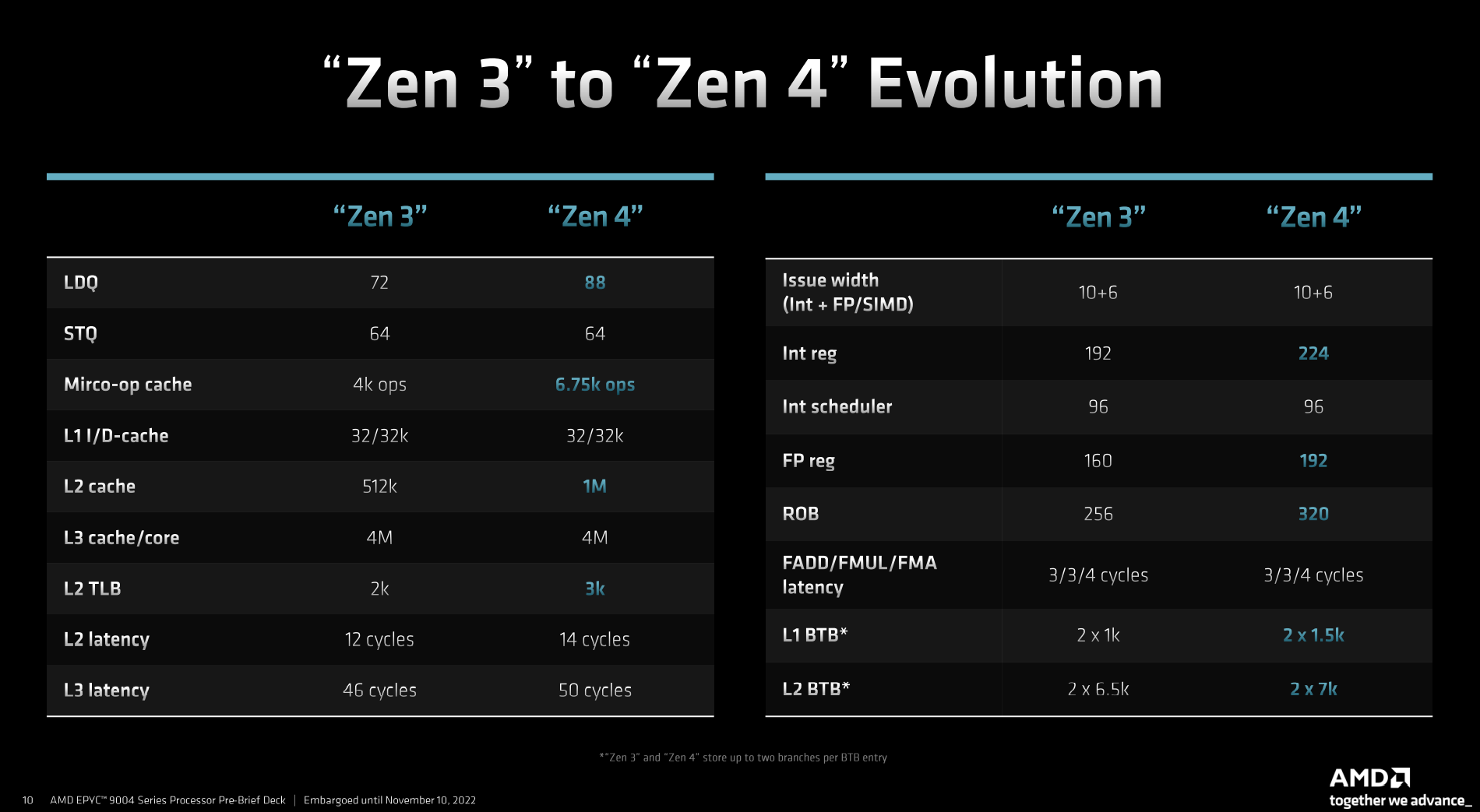

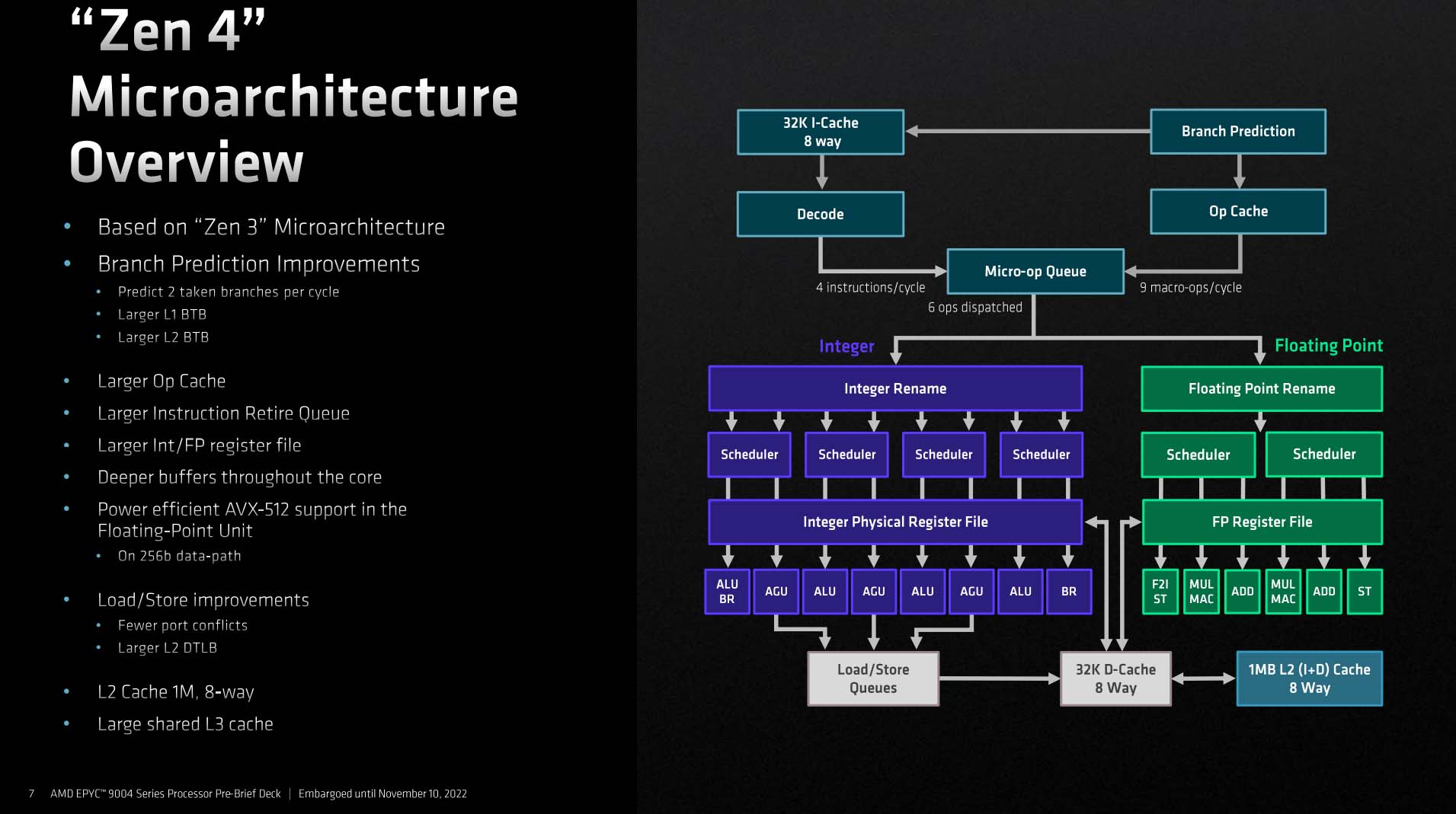

Front-end and branch prediction advances account for the largest portion of the IPC gain, somewhere in the region of 60 per cent, and key here is the increase in the size and throughput of the op cache. The reasoning is simple enough: Zen 4, like its predecessor, decodes only four regular x86 instructions per cycle, which is a bottleneck when the core can dispatch six ops per cycle into the execution engines.

AMD gets around this with a 4K-entry op cache on Zen 3, increased to around 7K entries on Zen 4, alongside larger supporting buffers. That's been a hallmark of Zen evolution over the years. Zen 2, for example, had an L1 Branch Target Buffer (BTB) of only 512 entries; Zen 3 increased that to 1,024, and Zen 4 goes up to 1,536 entries. It wouldn't surprise us one iota if Zen 5 doubles buffer and cache sizes.

The easiest way to visualise the overall benefit is to imagine the front-end being a funnel into the core’s engine – the wider and more efficient it is, the better one can saturate execution engines. Some of the most sought-after CPU architects are high-quality front-end merchants… and with good reason.
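The funnel argument can be put into numbers with a deliberately crude model. In the sketch below, op-cache hits deliver ops at `op_cache_width` per cycle, misses fall back to the four-wide decoders, and the six-wide dispatch stage caps the total. The four-wide decode and six-wide dispatch come from the text above; the op-cache delivery rate of eight is an illustrative assumption, and the model ignores mispredicts, fetch bubbles and fusion effects that real cores contend with.

```python
def frontend_ops_per_cycle(op_cache_hit: float,
                           decode_width: int = 4,
                           op_cache_width: int = 8,   # assumed, for illustration
                           dispatch_width: int = 6) -> float:
    """Average ops per cycle handed to dispatch in a toy front-end model."""
    if not 0.0 <= op_cache_hit <= 1.0:
        raise ValueError("op_cache_hit must be between 0 and 1")
    supplied = op_cache_hit * op_cache_width + (1 - op_cache_hit) * decode_width
    # Dispatch width is the hard ceiling on what the back end receives
    return min(supplied, float(dispatch_width))


for hit in (0.0, 0.25, 0.5, 0.75):
    print(f"hit rate {hit:.0%}: {frontend_ops_per_cycle(hit):.1f} ops/cycle")
```

The point is directional only: with decode fixed at four, the share of ops served from the op cache decides how close the core gets to saturating dispatch, which is why a larger op cache pays off.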

If you're going to feed the beast, it had better have the stomach to digest larger portions. This is where larger instruction retire queues and register files come into play. Adding them takes transistor space, explaining why Zen 4 is fundamentally larger than Zen 3, though it's considered worth the expense as performance benefits outweigh the cost of implementation, helped by that move from 7nm to 5nm.

Moving on down to load/store units, Zen 4 goes, you guessed it, larger in areas that matter. Of particular note is the 50 per cent increase in L2 DTLB, though from what we can glean, it may be less associative.

This bird's-eye view of key changes reinforces the message doled out above. AMD spends most of Zen 4's additional resources on expanding buffers, caches and queues. The downside of doing so is increased latency to the larger, slower L2 and L3 caches, yet a few extra cycles can be masked by more in-flight processing.

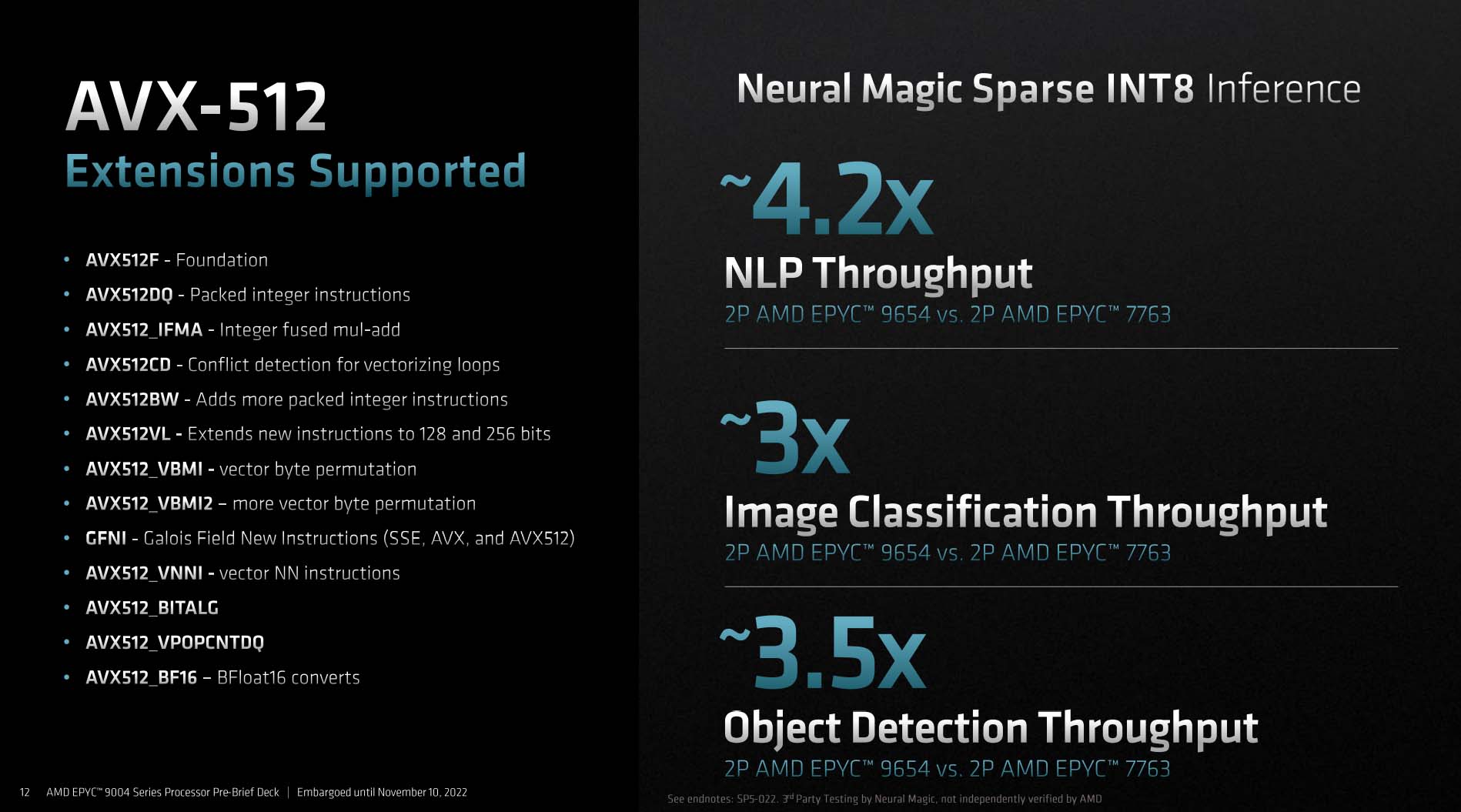

Did we forget to mention AVX-512 support? How remiss. Zen 4 does indeed support most of the feature set, yet does so in an interesting way. Rather than go for native 512-bit-wide execution units, which is the optimal but expensive way, AMD chooses to execute 512-bit instructions by double-pumping its 256-bit-wide datapaths. This approach is slower than a native implementation, of course, and Zen 4 does it this way, we imagine, so as not to hurt frequency too much. Full-on AVX-512 workloads have a habit of dragging speeds down and thermals up. Going double-pumped, though not ideal, is a good middle ground in balancing die space against all-out performance.
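Conceptually, double-pumping means a 512-bit operation is split into two 256-bit halves that pass through the same 256-bit datapath one after the other. The Python sketch below illustrates only that idea, using a float32 vector add; it is not how the hardware is built and says nothing about how Zen 4 schedules or pipelines the two halves internally.

```python
from typing import List, Tuple

NATIVE_LANES = 8   # a 256-bit datapath holds 8 x 32-bit floats
ZMM_LANES = 16     # a 512-bit (ZMM) register holds 16 x 32-bit floats


def add_256(a: List[float], b: List[float]) -> List[float]:
    """One pass through a 256-bit-wide adder."""
    if len(a) != NATIVE_LANES or len(b) != NATIVE_LANES:
        raise ValueError("expected 8-lane vectors")
    return [x + y for x, y in zip(a, b)]


def add_512_double_pumped(a: List[float], b: List[float]) -> Tuple[List[float], int]:
    """Return (sum, passes): a 16-lane add done as two 8-lane passes,
    low half first, then high half."""
    if len(a) != ZMM_LANES or len(b) != ZMM_LANES:
        raise ValueError("expected 16-lane vectors")
    result: List[float] = []
    passes = 0
    for start in range(0, ZMM_LANES, NATIVE_LANES):
        result += add_256(a[start:start + NATIVE_LANES], b[start:start + NATIVE_LANES])
        passes += 1
    return result, passes


total, passes = add_512_double_pumped([float(i) for i in range(16)], [1.0] * 16)
print(total, passes)
```

The result is identical to a native 512-bit add; the cost is the second trip through the datapath, which is the throughput trade-off described above.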

Models, Specs And Comparisons

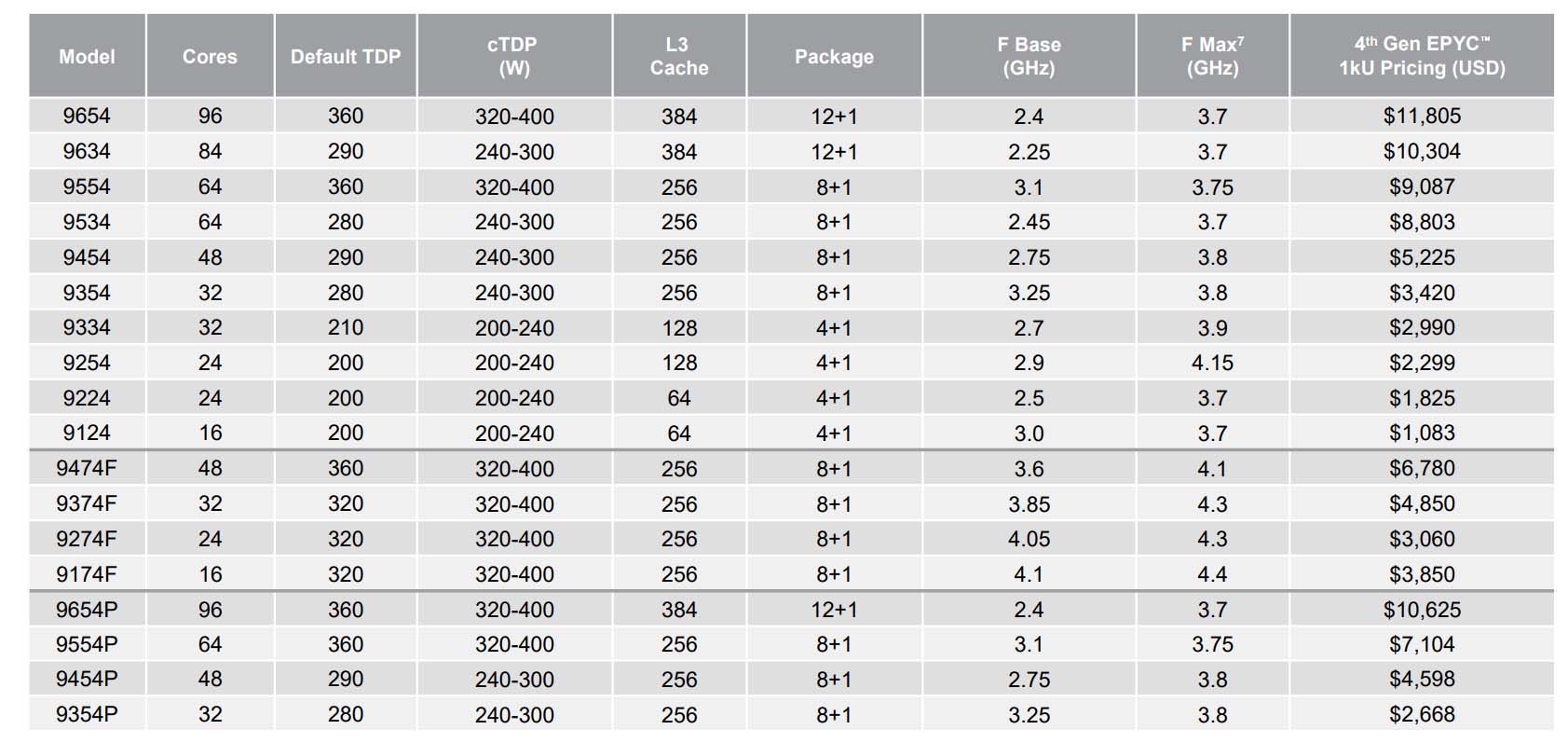

With Genoa bulked out on multiple fronts, we already know it'll be faster than Milan on a core-for-core basis. We also know there are models offering 50 per cent more cores than any Epyc that has gone before. This heady mix coalesces into 18 models available from today.

Here's the motherlode. The first aspect to note is increased power consumption across the board. The lowest default TDP of 210W is 55W more than on 3rd Generation Milan, while top-bin power rises from 280W to 360W. That's somewhat strange, particularly for entry-level models, as moving down to 5nm ought to save power.

We can also see how each model is built from a packaging point of view. As discussed further up, AMD chooses between 4-, 8-, and 12-CCD topologies, depending on how it sees the chip fit into the wider landscape. For example, the 16-core 9124 uses the four-CCD package, but the also-16-core 9174F, which is over 3x as expensive, rides with an 8-CCD setup.

Matters become even more detailed once you consider how much cache is lit up in each CCD. Going back to our 16-core example, the $1,083 9124 has only 64MB, whereas the high-frequency 9174F uses the eight-CCD maximum of 256MB. Horses for courses.

It’s instructive to compare same-stack models from Genoa and Milan, getting a sense of how performance and pricing plays out over generations.

| Model | Cores / Threads | TDP | L3 Cache | Base Clock | Boost Clock | Launch MSRP |
|---|---|---|---|---|---|---|
| Epyc 9654 | 96 / 192 | 360W | 384MB | 2.40GHz | 3.70GHz | $11,805 |
| Epyc 7773X | 64 / 128 | 280W | 768MB | 2.20GHz | 3.50GHz | $8,800 |
| Epyc 9554 | 64 / 128 | 360W | 256MB | 3.10GHz | 3.75GHz | $9,087 |
| Epyc 7763 | 64 / 128 | 280W | 256MB | 2.45GHz | 3.50GHz | $7,890 |
| Epyc 9474F | 48 / 96 | 360W | 256MB | 3.60GHz | 4.10GHz | $6,780 |
| Epyc 7643 | 48 / 96 | 225W | 256MB | 2.30GHz | 3.60GHz | $4,995 |
| Epyc 9374F | 32 / 64 | 320W | 256MB | 3.85GHz | 4.30GHz | $4,850 |
| Epyc 75F3 | 32 / 64 | 280W | 256MB | 2.95GHz | 4.00GHz | $4,860 |

Like-for-like performance comparisons between pairs aren't strictly valid, as Genoa enjoys platform and per-core throughput advantages. Nevertheless, Epyc 9654 is in a class of its own, and AMD charges above $10,000 for an Epyc for the first time. Large-scale customers don't pay list price, of course, yet it's a useful guide in assessing where average selling price is going.

64-core models are more interesting. Epyc 9554 is a better server chip than Epyc 7763 because it operates at higher frequencies and has all of Genoa's additional benefits, so the $1,197 price hike feels justified. Moving down, best-in-class 48-core models gain frequency at the expense of heightened power. Meanwhile, the premier Genoa 32-core model, Epyc 9374F, is priced attractively, $10 below the Milan-based Epyc 75F3.
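The pairings in the table reduce to list-price deltas and price per core with a few lines of code. This uses only the launch MSRPs and core counts from the comparison table and, as the caveat that follows makes clear, says nothing about performance per dollar or what large customers actually pay.

```python
# (cores, launch MSRP in USD) from the comparison table
SKUS = {
    "Epyc 9654": (96, 11_805),
    "Epyc 7773X": (64, 8_800),
    "Epyc 9554": (64, 9_087),
    "Epyc 7763": (64, 7_890),
    "Epyc 9474F": (48, 6_780),
    "Epyc 7643": (48, 4_995),
    "Epyc 9374F": (32, 4_850),
    "Epyc 75F3": (32, 4_860),
}

# Genoa model first, Milan counterpart second
PAIRS = [("Epyc 9554", "Epyc 7763"), ("Epyc 9474F", "Epyc 7643"), ("Epyc 9374F", "Epyc 75F3")]


def price_per_core(sku: str) -> float:
    cores, price = SKUS[sku]
    return price / cores


def price_delta(new: str, old: str) -> int:
    return SKUS[new][1] - SKUS[old][1]


for new, old in PAIRS:
    print(f"{new} vs {old}: {price_delta(new, old):+,} USD, "
          f"${price_per_core(new):.0f} vs ${price_per_core(old):.0f} per core")
```

On this crude list-price view, the 32-core pairing is the only one where Genoa costs less per core than its Milan counterpart, and only by a whisker.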

Caveat emptor! Upfront silicon cost, and even the cost of deploying a new platform and memory, are not necessarily the main drivers of total cost of ownership (TCO). Rather, performance and compute density become increasingly important at the rack and datacentre level.

TCO

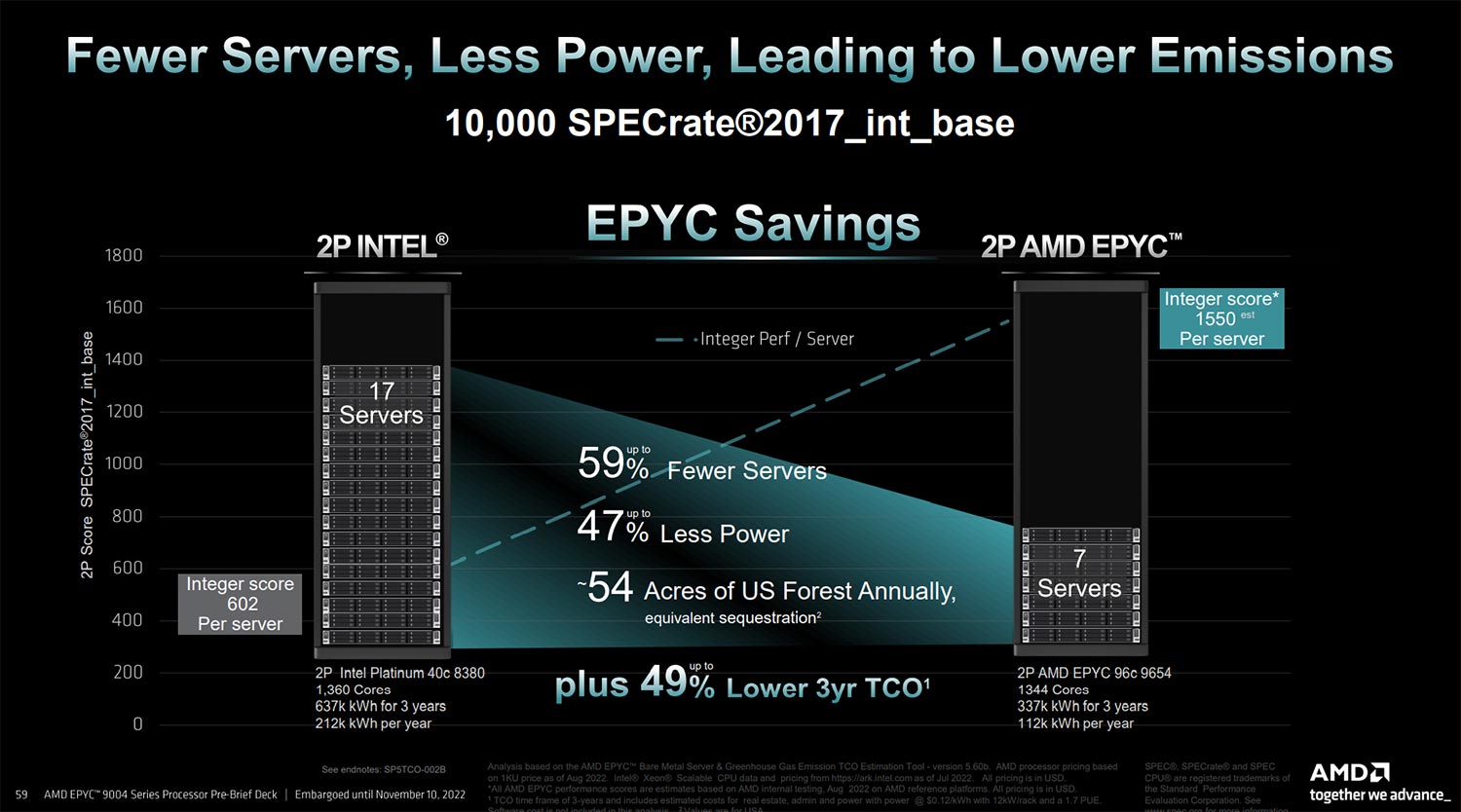

As evidenced by this AMD-supplied graphic, a 2P Epyc 9654 server scores around 1,550 in the industry-standard SPECrate2017_int_base benchmark, which is considered indicative of common integer-heavy workloads. Meanwhile, the highest published 2P results for in-market Epyc 7763 and Intel Xeon Platinum 8380 are 861 and 602, respectively, though it must be noted that an 8-way Xeon server has managed a Genoa-like 1,570.

Taken as a proxy, achieving an aggregate score of 10,000 requires seven Titanite-configured Epyc servers compared to 17 for Intel Xeon Platinum 8380. What's more telling, if you follow the narrative, is an energy saving of 300,000 kWh over a three-year period. Even if electricity is provided to the datacentre at a ludicrously low 15p per kilowatt-hour, that's a £45,000 energy saving in a single rack.
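Both headline numbers can be checked with simple arithmetic. The sketch takes the per-server scores and AMD's 300,000 kWh saving at face value (neither is independently verified here) and assumes scores add linearly across servers, which is how the rack comparison treats them.

```python
import math

TARGET_SCORE = 10_000  # aggregate SPECrate2017_int_base goal

# Per-server 2P scores quoted in the text
SCORES = {"2P Epyc 9654": 1_550, "2P Xeon Platinum 8380": 602}


def servers_needed(per_server_score: int, target: int = TARGET_SCORE) -> int:
    """Whole servers required to reach the target; partial servers round up."""
    return math.ceil(target / per_server_score)


def energy_saving_gbp(kwh_saved: int, pence_per_kwh: int) -> float:
    """Convert an energy saving to pounds; integer pence avoid float drift."""
    return kwh_saved * pence_per_kwh / 100


for name, score in SCORES.items():
    print(f"{name}: {servers_needed(score)} servers")

print(f"£{energy_saving_gbp(300_000, 15):,.0f} saved over three years")
```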

We're well aware Intel is launching 4th Gen Sapphire Rapids Xeons in January 2023, doubtless narrowing the gap, but topping out at 60 cores and 120 threads per chip, they are unlikely to take the density crown for integer-type workloads.

Anything Missing?

It’s abundantly clear Genoa is a fundamentally better server CPU platform than any Epyc that has gone before. Does that make it perfect? The answer depends upon the kind of workload being run… and what the competition is promising in the same space.

We know AMD's 12-channel DDR5 support provides a 2.3x real-world bandwidth increase to the cores, which is especially helpful for the 84- and 96-core SKUs. Intel believes it has the better memory implementation, integrating 64GB of HBM2e memory on 4th Generation Xeon Max CPUs and citing over 1TB/s bandwidth and huge performance gains over in-field Epyc 7773X in HPC and technical computing workloads, all without having to use DDR5 memory at all! We won't know how valid such claims are until Sapphire Rapids is benchmarked, yet it's hard to ignore Intel's bombast.

In a similar vein, Intel is betting big on AI/machine learning by baking Advanced Matrix Extensions (AMX) into silicon. AMX is designed to vastly increase computation by, in effect, using a powerful matrix calculator, and the latest Epycs have no equivalent dedicated hardware. Last but not least, Intel's Data Streaming Accelerator (DSA) is reckoned to be of significant benefit, as it offloads many common data-movement operations from the CPU cores to a dedicated accelerator, freeing them up to carry out the compute jobs they do best.

There's no obvious right or wrong here; both companies see near-term server workloads evolving differently. AMD goes heavy on compute, while Intel turns an eye towards CPU-based machine learning, high-bandwidth memory, and offloading as much work from the cores as possible.

Titanite Server

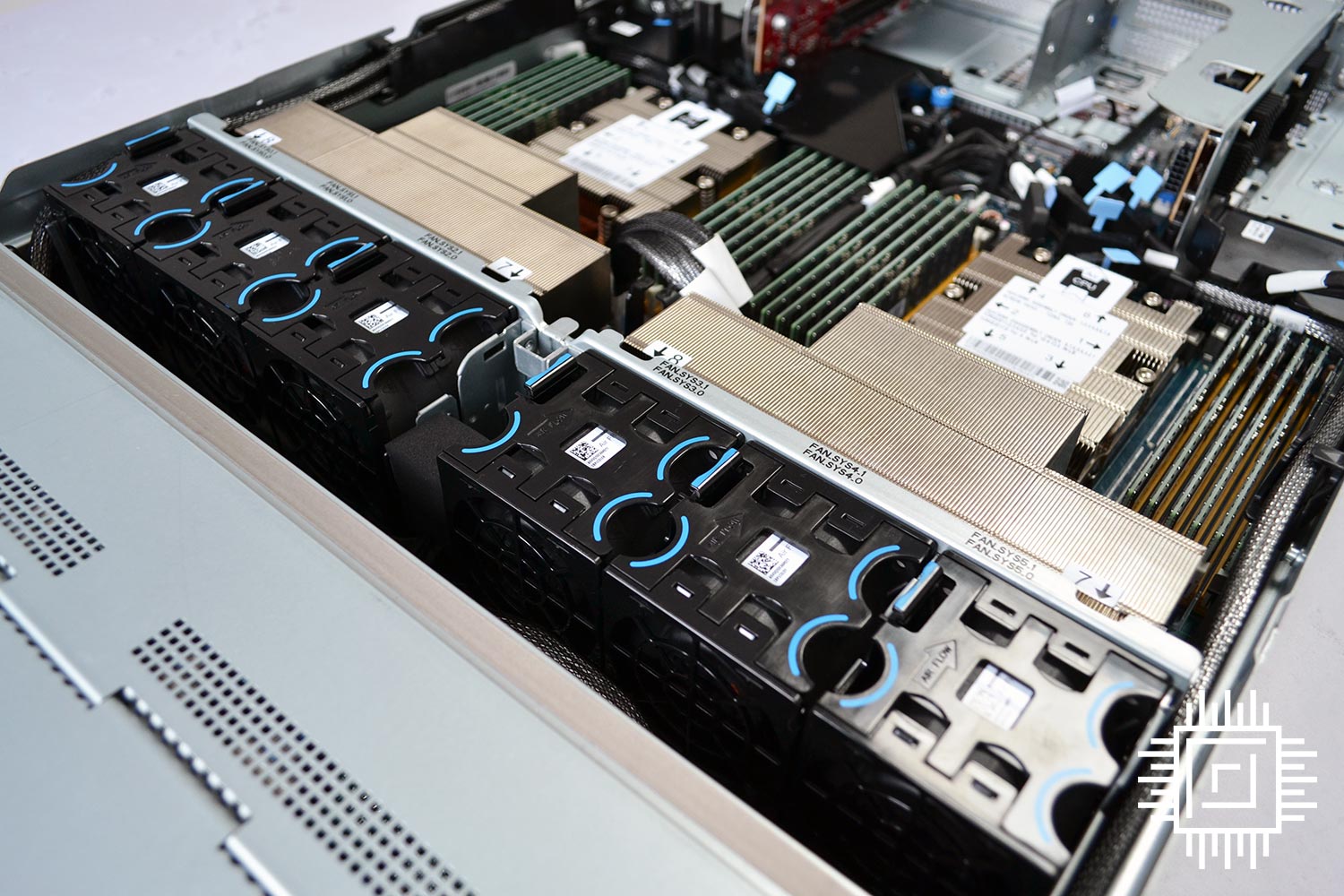

AMD provided Club386 with a fully-stocked Epyc Genoa Titanite SP5 2U rack platform server for technical evaluation. Fitting into an industry-standard 19in form factor, the design is somewhat removed from the Daytona platform we also have in the labs, used for testing 3rd Generation Milan/X chips.

Titanite supports 24 2.5in U.2 drives at the front, augmented by a single PCIe 3.0 x4 and two PCIe 3.0 x1 connections on the PCB itself. Power is sourced from two Murata CRPS form factor PSUs rated at 1,200W and carrying 80 Plus Titanium certification. They are capable of a peak 2,592W, and we found it best to plug both into the wall, if only to tame the high-pitched squealing under load. Interestingly, the PSUs use a smaller form factor than the LiteOn models in Daytona.

The Titanite system is merely for performance evaluation and not long-term running, so various power-saving technologies are not active, which translates to peaking at over 1,000W when benchmarking.

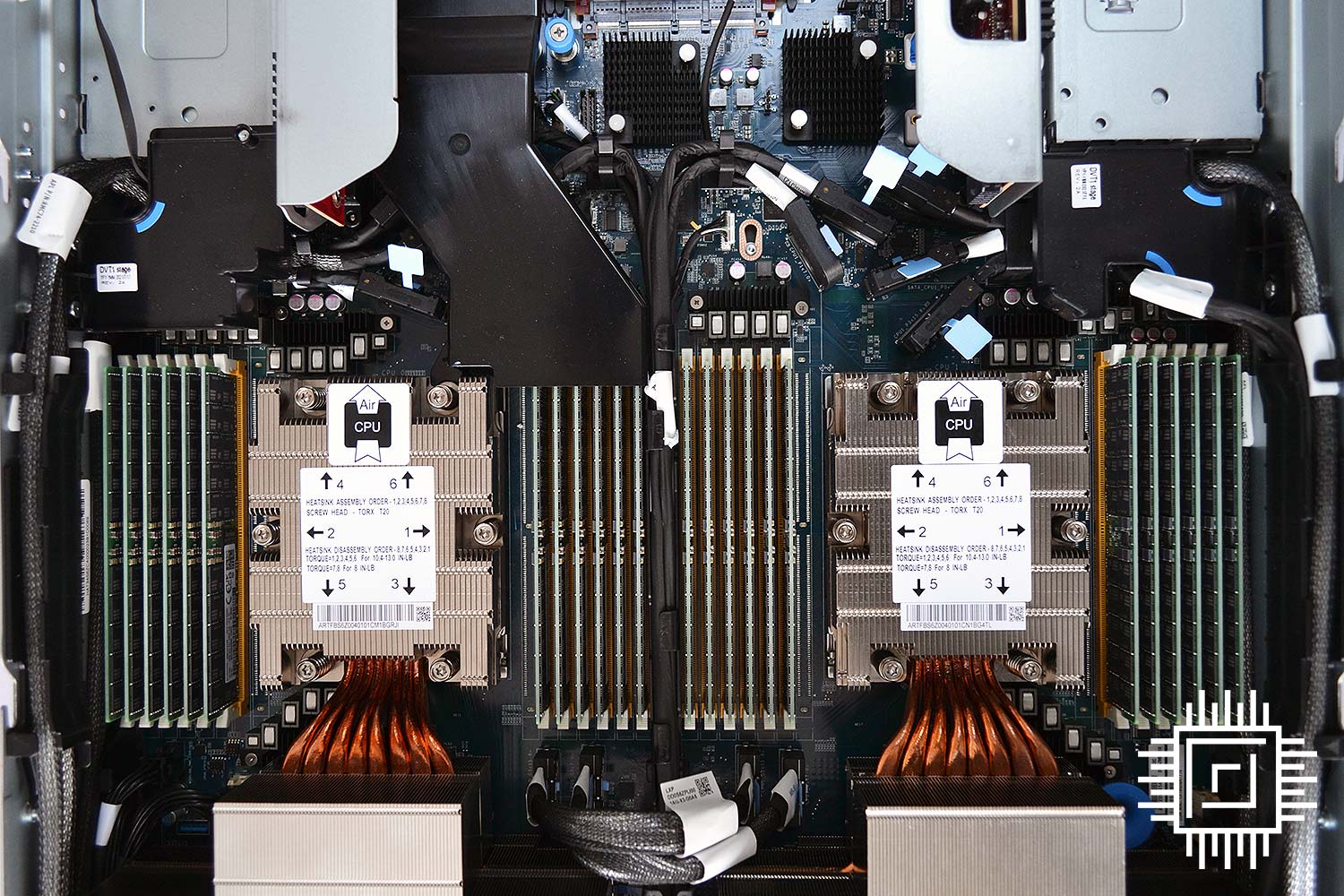

A large part of that consumption comes from the two CPUs hiding under enhanced cooling on Titanite. Air is forced over the processors through eight beefier, heatpipe-connected heatsinks. The larger SP5 socket is evident from the significantly wider coolers, which require no fewer than eight screws to be undone before the CPUs heave into view.

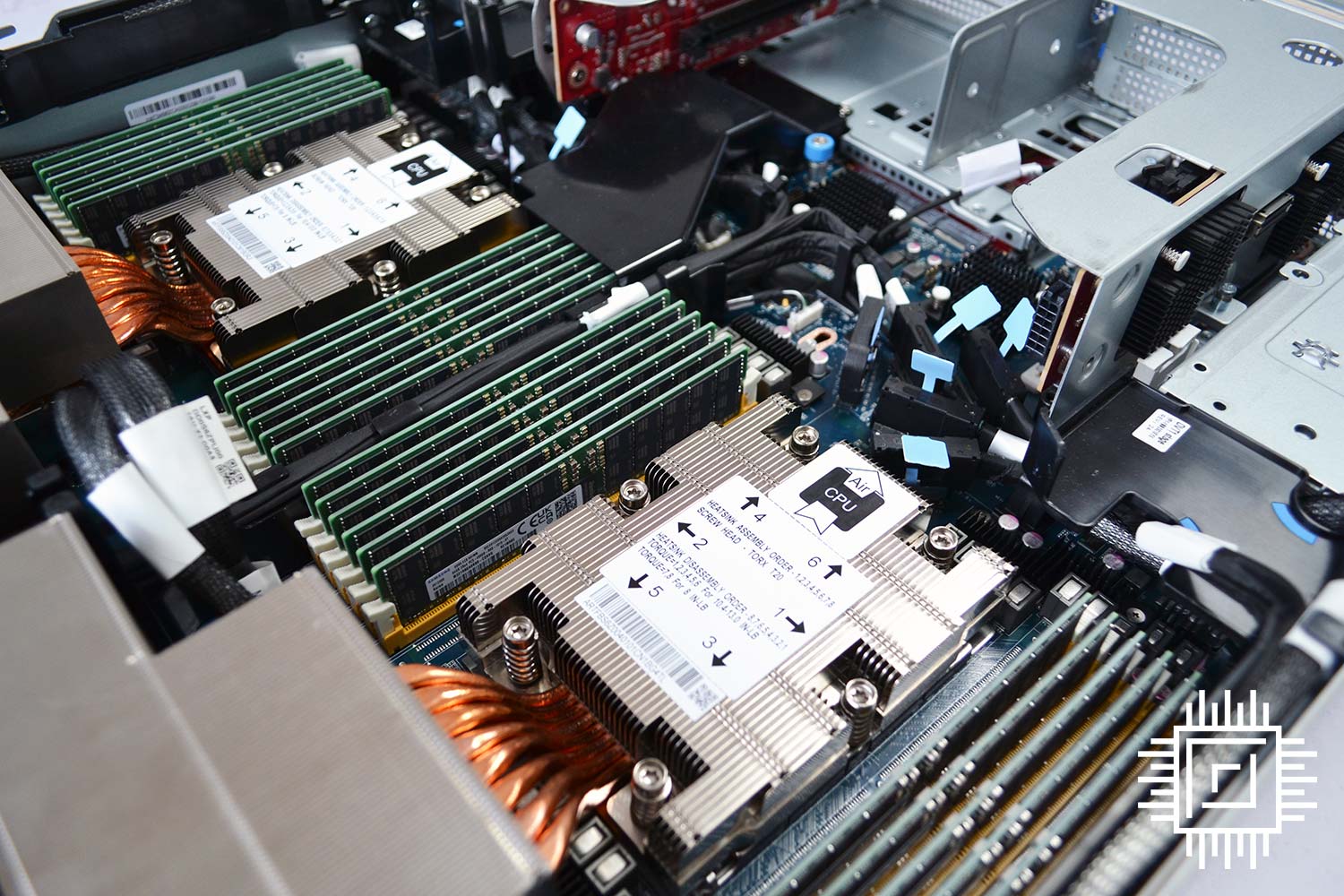

As shipped, AMD equips Titanite with two 96-core, 192-thread Epyc 9654 chips for maximum multi-core performance. Do the maths and you'll quickly realise there are almost 400 Zen 4 threads contained within, operating at around 3GHz when under duress.

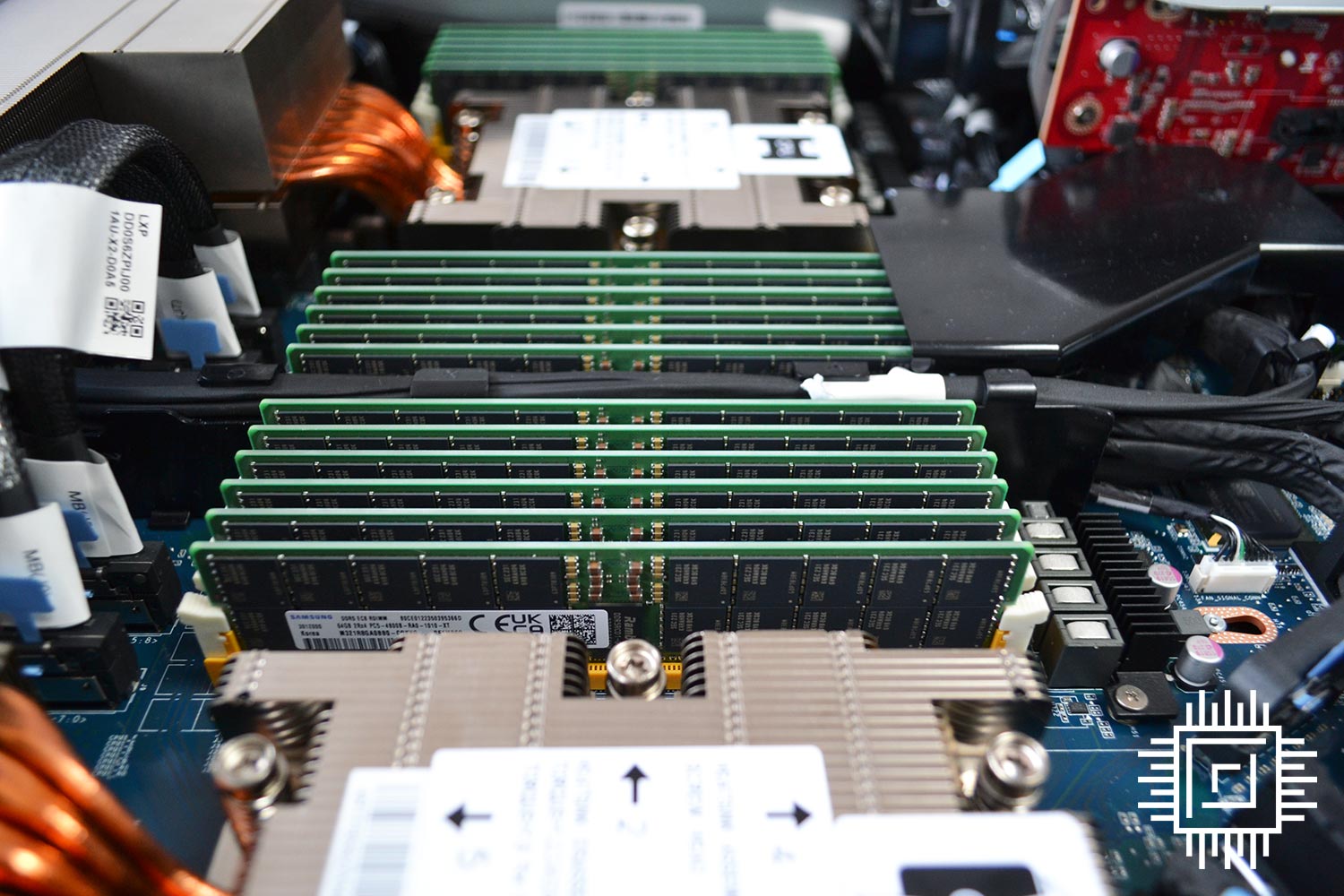

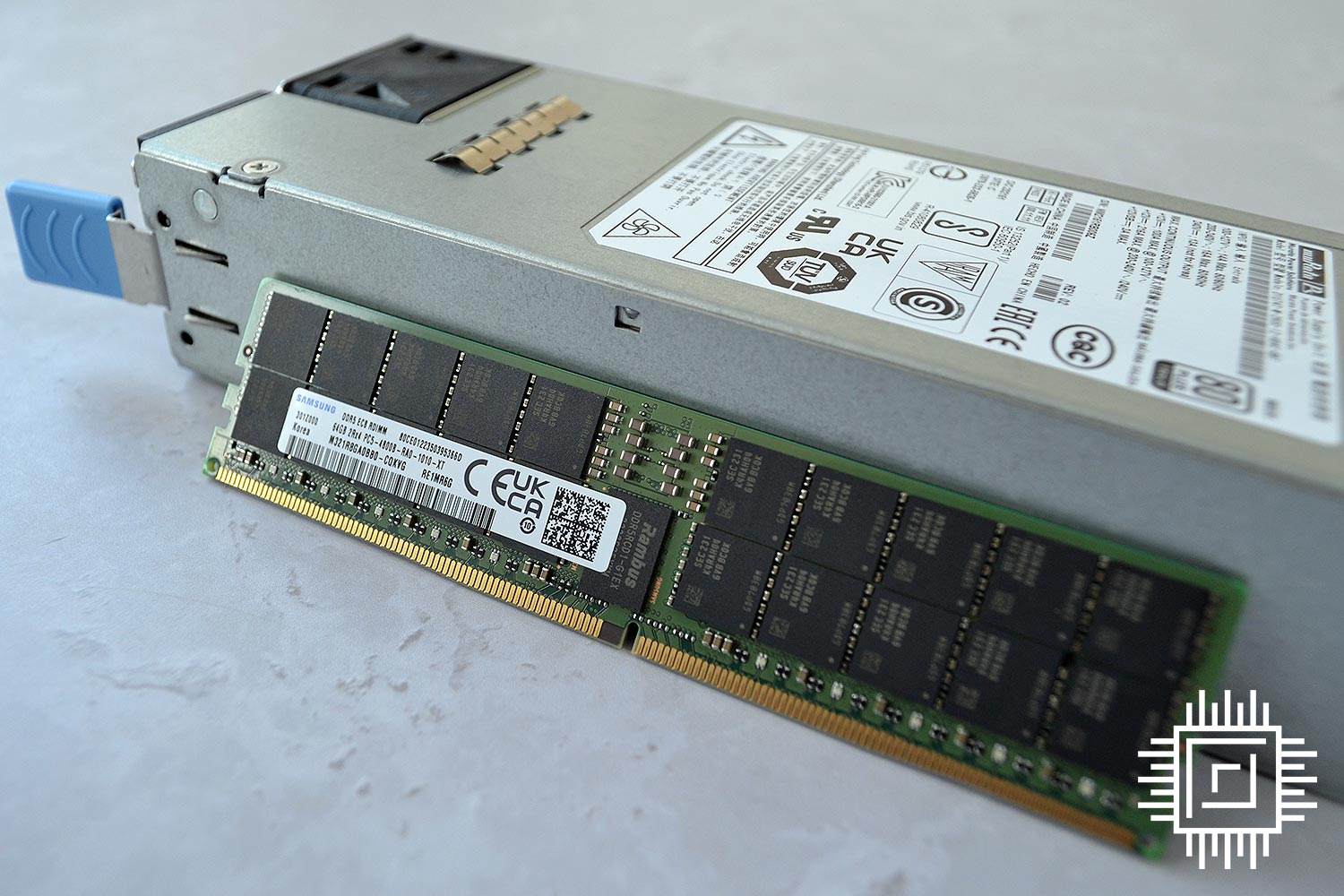

A key improvement this generation is with 12-channel DDR5 memory. There are actually 28 pinouts on Titanite, though 24 are populated on a one-DIMM-per-channel topology across the two processors.

Samsung is the go-to provider for 1.5TB capacity – 24x64GB DIMMs – running at the default 4,800MT/s. That’s a whole heap of bandwidth and footprint for memory-starved applications.

Seen from above, Titanite has some curious cabling orientations. Designed to make access easier, the routing makes sense when viewed in person.

Titanite also features a brand-new AST2600-powered Hawaii H BMC board which offers up back-panel connections such as mini-DisplayPort, Gigabit Ethernet for remote access, M.2 2230, Micro-SD, and the ever-useful debug LEDs.

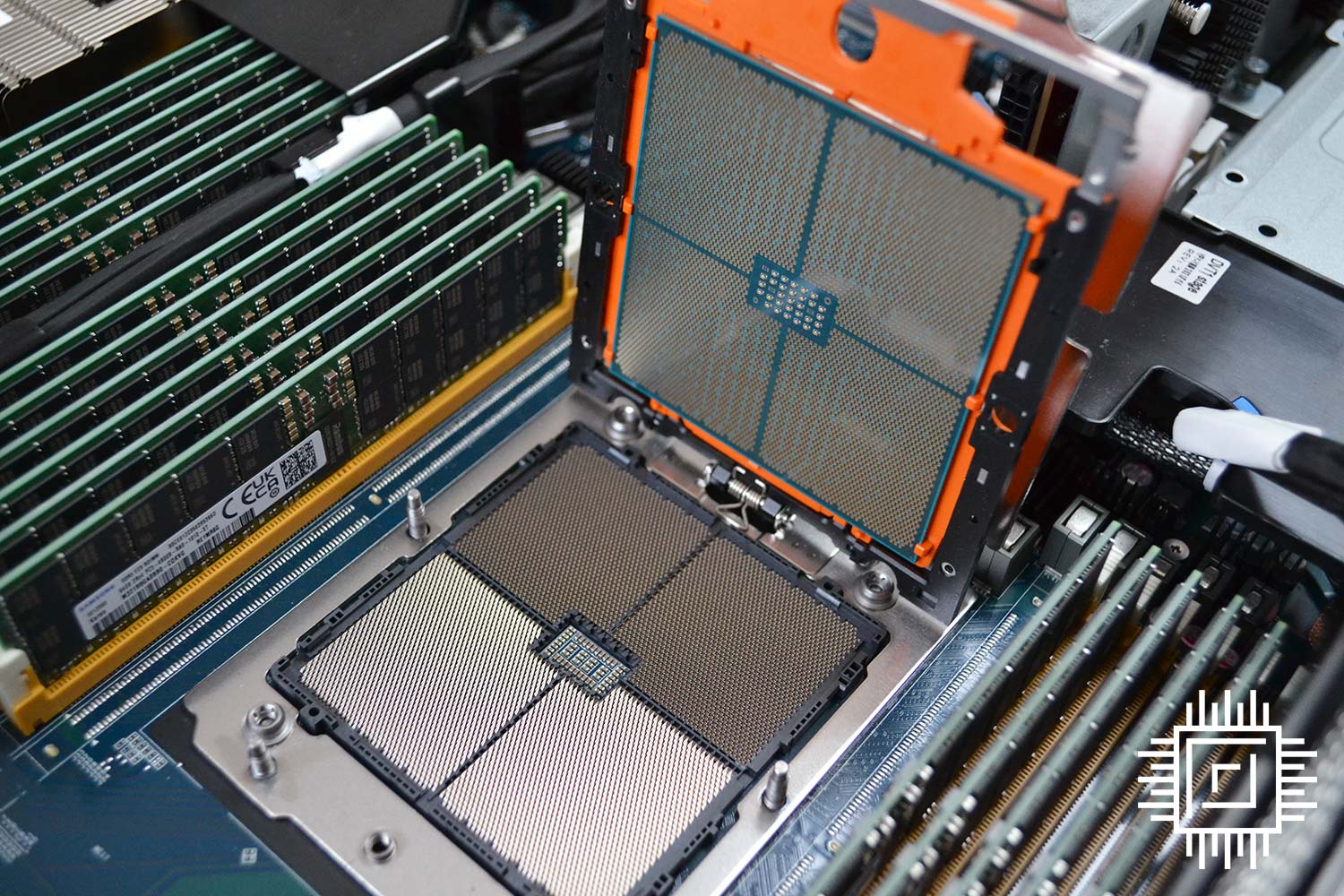

Removing the substantial heatsink is an exercise in patience. When undoing the screws in reverse order, one needs to be careful to ensure the 920g heatsink doesn't tilt too much in any direction.

The LGA6096 processor and socket are biggies, by the way, measuring 75.4mm by 72mm.

Cleaning away copious amounts of thermal interface material, on both heatsink and CPU, reveals this beauty. Behold the Socket SP5 AMD Epyc 9654 processor taking up a chunk of motherboard space. As it is comfortably larger than the last generation, server board partners need to be inventive in how they situate all supporting components.

4th Generation Epyc 9654 (96C192T) makes 3rd Generation Epyc 7763 (64C128T) look feeble in comparison. The chips are actually the same width, though as is patently obvious, Epyc 9654 is significantly taller. Thumbs-up for bringing the orange sled back, too.

It's just as well AMD's made the carrier handle wider; handling a $12,000 processor and coaxing it into the retaining mechanism is not work for shaky hands!

Upgrades aplenty for this generation of server.

Performance

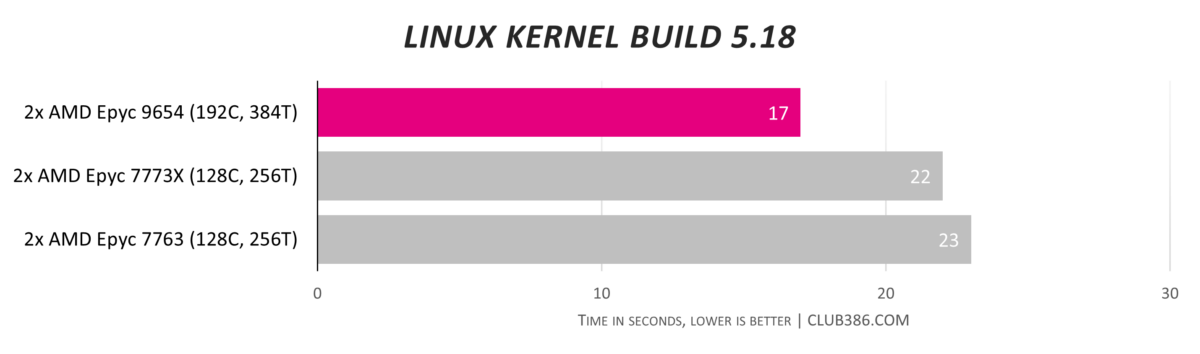

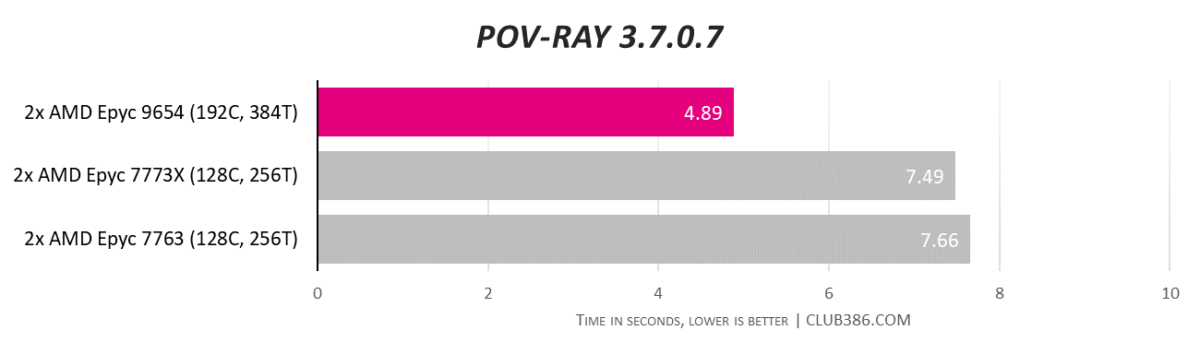

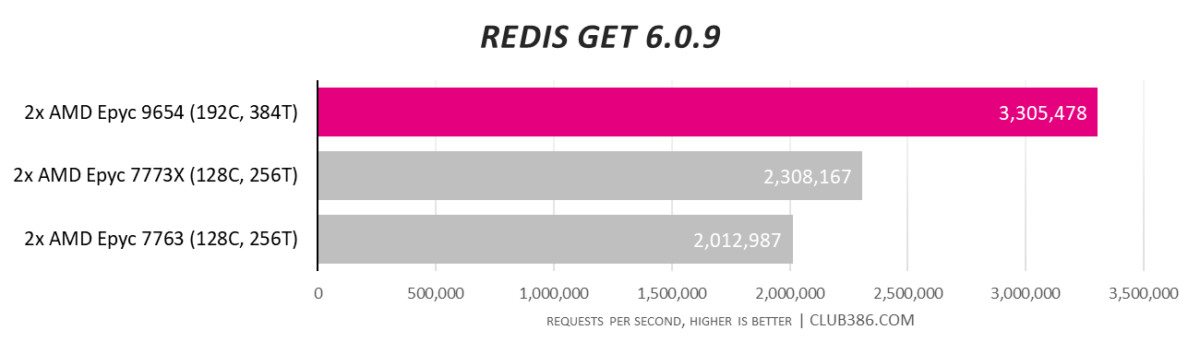

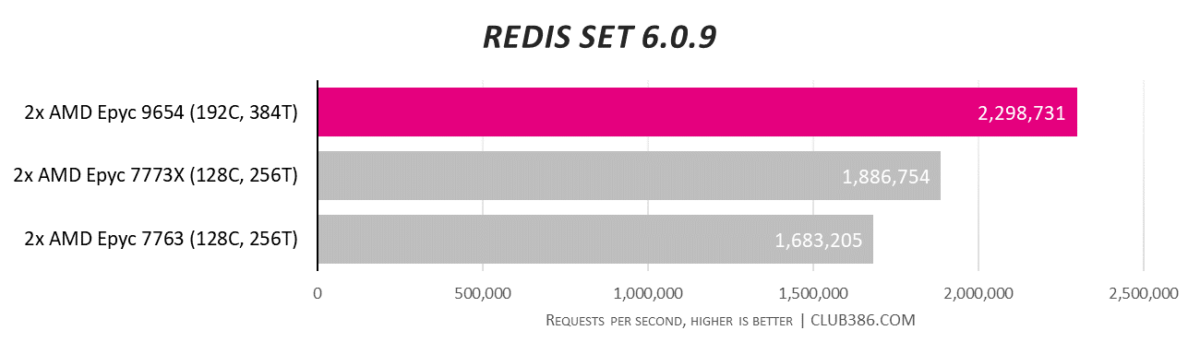

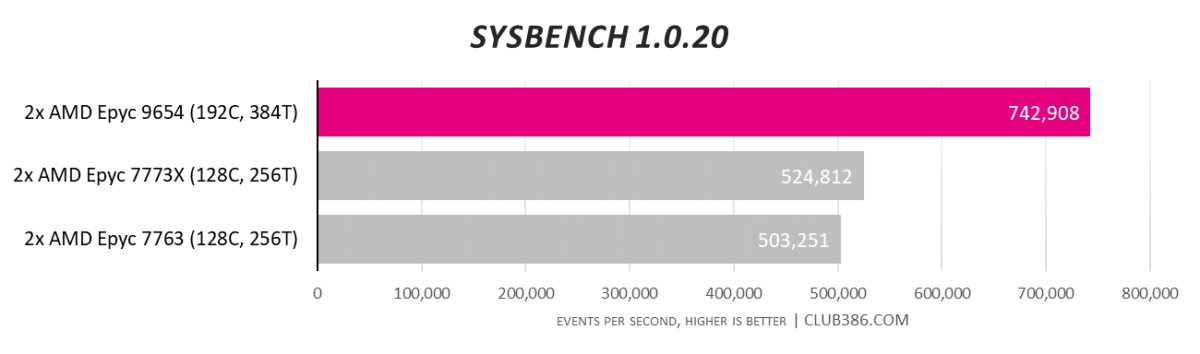

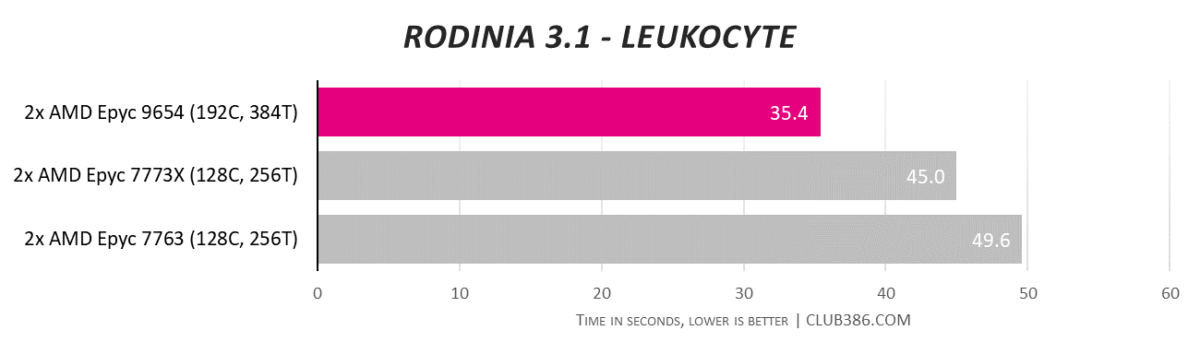

2P Epyc 9654 is compared against the best AMD server processors from the last generation, including cache-king Epyc 7773X and Epyc 7763, both also in a 2P configuration. Testing was done on Ubuntu 22.04 LTS. The Titanite server’s BIOS was RTL1001_L while the Daytona’s was RYM1009B. We plan to add Intel 4th Generation Sapphire Rapids numbers as soon as a server becomes available.

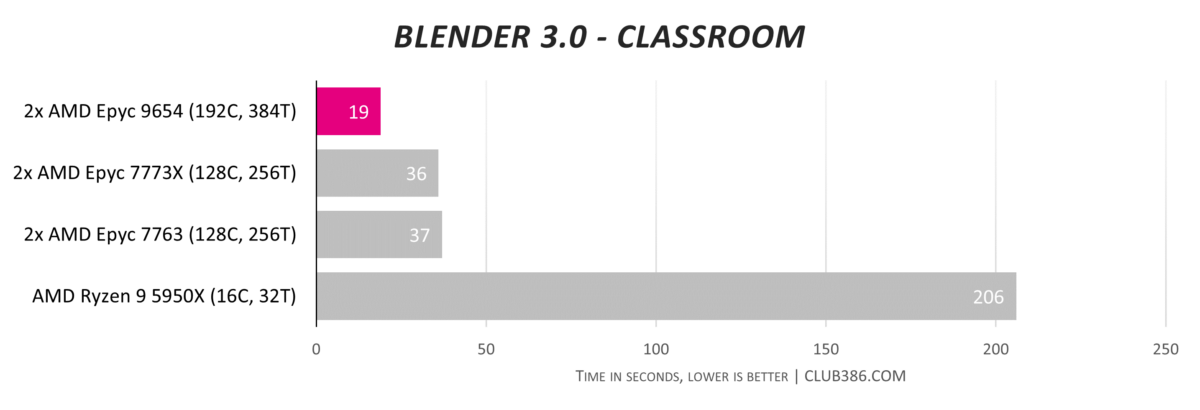

Here is a graph that will make many an enthusiast chuckle. It's worth remembering the prior-generation Epyc 7773X and 7763 have been best-in-class processors since their inception, which is what 64 cores and 128 threads give you. Unsurprisingly, they are massively faster than a desktop Ryzen 9 5950X.

Epyc 9654 may only be 17 to 18 seconds faster than the aforementioned duo… but that makes it twice as fast as anything that's come before. Extrapolate out to projects that take hours rather than seconds and the time-to-completion metric becomes meaningful. Incredible performance.

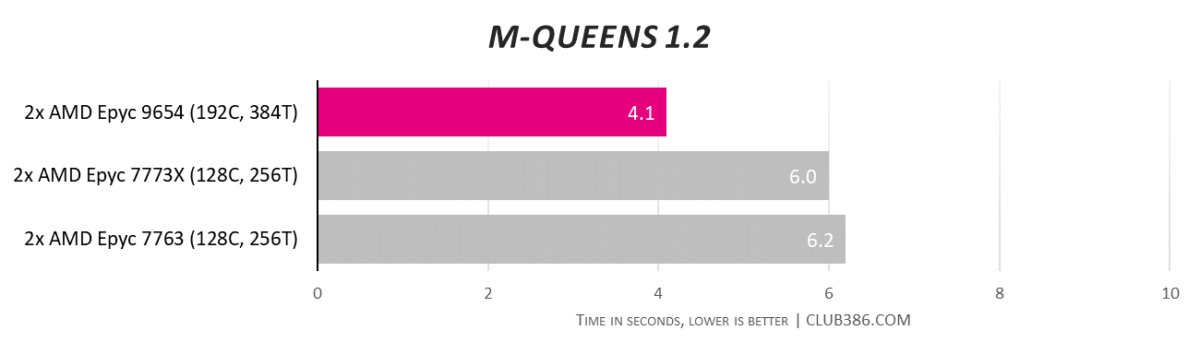

Running multi-core server applications? There’s nothing remotely close to dual Genoa.

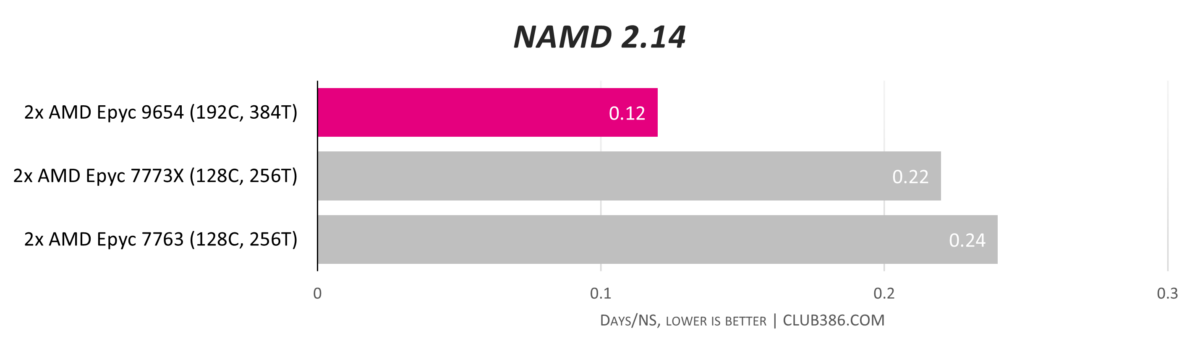

Carrying on a theme of benchmark-busting numbers, 4th Generation Epyc stands alone with respect to 2P performance. It is twice as fast as a dual Epyc 7763 machine.

Genoa is the Cosworth to Milan's bog-standard Sierra. Throwing more cores, caches, frequency, IPC, memory channels, memory speed, and everything else in between at the problem, AMD finds its brute-force approach works well for now.

One can transpose these improvements to other applications. We can imagine a 60-80 per cent increase in pure compute over in-market processors, delivered by higher core counts and IPC.
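One rough way to arrive at a figure in that range is to treat throughput as cores × IPC × frequency. The sketch below uses the 96- versus 64-core counts, AMD's claimed 14 per cent IPC uplift and, optionally, the Epyc 9654 and 7763 base clocks from the table. It assumes perfect multi-core scaling and ignores memory bandwidth, power limits and real all-core clocks, so treat the output as a sanity check rather than a prediction.

```python
def throughput_ratio(cores_new: int, cores_old: int, ipc_gain: float,
                     freq_new_ghz: float = 1.0, freq_old_ghz: float = 1.0) -> float:
    """Relative throughput if performance scales as cores x IPC x frequency."""
    return (cores_new / cores_old) * (1 + ipc_gain) * (freq_new_ghz / freq_old_ghz)


# Epyc 9654 vs Epyc 7763: equal clocks first, then their base clocks
same_clock = throughput_ratio(96, 64, 0.14)
base_clock = throughput_ratio(96, 64, 0.14, 2.40, 2.45)
print(f"Equal clocks: +{same_clock - 1:.0%}, base clocks: +{base_clock - 1:.0%}")
```

Both cases land between roughly 68 and 71 per cent, inside the 60-80 per cent band suggested above.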

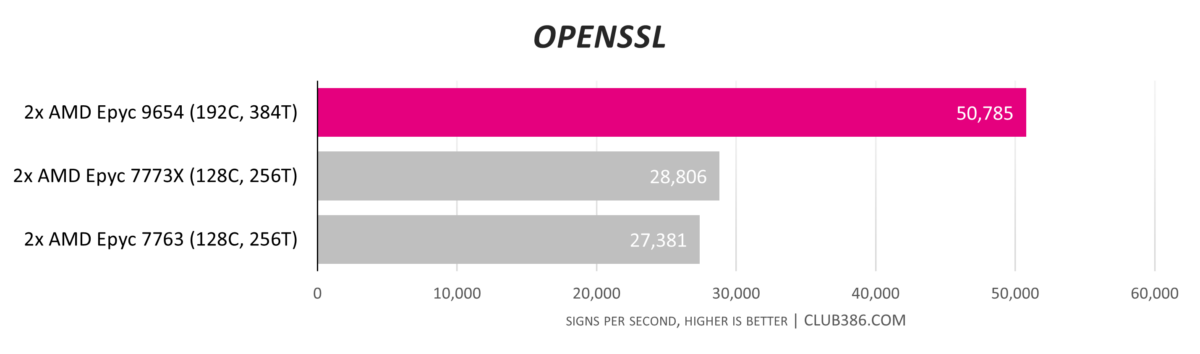

Understanding where Epyc 9654 fits into the 2P server landscape is easy. There is nothing faster for multi-core workloads, period.

Conclusion

Getting ready for datacentre prime time, AMD's 4th Generation Epyc processors take compute performance to a new level. Building on the momentum created by previous generations, Genoa CPUs bring the noise by brandishing a new platform, DDR5 memory, CXL connectivity and, most importantly of all, twin hammers of the latest Zen 4 architecture allied to 50 per cent more cores and threads, per socket, than any Epyc that has come before.

Meting out performance in spades, top-line Epyc 9654 CPUs set new records for common 2P solutions. No other x86 processor combination comes close for workloads taking advantage of integer and floating-point might, and it's a fact readily cheered by Epyc admirers who view the 9004 Series as AMD going truly mainstream in the lucrative server world.

Rival Intel is busy building out 4th Generation Sapphire Rapids Xeons ready to take the fight to AMD, particularly with Max models whose HBM2e memory promises to excel in memory-bound applications, and Arm-based Ampere Altra servers are making inexorable progress for cloud-native workloads. Nevertheless, neither has the sheer compute throughput offered by AMD's latest champions.

Offering multi-dimensional performance for eclectic datacentre workloads, AMD has raised an already high bar with the release of the 4th Generation Epyc 9004 Series. With a better TCO to boot, AMD's case for inclusion in the next wave of server purchases is stronger than ever.

Verdict: Colossal compute performance and a forward-looking platform make Epyc more attractive than ever