A Twitter user has transformed GPT-4 into a handy and accessible tool capable of searching for information and giving recommendations without manual inputs.

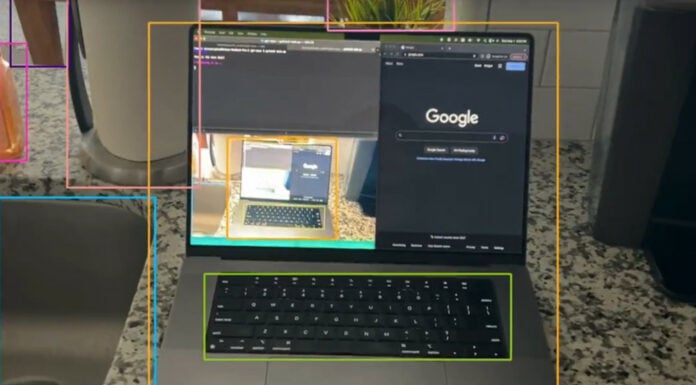

Mckay Wrigley has managed to make his own J.A.R.V.I.S. (from Iron Man) by combining GPT-4, an iPhone used as a camera, Internet access, and a custom food data set. All that is left is to give it a mechanical body and you have your own dedicated butler. The results speak for themselves in the demo video below.

For his demo, Mckay used voice commands to ask the AI about the keto diet. The AI searched the Internet for related information, summarized the key points, and responded with synthesized speech. He then asked whether it could tell which foods in the fridge were keto. Here, the AI used the phone's camera to identify each item, relying on an object detection model trained on his custom data set. When he closed the fridge, the AI understood that all the food had been checked and moved on to searching the web for which items are keto-compatible.
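The demo does not explain how the AI decides that the fridge has been closed. One plausible approach, sketched below purely as an assumption, is to accumulate the labels detected in each camera frame and stop once several consecutive frames contain no detections. The function name `collect_fridge_items`, the `empty_frames_to_stop` threshold, and the sample frames are all hypothetical; in a real setup the per-frame label lists would come from the object detection model.

```python
from typing import Iterable, List, Set


def collect_fridge_items(
    frames: Iterable[List[str]],
    empty_frames_to_stop: int = 3,
) -> Set[str]:
    """Accumulate detected labels until a run of empty frames is seen.

    Assumption: a run of frames with no detections means the fridge
    door has been closed. This heuristic is not from the demo itself.
    """
    seen: Set[str] = set()
    empty_run = 0
    for labels in frames:
        if labels:
            seen.update(labels)
            empty_run = 0
        else:
            empty_run += 1
            # Only stop once something has been seen, so empty frames
            # before the door opens do not end the scan early.
            if seen and empty_run >= empty_frames_to_stop:
                break
    return seen


# Hypothetical per-frame detections standing in for model output.
frames = [
    [],
    ["steak"],
    ["steak", "spinach"],
    [],
    ["butter"],
    [],
    [],
    [],
    ["lemon"],  # arrives after the "door closed" run, so it is ignored
]
items = collect_fridge_items(frames)
print(sorted(items))
```

The resulting set of item names is what would then be handed to the web search and to GPT-4 to check each one against the keto diet.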

After it gave its results, Mckay asked the AI to find possible recipes using the available ingredients. Once more, the AI delivered, finding a recipe called “15-Minute Lemon Garlic Butter Steak with Spinach” that looks very tasty, if you ask me.

Mckay noted that the AI didn’t detect everything that was present in the fridge, but since this is still a proof of concept, that is not a big deal. We agree, as the result is already very impressive.

According to Mckay, to create your own, you will need:

- YOLOv8 for object detection

- A 20-minute YouTube video teaching how to add your own data/images to the vision model

- GPT-4 to handle the “AI”

- OpenAI Whisper for voice input (speech-to-text)

- Google Custom Search Engine for web browsing

- macOS/iOS for streaming the video from the iPhone to the Mac

- The rest is basic Python 101 and coding knowledge.
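To give a rough idea of how these pieces could fit together, here is a minimal Python sketch. It is not Mckay's code: the `Assistant` class, the `build_prompt` helper, and the prompt wording are hypothetical, and every component is a stub so the example runs without any API keys. In a real build, `transcribe` would wrap Whisper, `detect` would wrap a YOLOv8 model, `search` would wrap Google Custom Search, and `complete` would call GPT-4.

```python
from dataclasses import dataclass
from typing import Callable, List


def build_prompt(question: str, items: List[str], snippets: List[str]) -> str:
    """Combine the spoken question, detected items, and web snippets."""
    lines = [
        "Answer the question using the detected items and web snippets.",
        f"Question: {question}",
        "Detected items: " + (", ".join(items) or "none"),
        "Web snippets:",
    ]
    lines += [f"- {snippet}" for snippet in snippets]
    return "\n".join(lines)


@dataclass
class Assistant:
    # Each field is a plain callable so real services can be swapped in.
    transcribe: Callable[[bytes], str]   # speech to text
    detect: Callable[[], List[str]]      # labels seen by the camera
    search: Callable[[str], List[str]]   # web search result snippets
    complete: Callable[[str], str]       # language model reply

    def answer(self, audio: bytes) -> str:
        question = self.transcribe(audio)
        items = self.detect()
        snippets = self.search(question)
        return self.complete(build_prompt(question, items, snippets))


# Stub components (assumptions for illustration only).
assistant = Assistant(
    transcribe=lambda audio: "Which of these foods are keto?",
    detect=lambda: ["steak", "spinach"],
    search=lambda query: ["Keto diets are low in carbohydrates."],
    complete=lambda prompt: "STUB REPLY\n" + prompt,
)

reply = assistant.answer(b"")
print(reply)
```

Because the stub `complete` simply echoes the prompt, printing the reply shows exactly what would be sent to the language model, which makes the wiring easy to check before connecting paid services.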