AMD has divulged details on how it plans to address the client AI opportunity in 2024. Announced at the AI Summit in Santa Clara, the next step in personal AI processing is the mobile Ryzen 8040 Series processors shipping in a multitude of laptops from early next year.

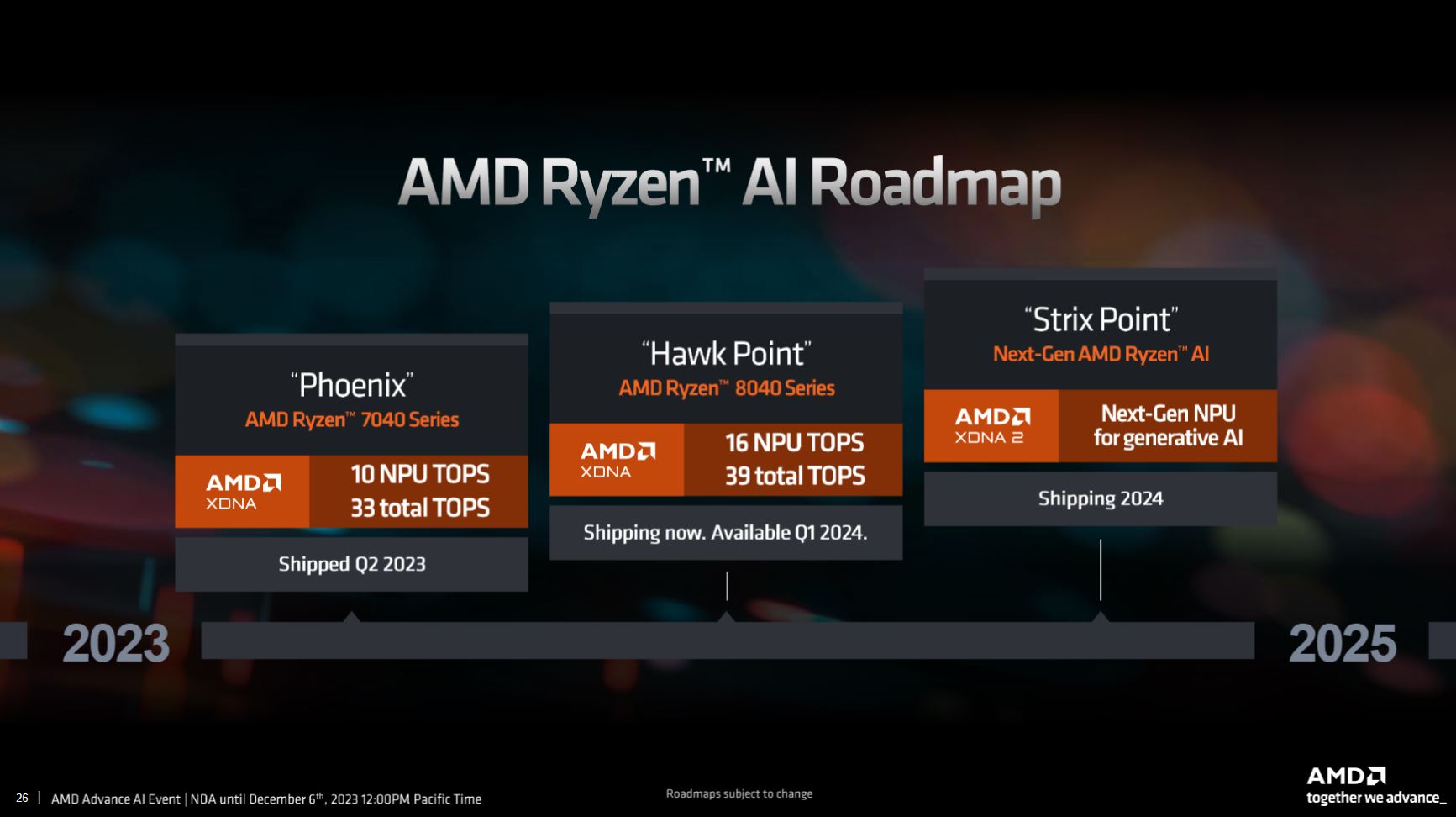

The new chips build on the Ryzen 7040 ‘Phoenix’ Series announced at CES this year and offer more of the same, albeit with a larger focus on the Xilinx-derived NPU primed for client AI workloads.

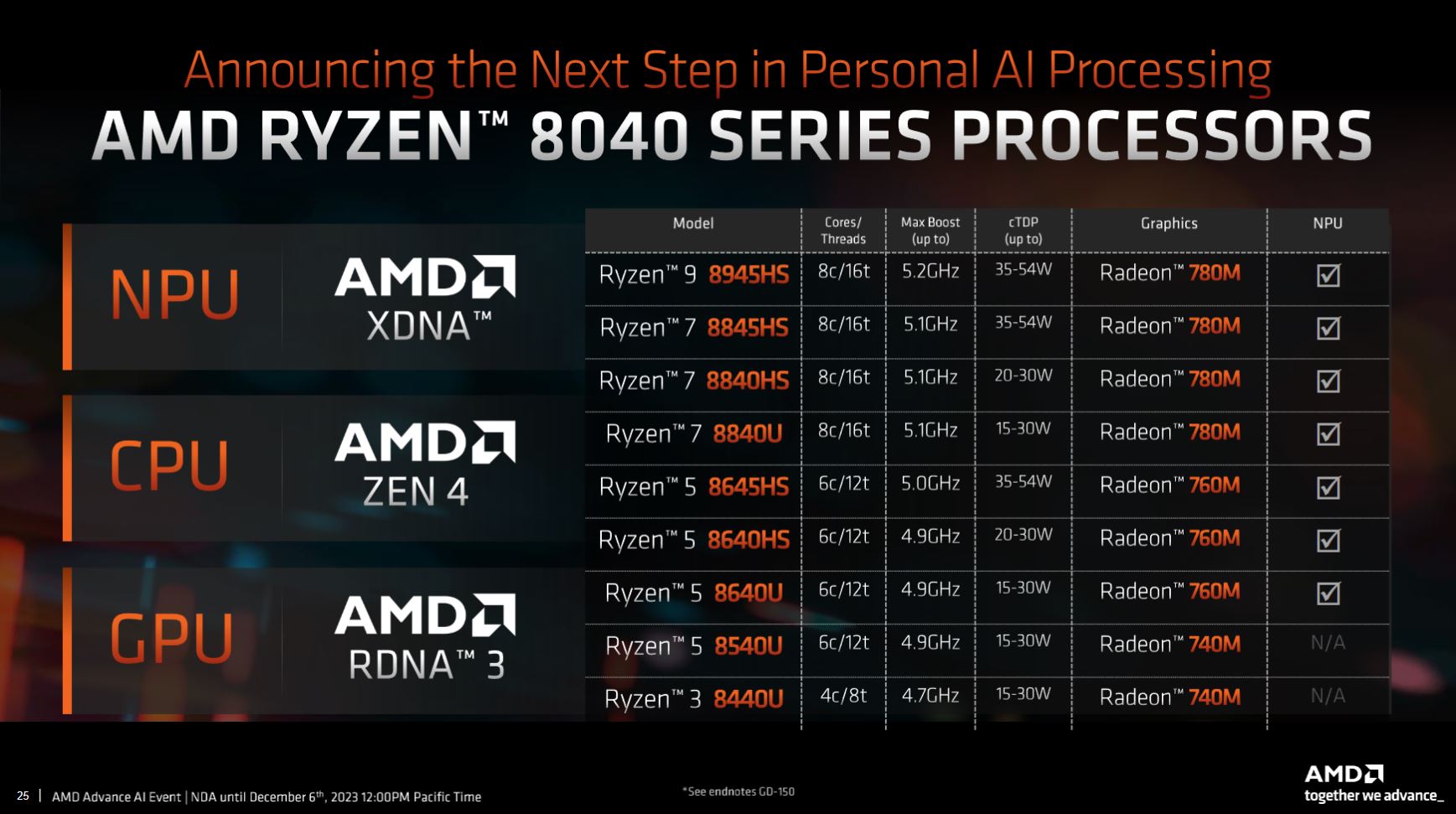

In keeping with the current line-up, AMD splits the range into 15-30W U Series chips designed for ultra-thin laptops and 35-54W HS Series chips for performance models that typically feature a discrete GPU. The processors carry the same Zen 4 CPU and RDNA 3 GPU architectures as today, so there’s no change in base design or footprint, and they will use the existing FP8, FP7 and FP7 R2 sockets. That’s good news for laptop-makers, as switching between the generations is an easy affair.

An AI-optimised stack

“We continue to deliver high performance and power-efficient NPUs with Ryzen AI technology to reimagine the PC,” said Jack Huynh, SVP and GM of AMD computing and graphics business. “The increased AI capabilities of the 8040 series will now handle larger models to enable the next phase of AI user experiences.”

Drilling down, not a lot has changed from a high-level vantage point. The range runs from quad-core CPUs on Ryzen 3 through to octo-core on Ryzen 7 and Ryzen 9, and the integrated graphics are clearly cut from the same cloth as this year’s.

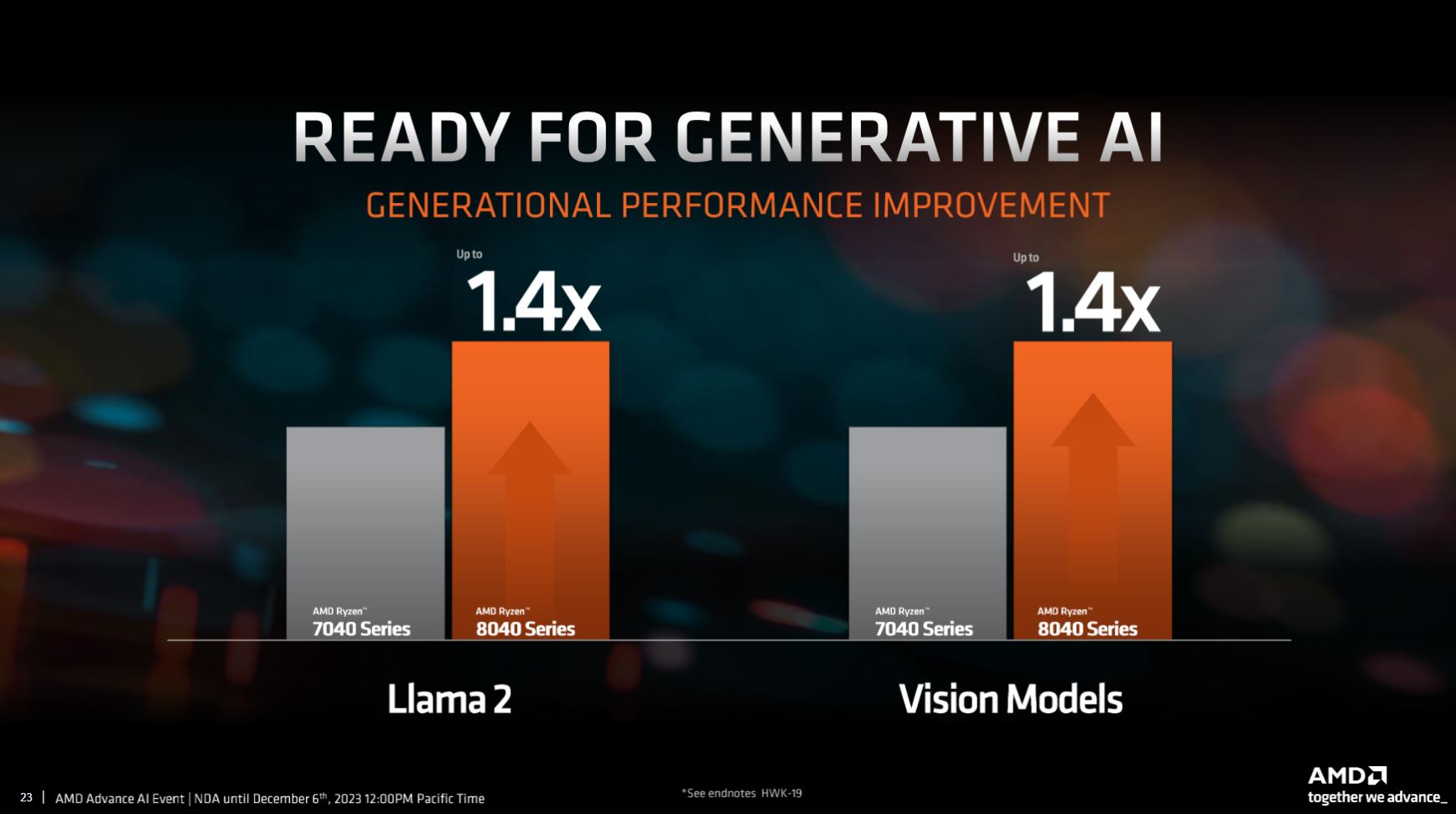

The largest difference between the generations is that Ryzen 8040 ‘Hawk Point’ accommodates a more powerful NPU. Today’s leading AMD processors with a Xilinx-derived NPU achieve around 10 TOPS of INT8 performance. This increases to 16 TOPS on Ryzen 8040, indicating it’s more of a refresh and optimisation of current technology than a ground-up design. Of more importance to users, AMD reckons the new series is up to 40% faster than today’s best 7040 Series on common models such as Meta’s Llama 2.
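As a quick sanity check on those figures, the sketch below works out the relative uplift in peak NPU throughput and sets it beside AMD’s “up to 40%” claim. The names are ours and the TOPS values are the approximate ones quoted above. Peak TOPS and real-world model speed are different measurements, so treat this as a rough comparison only.

```python
# Approximate peak INT8 NPU throughput quoted in the article, in TOPS.
PHOENIX_TOPS = 10      # Ryzen 7040 Series
HAWK_POINT_TOPS = 16   # Ryzen 8040 Series


def percent_uplift(old: float, new: float) -> float:
    """Return the percentage increase going from old to new."""
    return (new - old) / old * 100


peak_uplift = percent_uplift(PHOENIX_TOPS, HAWK_POINT_TOPS)
claimed_model_uplift = 40  # AMD's "up to" figure for models such as Llama 2

print(f"Peak NPU uplift: {peak_uplift:.0f}%")
print(f"AMD's claimed model-level uplift: up to {claimed_model_uplift}%")
```

The peak figure rises by roughly 60%, so the model-level claim sits below the theoretical uplift, which is what you would expect when memory bandwidth and the rest of the system also limit throughput.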

Local inferencing to the fore

The Ryzen 8040 Series’ sweet spot is local inferencing of medium-sized models. For example, the smallest version of Llama 2 has 7bn parameters, so we’d love to know how the token throughput compares with, say, an Apple M1 or a leading mobile Core processor.
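To give a feel for why a 7bn-parameter model counts as medium-sized on a laptop, here is a back-of-the-envelope estimate of the memory needed for the weights alone at different precisions. The function name and the choice of precisions are our own illustration. Activations, the KV cache and runtime overhead are ignored, so real usage will be higher.

```python
def weight_footprint_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate size of model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


LLAMA2_SMALLEST_PARAMS = 7e9  # the 7bn-parameter variant mentioned above

for label, bits in [("FP32", 32), ("FP16", 16), ("INT8", 8)]:
    size = weight_footprint_gb(LLAMA2_SMALLEST_PARAMS, bits)
    print(f"{label}: ~{size:.0f} GB of weights")
```

At one byte per parameter, the INT8 weights come to about 7 GB, which fits within the memory of a typical 16 GB laptop in a way the FP32 version does not.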

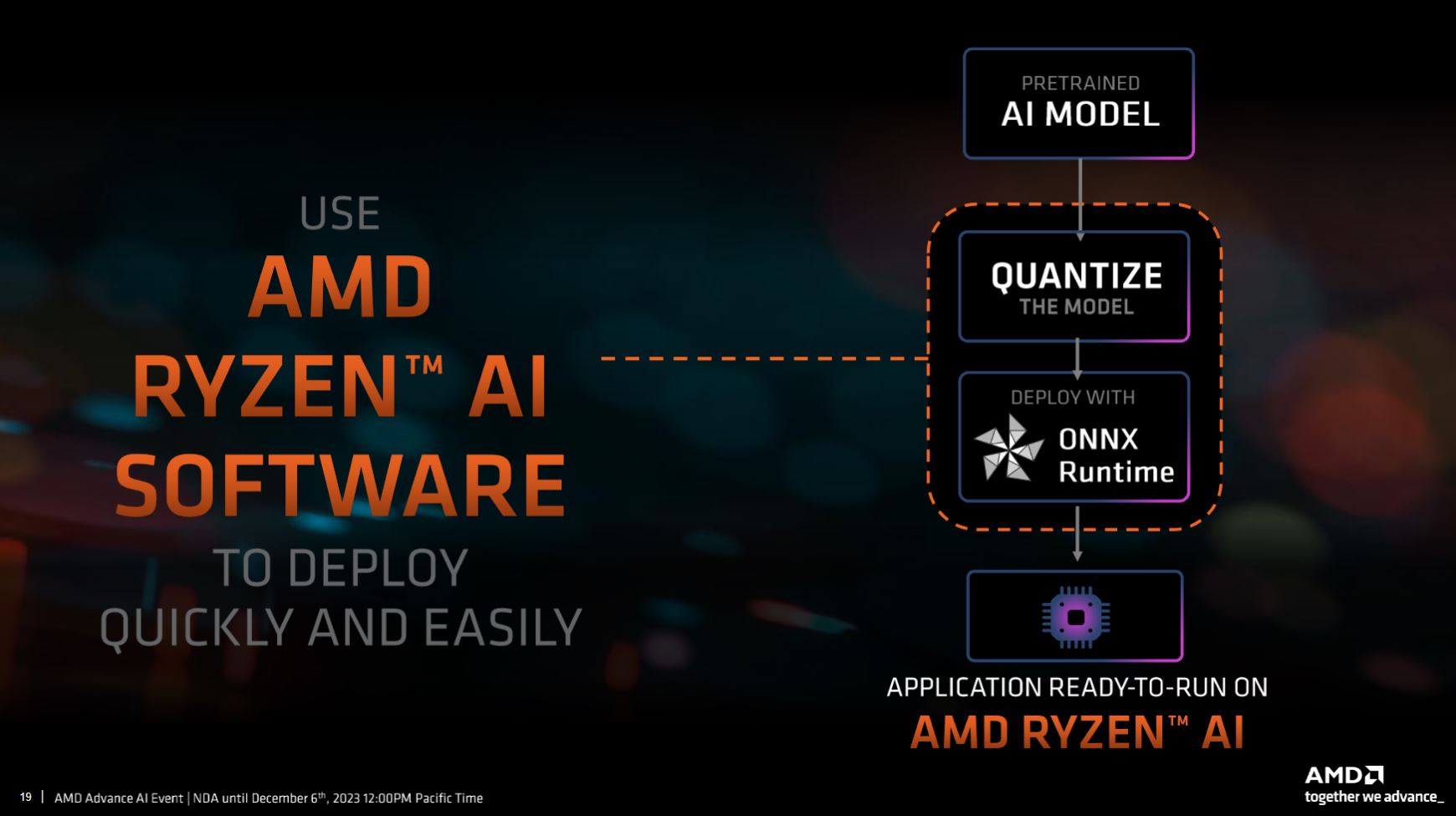

Hardware only gets you so far in the AI world. To that end, and to make it easy to port existing AI models built with widely used open-source frameworks such as PyTorch, TensorFlow and ONNX, AMD has already introduced Ryzen AI Software. It takes a pretrained model and converts it to INT8 in the open-source ONNX format, and AMD believes the few clicks needed to get models running on its hardware provide sufficient incentive for adoption.
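For readers unfamiliar with what converting a model to INT8 involves, the sketch below shows one common generic approach, symmetric per-tensor quantisation, in plain Python. It is not AMD’s Ryzen AI Software API, and we don’t know which scheme AMD’s tooling actually uses. The function names and sample weights are made up for illustration.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantisation: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One scale factor for the whole tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Recover approximate float values from the INT8 representation."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.05, 0.0, 0.64]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("INT8 values:", q)
print(f"scale = {scale:.4f}, worst-case rounding error = {max_error:.4f}")
```

Each weight is stored in one byte instead of four, at the cost of a rounding error no larger than half the scale factor. That trade-off is why INT8 is the precision NPU throughput figures tend to be quoted in.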

Strix Point moves the AI needle

There’s plenty more in the mobile AI tank, too. AMD also teased upcoming processors currently known by the codename ‘Strix Point.’ Based on the next evolution of the XDNA architecture and promising up to 3x the generative AI performance of today’s in-market Ryzen 7040 Series, there’s certainly more to get excited about.

We don’t yet know how Hawk Point or, even less so, Strix Point will compare with Intel’s upcoming multi-tile mobile Meteor Lake processors. That’s the real battleground for staking places in next-gen laptops. Watch this space.