Five years have passed since AMD reinvigorated server and datacentre aspirations through the release of first-generation Epyc CPUs based on the all-new Zen architecture. Since then, Epyc has made significant inroads into the server processor market share held by Intel.

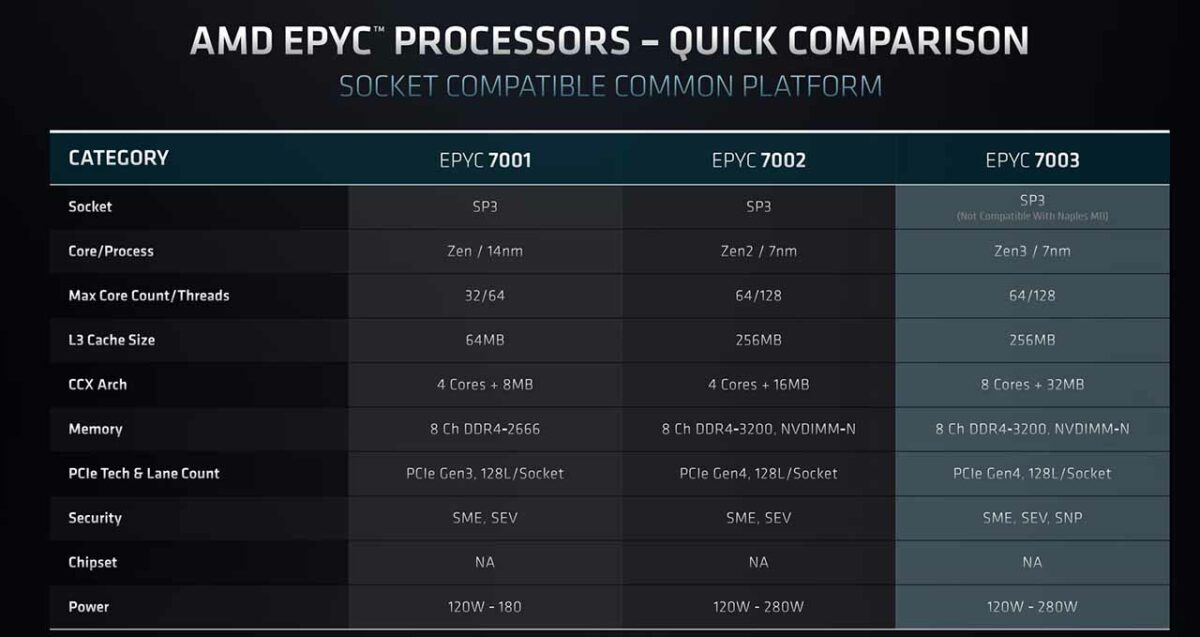

Original Epyc, codenamed Naples, was officially known as the 7001 series and topped out at a then-impressive 32 cores and 64 threads per chip. In 2019, AMD announced the Epyc 7002 series, codenamed Rome, which not only doubled the core-and-thread count on flagship CPUs, but also increased performance further by using a more refined Zen 2 architecture. 2021 saw the introduction of the Epyc 7003 series, codenamed Milan, which kept to the same 64C128T processing at the premium end of the stack but moved the performance needle to the right by using the latest Zen 3 architecture alongside further refinements in the platform.

AMD Epyc 7773X

£8499 / $8800

Pros

- Prodigious speed

- Relative value

- Easy update

- Gobs of L3 cache

Cons

- No upgrade path

In the same vein as desktop chips, AMD has deliberately kept the first three generations on the same socket (SP3) and platform, enabling older, in-service motherboards to be upgraded to third-generation processors via a simple BIOS update. Five-year-plus platform longevity remains a key selling point for Epyc, providing datacentre architects with a proven roadmap for future upgrades.

Epyc 7003 series is to be the last family on the SP3 socket. AMD has made clear plans to release next-generation Epyc 7004 series on a brand-new socket known as SP5, making future chips platform-incompatible with all current models. Changes run more than socket deep, however, as upcoming processors, codenamed Genoa, are set to feature DDR5 memory, more memory channels, more cores, more cache, and more performance. Notice a trend here?

AMD’s roadmap execution is in stark contrast to that of Intel, which has pushed back successive server microarchitectures owing to manufacturing problems. Intel still enjoys over 80 per cent of total x86 server CPU shipments, of course, but its lack of roadmap adherence has been serendipitous for AMD’s fortunes.

Epyc 7003 series in more detail

Building on the commentary above and before exploring performance parameters of Epyc 7003 series, it is necessary to appreciate key changes in finer detail between Epyc generations.

The chart shows there has been less performance uplift between Epyc 7002 and 7003 than when comparing differences between the first two generations. This is to be expected, especially when the next slew of chips upends the continuity witnessed above.

Moving from Zen 2 to Zen 3 affords an extra 20 per cent improvement when evaluated using chips housing the same number of cores and threads. The exact improvement is down to how well Zen 3 runs a particular workload, and corner case examples have shown a 50 per cent uptick in performance. Nevertheless, 20 per cent is a reasonable return across the board. Most of this gain derives from polishing various aspects of the Zen architecture, using deeper buffers, enhanced branch prediction, and a wider dispatch mechanism. Small changes which coalesce into meaningful improvements for sectors where time is money.

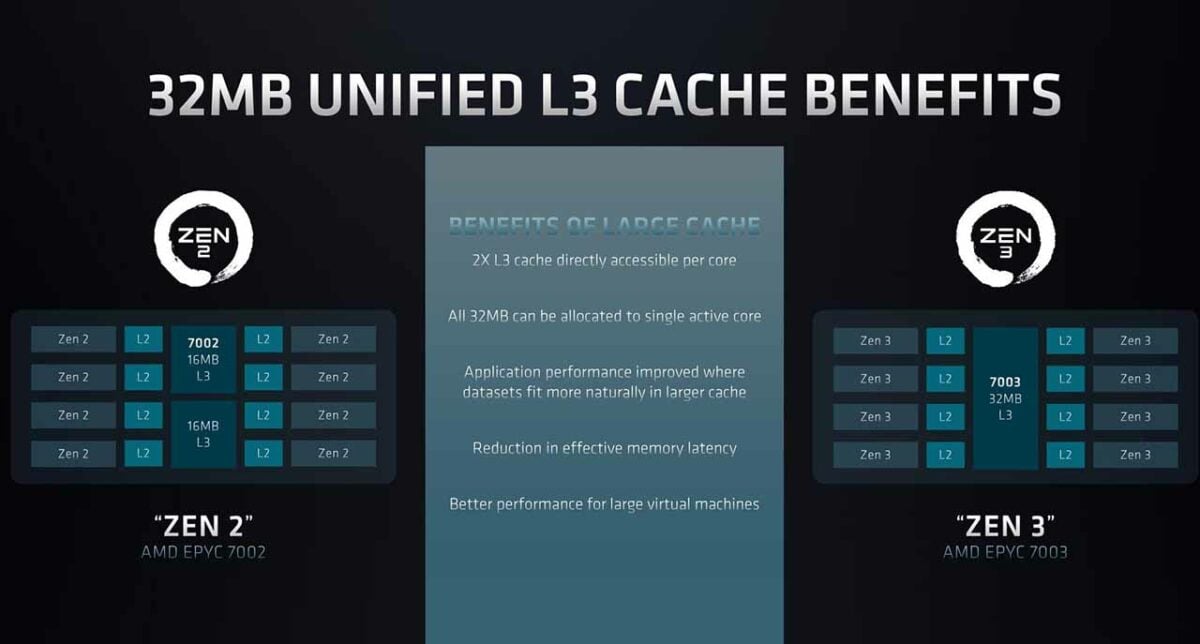

Another small driver of additional oomph rests with the way AMD has rearchitected on-chip cache partitions. Previously, in Zen 2, each group of four cores shared 16MB of L3 cache in its own complex (CCX), and two complexes constituted a single CCD. Zen 3 doesn’t change the number of cores in a CCD – they remain at eight – but does away with the split by folding both complexes into one. The upshot is each of the eight cores has access to the full 32MB of cache, leading to potentially lower latency for cache-heavy applications such as virtualisation. This nuanced change is the direct result of workload simulations.

Though peak performance comes from running eight channels of DDR4 memory, AMD says it has responded to customers’ requests to run six-channel memory on Epyc 7003 series – a feature not present on Epyc 7002. The option is driven by cost savings for multi-rack installations that don’t need absolute bandwidth, and we view it as a sensible move, as is the introduction of secure nested paging for memory.

Models – X-rated Milan hoves into view

| Model | Cores / Threads | TDP | L3 Cache | Base Clock | Boost Clock | Launch MSRP |
|---|---|---|---|---|---|---|
| Epyc 7773X | 64 / 128 | 280W | 768MB | 2.20GHz | 3.50GHz | $8,800 |
| Epyc 7763 | 64 / 128 | 280W | 256MB | 2.45GHz | 3.40GHz | $7,890 |
| Epyc 7713 | 64 / 128 | 225W | 256MB | 2.00GHz | 3.67GHz | $7,060 |
| Epyc 7713P | 64 / 128 | 225W | 256MB | 2.00GHz | 3.67GHz | $5,010 |
| Epyc 7663 | 56 / 112 | 240W | 256MB | 2.00GHz | 3.50GHz | $6,366 |
| Epyc 7643 | 48 / 96 | 225W | 256MB | 2.30GHz | 3.60GHz | $4,995 |
| Epyc 7573X | 32 / 64 | 280W | 768MB | 2.80GHz | 3.60GHz | $5,590 |
| Epyc 75F3 | 32 / 64 | 280W | 256MB | 2.95GHz | 4.00GHz | $4,860 |
| Epyc 7543 | 32 / 64 | 225W | 256MB | 2.80GHz | 3.70GHz | $3,761 |
| Epyc 7543P | 32 / 64 | 225W | 256MB | 2.80GHz | 3.70GHz | $2,730 |
| Epyc 7513 | 32 / 64 | 200W | 128MB | 2.60GHz | 3.65GHz | $2,840 |
| Epyc 7453 | 28 / 56 | 225W | 64MB | 2.75GHz | 3.45GHz | $1,570 |
| Epyc 7473X | 24 / 48 | 240W | 768MB | 2.80GHz | 3.70GHz | $3,900 |
| Epyc 74F3 | 24 / 48 | 240W | 256MB | 3.20GHz | 4.20GHz | $2,900 |
| Epyc 7443 | 24 / 48 | 200W | 128MB | 2.85GHz | 4.00GHz | $2,010 |
| Epyc 7443P | 24 / 48 | 200W | 128MB | 2.85GHz | 4.00GHz | $1,337 |
| Epyc 7413 | 24 / 48 | 180W | 128MB | 2.65GHz | 3.60GHz | $1,825 |
| Epyc 7373X | 16 / 32 | 240W | 768MB | 3.05GHz | 3.80GHz | $4,185 |
| Epyc 73F3 | 16 / 32 | 240W | 256MB | 3.50GHz | 4.00GHz | $3,521 |
| Epyc 7343 | 16 / 32 | 190W | 128MB | 3.20GHz | 3.90GHz | $1,565 |
| Epyc 7313 | 16 / 32 | 155W | 128MB | 3.00GHz | 3.70GHz | $1,083 |
| Epyc 7313P | 16 / 32 | 155W | 128MB | 3.00GHz | 3.70GHz | $913 |
| Epyc 72F3 | 8 / 16 | 180W | 256MB | 3.70GHz | 4.10GHz | $2,468 |

The full 23-CPU stack of Epyc 7003 series in all its glory. As AMD is able to build chips in a modular fashion, thanks to the use of CCDs tied together with Infinity Fabric, there is an almost endless combination of cores, TDPs, caches and frequencies. AMD liberally plays on this fact by introducing specific SKUs optimised for particular environments. The starkest example of such modularity is the Epyc 72F3 right at the bottom, which actually uses the full 8-CCD arrangement as found on the top-bin 7773X. Only one core is active in each CCD, whereas it is possible to have all eight running, a la 7773X. Think about it for a second: 72F3 has 32MB of L3 per core, while 7453 (28 cores and 64MB of cache) has a ratio of 2.29MB of L3 per core. Eclectic, huh?
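To put numbers on that cache-per-core spread, here is a minimal Python sketch using core counts and L3 sizes taken from the table above. The dictionary layout and the `l3_per_core` helper are our own illustrative names, not anything AMD provides.

```python
# L3 cache per core for a handful of Epyc 7003 SKUs.
# Figures (cores, L3 in MB) come from the model table; names are illustrative.
SKUS = {
    "Epyc 7773X": (64, 768),
    "Epyc 7763": (64, 256),
    "Epyc 7453": (28, 64),
    "Epyc 72F3": (8, 256),
}


def l3_per_core(cores: int, l3_mb: int) -> float:
    """Return megabytes of L3 cache available per core."""
    return l3_mb / cores


if __name__ == "__main__":
    # Sort from most to least cache per core to show the spread.
    ranked = sorted(SKUS.items(), key=lambda kv: l3_per_core(*kv[1]), reverse=True)
    for name, (cores, l3_mb) in ranked:
        print(f"{name}: {l3_per_core(cores, l3_mb):.2f}MB of L3 per core")
```

Swapping in other rows from the table is a quick way to see which SKUs lean towards cache and which towards core count.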

Four Epyc chips carry exactly the same specifications as a sibling model but are suffixed with a P, which is to say they only work as single processors. All others can be installed in pairs on a motherboard, known as a 2P arrangement, and thus attract a premium for the privilege.

Eagle-eyed readers will notice the four X-suffixed chips in the line-up. These are known by the codename Milan-X and shoehorn in triple the L3 cache of regular Epyc 7003 processors. AMD uses a novel cache-stacking technology known by the marketing term ‘3D V-Cache,’ which we have covered in detail elsewhere.

An expanded L3 footprint works well in various technical computing workloads, which tend to have large in-flight memory requirements, according to AMD’s internal testing. The extra cache improves performance hugely for certain applications that need lots of it, but being even-handed, this approach verges on pointless if the workload is compute bound rather than cache bound. Caveat emptor.

Notice how the suggested pricing of same-core processors varies significantly? For example, the 16C32T Epyc 7313P costs just $913 while the Epyc 7373X costs $4,185 – or well over four times as much. It’s clear AMD charges a large premium for more on-chip cache, because it knows customers who rely on it can afford to pay; the financial outlay is more than compensated by increased performance on their specific workload.
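The premium is easy to check for yourself. Below is a small Python sketch comparing launch prices of the 16-core parts from the table; the `PRICES_USD` dictionary and `price_ratio` helper are hypothetical names used only for illustration.

```python
# Launch MSRPs (USD) for the 16C32T Epyc 7003 parts, as listed in the table.
PRICES_USD = {
    "Epyc 7313P": 913,
    "Epyc 7313": 1083,
    "Epyc 7343": 1565,
    "Epyc 73F3": 3521,
    "Epyc 7373X": 4185,
}


def price_ratio(model: str, baseline: str) -> float:
    """Return how many times more expensive `model` is than `baseline`."""
    return PRICES_USD[model] / PRICES_USD[baseline]


if __name__ == "__main__":
    baseline = "Epyc 7313P"
    for model in PRICES_USD:
        print(f"{model}: {price_ratio(model, baseline):.2f}x the price of {baseline}")
```

Bear in mind the cheapest part is a single-socket P model, so some of the gap reflects 2P capability rather than cache or frequency alone.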

Likewise, the exact combination of cores, power budget and cache is dictated by the target workload. Where AMD reckons it can charge more, it does, especially if rival Intel Xeon is not considered a real competitor in that space. For example, if users need a high-frequency part because their workload is bursty in nature, the F-series is primed for them… and priced accordingly.

AMD’s Epyc 7003 SKU matrix is confusing until each processor is taken in the right context.

Top of the pack: Epyc 7773X

Our review chips are range-topping Epyc 7773X models suffused with 768MB of L3 apiece. That totals a heady 1.5GB across the 2P platform common in the industry, and there’s nothing better until fourth-gen Genoa rolls around later on in the year.

Pictured alongside are Epyc 7763 and Epyc 7742 processors, all of which carry matching 64 cores and 128 threads per chip, and differences in performance are dictated by three factors: internal architecture, frequency, and cache levels.

| Model | Cores / Threads | TDP | L3 Cache | Base Clock | Boost Clock | Generation |
|---|---|---|---|---|---|---|
| Epyc 7773X | 64 / 128 | 280W | 768MB | 2.20GHz | 3.50GHz | 3rd, Milan |
| Epyc 7763 | 64 / 128 | 280W | 256MB | 2.45GHz | 3.40GHz | 3rd, Milan |
| Epyc 7742 | 64 / 128 | 225W | 256MB | 2.25GHz | 3.40GHz | 2nd, Rome |

This comparison is interesting insofar as it touches all three facets. At the bottom, but hardly slow, is the second-generation (Rome) Epyc 7742. In the middle, architecture moves to present-gen Milan with Epyc 7763, whilst the leader of the pack is Epyc 7773X, carrying more cache and a touch more high-end speed.

Understanding the dynamics of each processor set is key in evaluating ensuing performance. The three sets of chips are tested in an AMD ‘Daytona’ reference platform equipped with 512GB of Micron DDR4 RDIMMs running at 3,200MT/s.

Performance

We use a number of benchmarks in the excellent Phoronix Test Suite. Epyc 7773X is compared against also-64C128T processors in the form of Epyc 7763 and Epyc 7742, as illustrated in the table above. Testing was done on Ubuntu 22.04 LTS and the Daytona server’s BIOS updated to RYM1009B.

There’s little reason to expect much higher performance than a pair of Epyc 7763 chips because the baseline architecture is the same. Some benchmarks will benefit from a slightly higher boost speed, especially for light loads, and a few large-footprint applications are sure to love the extra L3 cache.

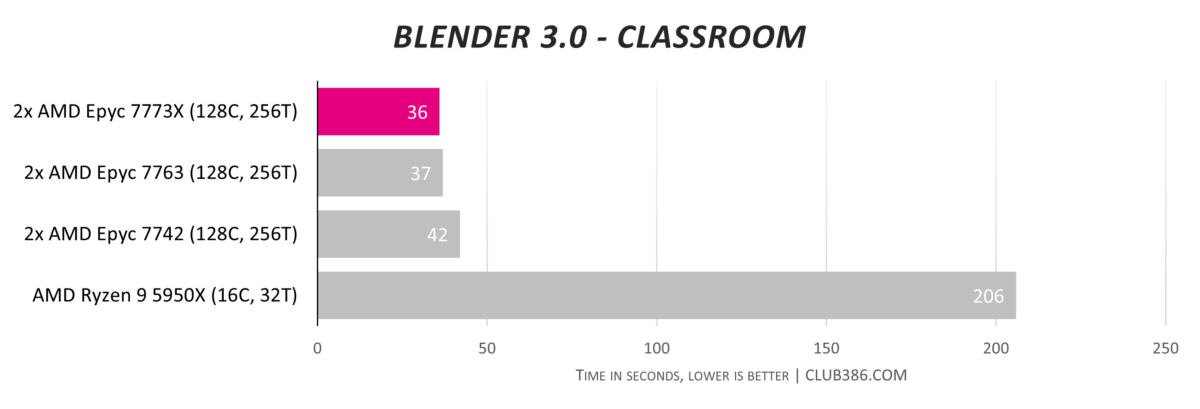

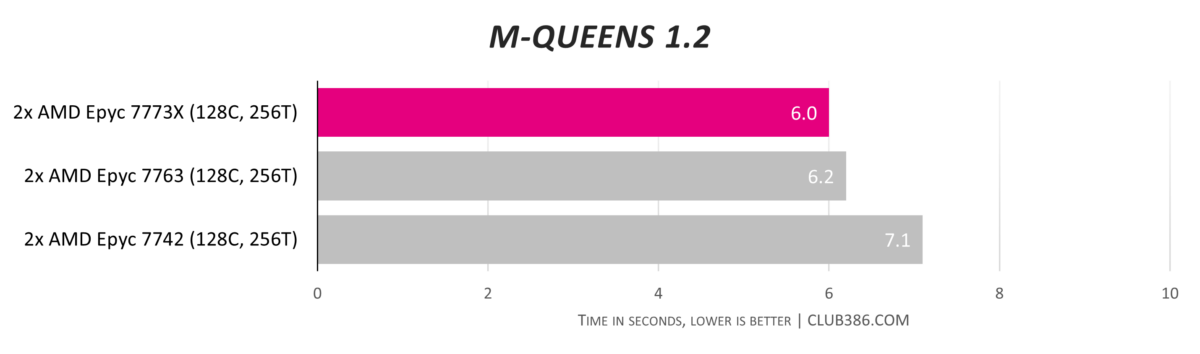

Comparing three different 64C, 128T Epyc chips shows advantages to architecture and frequency. Let’s remember Epyc 7742 is no slouch, providing a full 256 threads in the Daytona server. Nevertheless, it’s comfortably slower than either third-generation pair, with 7773X beating out 7763 by a fraction.

Putting these results into some kind of context for kicks, a desktop Ryzen 9 5950X (16C32T) takes over 200 seconds to complete this test – or six times slower than the Epyc 7773X duo.

And it’s that fraction that’s going to be important. A step change in performance will only be available from more cores and threads.

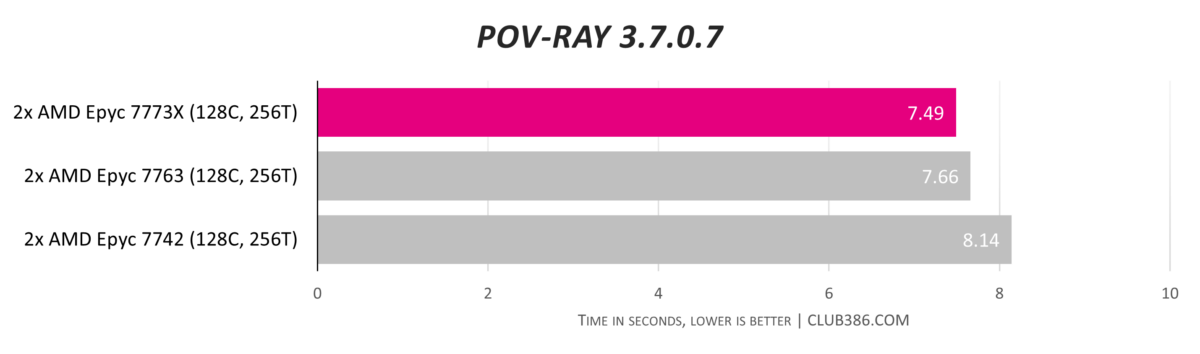

The previous best (7763) gets better with best-in-breed Milan-X (7773X).

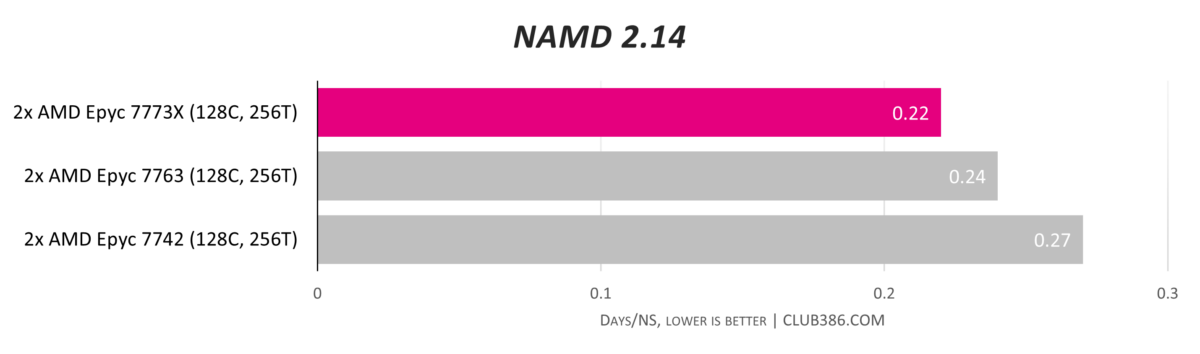

The high-performance simulation of large biomolecular systems – 327,506 atoms to be exact – relies on sheer horsepower first and foremost. Results are expressed in days per nanosecond of simulated time, so lower is better, and whilst 0.22 vs. 0.27 may not sound impressive when viewed in isolation, the percentage difference is significant. A desktop 5950X produces a result of approximately 1.1 days/ns, or about five times slower than the fastest on show.
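Because days/ns is a lower-is-better metric, small-looking gaps hide sizeable percentages. The Python sketch below converts the three figures quoted above (0.22, 0.27 and roughly 1.1 days/ns) into relative terms; the function names are our own, and the mapping of scores to individual chips is left to the chart.

```python
def ns_per_day(days_per_ns: float) -> float:
    """Invert days/ns into simulated nanoseconds per day (higher is better)."""
    return 1.0 / days_per_ns


def speedup(slower_days_per_ns: float, faster_days_per_ns: float) -> float:
    """Return how many times faster the lower score is than the higher one."""
    return slower_days_per_ns / faster_days_per_ns


def time_saved_pct(slower_days_per_ns: float, faster_days_per_ns: float) -> float:
    """Return the percentage reduction in time to simulate one nanosecond."""
    return (slower_days_per_ns - faster_days_per_ns) / slower_days_per_ns * 100


if __name__ == "__main__":
    fastest, next_score, desktop = 0.22, 0.27, 1.1  # days/ns, from the text
    print(f"Fastest result: {ns_per_day(fastest):.2f} ns/day")
    print(f"0.22 vs 0.27: {speedup(next_score, fastest):.2f}x faster, "
          f"{time_saved_pct(next_score, fastest):.1f}% less time")
    print(f"0.22 vs desktop 1.1: {speedup(desktop, fastest):.1f}x faster")
```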

Another multi-thread test, another win for Epyc 7773X.

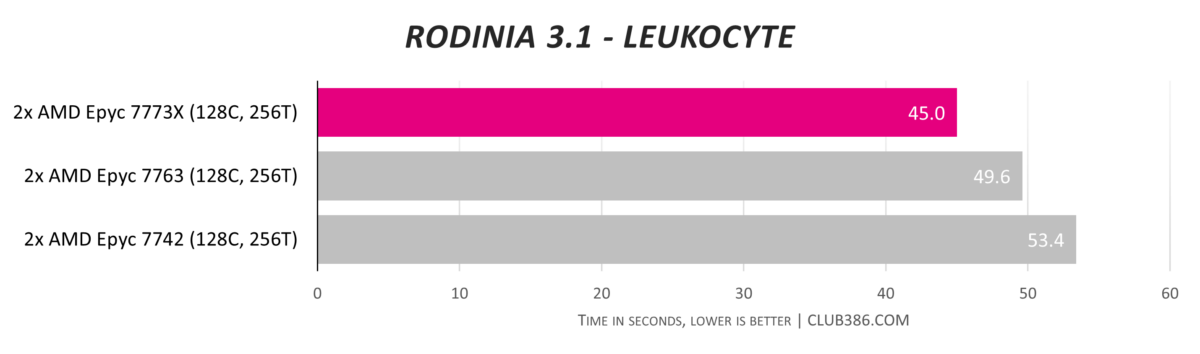

Used in the field of medicine, the leukocyte application detects and tracks rolling leukocytes (white blood cells) in in-vivo video microscopy of blood vessels. The quicker, the better, clearly, and the test favours frequency and greater levels of cache.

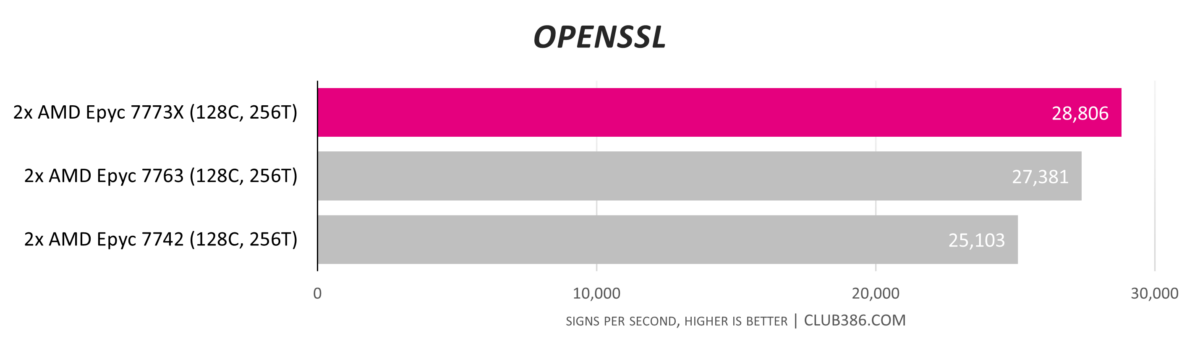

Processing more signs per second for encryption on a 2P server installation is reliant on cores and frequency. Epyc 7773X takes first spot again, as expected, through higher frequencies.

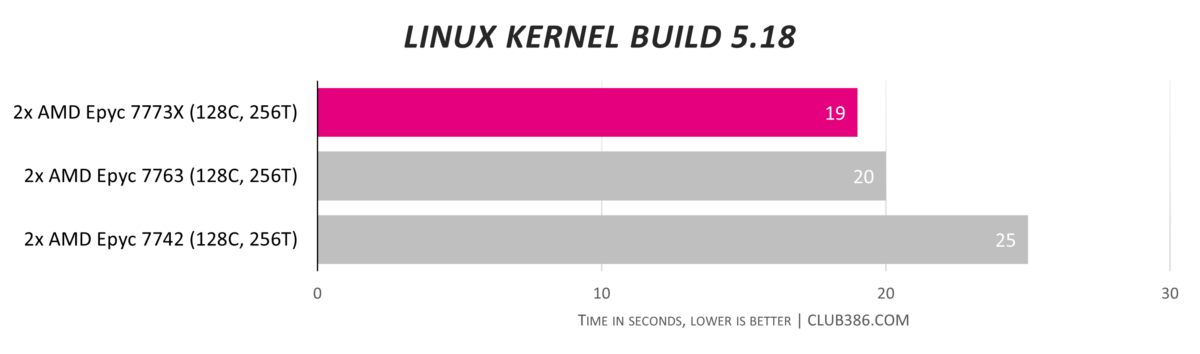

This benchmark is fun to do. What used to take several minutes now takes less than 20 seconds on the standard ‘defconfig’ setting.

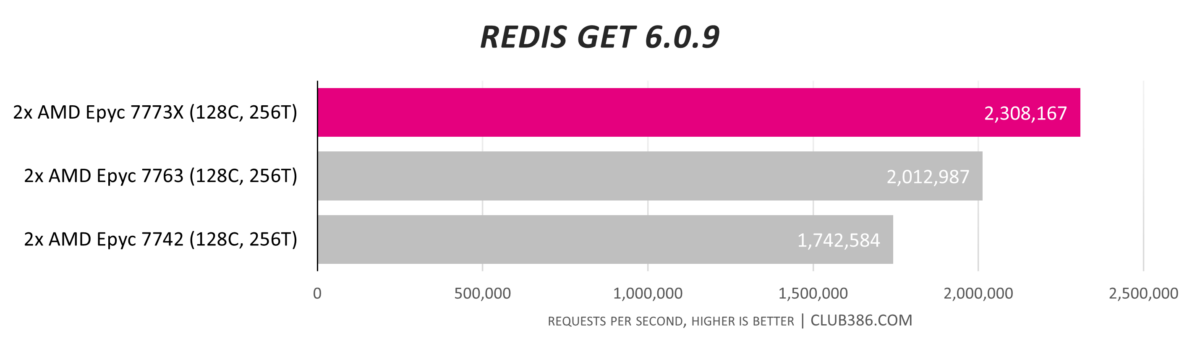

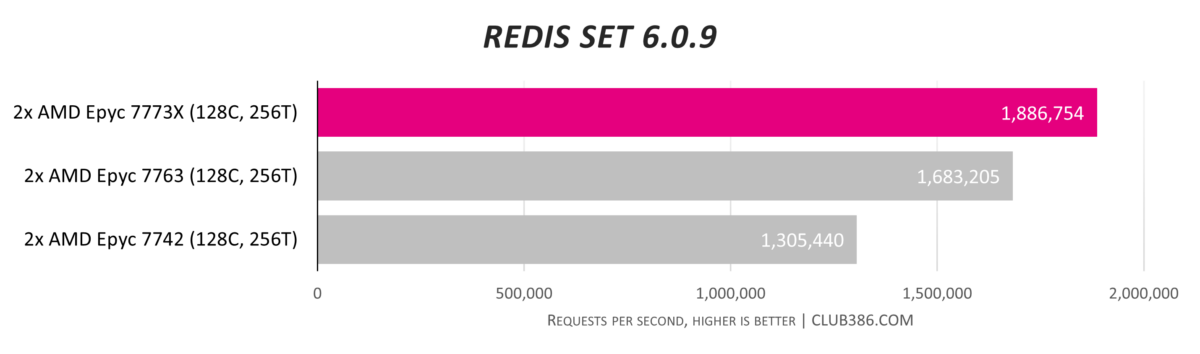

Redis is an in-memory NoSQL database test. Having lots of on-chip cache, you would assume, is beneficial as more of the working set can be loaded at a time. Results bear that out, too, as the 12 per cent increase over 7763 is more than the frequency differences between the two pairs.
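A quick sanity check on that reasoning: the Python sketch below compares the clock-speed gap between Epyc 7773X and Epyc 7763, using the base and boost figures from the specification table, against the 12 per cent Redis uplift quoted above. The `pct_change` helper is an illustrative name of our own.

```python
def pct_change(old: float, new: float) -> float:
    """Return the percentage change going from `old` to `new`."""
    return (new - old) / old * 100


# Clock speeds in GHz from the specification table.
EPYC_7763 = {"base": 2.45, "boost": 3.40}
EPYC_7773X = {"base": 2.20, "boost": 3.50}

# Redis uplift of 7773X over 7763 as quoted in the text.
OBSERVED_UPLIFT_PCT = 12.0

if __name__ == "__main__":
    boost_gap = pct_change(EPYC_7763["boost"], EPYC_7773X["boost"])
    base_gap = pct_change(EPYC_7763["base"], EPYC_7773X["base"])
    print(f"Boost clock difference: {boost_gap:+.1f}%")
    print(f"Base clock difference:  {base_gap:+.1f}%")
    print(f"Observed Redis uplift:  {OBSERVED_UPLIFT_PCT:+.1f}%")
    # Whatever is left after the boost-clock gap cannot be down to frequency alone.
    print(f"Uplift beyond boost gap: {OBSERVED_UPLIFT_PCT - boost_gap:.1f} points")
```

Note the 7773X actually has the lower base clock of the two, so frequency alone falls well short of explaining the result, which is consistent with cache doing the heavy lifting here.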

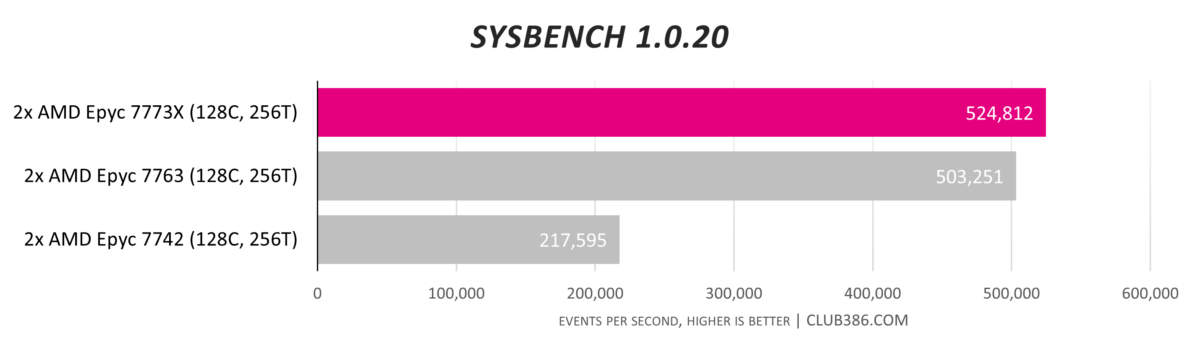

The CPU part of Sysbench must love the move from second-gen Rome to third-gen Milan as the 2.4x performance improvement for 7773X vs. 7742 cannot be explained away by frequency or cache. Perhaps it’s ongoing optimisations, too, but there are applications that really take a shine to a particular microarchitecture.

Conclusion

AMD builds on momentum established since first-gen Epyc’s introduction in 2017. The server-focussed stack comprises 23 processors scaling from eight cores through to 64, and with the introduction of four X-suffixed models carrying triple the amount of L3 cache, AMD has solutions for eclectic workloads. There are chips for high frequency, those that tread the multi-thread ground and house lots of cores, and now, four new models primed for large-footprint applications whose working set fits neatly into the expanded 768MB L3 cache.

Epyc has therefore evolved over time to serve more markets and opportunities, and the culmination of this approach is Epyc 7773X. Outfitted with 64 cores and 128 threads, along with 768MB of L3 cache, it is the most potent server chip to come out of AMD’s design rooms.

7773X extends AMD’s lead in workloads that take full advantage of cores and threads, and with extra levels of L3 cache, some applications benefit greatly. It remains a niche product, but given its dominance in a broad swathe of benchmarks and a $910 premium over the incumbent 7763, it is easy enough to recommend to companies where all-round performance matters above all else.

Verdict: The fastest Epyc to date, 7773X raises the bar for 2P servers.