AMD’s ever-expanding footprint in the datacentre environment was last bolstered through the release of 4th Generation Epyc processors in November last year. At that time, AMD served notice of its intention to build out the CPU server portfolio with a more core-dense variant, codenamed Bergamo, alongside a cache-rich version referred to as Genoa-X. Today, at the company’s Data Center and AI Technology Premiere, that promise is fulfilled.

Already in market with class-leading server CPU performance from the 96-core, 192-thread 4th Generation Epyc Genoa 9654 processor, which Amazon has today adopted for its EC2 M7a instances on a preview basis, AMD understands the need to broaden its portfolio for datacentre segments that require more specialised Epyc variants. Enter Zen 4c-based Bergamo, designed for cloud-native computing, where core density and value arguably matter more than sheer frequency and throughput.

More cores, please

Finer-grained details will be divulged later, but it’s important to emphasise that incumbent Genoa and newly-minted Bergamo share the same socket and carry an identical ISA feature set, meaning a customer can switch seamlessly from one to the other without having to tinker with underlying motherboards or software optimisations.

Known as the Epyc 97x4 series, Bergamo increases the maximum per-socket core count to 128, up from 96 on regular Genoa. As a motherboard can accommodate up to two chips, it’s the first time a two-socket x86 server has offered 256 cores and 512 threads of CPU compute.
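As a quick sanity check on those headline figures, the short Python sketch below multiplies out the per-socket core counts quoted above. It assumes two threads per core (SMT) and a fully populated two-socket motherboard; the constant and function names are illustrative only.

```python
THREADS_PER_CORE = 2  # assumption: SMT enabled, two threads per core
SOCKETS = 2           # up to two CPUs per motherboard


def platform_totals(cores_per_socket: int, sockets: int = SOCKETS) -> tuple[int, int]:
    """Return (total cores, total threads) for a fully populated motherboard."""
    cores = cores_per_socket * sockets
    return cores, cores * THREADS_PER_CORE


for name, cores_per_socket in (("Genoa", 96), ("Bergamo", 128)):
    cores, threads = platform_totals(cores_per_socket)
    print(f"{name}: {cores} cores, {threads} threads per two-socket server")
```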

Rumours suggest AMD has increased compute density without boosting power through numerous silicon-saving optimisations in the architecture, including halving the per-CCX L3 cache and using denser, but same-capacity, L1 and L2 caches. Compared with regular Zen 4, whose core and L2 measure 3.84mm², Zen 4c is 35 per cent smaller, at 2.48mm², on the same 5nm process.
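The quoted 35 per cent figure follows directly from the two area numbers. A minimal Python check, using only the core-plus-L2 areas given above; it ignores L3, interconnect and other uncore area, so it says nothing about whole-chip size, and the names are illustrative.

```python
ZEN4_CORE_L2_MM2 = 3.84   # regular Zen 4: core plus L2 area
ZEN4C_CORE_L2_MM2 = 2.48  # Zen 4c: core plus L2 area, same 5nm process


def area_reduction(baseline_mm2: float, compact_mm2: float) -> float:
    """Fraction of the baseline area saved by the compact design."""
    return 1 - compact_mm2 / baseline_mm2


saving = area_reduction(ZEN4_CORE_L2_MM2, ZEN4C_CORE_L2_MM2)
print(f"Zen 4c core+L2 is {saving:.1%} smaller than Zen 4")
```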

Furthermore, AMD chief executive Lisa Su explained that Bergamo uses 16 cores per CCD, up from eight on Genoa, by doubling up on CCXes. This means the top-bin chip uses eight CCDs instead of Genoa’s maximum of 12. Fewer CCDs, more cores. “Zen 4c is actually optimised for the sweet spot of performance and power,” explained Su.
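The CCD arithmetic can be sketched in a few lines of Python, assuming every core on every CCD is enabled, as on the top-bin parts; the helper name `ccds_needed` is hypothetical.

```python
import math


def ccds_needed(total_cores: int, cores_per_ccd: int) -> int:
    """Minimum number of CCDs needed to reach a given core count."""
    return math.ceil(total_cores / cores_per_ccd)


genoa_ccds = ccds_needed(96, 8)      # one 8-core CCX per Zen 4 CCD
bergamo_ccds = ccds_needed(128, 16)  # two 8-core CCXes per Zen 4c CCD
print(f"Genoa: {genoa_ccds} CCDs, Bergamo: {bergamo_ccds} CCDs")
```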

AMD believes the Epyc 9754 ‘Bergamo’ offers up to 2.6x the performance of rival Intel’s top-of-stack Xeon Platinum 8490H processor in cloud-native applications.

Bergamo-based servers are now shipping to hyperscale customers, says AMD. Meta is preparing to deploy Bergamo as its next-generation, high-volume general compute platform. “We’re seeing significant performance improvements with Bergamo over Milan in the order of 2.5x,” said Meta’s vice president of infrastructure, Alexis Black Bjorlin.

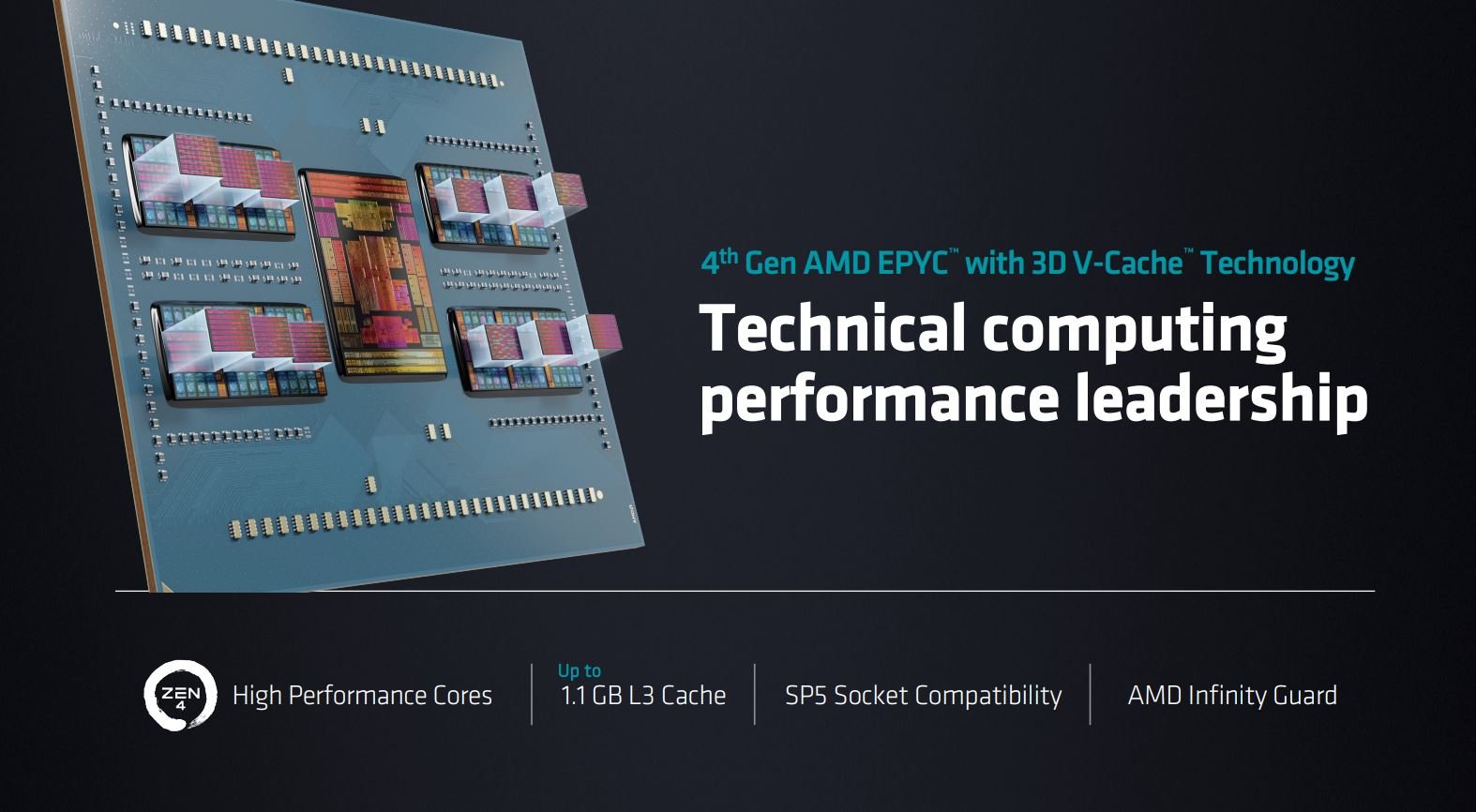

2.2GB+ of L3 cache per motherboard

The third ‘swim lane’ for 4th Generation Epyc comprises chips designated Genoa-X. These follow the same strategy as Milan-X, tripling L3 cache compared with non-X processors. Using the cache-stacking technology pioneered in the last generation, Genoa-X increases the maximum L3 cache per CPU from 384MB to 1,152MB.
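Multiplying out the cache figures shows where the ‘2.2GB+ per motherboard’ headline comes from. This Python sketch assumes a two-socket motherboard populated with top-bin Genoa-X parts; the names are illustrative.

```python
GENOA_L3_MB = 384     # maximum L3 per regular Genoa CPU
CACHE_MULTIPLIER = 3  # Genoa-X triples L3 via cache stacking, as Milan-X did
SOCKETS = 2           # assumption: fully populated two-socket motherboard

genoa_x_l3_mb = GENOA_L3_MB * CACHE_MULTIPLIER  # per CPU
board_l3_mb = genoa_x_l3_mb * SOCKETS            # per motherboard
print(f"Per CPU: {genoa_x_l3_mb:,}MB; per motherboard: {board_l3_mb:,}MB "
      f"(~{board_l3_mb / 1024:.2f}GB in binary units)")
```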

Many technical computing workloads use large datasets that don’t fit into regular Epyc processors’ cache allotment. For such workloads, tripling the L3, and so holding more of the working set close to the processing engines, can be far more beneficial than adding cores. That was true for Milan-X and is truer still for Genoa-X.

Underscoring this point, AMD compared a 96-core Genoa-X processor with a top-of-the-line, 60-core Intel Sapphire Rapids chip (Xeon Platinum 8490H) in a technical computing benchmark, and also showed how 32-core processors from each company perform at the same task. Unsurprisingly for a vendor-supplied comparison, the results show a landslide win for Epyc. AMD sees Genoa-X as a good fit for professional software licensed on a per-core basis, where extracting more performance from each licensed core helps keep costs down. Platforms featuring Genoa-X will be available from partners starting next quarter.

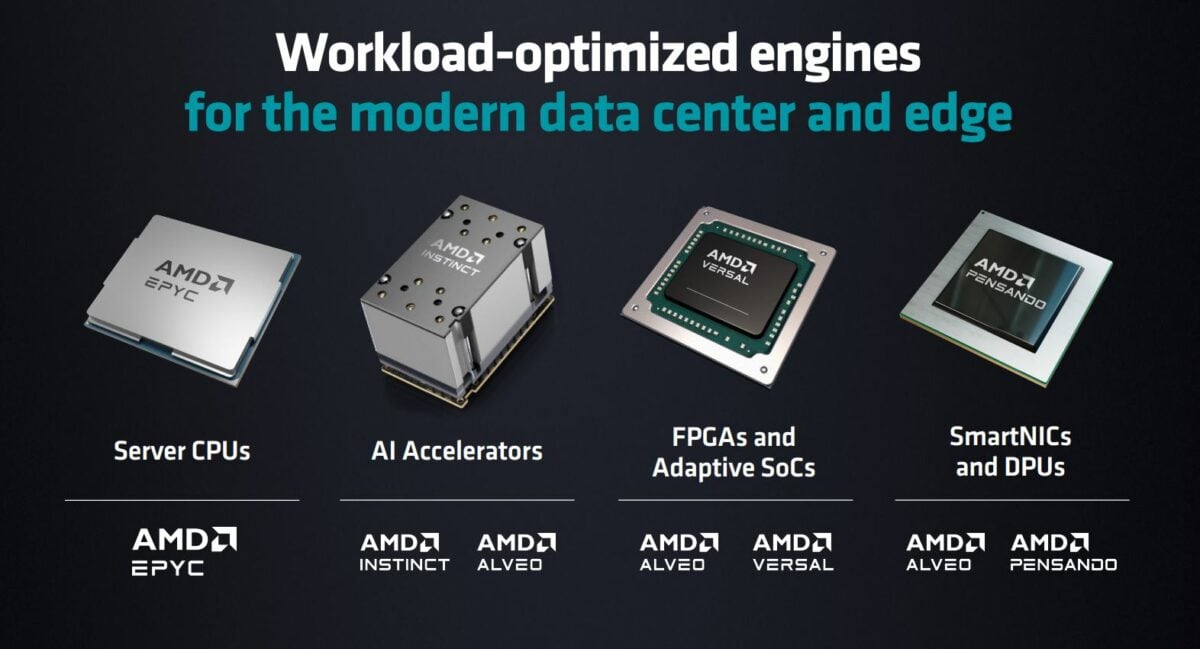

CPUs are one part of the AMD datacentre story, and their role is well understood. AI, however, has become the hottest buzzword in the industry. In the overarching ecosystem, generative AI is receiving the most attention: models trained on vast datasets produce new, seemingly realistic content, as evidenced by ChatGPT and Google Bard.

Instinct MI300X 192GB for generative AI

Right now, the vast majority of generative AI workloads run on hardware and software from rival Nvidia. AMD is acutely aware of this, as well as of a datacentre AI accelerator total addressable market (TAM) projected to reach $150bn by 2027. Its answer is an open-source approach running on Instinct hardware, the latest of which is the MI300 accelerator that takes pride of place in the upcoming El Capitan supercomputer.

MI300(A) is a 13-chiplet, 146bn-transistor design that houses 24 Zen 4 CPU cores, as found in 4th Generation Epyc, CDNA 3 GPU compute and 128GB of HBM3 memory across multiple stacks. Using this potent design as a base, AMD revealed tentative details of a model optimised for generative AI workloads, known as Instinct MI300X.

Because generative AI processing works best on GPUs rather than CPUs, the 153bn-transistor MI300X shelves the three-chiplet Epyc portion and devotes all resources to GPU compute via two further GPU chiplets, though AMD provided few further hard specifications. And because large language models (LLMs) can be huge, MI300X increases HBM3 memory to 192GB per accelerator, enough to fit a model such as the 40-billion-parameter Falcon-40B on a single 750W MI300X, said AMD. This larger memory footprint is viewed as a key advantage over Nvidia, as fewer AMD accelerators are needed to handle an LLM inference workload of a given size. A single MI300X can run models with up to 80 billion parameters, we were informed.
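A rough rule of thumb explains why 192GB matters. At 16-bit precision (FP16 or BF16), each parameter occupies two bytes, so the weights alone need about 2GB per billion parameters. The Python sketch below applies that assumption. It ignores the KV cache, activations and framework overhead, which all add to the real footprint, and the 80GB comparison accelerator is hypothetical.

```python
import math

BYTES_PER_PARAM = 2   # assumption: 16-bit (FP16/BF16) weights
MI300X_HBM3_GB = 192  # per-accelerator HBM3 capacity quoted by AMD


def weights_gb(params_billion: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Approximate weight footprint in GB (1GB = 1e9 bytes); excludes KV cache and activations."""
    return params_billion * bytes_per_param


def accelerators_needed(params_billion: float, memory_gb: float) -> int:
    """Minimum accelerators whose combined memory can hold the weights alone."""
    return math.ceil(weights_gb(params_billion) / memory_gb)


for name, params in (("Falcon-40B", 40), ("80bn-parameter model", 80)):
    need = weights_gb(params)
    print(
        f"{name}: ~{need:.0f}GB of weights; "
        f"{accelerators_needed(params, MI300X_HBM3_GB)} x 192GB MI300X vs "
        f"{accelerators_needed(params, 80)} x hypothetical 80GB accelerator"
    )
```

On these assumptions, Falcon-40B’s weights need roughly 80GB and an 80-billion-parameter model roughly 160GB. Both fit within a single MI300X’s 192GB, which is consistent with AMD’s figures if 16-bit weights are assumed.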

AMD will ship MI300X GPUs on a Universal Baseboard (UBB) that is physically and logically compatible with Nvidia’s eight-way DGX board, according to Lisa Su. The aim is to make adopting AMD solutions as frictionless as possible for customers who want an alternative to Nvidia’s unbridled hegemony. MI300X will begin sampling to key customers in Q3 2023, and we expect more in-depth details at a later date.

The wrap

Building on the momentum of 4th Generation Epyc, AMD is introducing models with higher core density and massively expanded L3 cache, Bergamo and Genoa-X respectively, and providing a glimpse of the hardware behind its nascent generative AI push.

The strategy and execution on the Epyc side feel more sure-footed than on AI processing, but that’s to be expected, as AMD has a longer history of convincing large-scale customers of the benefits of its server CPU technology. While it’s business as usual for Epyc, generative AI processing is a tantalisingly rich potential revenue seam that will require laser-focussed mining if AMD is to take market share from behemoth Nvidia.