AI apparently has an insatiable thirst for electricity, and this could be a cause for concern in the not-too-distant future. Arm CEO Rene Haas warns that AI may consume a quarter of America’s power requirements by 2030. That would be a significant increase over today’s share of 4% or less.

Now, 25% may not seem like much, but it’s sort of scary when you translate that figure into raw numbers. For example, the US generated about 4,178 billion kWh (approx. 4.2 trillion kilowatt-hours) of electricity in 2023. Without accounting for future growth, a 25% share would mean AI alone consuming about 1,045 billion kWh of electricity a year by 2030.
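As a rough back-of-the-envelope check, the share can be converted into kilowatt-hours directly. The sketch below assumes total US generation stays flat at the 2023 level and treats the 4% figure as an upper bound on today's share; the variable and function names are purely illustrative.

```python
# Figures quoted in the article; everything else here is illustrative.
US_GENERATION_2023_BILLION_KWH = 4_178  # total US generation in 2023
AI_SHARE_TODAY = 0.04                   # "4% or less", taken as an upper bound
AI_SHARE_2030 = 0.25                    # Haas's projection for 2030


def share_of_generation(total_billion_kwh: float, share: float) -> float:
    """Return the slice of total generation (billion kWh) for a given share."""
    return total_billion_kwh * share


today = share_of_generation(US_GENERATION_2023_BILLION_KWH, AI_SHARE_TODAY)
projected = share_of_generation(US_GENERATION_2023_BILLION_KWH, AI_SHARE_2030)

print(f"AI today (at most): {today:,.1f} billion kWh per year")
print(f"AI at a 25% share:  {projected:,.1f} billion kWh per year")
print(f"Increase factor:    {projected / today:.2f}x")
```

Under those assumptions, a 25% share works out to roughly 1,045 billion kWh a year, a little over six times the upper bound for today's usage. If overall generation grows by 2030, the absolute figure would be higher.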

AI’s rising power demand

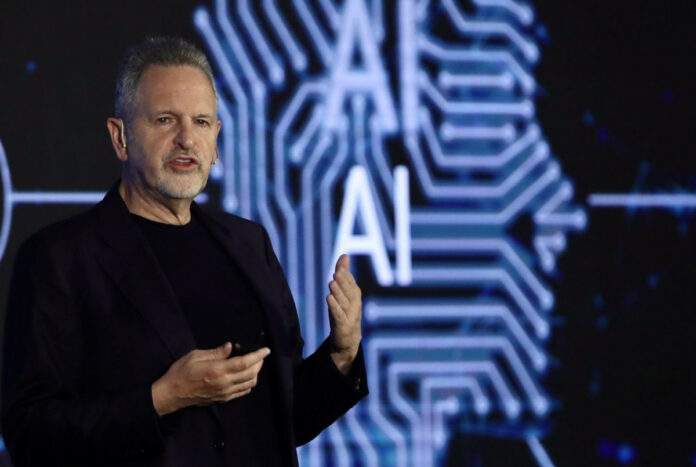

The issue was discussed in a Wall Street Journal interview on Tuesday. Haas specifically blames large language models (LLMs). He explained that AI models, including OpenAI’s ChatGPT, require an “insatiable” amount of electricity to operate effectively. “The more information they gather, the smarter they are, but the more information they gather to get smarter, the more power it takes,” he said.

The Arm CEO is not alone in his predictions. The International Energy Agency’s (IEA) most recent Electricity 2024 report suggests that power consumption for AI data centres is ten times the amount it was back in 2022. Specifically, it noted that a single interaction with ChatGPT uses nearly 10 times the electricity of an average Google Search.

The AI “gold rush” will only continue to add to this alarming increase in power requirements. Accordingly, Haas has emphasized the need to create more efficient and sustainable AI solutions to curb high power demands.

To that end, Arm and its parent company, SoftBank Group, have pledged $25 million to help fund a US-Japan collaboration supporting AI research at universities in both countries. The project, which major tech companies like Amazon and Nvidia also directly support, counts finding innovative ways to curb AI electricity usage among its initiatives.