January 10, 2023 is the date Intel has set to debut 4th Generation Xeon processors, formerly known as Sapphire Rapids. Beset by numerous delays at a time when rival AMD has made considerable strides with Epyc chips, Intel is coming out swinging as we inch closer to launch. As it provides further details on HBM2e-equipped models known as Xeon Max, Intel's performance claims border on astonishing.

Xeon Taken To The Max

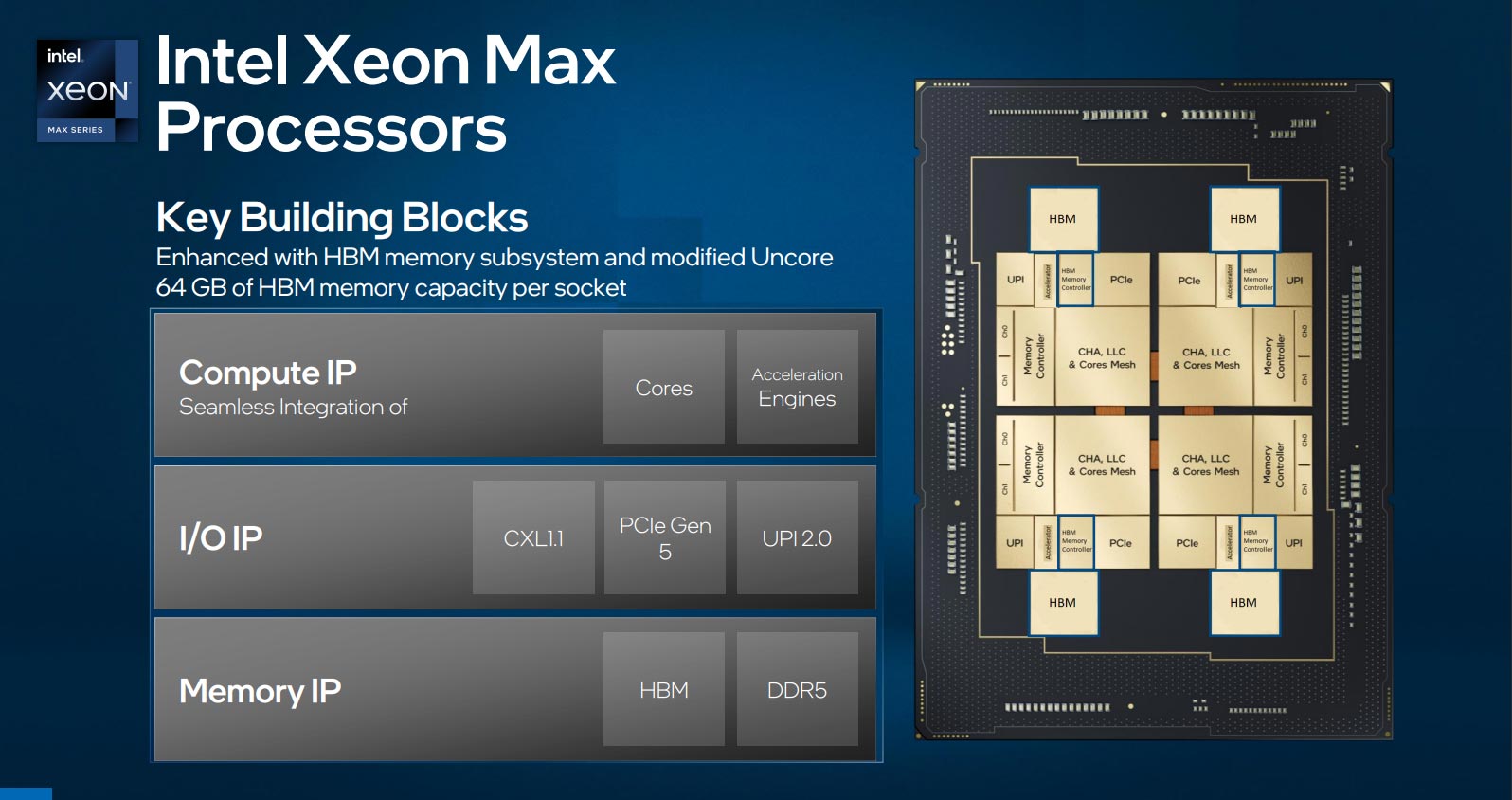

Getting the headline specs out of the way first, Intel is releasing two variants of Sapphire Rapids Xeons: those with and without on-chip HBM2e (High-Bandwidth Memory). Non-HBM2e chips scale to a maximum of 60 performance (P) cores and 120 threads per socket, while HBM2e models top out at 56 cores and 112 threads. Intel's reasoning for reducing the core count rests with the additional juice required to drive heaps of on-chip memory.

Maximum power consumption is slated at 350W, which is considerably higher (roughly 30 per cent) than the current best-in-class Xeon Platinum 8380's 270W but in line with rival Epyc processors and Xeon server-on-module offerings. Sapphire Rapids is a forward-looking architecture equipped with the memory-expanding CXL 1.1 interconnect and PCIe Gen 5 (both of which are also present on upcoming Epyc Genoa, by the way), and it's built from multiple tiles connected via EMIB packaging.

Key to Xeon Max's success in the server environment are brand-new compute and memory technologies. Intel is betting big on machine learning and AI by provisioning silicon for AMX (Advanced Matrix Extensions). Intel keeps the eight memory channels of incumbent solutions, but the shift to DDR5-4800 increases on-paper bandwidth by 50 per cent over Ice Lake-SP's DDR4-3200.
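The 50 per cent figure falls out of the peak theoretical bandwidth formula: channels × transfer rate × bytes per transfer. Here's a minimal sketch, assuming a 64-bit (8-byte) data path per channel for both DDR4 and DDR5; these are on-paper peaks, not measured throughput.

```python
# Peak theoretical bandwidth = channels x transfers per second x bytes per transfer.
# Assumption: each channel moves 64 bits (8 bytes) per transfer for DDR4 and DDR5 alike.
BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(channels: int, mega_transfers_per_sec: int) -> float:
    """Return peak bandwidth in decimal GB/s."""
    return channels * mega_transfers_per_sec * BYTES_PER_TRANSFER / 1000


sapphire_rapids = peak_bandwidth_gbs(channels=8, mega_transfers_per_sec=4800)  # DDR5-4800
ice_lake_sp = peak_bandwidth_gbs(channels=8, mega_transfers_per_sec=3200)      # DDR4-3200

print(f"Sapphire Rapids: {sapphire_rapids:.1f} GB/s")      # 307.2 GB/s
print(f"Ice Lake-SP:     {ice_lake_sp:.1f} GB/s")          # 204.8 GB/s
print(f"Uplift: {sapphire_rapids / ice_lake_sp - 1:.0%}")  # 50%
```

The Ice Lake-SP result of roughly 205 GB/s is consistent with the "around 200GB" figure Intel quotes for the previous generation.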

Building out using a tiled approach means Intel can be flexible in what it adds to specific SKUs. For now, though, the only major difference is whether Xeons feature HBM2e memory or not.

HBM2e Leading Performance Charge

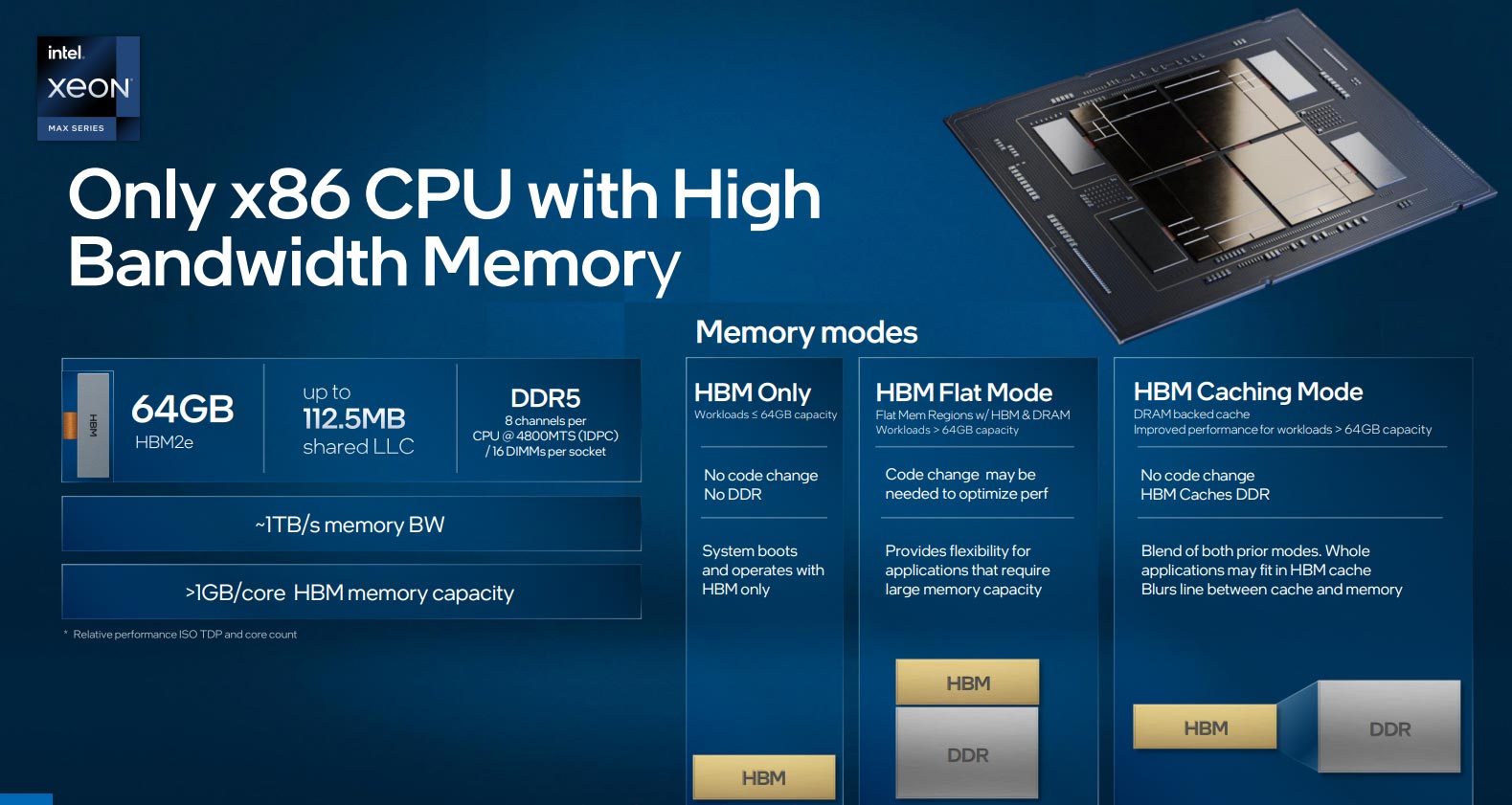

Segueing nicely, Xeon Max processors carry 64GB of HBM2e, meaning there is always more than 1GB per core at the ready. Add up to 112.5MB of last-level cache (LLC), and Intel boosts the on-chip memory footprint by a huge amount compared with any server processor that has come before.
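Because 56 cores is the Xeon Max ceiling, the 56-core part is the worst case for per-core capacity, and any configuration with fewer cores gets a larger share. A quick check, assuming the 64GB is notionally divided evenly across cores (the hardware does not actually partition HBM2e this way):

```python
HBM2E_CAPACITY_GB = 64  # per Xeon Max processor, per Intel
MAX_CORES = 56          # top Xeon Max core count


def hbm_per_core_gb(cores: int, capacity_gb: float = HBM2E_CAPACITY_GB) -> float:
    """Notional HBM2e capacity per core, in GB."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return capacity_gb / cores


# Fewer cores only increases the share, so 56 cores is the lower bound.
print(f"{hbm_per_core_gb(MAX_CORES):.2f} GB per core")  # 1.14 GB per core
```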

Having gobs of fast memory is a boon for HPC, AI and data analytics applications, as it allows more of the working set to sit in fast on-chip memory rather than in DDR5. Arranged in four stacks of 16GB, in addition to eight-channel DDR5, Xeon Max has approximately 1TB/s of memory bandwidth, Intel reckons, compared with around 200GB/s on Xeon Ice Lake-SP.
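The capacity and bandwidth figures can be cross-checked with simple arithmetic. This sketch takes Intel's approximate numbers at face value and assumes "1TB/s" means 1,000 GB/s (decimal units):

```python
STACKS = 4
GB_PER_STACK = 16
XEON_MAX_BW_GBS = 1000    # "approximately 1TB/s", per Intel (decimal units assumed)
ICE_LAKE_SP_BW_GBS = 200  # "around 200GB/s", per Intel

total_hbm_gb = STACKS * GB_PER_STACK
bandwidth_ratio = XEON_MAX_BW_GBS / ICE_LAKE_SP_BW_GBS

print(f"HBM2e capacity: {total_hbm_gb} GB")                  # 64 GB
print(f"Bandwidth vs Ice Lake-SP: ~{bandwidth_ratio:.0f}x")  # ~5x
```

Both inputs are rounded marketing figures, so the roughly 5x ratio is an order-of-magnitude guide rather than a precise uplift.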

HBM can be configured in three modes. The first, HBM-only mode, is interesting as the server operates without any DDR5; all memory transactions are handled by the 64GB of HBM2e. Should more than 64GB be required, the application spans multiple sockets and nodes, which is common in the HPC space. In the second, known as Flat Mode, HBM2e and DDR5 are exposed to software as two distinct NUMA nodes. The operating system and services run primarily on DDR5, leaving HBM2e free for applications. In Caching Mode, however, HBM2e caches the contents of DDR5. Because this cache is transparent to software, no code changes are needed, and all accesses are handled by the memory controllers.
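The practical difference between the three modes is what software can see and allocate from. The following is a simplified, per-socket model of the description above, not Intel's API; the names `HbmMode`, `MemoryView` and `software_view`, and the 512GB DDR5 configuration, are hypothetical. It also treats Flat Mode as exactly two NUMA nodes, as described here, whereas real systems may split memory into more nodes.

```python
from dataclasses import dataclass
from enum import Enum

HBM_GB = 64  # HBM2e per processor


class HbmMode(Enum):
    HBM_ONLY = "HBM-only"
    FLAT = "Flat"
    CACHE = "Caching"


@dataclass
class MemoryView:
    numa_regions_gb: list[float]  # capacity of each region software can allocate from
    hbm_is_cache: bool            # True when HBM2e is hidden from software and caches DDR5

    @property
    def addressable_gb(self) -> float:
        return sum(self.numa_regions_gb)


def software_view(mode: HbmMode, ddr5_gb: float) -> MemoryView:
    """Hypothetical per-socket memory view in each HBM mode (simplified model)."""
    if mode is HbmMode.HBM_ONLY:
        # No DDR5 installed; ddr5_gb is ignored and all allocations come from HBM2e.
        return MemoryView([HBM_GB], hbm_is_cache=False)
    if mode is HbmMode.FLAT:
        # DDR5 and HBM2e appear as two distinct NUMA nodes.
        return MemoryView([ddr5_gb, HBM_GB], hbm_is_cache=False)
    # Caching Mode: only DDR5 is addressable; HBM2e transparently caches it.
    return MemoryView([ddr5_gb], hbm_is_cache=True)


for mode in HbmMode:
    view = software_view(mode, ddr5_gb=512)  # hypothetical 512GB DDR5 per socket
    print(f"{mode.value:9} addressable={view.addressable_gb:g}GB "
          f"regions={len(view.numa_regions_gb)} hbm_cache={view.hbm_is_cache}")
```

Flat Mode offers the most addressable capacity but leaves placement to software, while Caching Mode trades the 64GB of addressable space for transparency.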

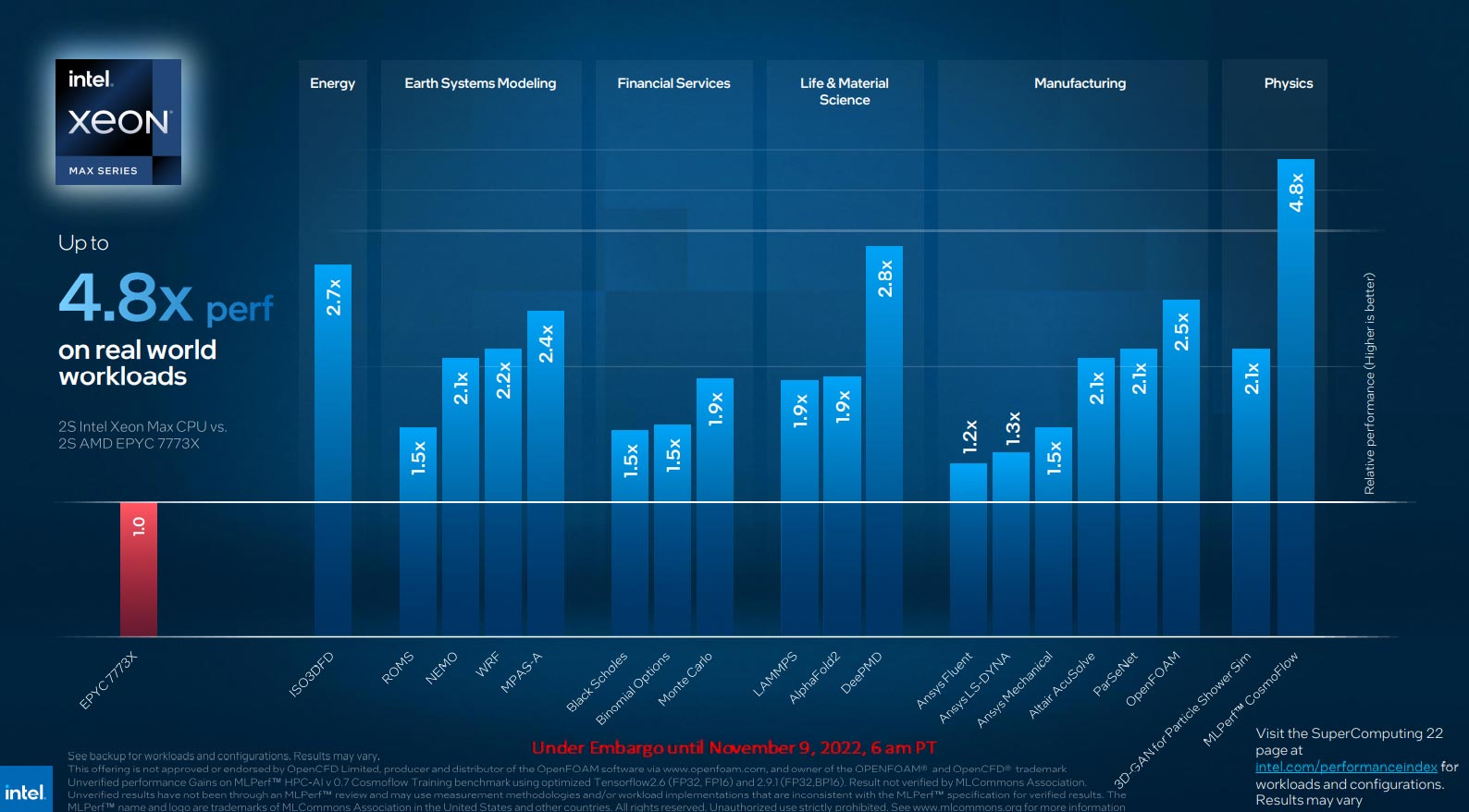

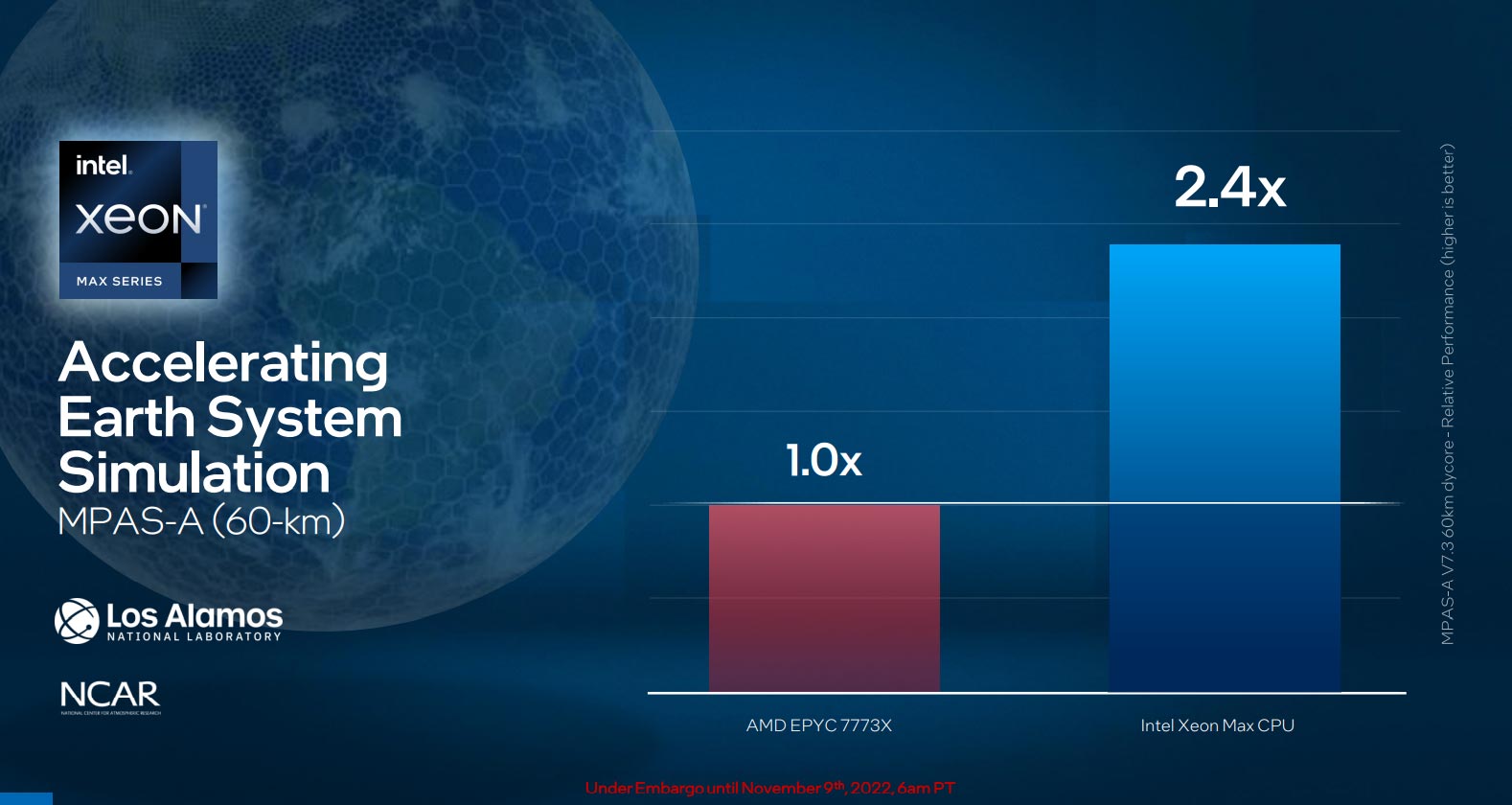

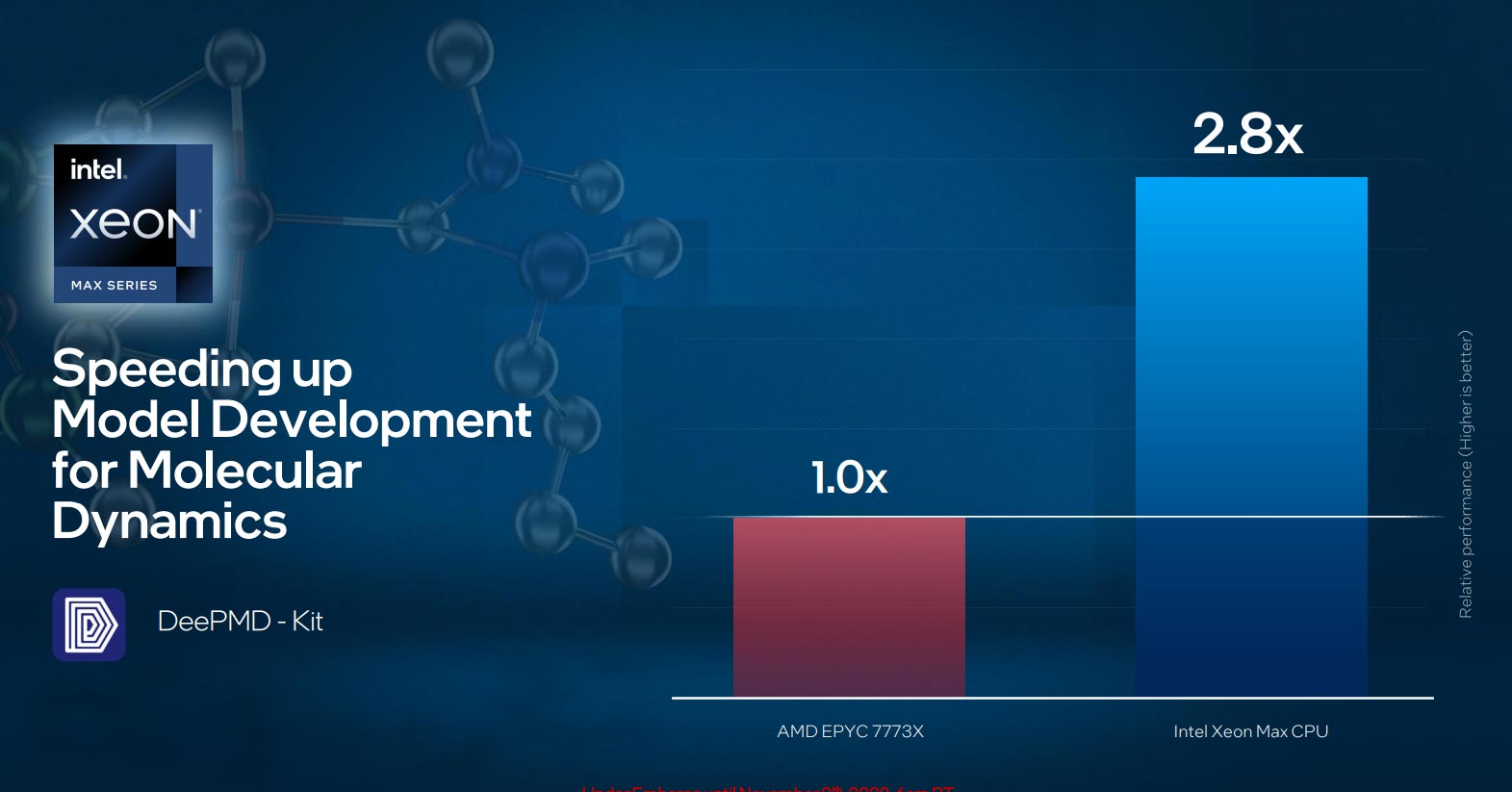

The startling benefits of having HBM2e on the chip are illustrated by Intel-provided benchmarks comparing a top-bin Xeon Max with the in-market AMD Epyc 7773X. Intel is naturally and deliberately choosing applications that are very sensitive to memory bandwidth and proximity, and it's conceivable that all of them fit within the 64GB of HBM2e. Tested in HBM-only mode, Xeon Max shows speedups ranging from 1.2x to around 2x, with outliers jumping close to 5x.

Benchmark Bonanza

There are more provisos here than we can shake a large stick at. The elephant in the room is that Intel hasn't yet released 4th Generation Xeon Max. The benchmarks are tuned primarily to show the benefits of having 64GB of HBM2e present, and not all applications will scale this way. Compute-bound tasks are likely to run faster on Epyc due to its greater core count, and with the 96-core, 192-thread 4th Generation Epyc Genoa launching tomorrow, these comparisons will quickly date. Nevertheless, Intel puts forward a strong case for HBM2e in memory-bound applications.

The performance story is much the same when comparing the upcoming 4th Generation Xeon Max against today's 40-core, 80-thread Xeon Platinum 8380.

Server performance is taking on another dimension with the imminent release of 4th Generation models from AMD and Intel. Epyc is primed for compute-centric throughput whilst Xeon Max looks to excel in memory-intensive scenarios. The perfect server processor may well be a mixture of Genoa and Sapphire Rapids. Anyone care to build one?