I’m about to say something mildly controversial. Other than the frankly ludicrous RTX 5090, extending GPU dominion from up high in unashamed fashion, the three other Nvidia GeForce RTX 50 Series GPUs announced at CES 2025 are not a Big Deal™ in the traditional hardware sense. Usually hidden out of sight behind talk of emboldened specs, the real star of the show is what Nvidia has done with software and how across-the-board improvements benefit millions of gamers.

Don’t get me totally wrong, mind you. No matter which game I’m playing, I’d rather have an RTX 5080 over an RTX 4080 (Super) and RTX 5070 over an RTX 4070 (Super) every day of the week and twice on Sundays.

Judge me by my CUDA core count, do you?

Yet I deliberately temper enthusiast expectations, knowing Nvidia’s own testing shows the generational rasterisation leap between equivalent cards is smaller than it’s been for a long while. I’ll have to run my own numbers, of course, but the base hardware specs of the RTX 5080 and RTX 4080 Super, both launched at $999 a year apart, reveal modest differences.

| GPU | RTX 5080 | RTX 4080 Super |
|---|---|---|
| Architecture | Blackwell | Ada Lovelace |
| Process | TSMC 4N | TSMC 4N |
| Die codename | GB203 | AD103 |
| Die size | 378mm² | 378.6mm² |
| Transistors | 45.6bn | 45.9bn |
| CUDA cores | 10,752 | 10,240 |
| Base clock | 2.30GHz | 2.29GHz |
| Boost clock | 2.62GHz | 2.55GHz |
| CUDA FP32 | 56.34 TFLOPS | 52.22 TFLOPS |
| Memory | 16GB | 16GB |
| Memory type | GDDR7 | GDDR6X |
| Memory bus | 256-bit | 256-bit |
| Memory speed | 30Gbps | 23Gbps |
| Memory bandwidth | 960GB/s | 736GB/s |
| Ray tracing | 171 TFLOPS | 121 TFLOPS |
| Tensor Core | 1,801 TOPS | 836 TOPS |
| Total graphics power | 360W | 320W |
| Required system power | 850W | 750W |
| Price | $999 | $999 |
| Availability | January 2025 | January 2024 |

Digesting the numbers gives pause for thought. Don’t expect much extra on traditional throughput, as both cards are eerily similar in transistor count, die size, and ye olde FP32 TFLOPS, a throughput calculation that, in tandem with memory bandwidth, has historically largely defined gaming performance. There’s a nice 30% uptick in bandwidth via the use of GDDR7 running at 30Gbps, while Nvidia has bolstered ray tracing capability by a larger degree, roughly 40%. The key outlier is all-important AI TOPS, which I’ll get on to later. You’ll soon see why this number is so important for this generation and the next.
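For the curious, the headline FP32 and bandwidth figures in the table fall out of simple arithmetic. The sketch below assumes the usual convention of two FLOPs (one fused multiply-add) per CUDA core per clock at the quoted boost clock; it’s a peak-rate calculation, not a prediction of in-game performance.

```python
# Derive peak figures from the base specs in the table above.
# Assumption: 2 FLOPs (one fused multiply-add) per CUDA core per boost clock.

def fp32_tflops(cuda_cores, boost_ghz):
    """Peak FP32 throughput in TFLOPS."""
    return cuda_cores * 2 * boost_ghz / 1000


def bandwidth_gbs(bus_bits, gbps_per_pin):
    """Peak memory bandwidth in GB/s: bus width in bytes times per-pin data rate."""
    return bus_bits / 8 * gbps_per_pin


specs = {
    "RTX 5080": {"cores": 10_752, "boost": 2.62, "bus": 256, "mem": 30, "tops": 1801},
    "RTX 4080 Super": {"cores": 10_240, "boost": 2.55, "bus": 256, "mem": 23, "tops": 836},
}

results = {}
for name, s in specs.items():
    results[name] = (fp32_tflops(s["cores"], s["boost"]), bandwidth_gbs(s["bus"], s["mem"]))
    print(f"{name}: {results[name][0]:.2f} TFLOPS, {results[name][1]:.0f} GB/s")

new, old = results["RTX 5080"], results["RTX 4080 Super"]
print(f"FP32 uplift: {new[0] / old[0] - 1:.1%}")        # roughly 7.9%
print(f"Bandwidth uplift: {new[1] / old[1] - 1:.1%}")   # roughly 30.4%
tops_ratio = specs["RTX 5080"]["tops"] / specs["RTX 4080 Super"]["tops"]
print(f"AI TOPS ratio: {tops_ratio:.2f}x")              # roughly 2.15x
```

On paper, then, the FP32 gain is in single digits while bandwidth and AI TOPS move far more.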

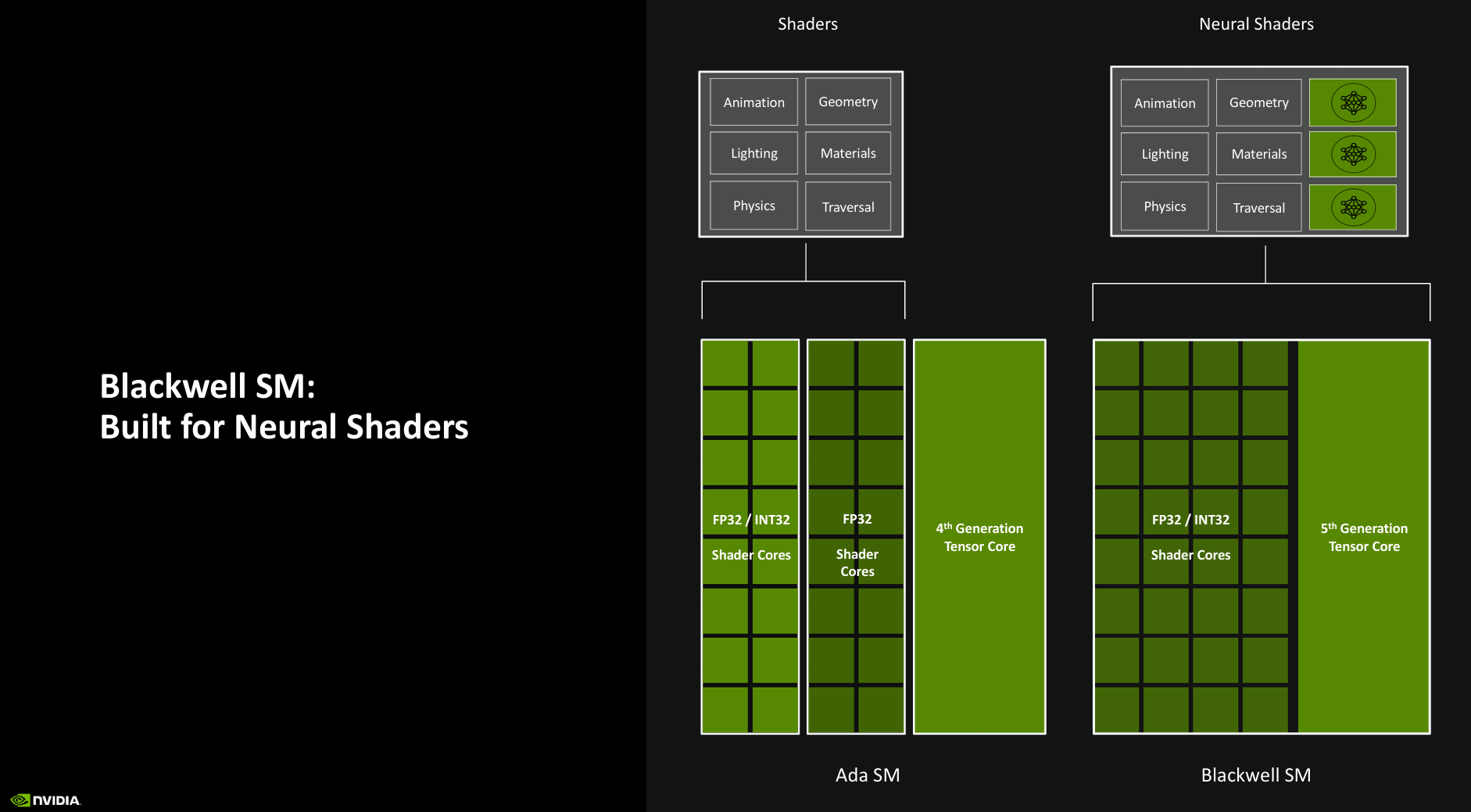

Perhaps I’m being too harsh on Blackwell’s architecture and its SM units. Zooming into one SM shows the same 128 shader cores, albeit arranged differently. Previously, RTX 40 Series, aka Ada, split the cores into 64 FP32-only units (dark green) and a further 64 capable of either FP32 or INT32. Blackwell keeps the same total, but all 128 can now process floating point or integer work. The distinction matters insofar as games do use INT32, so having the flexibility to run it on every core is useful. It’ll be difficult for me to tease out the real-world benefits, mind.
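To see why the unified cores may be hard to measure in games, here’s a deliberately idealised issue model of a single SM. It assumes perfect scheduling with no dependencies or latency and takes the lane counts from the description above; it is a toy, not how Nvidia’s warp schedulers actually work.

```python
import math

LANES = 128        # shader cores per SM, per the text
SHARED_LANES = 64  # Ada: lanes that can run INT32 as well as FP32


def ada_cycles(fp32_ops, int32_ops):
    """Ada: 64 FP32-only lanes plus 64 lanes that run FP32 or INT32."""
    int_bound = math.ceil(int32_ops / SHARED_LANES)          # INT32 limited to shared lanes
    total_bound = math.ceil((fp32_ops + int32_ops) / LANES)  # every lane kept busy
    return max(int_bound, total_bound)


def blackwell_cycles(fp32_ops, int32_ops):
    """Blackwell, as described: all 128 lanes run FP32 or INT32."""
    return math.ceil((fp32_ops + int32_ops) / LANES)


# Same total work (2,048 ops), increasingly integer-heavy mix.
for fp, integer in [(2048, 0), (1536, 512), (1024, 1024), (256, 1792)]:
    print(fp, integer, ada_cycles(fp, integer), blackwell_cycles(fp, integer))
```

In this toy model the two layouts only diverge once INT32 work exceeds half the instruction mix (the last case takes 28 cycles on the Ada layout versus 16), which is one reason real-world gains may prove elusive.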

The other key change is the addition of neural shading, which brings neural networks into programmable shaders. Similar to how DLSS works, the tech builds more realistic-looking textures from highly compressible data. I was shown a demo where RTX Neural Faces uses a real-time AI model to transform a simple rasterised face, making it look far more natural. Think of it the way DLSS takes a low-resolution input and creates a higher-quality output. The demo below shows that AI-enhanced Neural Materials both look more lifelike and take up less valuable VRAM. Do understand that, as with many new ways of doing things, developers will need to integrate neural shading into their engines.
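Nvidia hasn’t detailed the networks behind Neural Materials here, so the following is only a toy illustration of the general idea: store a compact latent vector per texel and decode it with a tiny multilayer perceptron at shading time. The layer sizes, the untrained random weights and the eight-channel comparison material are all hypothetical.

```python
import math
import random

# Toy neural-material decoder (hypothetical sizes, untrained random weights).
LATENT = 4  # latent channels stored per texel (hypothetical)
HIDDEN = 8  # hidden-layer width (hypothetical)
OUT = 3     # decoded RGB channels

rng = random.Random(0)  # fixed seed so the toy weights are reproducible
W1 = [[rng.uniform(-1, 1) for _ in range(LATENT)] for _ in range(HIDDEN)]
b1 = [0.0] * HIDDEN
W2 = [[rng.uniform(-1, 1) for _ in range(HIDDEN)] for _ in range(OUT)]
b2 = [0.0] * OUT


def decode_texel(latent):
    """Two-layer MLP: latent -> ReLU hidden layer -> sigmoid RGB in (0, 1)."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, latent)) + b)
              for row, b in zip(W1, b1)]
    return [1.0 / (1.0 + math.exp(-(sum(w * h for w, h in zip(row, hidden)) + b)))
            for row, b in zip(W2, b2)]


# Per-texel storage, assuming 8 bits per channel in both cases: a hypothetical
# 8-channel material (albedo RGB, normal XYZ, roughness, metalness) versus the
# latent vector. The MLP weights are stored once and shared by every texel.
classic_bytes_per_texel = 8
neural_bytes_per_texel = LATENT

rgb = decode_texel([0.2, -0.5, 0.9, 0.1])
print(rgb)
print(f"{classic_bytes_per_texel} B vs {neural_bytes_per_texel} B per texel")
```

The trade is memory for compute: fewer bytes sit in VRAM, but every shaded texel now runs a small network, which is where tensor-core throughput comes in.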

Nevertheless, back on point, I’ve been doing this GPU analysis game long enough to translate the table above into probable performance at 4K with all the visual bells and whistles turned on. My inkling is you’ll see a 10-15% rasterised framerate improvement between RTX 5080 and RTX 4080 Super. I may be proved wrong when reviews arrive, of course, but I’m certain you won’t see 30-40% improvements without resorting to novel image-processing techniques.

RTX 50 Series focus was never on rasterisation

And that’s the nub of the matter. Nvidia is acutely aware that spiralling transistor costs and investment in leading-edge fabrication processes are not the way to go when building better graphics cards. Innovation necessarily needs to occur outside of silicon, and this is precisely why game-changing investment, if you’ll excuse the egregious pun, has been made in RTX and DLSS technologies over the past six years.

GeForce RTX 50 Series proudly stands as a poster child for extracting meaningful generational performance uplifts without investing heavily in expensive things like more CUDA cores. It’s the only series with the full gamut of DLSS 4 technologies in tow, including Multi-Frame Generation (MFG), which inserts up to three generated frames between each pair of traditionally rendered frames. And as everyone knows, more frames equals more frags.
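The ordering MFG produces is easy to picture. This sketch assumes, as with existing DLSS Frame Generation, that generated frames are interpolated between two rendered frames, so the last rendered frame in a run has nothing after it to pair with. Frame labels are illustrative.

```python
def mfg_sequence(rendered_frames, generated_per_gap=3):
    """Order of displayed frames: R = rendered, G = generated (labels illustrative)."""
    if not 0 <= generated_per_gap <= 3:
        raise ValueError("MFG inserts between 0 (off) and 3 frames per gap")
    out = []
    for i in range(rendered_frames):
        out.append(f"R{i}")
        # Interpolation needs the *next* rendered frame, so the last one gets none.
        if i < rendered_frames - 1:
            out.extend(f"G{i}.{k}" for k in range(1, generated_per_gap + 1))
    return out


def displayed_fps(rendered_fps, generated_per_gap=3):
    """Steady-state output rate: every rendered frame is followed by N generated ones."""
    return rendered_fps * (1 + generated_per_gap)


print(mfg_sequence(3))
# ['R0', 'G0.1', 'G0.2', 'G0.3', 'R1', 'G1.1', 'G1.2', 'G1.3', 'R2']
print(displayed_fps(60.0))  # 240.0
```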

Sounds easy enough on paper, as GeForce RTX 40 Series GPUs also have Frame Generation capabilities, but the two are not the same. Slotting in a second or third frame on, say, RTX 4080 becomes increasingly complex for purely timing reasons. Each subsequent frame requires fresh Optical Flow information alongside a DLSS-derived AI model. Behind the scenes, a lot’s going on to build an inserted frame, and that’s verging on impossible to do in the short time between traditionally rendered frames. Nvidia grappled with this problem for a while and realised that while MFG is a great idea on paper, it requires a new approach.
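The timing squeeze is plain arithmetic. Assuming a hypothetical 60fps rendered base, every extra inserted frame shrinks the average gap between displayed frames, which is roughly the window each inserted frame has to be ready in.

```python
def per_frame_window_ms(base_fps, generated_per_gap):
    """Average interval between displayed frames when N frames fill each gap."""
    return 1000.0 / (base_fps * (1 + generated_per_gap))


BASE_FPS = 60.0  # hypothetical rendered frame rate
for n in (1, 2, 3):
    print(f"{n} generated: {per_frame_window_ms(BASE_FPS, n):.2f} ms per displayed frame")
# 1 generated: 8.33 ms, 2 generated: 5.56 ms, 3 generated: 4.17 ms
```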

Step up, Blackwell. RTX 50 Series GPUs do a few things differently. MFG now works because the hardware-based Optical Flow Accelerator is replaced by an AI model that runs once per rendered frame yet generates multiple frames. It’s 40% faster and requires 30% less VRAM, too. To solve the second part of the MFG problem, namely generating the high-quality imagery multiple frames demand, Nvidia goes to town on RTX 50 Series AI capabilities. DLSS works best with abundant AI TOPS horsepower, and it’s these TOPS, over and above the last generation, that help generate and slot in the second and third frames pertinent to MFG. Without an abundance of TOPS, you get an image that flops.

To that point, RTX 5080 has over 2x the capability of RTX 4080 Super. There’s more to it than that, actually, as RTX 50 Series natively supports the faster FP4 format common in AI tasks – RTX 40 Series runs it much slower due to a lack of specific hardware – but you get the picture. Go big on AI or go home. There is no other sensible way to deliver mind-numbing framerates.

The last piece of the jigsaw is how additional frames are delivered to your display. Even if RTX 40 Series could generate multiple frames as quickly as RTX 50, there’s the question of pacing them evenly so that performance feels smooth. This is why, on RTX 50 Series, the frame-pacing onus shifts from the CPU to a dedicated hardware engine. In a nutshell, GPU frame pacing, AI-based frame generation, and multiple DLSS frames all combine to deliver DLSS 4 in its fullest form.
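Pacing is about spacing, not just count. The sketch below spreads generated frames evenly between two rendered frames and measures jitter as the spread between the longest and shortest frame-to-frame gap. The uneven timeline is hypothetical, and none of this describes how Nvidia’s dedicated hardware actually meters frames.

```python
def paced_timestamps(t_prev_ms, t_next_ms, generated):
    """Evenly spaced present times for N generated frames between two rendered ones."""
    step = (t_next_ms - t_prev_ms) / (generated + 1)
    return [t_prev_ms + step * k for k in range(1, generated + 1)]


def max_jitter_ms(timestamps):
    """Spread between the longest and shortest frame-to-frame interval."""
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    return max(gaps) - min(gaps)


even = [0.0, *paced_timestamps(0.0, 16.0, 3), 16.0]
uneven = [0.0, 2.0, 5.0, 13.0, 16.0]  # hypothetical badly paced output

print(even)                   # [0.0, 4.0, 8.0, 12.0, 16.0]
print(max_jitter_ms(even))    # 0.0
print(max_jitter_ms(uneven))  # 6.0
```

Both timelines show five frames in 16ms, so an fps counter can’t tell them apart, yet only the first would feel smooth.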

Results are impressive, frankly. You’re looking at Cyberpunk 2077 with image quality turned up to 11, which is why even an RTX 5090 stumbles to a mere 27fps natively at 4K. Cranking up to DLSS 4 MFG increases frame rates roughly ninefold whilst reducing latency. Sure, you could easily dial back the native (DLSS Off) settings to hit 60fps, but Nvidia is illustrating a corner case with RT set to path tracing.

I had a chance to play through this very game during an Editors Day recently. The title’s a staple in the Club386 testing regime so I know it well. Hooked up to a 4K240 OLED, the smarts behind DLSS 4 offer the most fluid experience I’ve ever had in Night City. It actually feels weird to see the stratospheric framerate in what is on paper a ridiculously taxing setting.

This experience teaches me a thing or two about graphics. Long gone are the days when iterative performance increases were achieved by brute-forcing CUDA cores. Wanted to run Crysis twice as fast? You patiently waited until either Nvidia or AMD made a GPU twice as big and twice as thirsty. If that trend had continued until today, going from 27fps to 243fps would require a GPU that cost $10k+ and drew thousands of watts. Put more succinctly, it just ain’t gonna happen.
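A quick back-of-envelope check on those Cyberpunk 2077 figures. It assumes MFG runs in 4x mode (one rendered plus three generated frames) and attributes the remainder to DLSS Super Resolution. It also assumes brute-force rendering scales linearly in price and power from an RTX 5090 at its $1,999 launch price and 575W total graphics power. Real GPUs don’t scale linearly, so treat this as illustration only.

```python
# Figures quoted in the article for Cyberpunk 2077 at 4K on an RTX 5090.
native_fps = 27
dlss4_fps = 243

# Assumption: MFG in 4x mode, i.e. 1 rendered + 3 generated frames.
mfg_factor = 4

total_gain = dlss4_fps / native_fps         # overall multiplier
rendered_fps = dlss4_fps / mfg_factor       # frames actually rendered per second
upscaling_gain = rendered_fps / native_fps  # share attributed to Super Resolution

# Assumption: brute-force scaling is linear in price and power from an RTX 5090.
rtx5090_price_usd = 1999
rtx5090_tgp_w = 575
brute_force_price = rtx5090_price_usd * total_gain
brute_force_power = rtx5090_tgp_w * total_gain

print(f"Total gain: {total_gain:.1f}x")                                      # 9.0x
print(f"Rendered base: {rendered_fps:.2f}fps ({upscaling_gain:.2f}x from upscaling)")
print(f"Brute-force equivalent: ${brute_force_price:,.0f}, {brute_force_power:,.0f}W")
```

Under these assumptions, roughly 60fps is actually rendered and the hypothetical brute-force card lands around $18k and over 5kW.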

Don’t worry, purists: you’ll never get rid of traditional rasterisation, because DLSS requires a base foundation to work from. Nvidia boss Jensen Huang alluded to as much in a press Q+A, yet the debate between raster and DLSS will doubtless rage on. For me, all computer graphics are attempts at recreating the real world as best we can, so whether it’s one technique or another, I don’t really care. I only care that it works. After six years of evolution, I strongly feel DLSS is now a must-use feature on RTX graphics cards.

The pursuit of better performance and higher-quality imagery is a cornerstone of DLSS evolution. To be blunt, the first few iterations were, at best, middling. Sure, there were higher framerates though the visual cost was sometimes high. Instances of ghosting, shimmering, and things not looking quite right plagued nascent implementations. Having followed the trajectory since launch, I’d rather now game with DLSS Quality than turn the native resolution down.

Driving up framerate and image quality

Up until today, DLSS used a pixel-generating methodology based on Convolutional Neural Networks (CNNs). Optimised over the previous six years, it’s as refined as the technology allows. Now, however, helping the DLSS cause is a more modern technique known as the Transformer Model. Introduced by Google researchers in 2017, this deep learning architecture has become the gold standard for AI and underpins popular applications such as ChatGPT.
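To make the CNN-versus-Transformer distinction concrete, here is a minimal single-head self-attention pass next to a plain 1D convolution. It uses identity query/key/value projections where a real Transformer would use learned weight matrices, and it says nothing about DLSS’s actual network, which Nvidia hasn’t published. The point is structural: every attention output draws on every input, whereas each convolution output only sees a fixed local window.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections (a simplification)."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)  # attention over *all* tokens
        out.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)])
    return out


def conv1d(signal, kernel):
    """'Valid' 1D convolution (cross-correlation): each output sees len(kernel) inputs."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy feature vectors, e.g. pixels or patches
print(self_attention(tokens))
print(conv1d([1.0, 2.0, 3.0, 4.0], [0.25, 0.5, 0.25]))  # [2.0, 3.0]
```

That all-to-all comparison is what lets attention weigh distant context, and it is also why its cost grows quickly with the number of inputs.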

DLSS 4 Transformer requires more innate horsepower to run because it’s more complex. Nvidia cites twice the parameters and four times the compute when compared to CNN. Set against the backdrop of computer graphics, the new model carries noticeable image-quality benefits for DLSS Ray Reconstruction, Super Resolution, and DLAA.

Here’s an illustration of how Ray Reconstruction improves temporal stability and increases detail. I saw this demonstration firsthand at the same event. The effect is so subtle that you don’t really notice it until it’s pointed out. But once it is, you immediately see the flaws in even the well-optimised CNN model.

The absolute kicker is that Nvidia isn’t tying Transformer Model’s IQ gains to RTX 50 Series alone. The company certainly could have, incentivising upgrades to the latest and greatest hardware, yet it’ll be available to all RTX cards. I know it’ll run well on recent GeForces such as the RTX 4080 Super, but I’m genuinely curious to see how it fares on older cards such as RTX 2060. Fair play to Nvidia for making it available to millions of gamers. A rising tide lifts all boats and all that.

Of course, DLSS refinements like MFG and Transformer need to be implemented on a game-by-game basis by the developer. One simply cannot run them on every title. Key to success is how quickly Nvidia manages to get its host of technologies into all the leading games.

Nvidia says Alan Wake 2, Cyberpunk 2077, Indiana Jones and the Great Circle, and Star Wars Outlaws will be updated with native in-game support for DLSS MFG when GeForce RTX 50 Series GPUs are launched later this month. Black Myth: Wukong, Naraka: Bladepoint, Marvel Rivals, and Microsoft Flight Simulator 2024 are following suit in the near future, too.

I hope to see even more titles come into the fold as soon as possible. In fact, I want every triple-A game to have RT and DLSS 4 support from day one. It’s a tall ask, but I feel Nvidia has enough industry muscle and momentum to make it happen more quickly than AMD or Intel.

Get on the AI train or get left behind

Those of you who compare GPUs by specification tables – I must admit I used to be one of them – are likely to be left somewhat bemused by the apparent lack of silicon progress from RTX 40 Super Series to RTX 50 Series. Other than the jaw-dropping head honcho RTX 5090, I expect generational rasterisation-only gains to be in the 10-20% region. Small beer in the big scheme of things, and one of the least impressive gen-on-gen gains I can remember. That is the price you pay, literally, for escalating silicon and memory costs.

RTX 50 Series gets around this irksome hurdle by putting its full weight behind framerate- and IQ-boosting technologies like DLSS 4. Run in optimal form with the twin salvo of MFG allied to the Transformer Model, Nvidia promises many-fold fps boosts alongside image quality par excellence.

The proof of the pudding is in the eating. I’ll spend the next week knee-deep in GeForce to validate or repudiate Nvidia’s claims, though I’m mindful the whole picture won’t emerge until many more games and applications receive updates to leverage the new tech imbued in RTX 50 Series cards. In the meantime, it’s clear to me that CUDA cores, the bedrock of rasterisation, are taking a backseat for this and, arguably, future generations of GeForce RTX hardware. As uncontested market leader, Nvidia is fully on board a speeding train called DLSS. Are you?