There’s no denying the unholy power of Nvidia’s Ada Lovelace architecture. Flagship GeForce RTX 4090 is a monster GPU that speaks to the carnal cravings of every PCMR member, yet when reality kicks in, few among us can consider splashing out £2k, even on the best graphics card. There needs to be a more viable choice for us regular folk, and now there is; it’s called GeForce RTX 4070.

Nvidia GeForce RTX 4070 Founders Edition

£589 / $599

Pros

- Excellent efficiency

- Small and beautifully built

- Keeps cool and quiet

- Great for 1440p gaming

- DLSS 3 works wonders

Cons

- Only 12GB memory

- Lacks raster firepower

Arriving as the latest instalment in the growing RTX 40 Series range, RTX 4070 represents, above all else, a sense that things are returning to normal. For starters, a £589 / $599 price tag, taking into account soaring inflation, is about what we’d expect to pay for an x70 solution in 2023. Stock is intended to be readily available at launch, and in contrast to the chaotic consumer experience of recent years, RTX 4070 feels simple, safe and sedate. And that’s a good thing.

The mining boom is over, overpriced cards are sat on store shelves as opposed to being met by feverish demand, and with PC sales appearing relatively weak following unprecedented pandemic-fuelled highs, you can now casually walk into a store (they do still exist, keyboard warriors) and buy a graphics card without queueing, searching for stock, or paying vastly over the odds. Good times ahead.

Many will also appreciate the fact that RTX 4070 has the profile of a regular graphics card. Measuring 245mm x 112mm x 41mm in size and tipping the scales at 1,022g, it harks back to a time before gargantuan cards became the norm. Compared to recent monstrosities, 4070 is an itty-bitty thing; it is 22mm shorter than ageing RTX 3070 Ti, suitably rigid throughout, and petite enough not to overhang a regular ATX motherboard.

The reference Founders Edition, as you would expect, is beautifully constructed from die-cast aluminium, with fewer seams and larger axial fans said to offer a 20 per cent increase in airflow over previous generations. A single 12VHPWR connector supplies the juice – GPU and memory power delivery is managed by a 6+2-phase configuration – and if you’ve not yet upgraded your PSU, a two-way splitter is included in the box.

Putting RTX 4080 alongside RTX 4070 invokes memories of Arnold Schwarzenegger and Danny DeVito in Twins; they may belong to the same family, but you wouldn’t know it going purely by bulk or physical form. Strictly a dual-slot solution, RTX 4070 bodes well for small-form-factor enthusiasts who have patiently awaited a card that will fit.

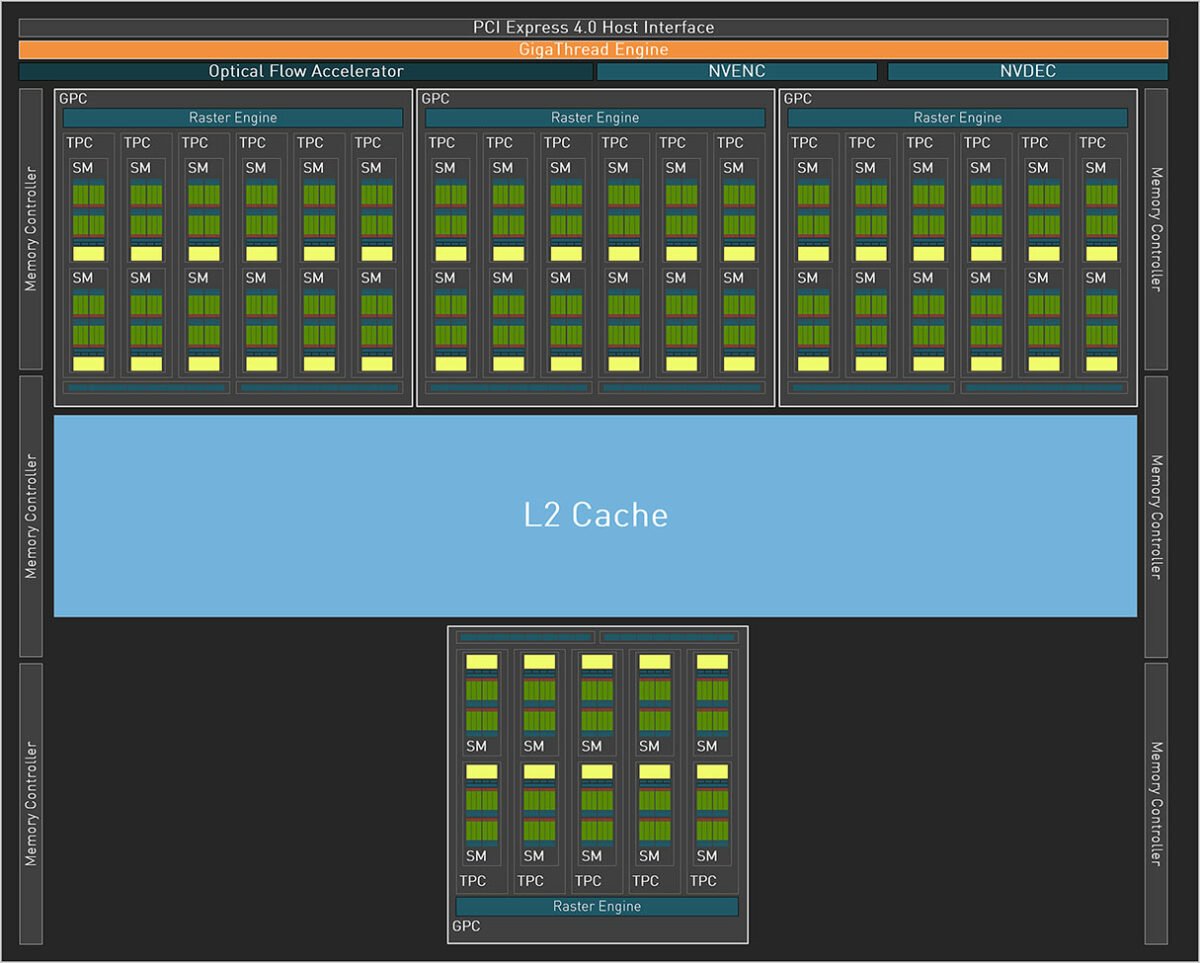

Sizing is right and pricing is acceptable, but such slimming down does bring significant curtailment of the underlying GPU. In an effort to keep good distance between the newcomer and RTX 4070 Ti, Nvidia has cleaved specifications dramatically. While the underlying AD104 die may sound familiar, it has been pared back to serve as a mid-range proposition, and to the frustration of performance purists, the cuts run deep.

RTX 40 Series: from $1,599 to $599

Wielding the axe with venom, Nvidia slashes AD104’s streaming multiprocessor (SM) count from a maximum of 60 to just 46, and that 23 per cent reduction translates to relatively lightweight numbers across the board. 5,888 CUDA cores are far removed from the 16,384 available to RTX 4090, and remember, the head honcho isn’t even a full implementation. Let’s break out the Club386 Table of Doom™ to see how RTX 40 Series has taken shape.

| GeForce RTX | 4090 | 4080 | 4070 Ti | 4070 | 3090 Ti | 3080 Ti | 3080 12GB | 3070 Ti |
|---|---|---|---|---|---|---|---|---|
| Launch date | Oct 2022 | Nov 2022 | Jan 2023 | Apr 2023 | Mar 2022 | Jun 2021 | Jan 2022 | Jun 2021 |
| Codename | AD102 | AD103 | AD104 | AD104 | GA102 | GA102 | GA102 | GA104 |
| Architecture | Ada | Ada | Ada | Ada | Ampere | Ampere | Ampere | Ampere |
| Process (nm) | 4 | 4 | 4 | 4 | 8 | 8 | 8 | 8 |
| Transistors (bn) | 76.3 | 45.9 | 35.8 | 35.8 | 28.3 | 28.3 | 28.3 | 17.4 |
| Die size (mm²) | 608.5 | 378.6 | 294.5 | 294.5 | 628.4 | 628.4 | 628.4 | 392.5 |
| SMs | 128 of 144 | 76 of 80 | 60 of 60 | 46 of 60 | 84 of 84 | 80 of 84 | 70 of 84 | 48 of 48 |
| CUDA cores | 16,384 | 9,728 | 7,680 | 5,888 | 10,752 | 10,240 | 8,960 | 6,144 |
| Boost clock (MHz) | 2,520 | 2,505 | 2,610 | 2,475 | 1,860 | 1,665 | 1,710 | 1,770 |
| Peak FP32 TFLOPS | 82.6 | 48.7 | 40.1 | 29.1 | 40.0 | 34.1 | 30.6 | 21.7 |
| RT cores | 128 | 76 | 60 | 46 | 84 | 80 | 70 | 48 |
| RT TFLOPS | 191.0 | 112.7 | 92.7 | 67.4 | 78.1 | 66.6 | 59.9 | 42.4 |
| Tensor cores | 512 | 304 | 240 | 184 | 336 | 320 | 280 | 192 |
| ROPs | 176 | 112 | 80 | 64 | 112 | 112 | 96 | 96 |
| Texture units | 512 | 304 | 240 | 184 | 336 | 320 | 280 | 192 |
| Memory size (GB) | 24 | 16 | 12 | 12 | 24 | 12 | 12 | 8 |
| Memory type | GDDR6X | GDDR6X | GDDR6X | GDDR6X | GDDR6X | GDDR6X | GDDR6X | GDDR6X |
| Memory bus (bits) | 384 | 256 | 192 | 192 | 384 | 384 | 384 | 256 |
| Memory clock (Gbps) | 21 | 22.4 | 21 | 21 | 21 | 19 | 19 | 19 |
| Bandwidth (GB/s) | 1,008 | 717 | 504 | 504 | 1,008 | 912 | 912 | 608 |
| L1 cache (MB) | 16 | 9.5 | 7.5 | 5.8 | 10.5 | 10 | 8.8 | 6 |
| L2 cache (MB) | 72 | 64 | 48 | 36 | 6 | 6 | 6 | 4 |
| Power (watts) | 450 | 320 | 285 | 200 | 450 | 350 | 350 | 290 |
| Launch MSRP ($) | 1,599 | 1,199 | 799 | 599 | 1,999 | 1,199 | 799 | 599 |

Nvidia’s desire to maintain explicit differentiation between the two RTX 4070 models is reflected in boost clock. Not only does the non-Ti part carry fewer cores, but those cores are also clocked lower, at 2,475MHz versus 2,610MHz, eliminating any risk of encroaching on Ti territory. Had this part been branded as RTX 4060 Ti, we doubt many eyebrows would be raised.

Despite hefty cutbacks, superior scaling afforded by a 4nm manufacturing process allows RTX 4070 to effectively match RTX 3080 12GB in terms of peak teraflops, and with the added smarts of a newer, leaner and more capable architecture, we imagine even RTX 3080 Ti will be in the firing line. 184 fourth-generation Tensor cores and 46 third-generation RT cores are marketed as key upgrades, as is AV1 hardware encoding, and DLSS frame generation will play a key part in realising Nvidia’s promise of over 100fps at 1440p in modern games.
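Those peak teraflops figures follow from simple arithmetic. A minimal sketch, assuming the usual convention that each CUDA core retires one fused multiply-add (two floating-point operations) per clock at the rated boost clock, reproduces the table’s FP32 column for the cards discussed here:

```python
# Theoretical peak FP32 throughput, assuming one fused multiply-add
# (2 floating-point operations) per CUDA core per clock at boost clock.

def peak_fp32_tflops(cuda_cores: int, boost_mhz: int) -> float:
    flops_per_second = cuda_cores * 2 * boost_mhz * 1_000_000
    return flops_per_second / 1e12

# CUDA core counts and boost clocks from the specification table.
cards = {
    "RTX 4070 Ti": (7680, 2610),
    "RTX 4070": (5888, 2475),
    "RTX 3080 12GB": (8960, 1710),
    "RTX 3070 Ti": (6144, 1770),
}

for name, (cores, mhz) in cards.items():
    print(f"{name:>14}: {peak_fp32_tflops(cores, mhz):.1f} TFLOPS")
```

On paper, RTX 4070’s 29.1 TFLOPS sits within about 5 per cent of RTX 3080 12GB’s 30.6 TFLOPS. Games rarely reach these theoretical peaks, so treat them as a ceiling rather than a prediction.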

RTX 4070 retains the 504GB/s memory bandwidth of RTX 4070 Ti, courtesy of 12GB of GDDR6X at 21Gbps on a 192-bit bus. That is to be expected, but it does raise question marks over long-term viability. Nvidia will argue a significant uptick in L2 cache – 36MB for RTX 4070 vs. 4MB for RTX 3070 Ti – reduces the need to spool out to memory on a frequent basis, yet given how poorly optimised PC games can be, greater headroom would afford extra peace of mind. It didn’t take long for 8GB on RTX 3070 Ti or even 10GB on the original RTX 3080 to feel insufficient, and we’d much prefer 16GB as the minimum for this generation of RTX x70 Series card.
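The bandwidth figures in the table come from bus width and per-pin data rate alone. A short sketch of that calculation, assuming the standard definition (bits moved per second across every pin, divided by eight to get bytes):

```python
def bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: each pin on the bus moves data_rate_gbps
    gigabits per second; divide by 8 to convert bits to bytes."""
    return bus_width_bits * data_rate_gbps / 8

print(bandwidth_gbs(192, 21))  # RTX 4070 / RTX 4070 Ti: 504.0
print(bandwidth_gbs(256, 19))  # RTX 3070 Ti: 608.0
```

The arithmetic makes Nvidia’s reliance on cache plain: RTX 4070 has less raw bandwidth than the RTX 3070 Ti that launched at the same $599, and the 36MB L2 is what has to make up the difference.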

Perusing overall specifications suggests RTX 4070 is unlikely to dazzle in terms of pure rasterisation. Rather, it is in the efficiency stakes the GPU is destined to shine. Nvidia attaches a TGP (total graphics power) of 200W, but a 30 per cent decrease over RTX 3070 Ti tells only part of the story. In instances where the CPU becomes a bottleneck, or GPU workload is light, average gaming power draw is said to drop to 186W. Ada’s efficiency has already proven to be class-leading, and as we’ll demonstrate in our testing, RTX 4070’s real-world effectiveness is rather impressive indeed.

There isn’t a great deal else to dissect – specifications are in line with our expectations of what is a pared-back RTX 4070 Ti – yet the £589 / $599 price tag will be debated until the cows come home. Nvidia will make the case for RTX 3080-like performance for less. Enthusiasts will look to a 294.5mm² die and argue this is an x60-class product masquerading as a premium part. There’s merit to both arguments, but Nvidia’s extraordinary valuation is testament to the company’s ability to convince the market.

Whether we like it or not, the correct price is the one consumers are deemed willing to pay. The nickname Team Green has multiple connotations, and though there are occasional missteps – ill-fated RTX 4080 12GB at $899 remains fresh in our memories – $599 is the figure at which Nvidia expects RTX 4070 to sell in good numbers. Do remember MSRP merely represents a starting point. While the Founders Edition hogs the limelight today, dearer partner cards will arrive tomorrow alongside less attractive fees. You just know there’ll be a Dog Tricks RGB Extreme Edition that’s no faster, much larger, and priced irrationally at $699+.

We’re just about ready to share all-important benchmarks, but before we do, a recap on all that’s new with Ada Lovelace architecture.

Ada Optimisations

While a shift to a smaller node affords more transistor firepower, such a move typically precludes sweeping changes to architecture. Optimisations and resourcefulness are the order of the day, and the huge computational demands of raytracing are such that raw horsepower derived from a near-3x increase in transistor budget isn’t enough; something else is needed, and Ada Lovelace brings a few neat tricks to the table.

Nvidia often refers to raytracing as a relatively new technology, stressing that good ol’ fashioned rasterisation has been through wave after wave of optimisation, and such refinement is actively being engineered for RT and Tensor cores. There’s plenty of opportunity where low-hanging fruit is yet to be picked.

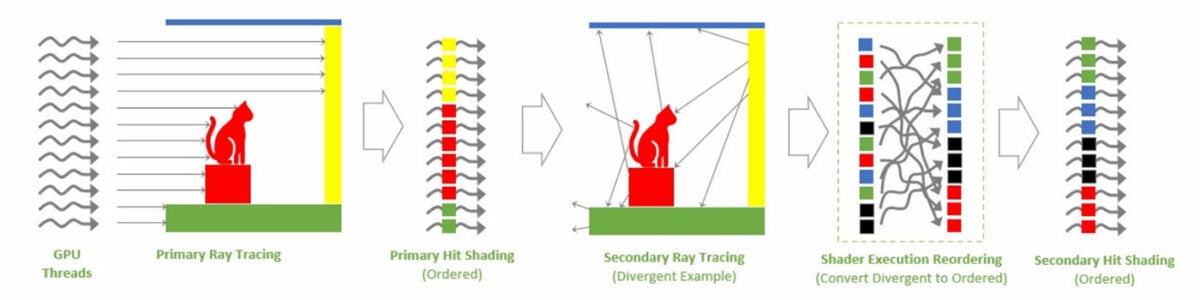

Shader Execution Reordering

Shaders have been running efficiently for years, whereby one instruction is executed in parallel across multiple threads. You may know it as SIMT.

Raytracing, however, throws a spanner in those smooth works, as while pixels in a rasterised triangle lend themselves to running concurrently, keeping all lanes occupied, secondary rays are divergent by nature and the scattergun approach of hitting different areas of a scene leads to massive inefficiency through idle lanes.

Ada’s fix, dubbed Shader Execution Reordering (SER), is a new stage in the raytracing pipeline tasked with scanning individual rays on the fly and grouping them together. The result, going by Nvidia’s internal numbers, is up to a 2x improvement in raytracing performance in scenes with high levels of divergence.
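A toy model helps show why divergence hurts and why regrouping helps. The sketch below assumes a 32-lane warp in which each distinct hit shader runs as its own serialised pass while lanes needing other shaders sit idle, and it uses a plain sort by shader ID as a conceptual stand-in for SER; it is not Nvidia’s actual reordering algorithm, and the scene is hypothetical.

```python
import random

WARP_SIZE = 32  # threads (lanes) per warp on Nvidia GPUs

def warp_utilisation(shader_ids: list[int]) -> float:
    """Share of lane-slots doing useful work when rays are packed into warps
    in the given order. Toy model: each distinct hit shader in a warp runs as
    its own serialised pass, with lanes needing other shaders masked off."""
    passes = 0
    for start in range(0, len(shader_ids), WARP_SIZE):
        warp = shader_ids[start:start + WARP_SIZE]
        passes += len(set(warp))
    return len(shader_ids) / (passes * WARP_SIZE)

def reorder(shader_ids: list[int]) -> list[int]:
    """Conceptual stand-in for SER: regroup rays by the shader they need so
    coherent work lands in the same warps. Not Nvidia's actual algorithm."""
    return sorted(shader_ids)

random.seed(386)
# Hypothetical scene: 4,096 divergent secondary rays hitting 16 different materials.
rays = [random.randrange(16) for _ in range(4096)]

print(f"before reordering: {warp_utilisation(rays):.0%} of lanes busy")
print(f"after reordering:  {warp_utilisation(reorder(rays)):.0%} of lanes busy")
```

The model ignores ray traversal, memory access and the cost of the reordering itself, which is why real-world gains, such as Nvidia’s quoted 2x figure, are far smaller than the lane-occupancy jump it prints.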

Nvidia portentously claims SER is “as big an innovation as out-of-order execution was for CPUs.” It is a bold statement, and there is a proviso: Shader Execution Reordering is an extension of Microsoft’s DXR APIs, meaning it is up to developers to implement and optimise SER in games.

There’s no harm in having the tools, mind, and Nvidia is quickly discovering that what works for rasterisation can evidently be made to work for RT.

Upgraded RT Cores

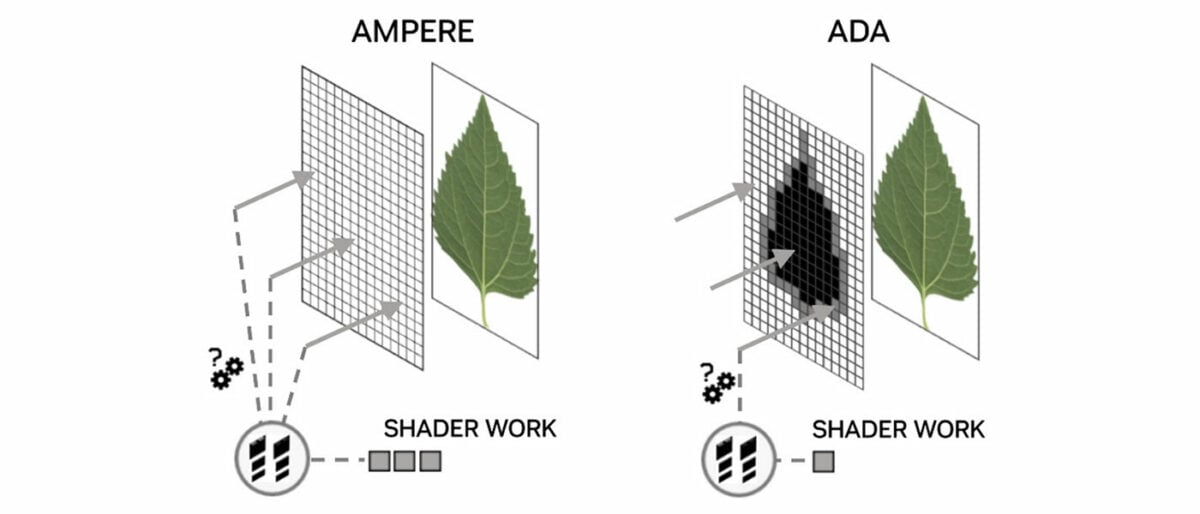

In the rasterisation world, geometry bottlenecks are alleviated through mesh shaders. In a similar vein, displaced micro-meshes aim to echo such improvements in raytracing.

With Ampere, the Bounding Volume Hierarchy (BVH) was forced to contain every single triangle in the scene, ready for the RT core to sample. Ada, in contrast, can evaluate meshes within the RT core, identifying a base triangle prior to tessellation in an effort to drastically reduce storage requirements.

A smaller, compressed BVH has the potential to enable greater detail in raytraced scenes with less impact on memory. Having to insert only the base triangles, BVH build times are improved by an order of magnitude and data sizes shrink significantly, helping reduce CPU overhead.
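The saving comes from what the BVH has to reference. A minimal sketch, assuming uniform subdivision in which each level splits every triangle into four, and ignoring the storage needed for displacement data itself; the asset size is hypothetical:

```python
def micro_triangles(subdivision_level: int) -> int:
    """Micro-triangles produced from one base triangle: each subdivision
    level splits every triangle into four smaller ones."""
    return 4 ** subdivision_level

def bvh_triangle_count(base_triangles: int, subdivision_level: int,
                       rt_core_expands_micro_meshes: bool) -> int:
    """Triangles the BVH must hold. A conventional BVH needs every micro-triangle;
    if the RT core expands micro-meshes itself, only base triangles go in."""
    if rt_core_expands_micro_meshes:
        return base_triangles
    return base_triangles * micro_triangles(subdivision_level)

# Hypothetical asset: 10,000 base triangles, subdivided four levels deep.
print(bvh_triangle_count(10_000, 4, rt_core_expands_micro_meshes=False))  # 2560000
print(bvh_triangle_count(10_000, 4, rt_core_expands_micro_meshes=True))   # 10000
```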

The sheer complexity of raytracing is such that eliminating unnecessary shader work has never been more important. To that end, an Opacity Micromap Engine has also been added to Ada’s RT core to reduce the amount of information going back and forth to shaders.

In the common leaf example, developers place a foliage texture on a simple rectangle and use the texture’s transparency (alpha) to determine which parts of that rectangle are actually leaf. It is an efficient way to construct entire trees, yet Ampere’s RT core could not interpret that information itself, so rays were passed back to the shader to determine which areas are opaque or transparent. Ada’s Opacity Micromap Engine sorts regions of each polygon into opaque, transparent or unknown, identifying the opaque and transparent ones without invoking any shader code, resulting in 2x faster alpha traversal performance in certain applications.
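The idea can be sketched as a three-way classification. This is a conceptual model, assuming a hypothetical 0.5 alpha-test threshold and representing each ray hit by the alpha samples of the micro-triangle it lands on; in practice the classification is baked into the micromap ahead of time rather than computed per hit, but the sketch does it inline for brevity.

```python
ALPHA_CUTOFF = 0.5  # hypothetical alpha-test threshold

def classify(alpha_samples: list[float]) -> str:
    """Classify one micro-triangle from the texture alpha values it covers."""
    if all(a >= ALPHA_CUTOFF for a in alpha_samples):
        return "opaque"       # hit can be accepted without running a shader
    if all(a < ALPHA_CUTOFF for a in alpha_samples):
        return "transparent"  # ray can pass straight through without a shader
    return "unknown"          # straddles the leaf's edge: needs the any-hit shader

def shader_calls(hits: list[list[float]], use_micromap: bool) -> int:
    """Count shader invocations for a list of ray hits, each described by the
    alpha samples of the micro-triangle it landed on."""
    if not use_micromap:
        return len(hits)  # without a micromap, every hit goes back to a shader
    return sum(1 for alphas in hits if classify(alphas) == "unknown")

# Hypothetical rays striking a leaf rectangle.
hits = [
    [1.0, 0.9, 1.0],   # solid leaf interior
    [0.0, 0.1, 0.0],   # empty corner of the rectangle
    [0.0, 0.6, 1.0],   # leaf edge
    [0.8, 1.0, 0.95],  # solid leaf interior
]
print(shader_calls(hits, use_micromap=False), shader_calls(hits, use_micromap=True))  # 4 1
```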

These two new hardware units make the third-generation RT core more capable than ever before – TFLOPS per RT core has risen by ~65 per cent between generations – yet all this isn’t enough to back up Nvidia’s claims of Ada Lovelace delivering up to 4x the performance of the previous generation. For that, Team Green continues to rely on AI.

DLSS 3

Since 2019, Deep Learning Super Sampling has played a vital role in GeForce GPU development. Nvidia’s commitment to the tech is best expressed by Bryan Catanzaro, VP of applied deep learning research, who states in no uncertain terms that “the era of brute-force graphics rendering is over.”

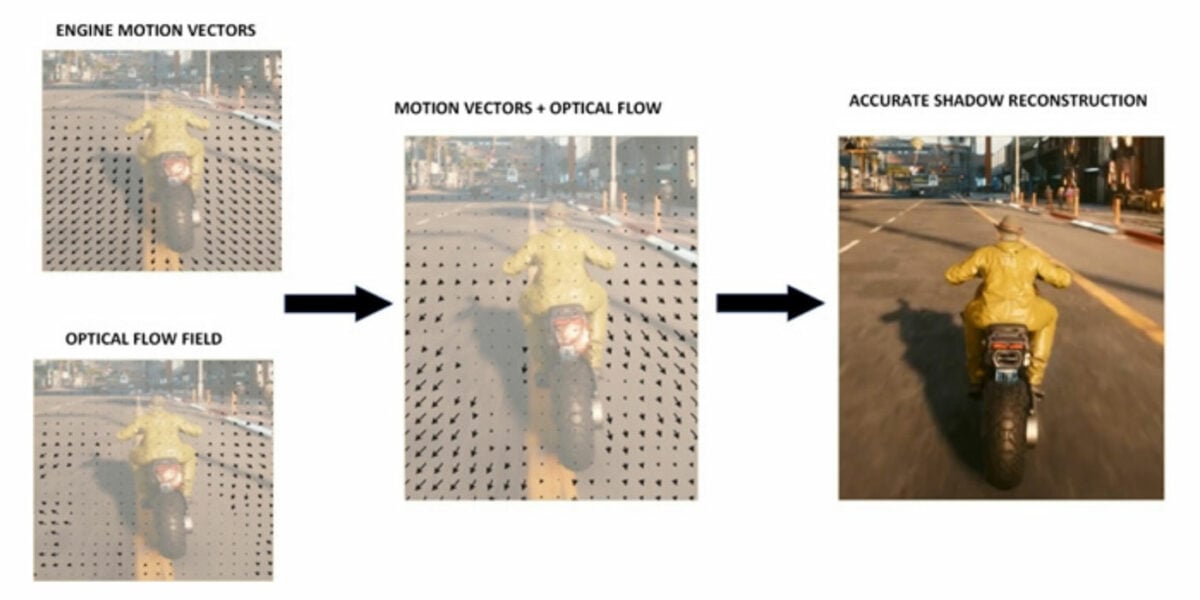

Third-generation DLSS, deemed a “total revolution in neural graphics,” expands upon DLSS Super Resolution’s AI-trained upscaling by employing optical flow estimation to generate entire frames. Through a combination of DLSS Super Resolution and DLSS Frame Generation, Nvidia reckons DLSS 3 can now reconstruct seven-eighths of a game’s total displayed pixels, to dramatically increase performance and smoothness.
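Nvidia’s seven-eighths figure can be reproduced with a line of arithmetic. The sketch assumes Super Resolution in Performance mode (half the output resolution on each axis, so a quarter of the pixels) and Frame Generation synthesising every other displayed frame:

```python
from fractions import Fraction

def rendered_fraction(axis_scale: Fraction, frame_generation: bool) -> Fraction:
    """Share of displayed pixels that are actually rendered by the shader pipeline."""
    spatial = axis_scale ** 2  # upscaling: the scale applies to width AND height
    temporal = Fraction(1, 2) if frame_generation else Fraction(1)  # every other frame generated
    return spatial * temporal

rendered = rendered_fraction(Fraction(1, 2), frame_generation=True)  # Performance mode + FG
print(f"rendered: {rendered}, AI-reconstructed: {1 - rendered}")
# rendered: 1/8, AI-reconstructed: 7/8
```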

Generating so much on-screen content without invoking the shader pipeline would have been unthinkable just a few years ago. It is a remarkable change of direction, but those magic extra frames aren’t conjured from thin air. DLSS 3 takes four inputs – two sequential in-game frames, an optical flow field and engine data such as motion vectors and depth buffers – to create and insert synthesised frames between working frames.
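The interleaving can be pictured as a simple timeline. This is a simplified model, not Nvidia’s pipeline: it assumes one generated frame is placed at the temporal midpoint between each pair of rendered frames, and uses hypothetical 60fps render timings.

```python
def display_order(rendered_ms: list[float]) -> list[tuple[str, float]]:
    """Interleave one generated frame between each pair of rendered frames.
    Simplified model: the in-between frame needs BOTH neighbours as input,
    so rendered frame N+1 must exist before the frame preceding it is shown."""
    shown = []
    for current, following in zip(rendered_ms, rendered_ms[1:]):
        shown.append(("rendered", current))
        shown.append(("generated", (current + following) / 2))  # temporal midpoint
    shown.append(("rendered", rendered_ms[-1]))
    return shown

# Hypothetical: five frames rendered at 60fps, one every ~16.7ms.
frame_times = [i * 1000 / 60 for i in range(5)]
for kind, t in display_order(frame_times):
    print(f"{t:6.1f}ms  {kind}")
```

Displayed frames roughly double, but the dependency noted in the comment matters: to show a generated frame, the next rendered frame has to be held back, which is the root of the latency concern Nvidia addresses with Reflex.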

In order to capture the required information, Ada’s Optical Flow Accelerator is capable of up to 300 TeraOPS (TOPS) of optical-flow work, a 2x speed increase over Ampere that is viewed as vital in generating accurate frames without artifacts.

The real-world benefit of AI-generated frames is most keenly felt in games that are CPU bound, where DLSS Super Resolution can typically do little to help. Nvidia’s preferred example is Microsoft Flight Simulator, whose vast draw distances inevitably force a CPU bottleneck. Internal data suggests DLSS 3 Frame Generation can boost Flight Sim performance by as much as 2x.

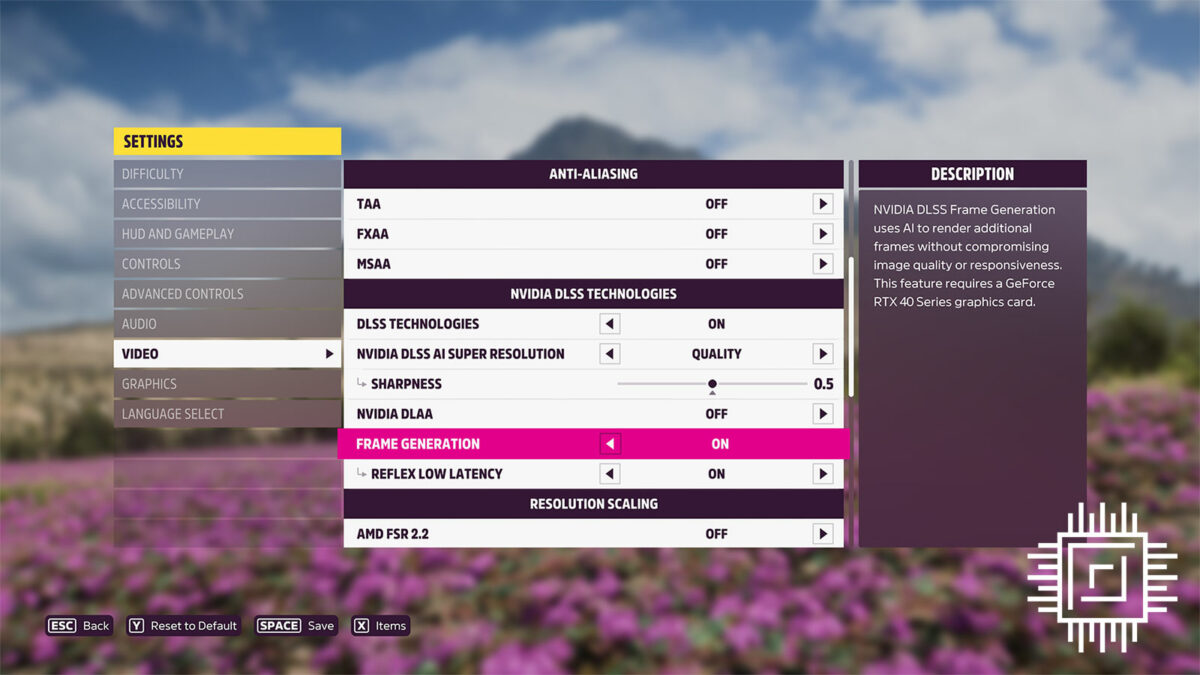

Do note, also, that Frame Generation and Super Resolution can be implemented independently by developers. In an ideal world, gamers will have the choice of turning the former on/off, while being able to adjust the latter via a choice of quality settings.

More demanding AI workloads naturally warrant faster Tensor cores, and Ada obliges by borrowing the FP8 Transformer Engine from HPC-optimised Hopper. Peak FP16 Tensor performance already doubles from 320 teraflops on Ampere to 661 on Ada, and with added support for FP8, RTX 4090 can deliver a theoretical 1.3 petaflops of Tensor processing.

Plenty of bombast, yet won’t such processing result in an unwanted hike in latency? The concern is genuine, which is why Nvidia has made Reflex a mandatory requirement for DLSS 3 implementation.

Designed to bypass the traditional render queue, Reflex synchronises CPU and GPU workloads for optimal responsiveness and up to a 2x reduction in latency. Ada optimisations and in particular Reflex are key in keeping DLSS 3 latency down to DLSS 2 levels, but as with so much that is DLSS related, success is predicated on the assumption developers will jump through the relevant hoops. In this case, Reflex markers must be added to code, allowing the game engine to feed back the data required to coordinate both CPU and GPU.

Given the often-sketchy state of PC game development, gamers are right to be cautious when the onus is placed in the hands of devs, and there is another caveat in that DLSS tech is becoming increasingly fragmented between generations.

DLSS 3 now represents a superset of three core technologies: Frame Generation (exclusive to RTX 40 Series), Super Resolution (RTX 20/30/40 Series), and Reflex (any GeForce GPU since the 900 Series). Nvidia has no immediate plans to backport Frame Generation to slower Ampere cards.

8th Generation NVENC

Last but not least, Ada Lovelace is wise not to overlook the soaring popularity of game streaming, both during and after the pandemic.

Building upon Ampere’s support for AV1 decoding, Ada adds AV1 hardware encoding, which Nvidia rates as 40 per cent more efficient than H.264. This, the company says, allows streamers to bump their stream resolution to 1440p while maintaining the same bitrate.

AV1 support also bodes well for professional apps – DaVinci Resolve is among the first to announce compatibility – and Nvidia extends this potential on high-end RTX 40 Series GPUs by including up to two 8th Gen NVENC encoders (enabling 8K60 capture and 2x quicker exports) as well as a 5th Gen NVDEC decoder as standard.

Performance

Our 5950X Test PCs

Club386 carefully chooses each component in a test bench to best suit the review at hand. When you view our benchmarks, you’re not just getting an opinion, but the results of rigorous testing carried out using hardware we trust.

Club386 test platform components:

CPU: AMD Ryzen 9 5950X

Motherboard: Asus ROG X570 Crosshair VIII Formula

Cooler: Corsair Hydro Series H150i Pro RGB

Memory: 32GB G.Skill Trident Z Neo DDR4

Storage: 2TB Corsair MP600 SSD

PSU: be quiet! Straight Power 11 Platinum 1300W

Chassis: Fractal Design Define 7 Clear TG

Our trusty test platforms have been working overtime these past few months, and though the PCIe slot is starting to look the worse for wear, the twin AM4 rigs haven’t skipped a beat.

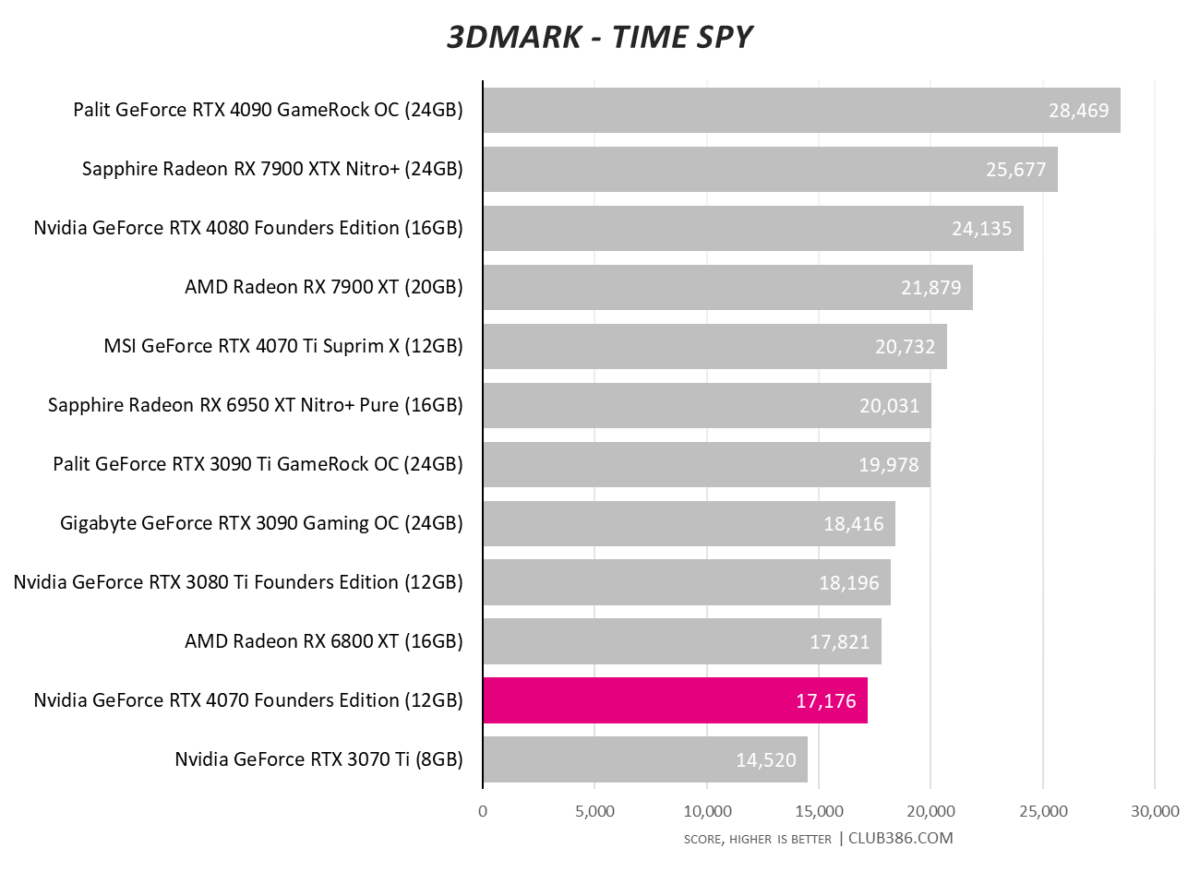

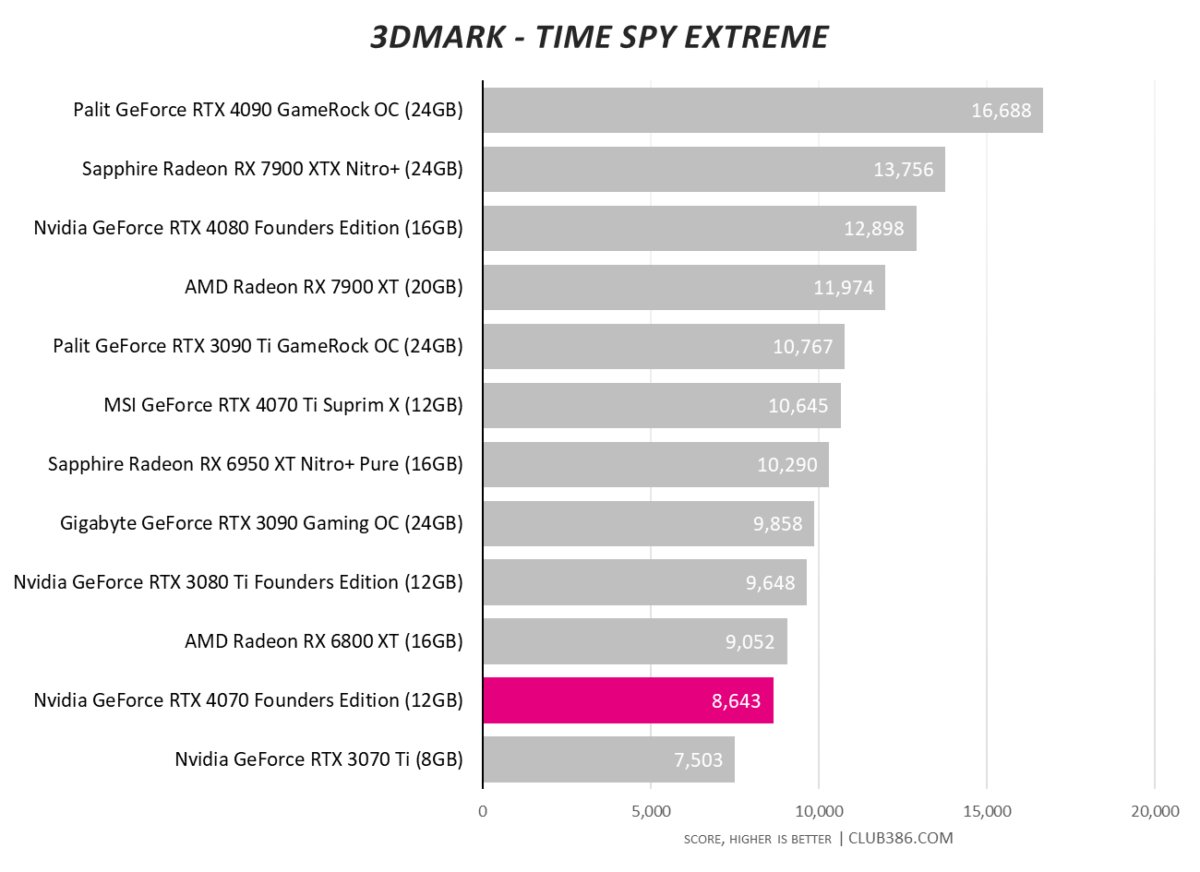

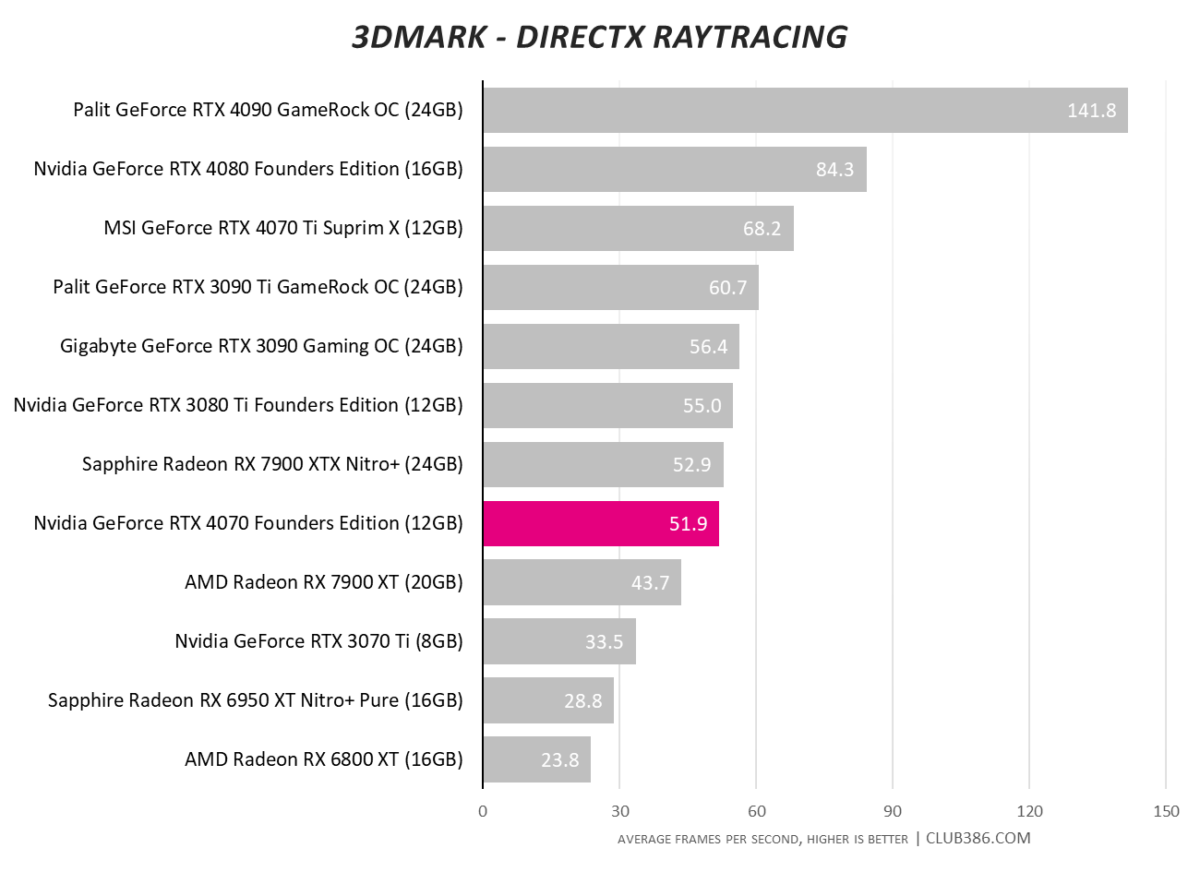

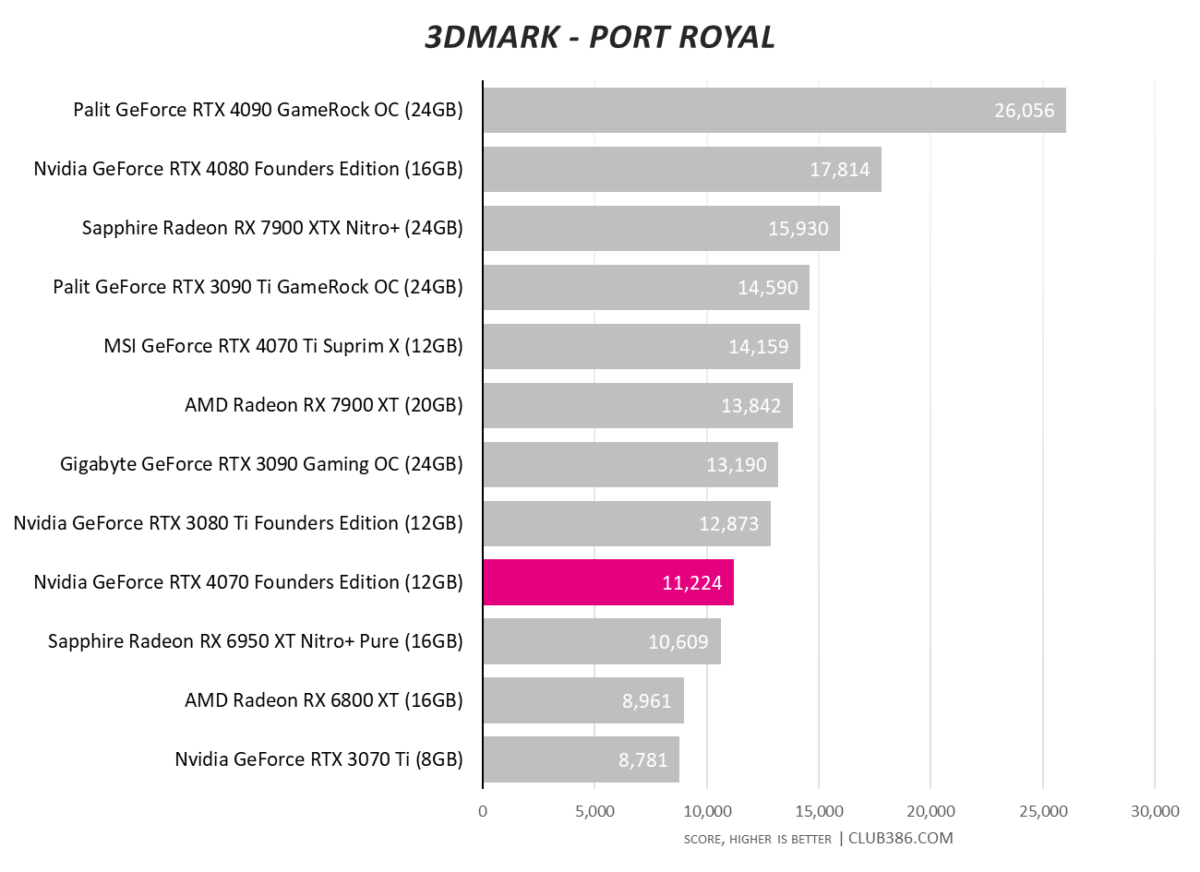

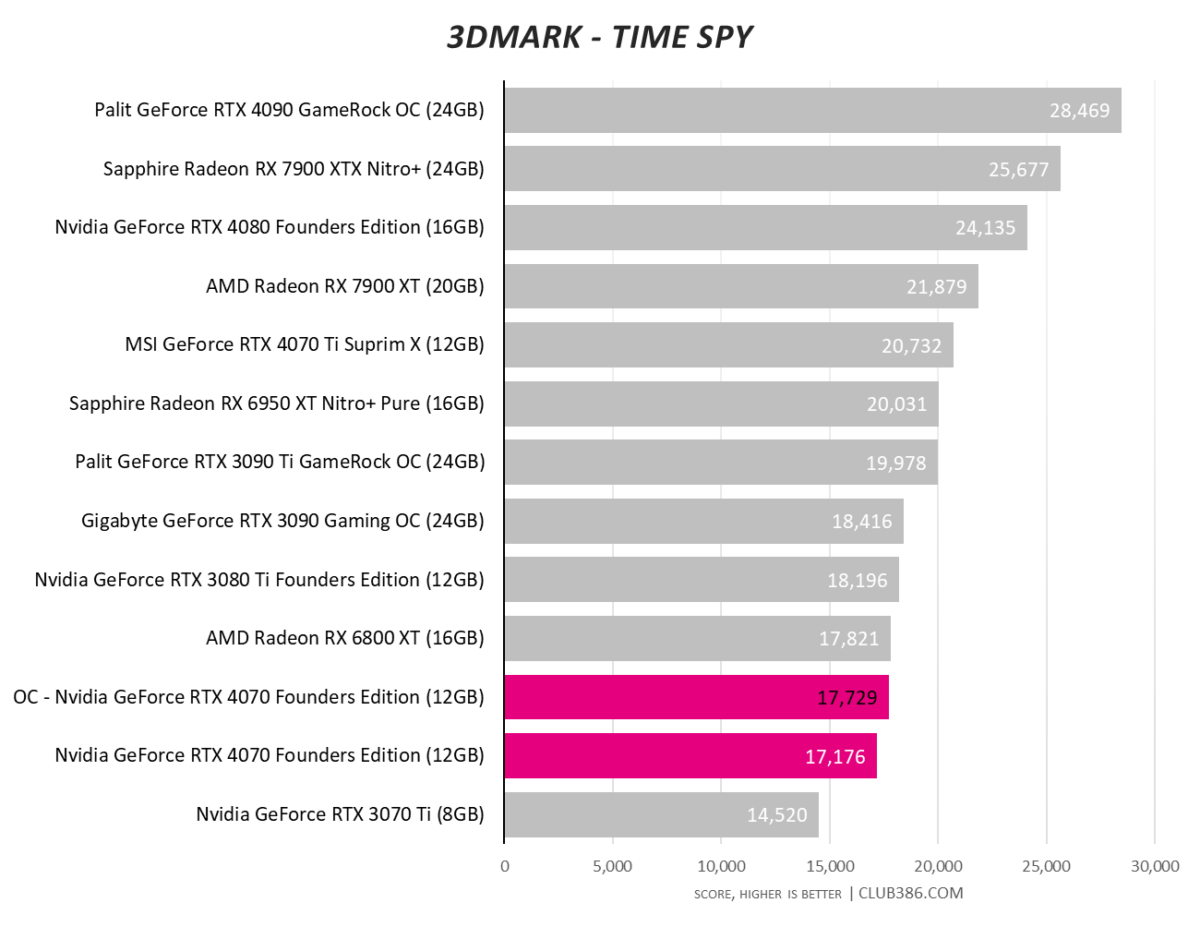

Cut core count by a quarter and performance drops accordingly. RTX 4070 is nearly 20 per cent slower than RTX 4070 Ti in the synthetic 3DMark tests.

The numbers represent a modest upgrade over RTX 3070 Ti, yet the GeForce falls short of Radeon RX 6800 XT, a GPU that dates right the way back to 2020.

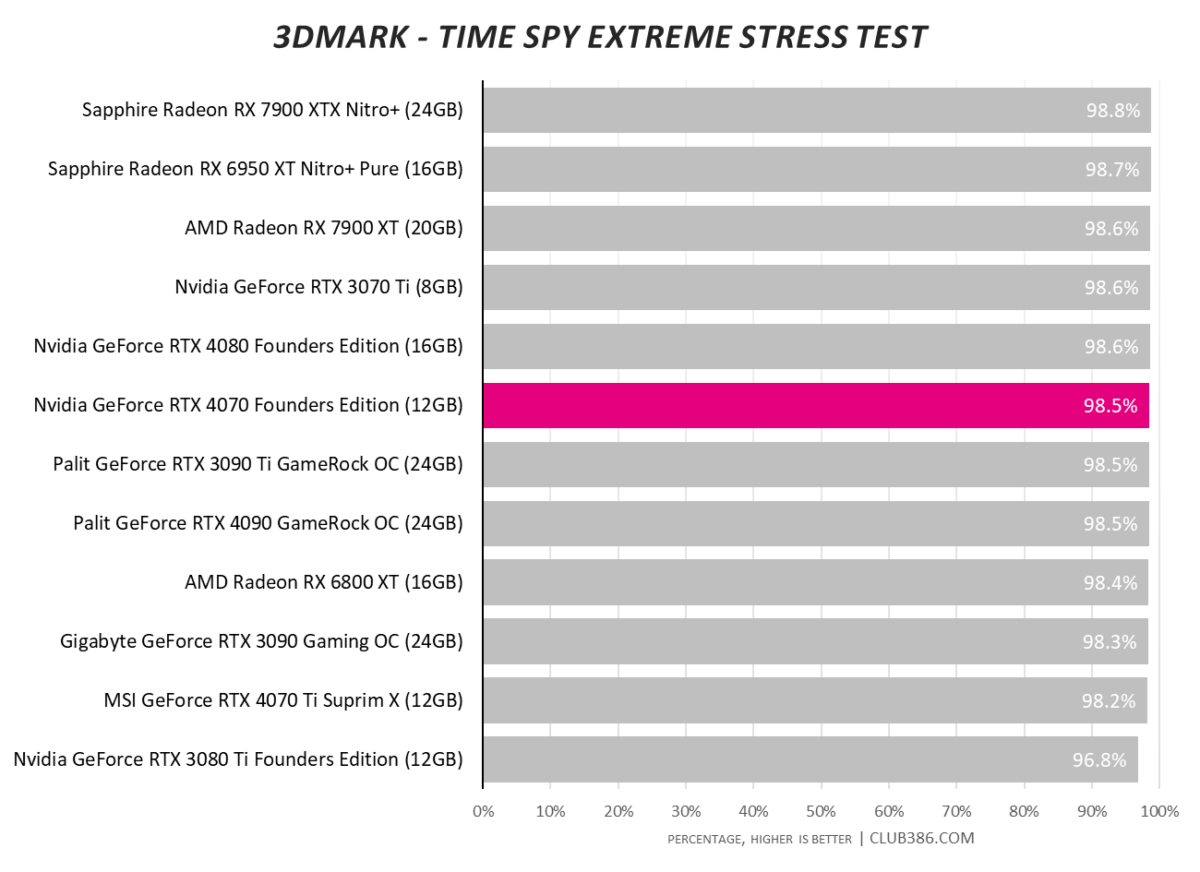

Every current-gen card surpasses the 97 per cent pass mark in 3DMark’s Stress Test with consummate ease. The trim RTX 4070 Founders Edition has no issue maintaining stable frequencies during lengthy gaming sessions.
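For reference, 3DMark’s Stress Test runs the same benchmark loop repeatedly and reports frame rate stability as the lowest loop score expressed as a percentage of the highest; 97 per cent or better is a pass. A minimal sketch of that calculation, using hypothetical loop scores:

```python
def frame_rate_stability(loop_scores: list[float]) -> float:
    """Worst loop score as a percentage of the best loop score."""
    return min(loop_scores) / max(loop_scores) * 100

def stress_test_passes(loop_scores: list[float], threshold: float = 97.0) -> bool:
    return frame_rate_stability(loop_scores) >= threshold

# Hypothetical loop results for a card that holds its clocks well.
steady = [61.2, 60.9, 60.8, 60.7, 60.7]
print(f"{frame_rate_stability(steady):.1f}% -> pass: {stress_test_passes(steady)}")
```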

Modern graphics cards are rarely measured through pure rasterisation alone. GeForce RTX 4070 delivers more than double the performance of Radeon RX 6800 XT in raytracing, and is snapping at the heels of RTX 3080 Ti.

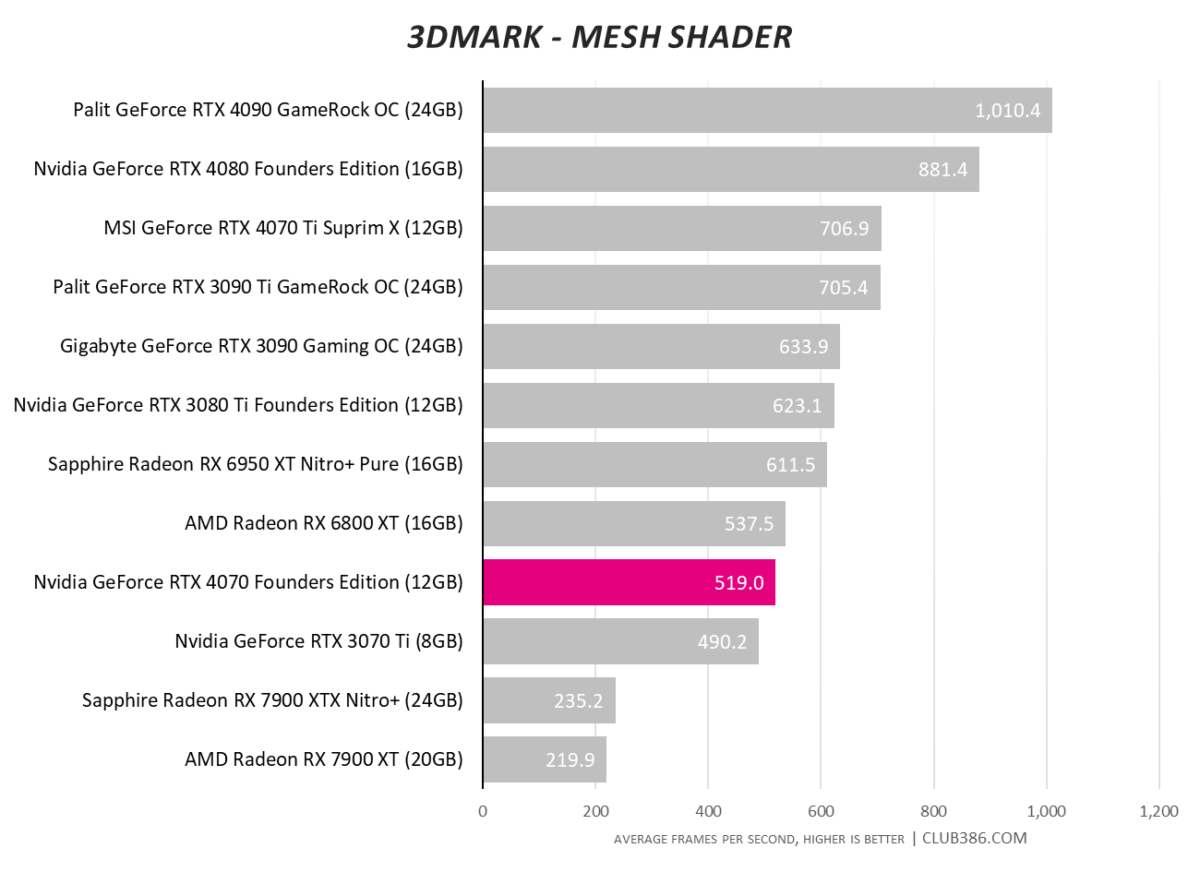

Excuse the low scores for RDNA 3 in the Mesh Shader test; this appears to have been fixed with a driver update and we’ll re-test said cards asap.

Assassin’s Creed Valhalla

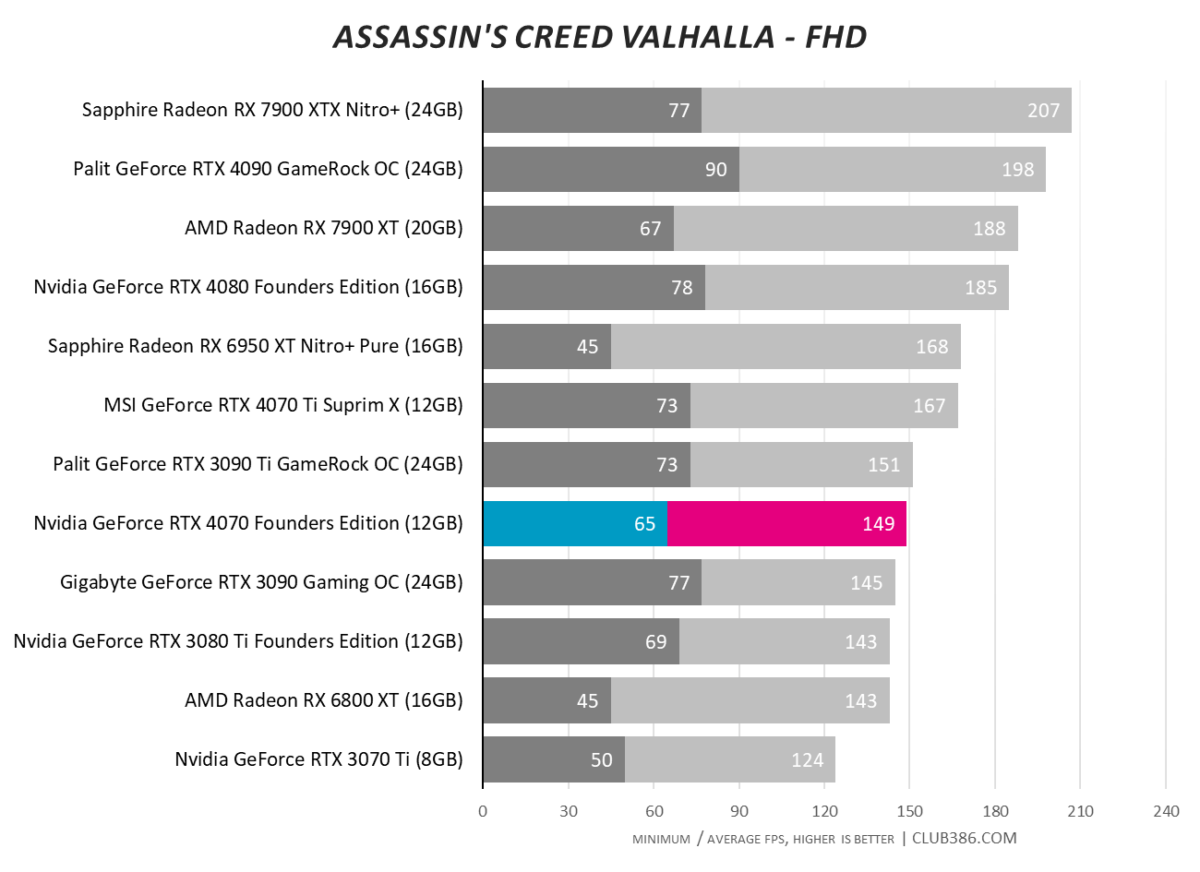

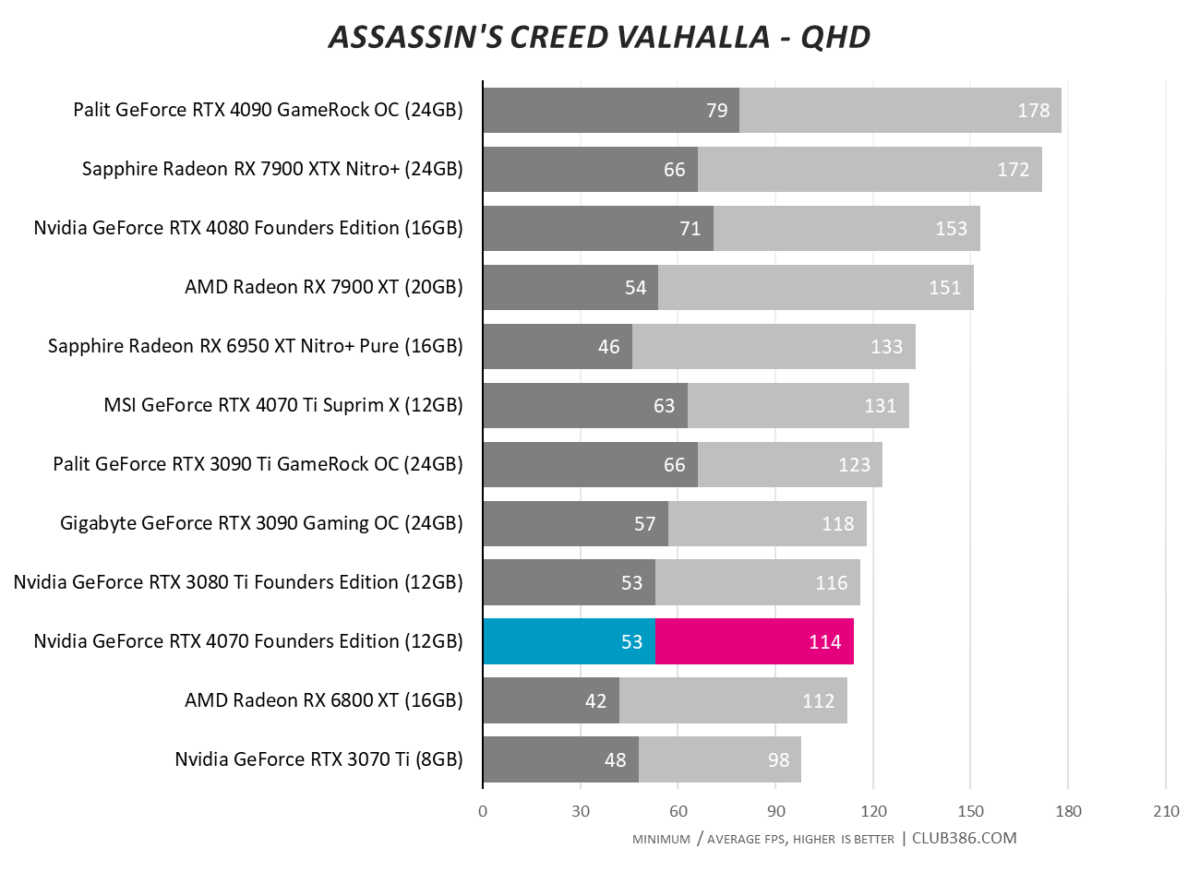

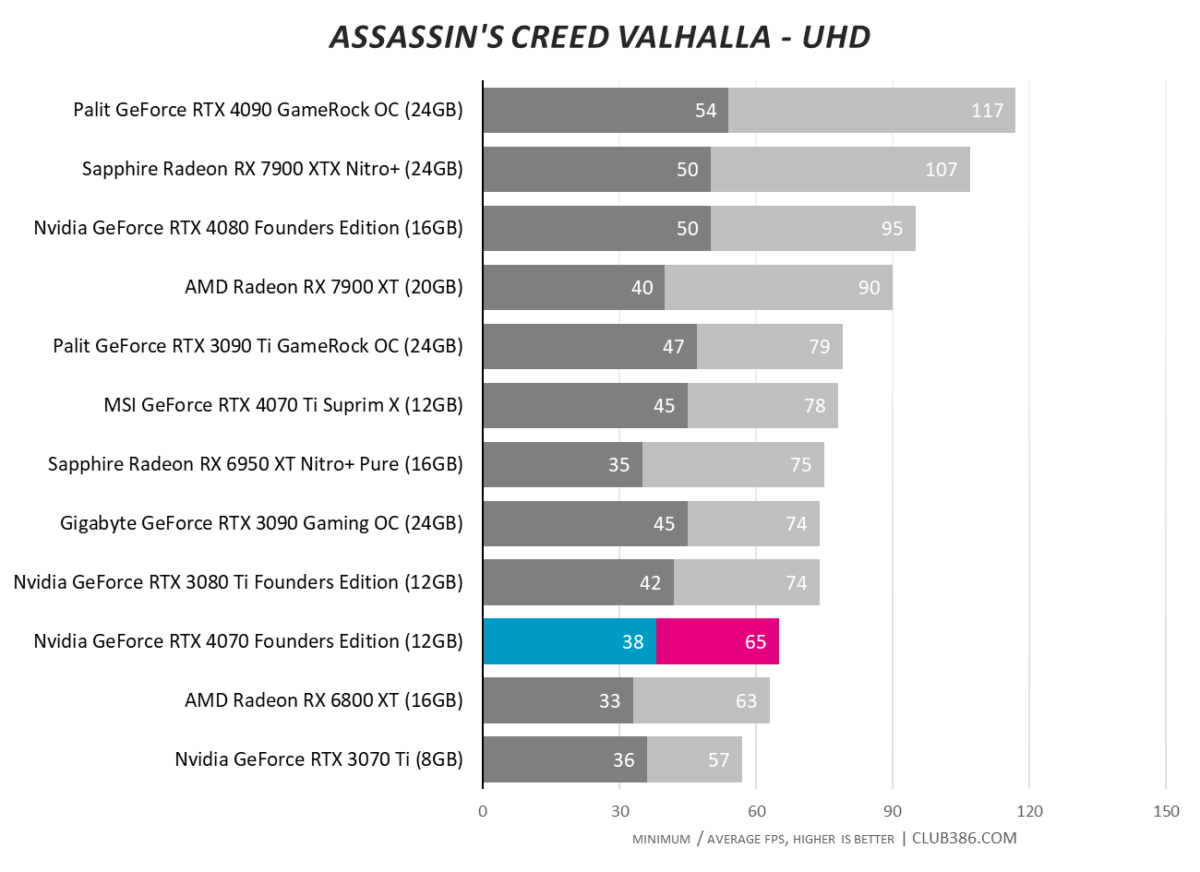

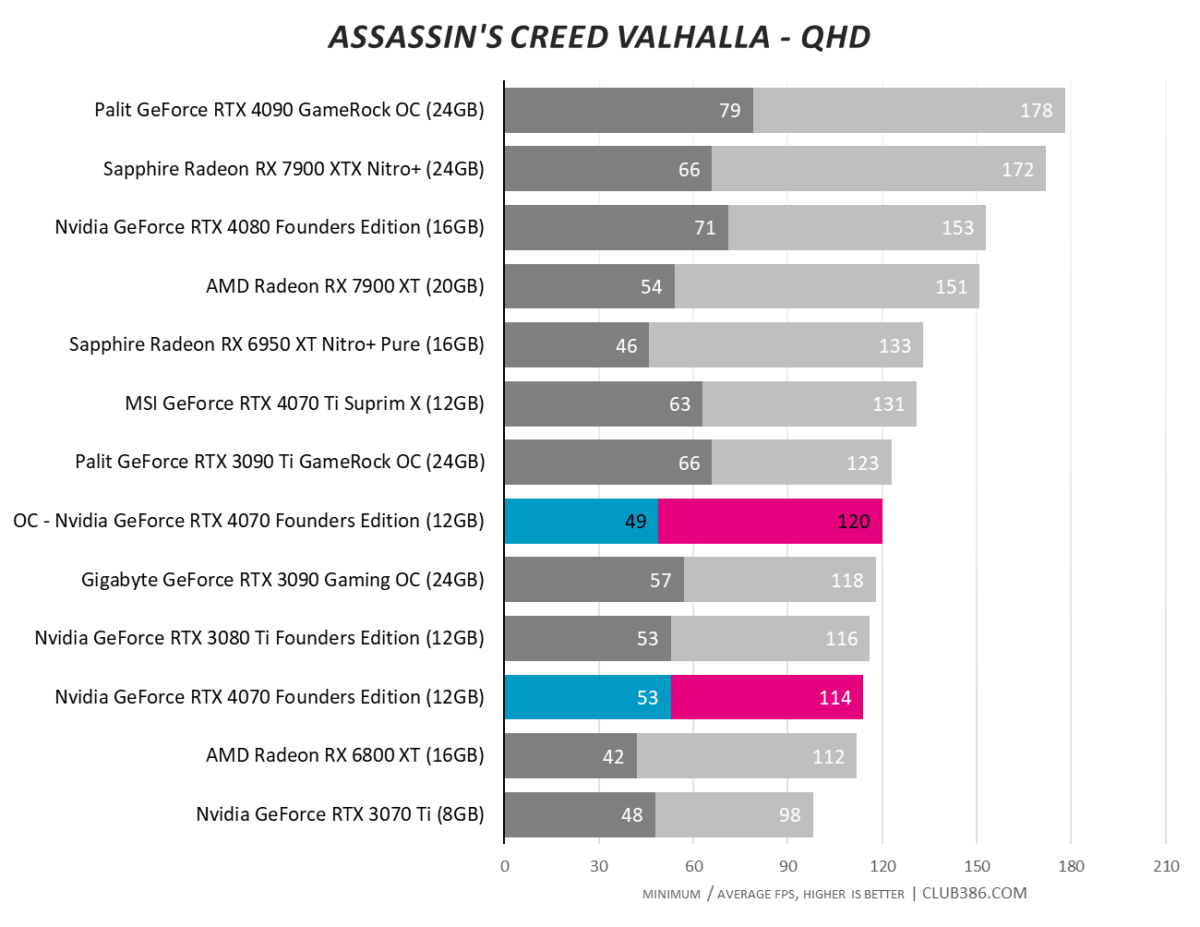

In excess of 100fps at 1440p is Nvidia’s target. GeForce RTX 4070 manages to deliver exactly that in Assassin’s Creed Valhalla, but then so too does Radeon RX 6800 XT. Hardly riveting stuff.

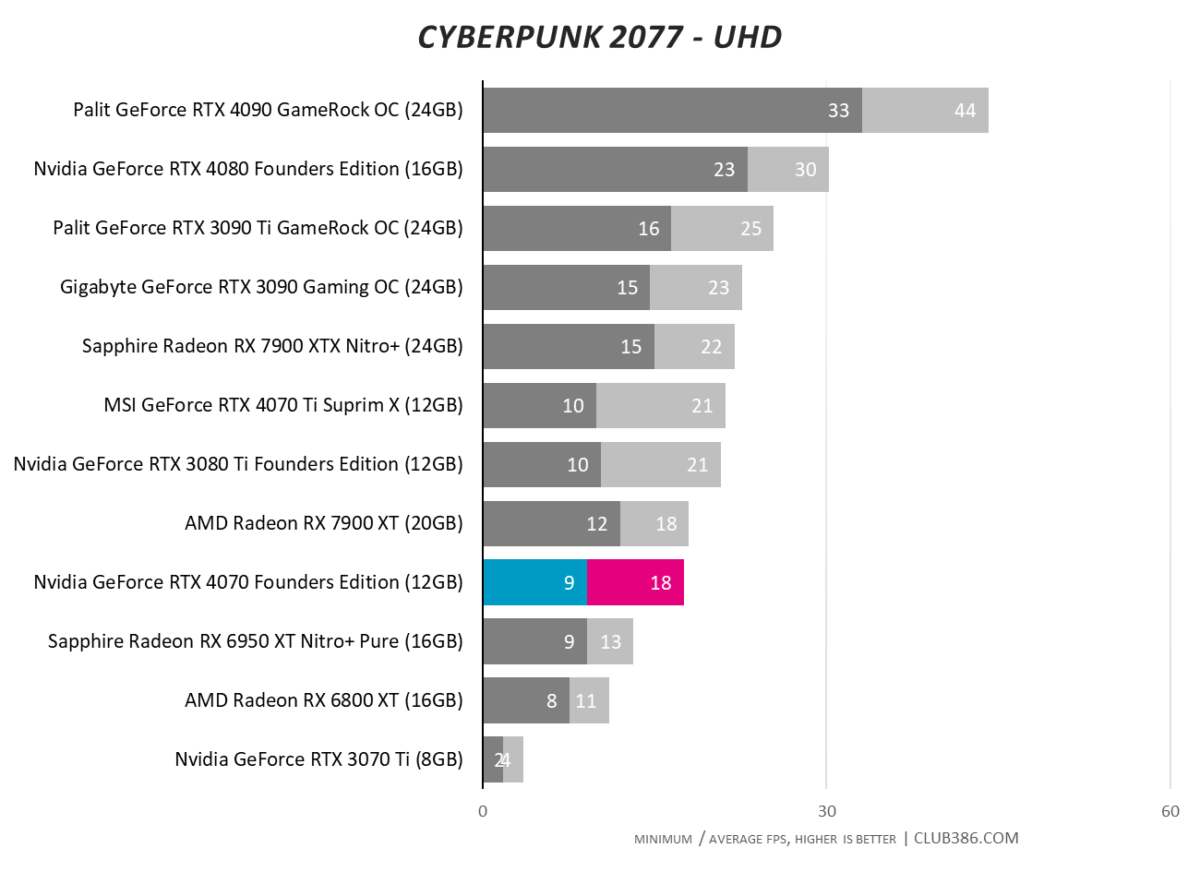

Cyberpunk 2077

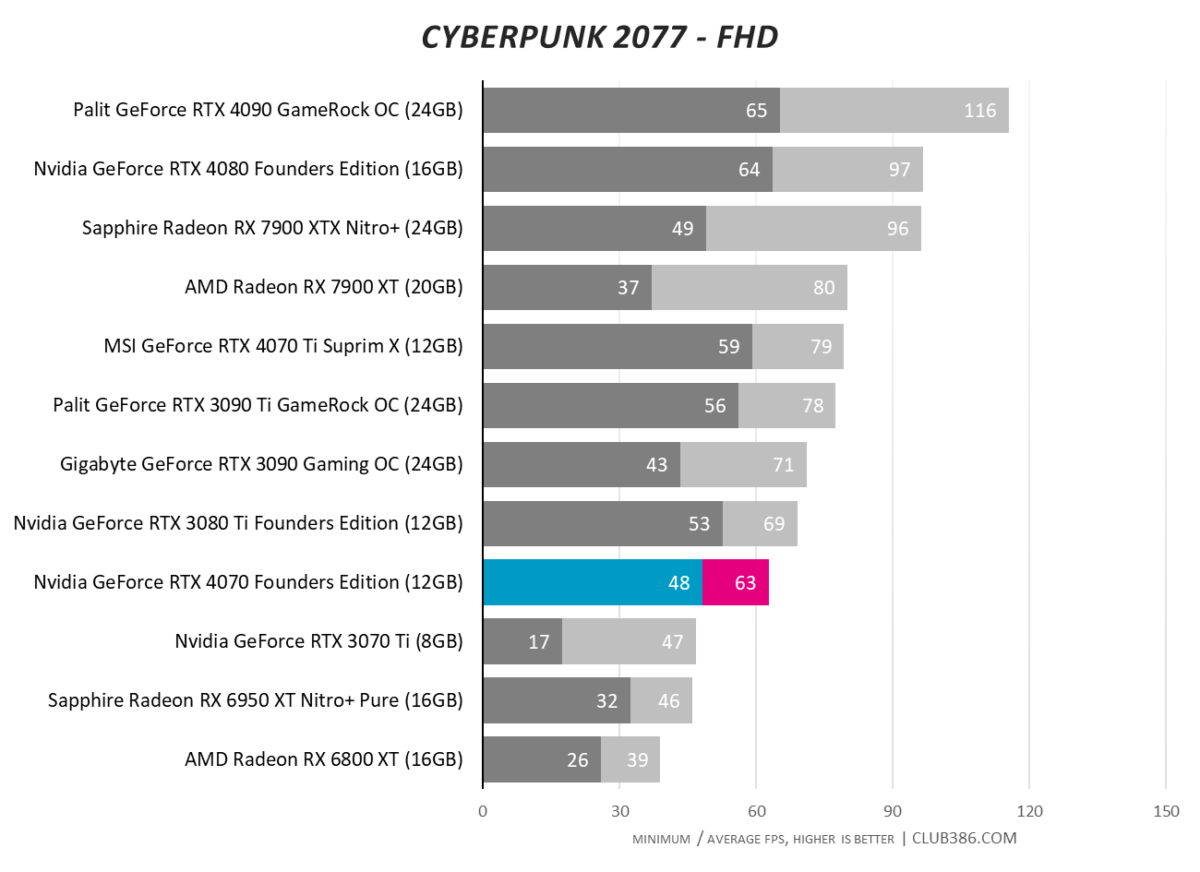

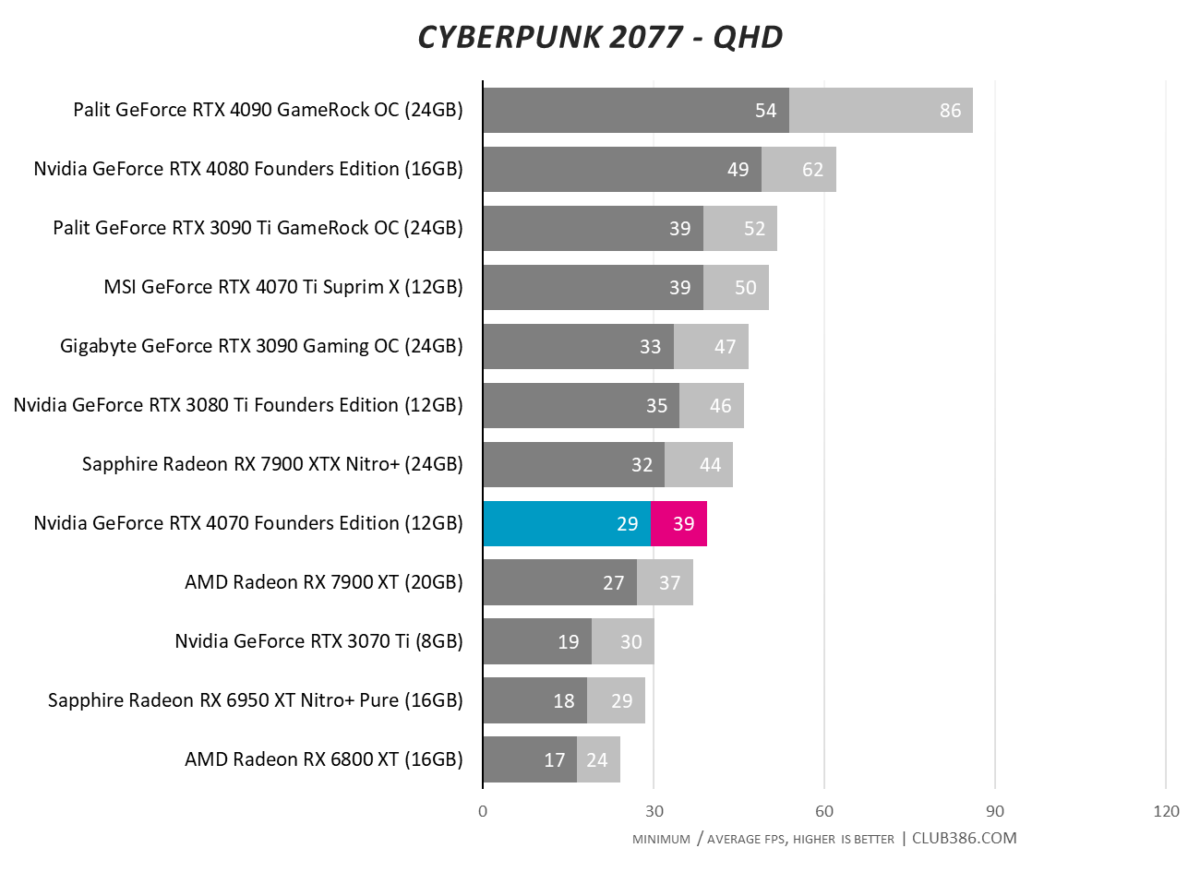

Add raytracing to the mix and it becomes clear RTX 4070 and RX 6800 XT are cut from a different cloth. Heck, even RX 7900 XT slips behind the GeForce at a QHD resolution.

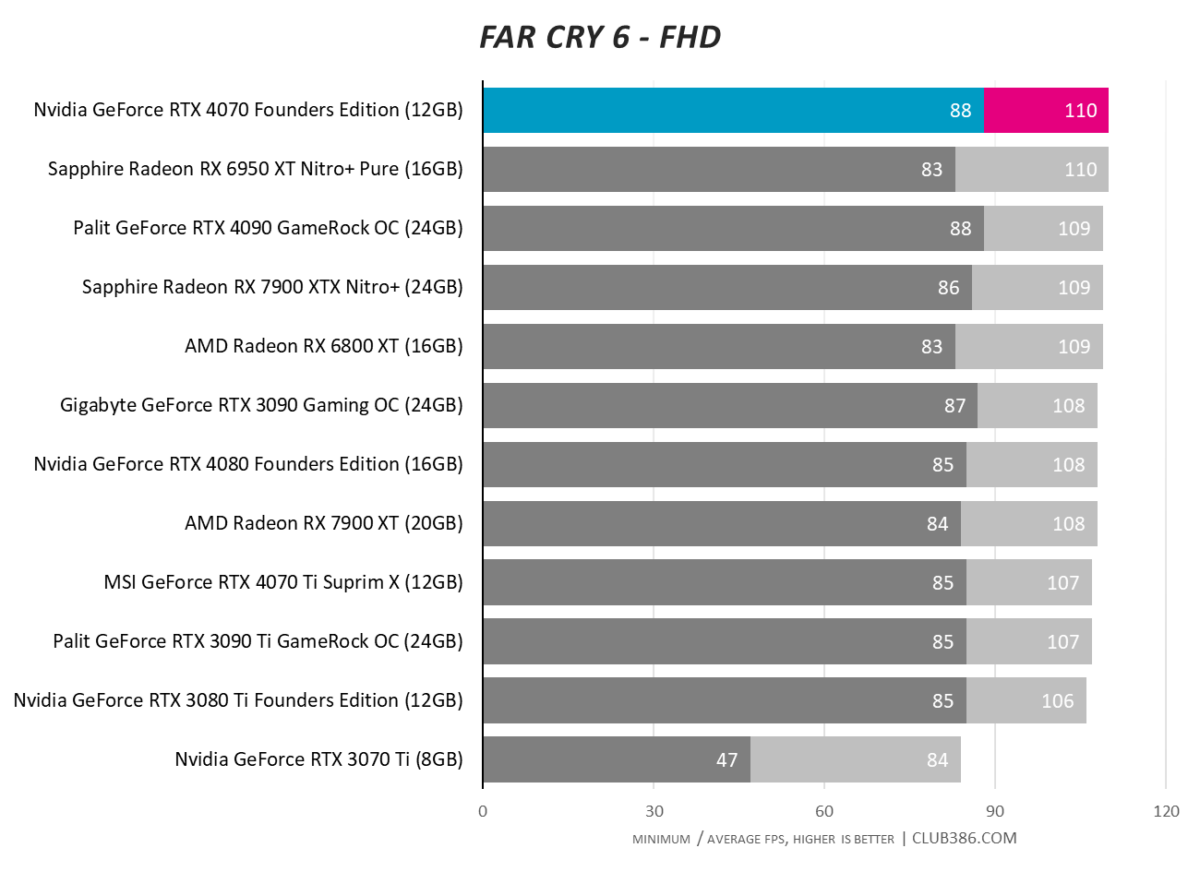

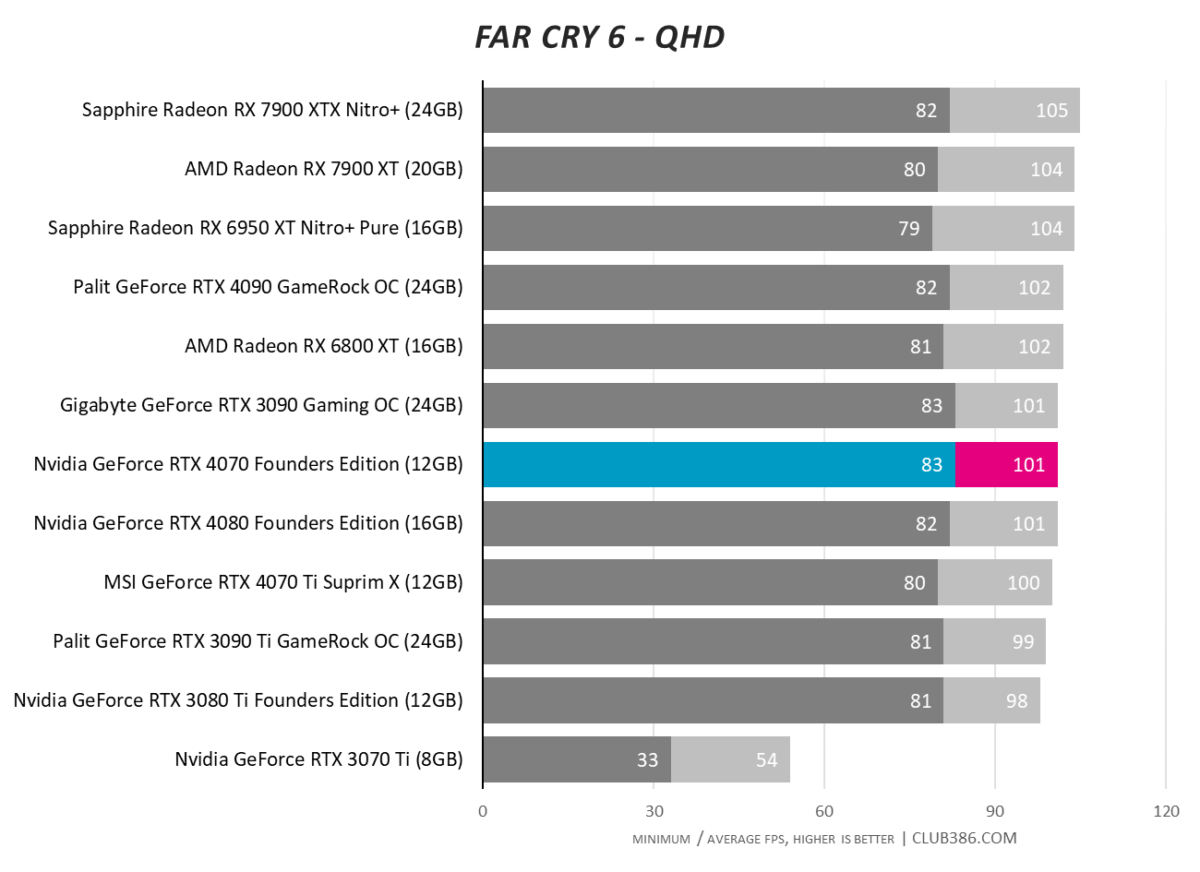

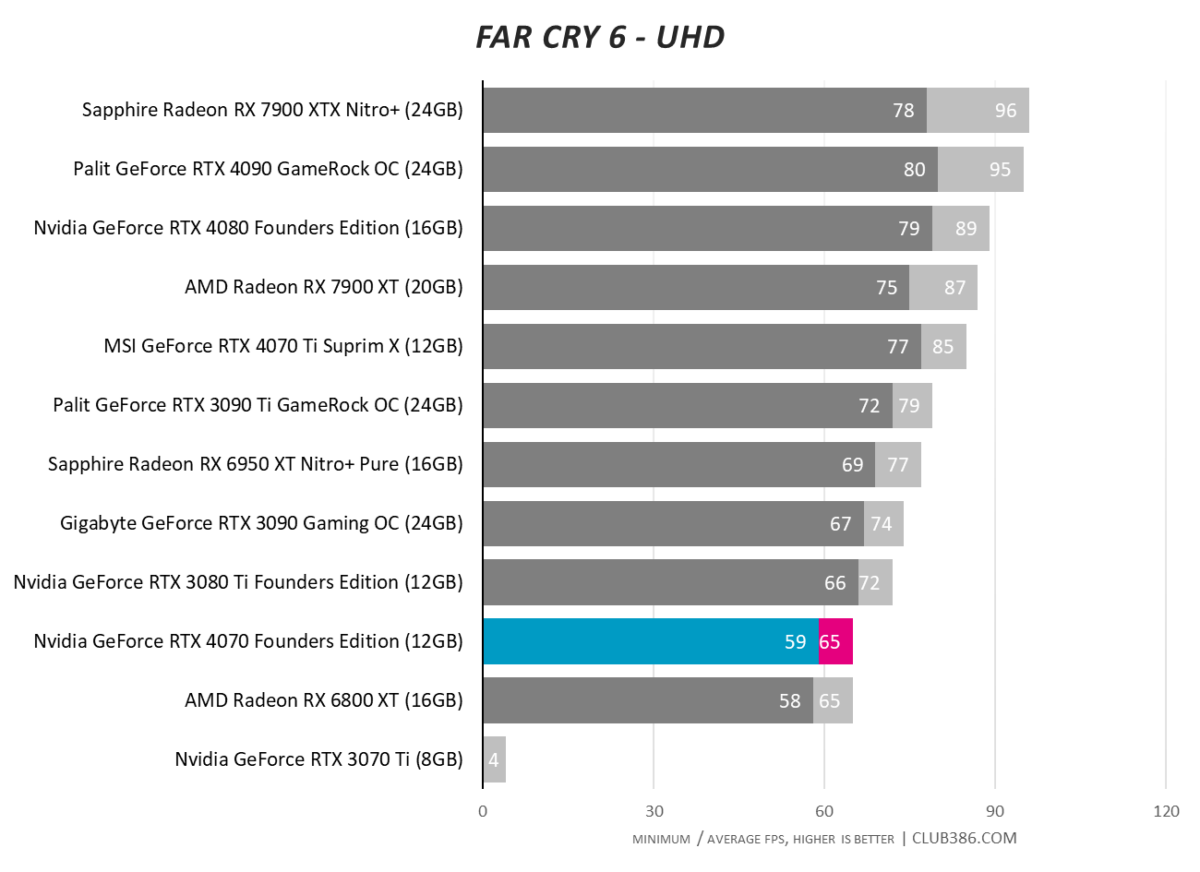

Far Cry 6

Far Cry 6 with RT and high-res textures remains a useful test insofar as it helps weed out GPUs with inferior memory configurations. 8GB GeForce RTX 3070 Ti is left hanging its head in shame. One has to wonder when/if 12GB cards will suffer a similar fate.

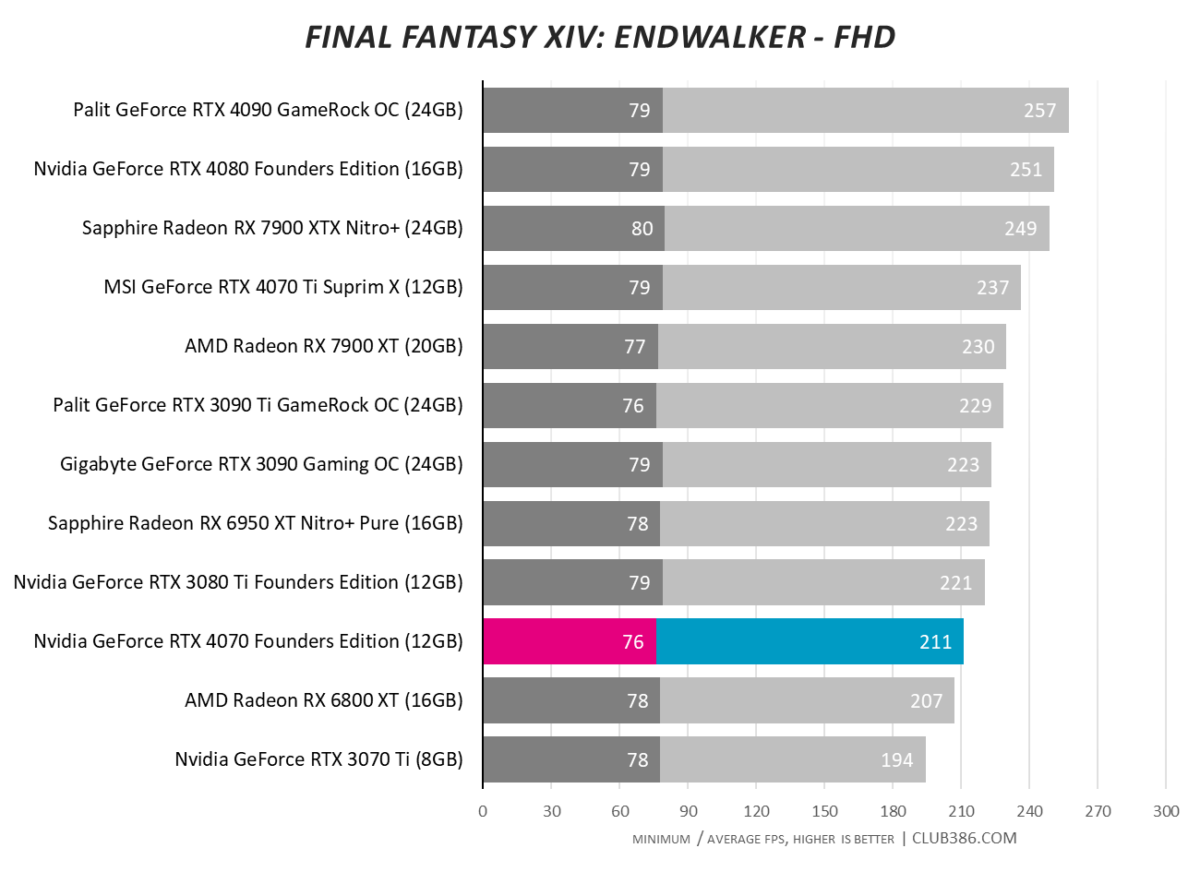

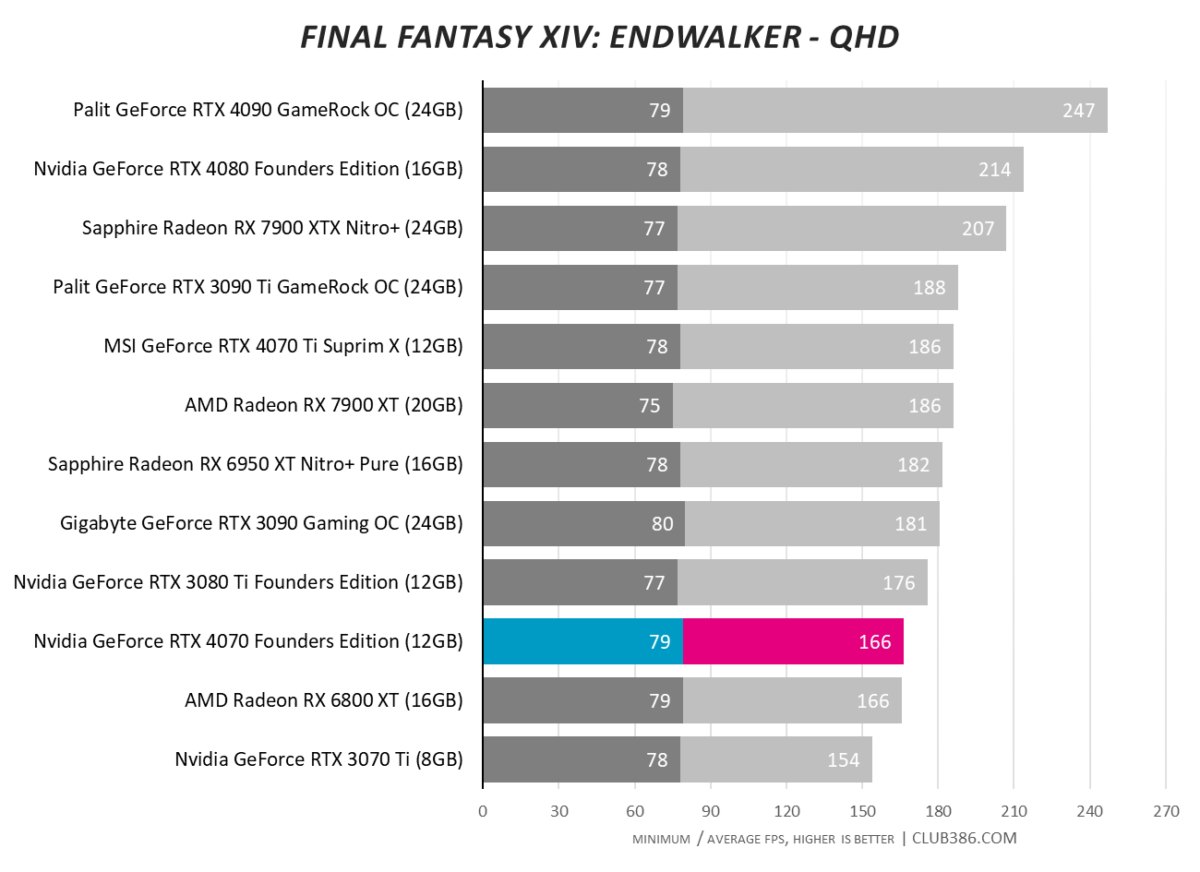

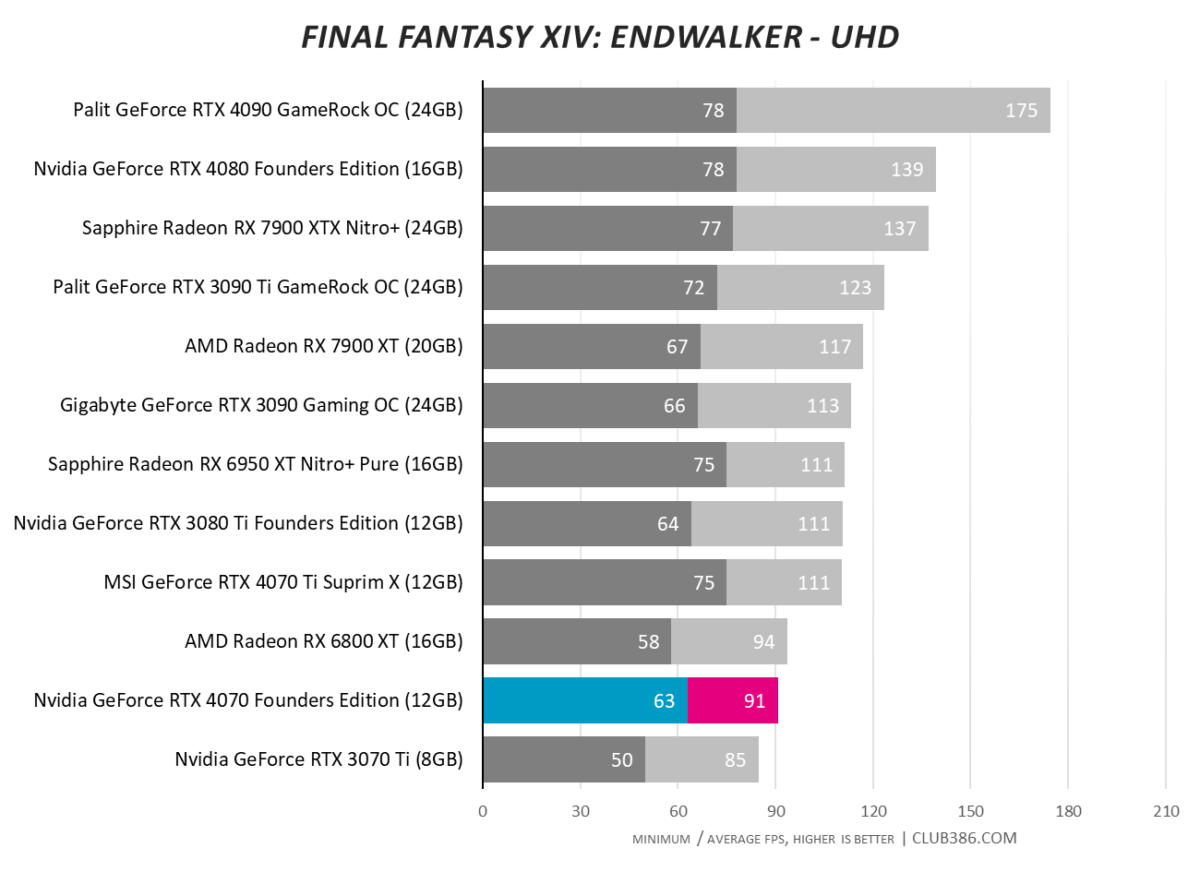

Final Fantasy XIV: Endwalker

Final Fantasy XIV: Endwalker, a game relying heavily on rasterisation, shows RTX 4070 to be practically identical to RX 6800 XT. Given the GeForce is better equipped with regards to raytracing and just as quick everywhere else, last-gen equivalents at this price point are rendered moot.

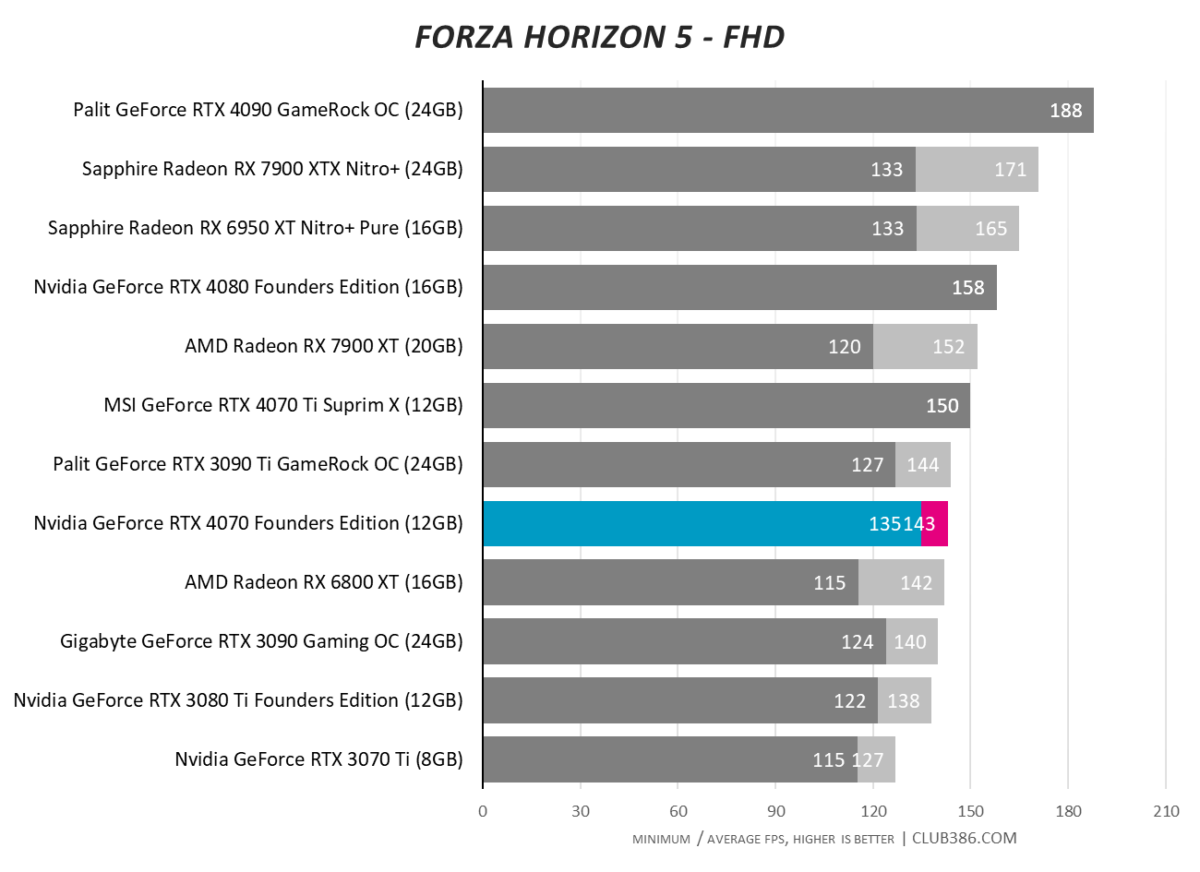

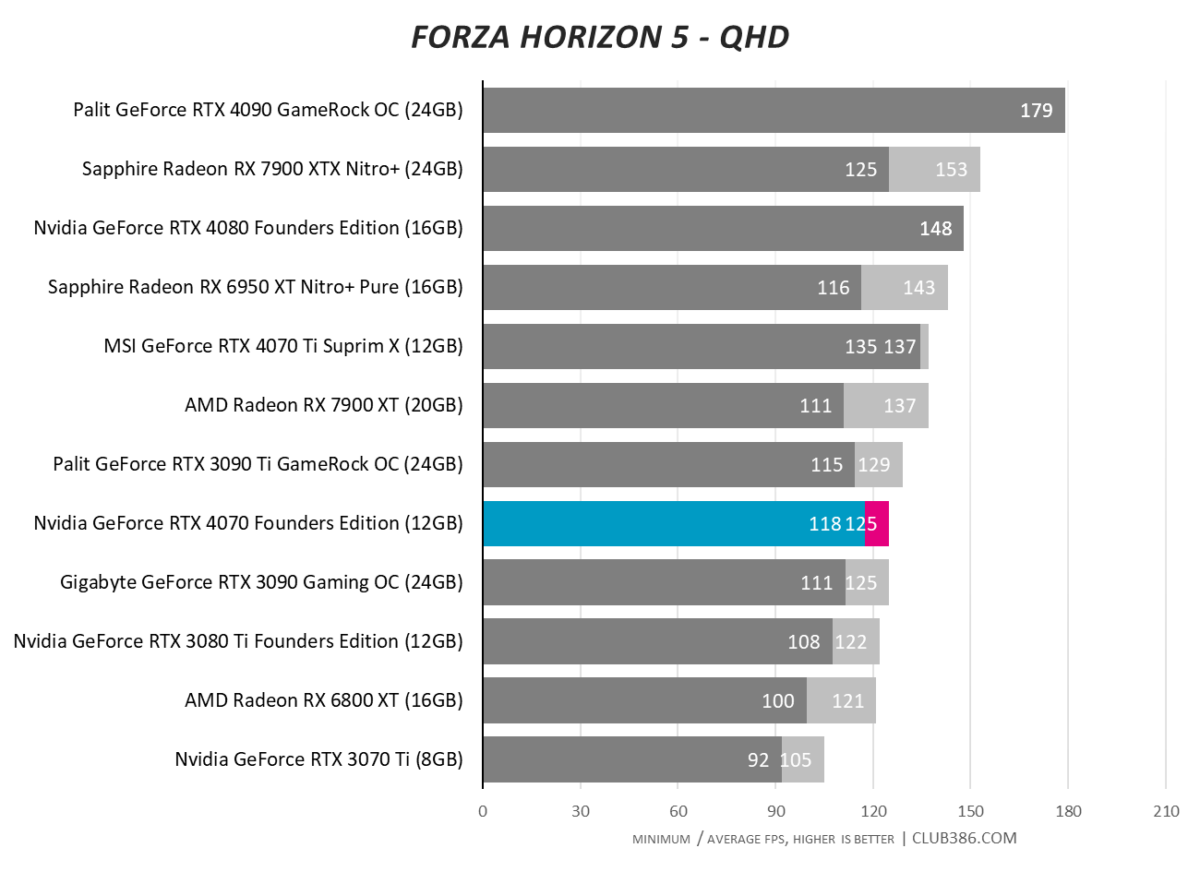

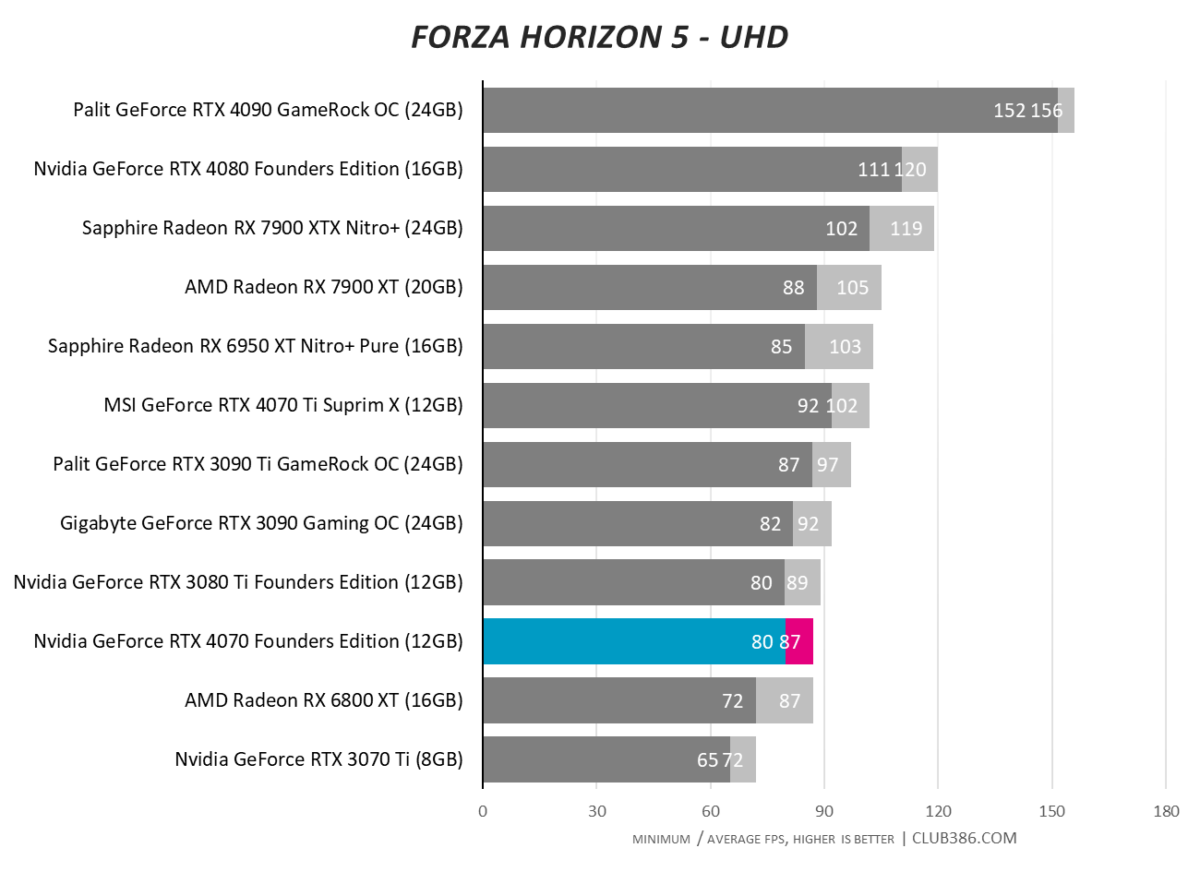

Forza Horizon 5

We see RTX 3080 Ti-like performance in Forza Horizon 5. Nothing groundbreaking, but decent enough for a $599 GPU. Gamers looking to bag a bargain should also make note of Radeon RX 6950 XT. The older part may lack modern niceties, and is particularly thirsty on power, yet delivers a significant performance punch and is often available on sale for under $700 while stocks last. Food for thought.

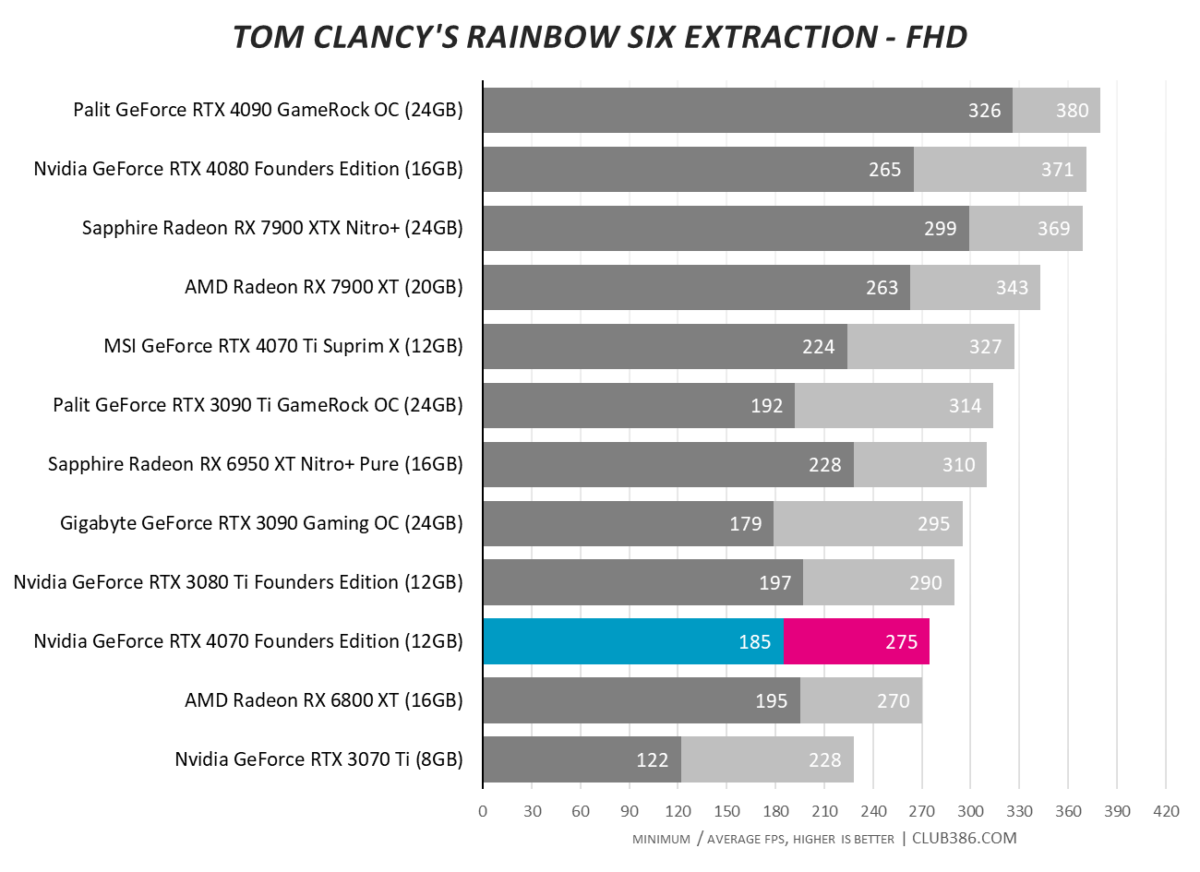

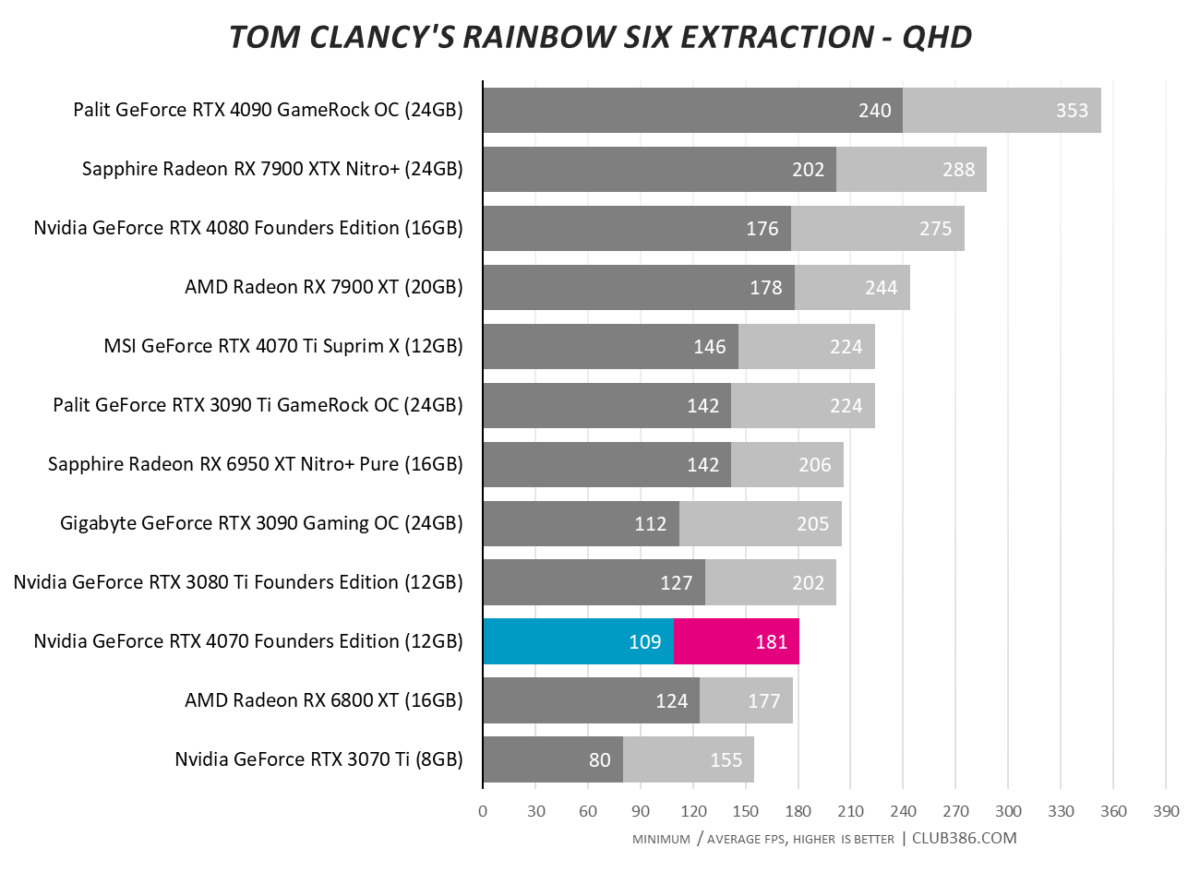

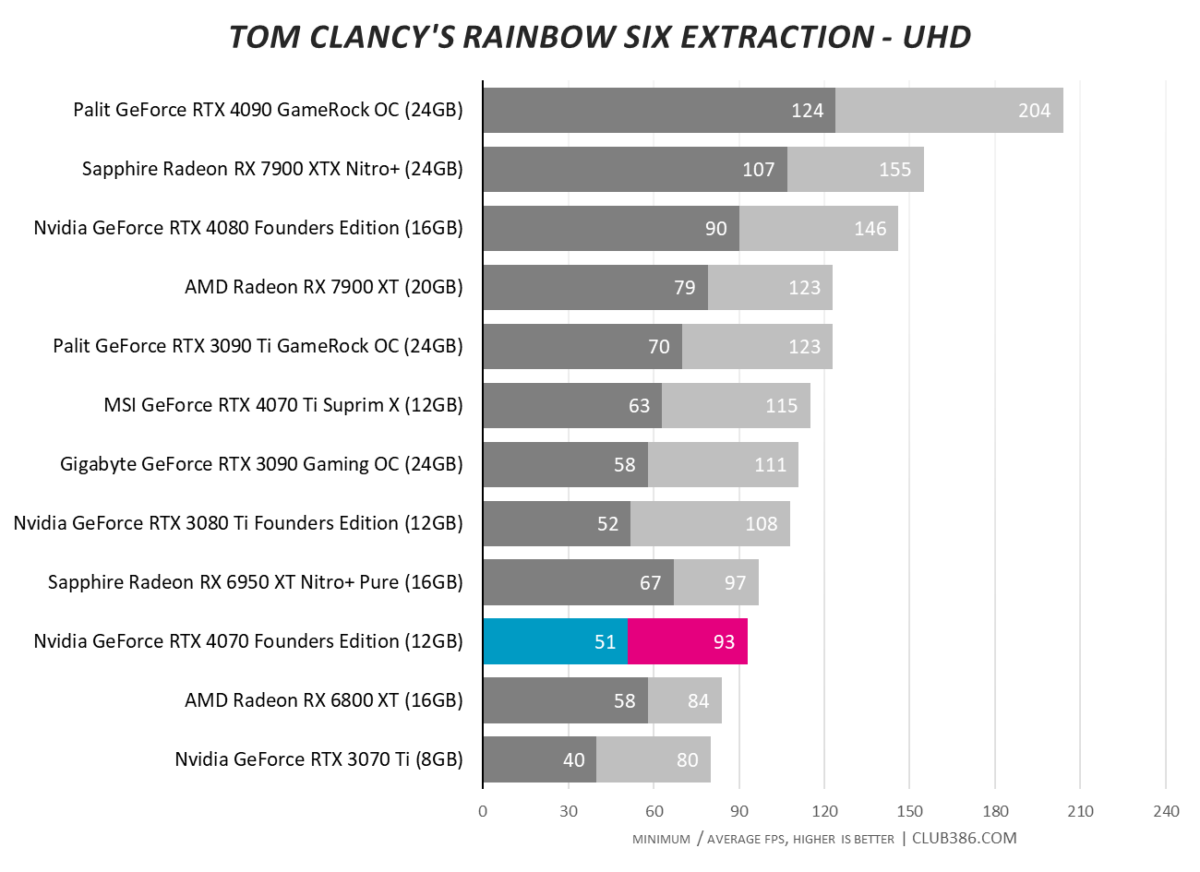

Tom Clancy’s Rainbow Six Extraction

Look at it another way: if your budget extends to 600 bucks and not a penny more, it’s a choice of Radeon RX 6800 XT, GeForce RTX 3070 Ti, or GeForce RTX 4070. Only one winner in that contest.

Power, Temps and Noise

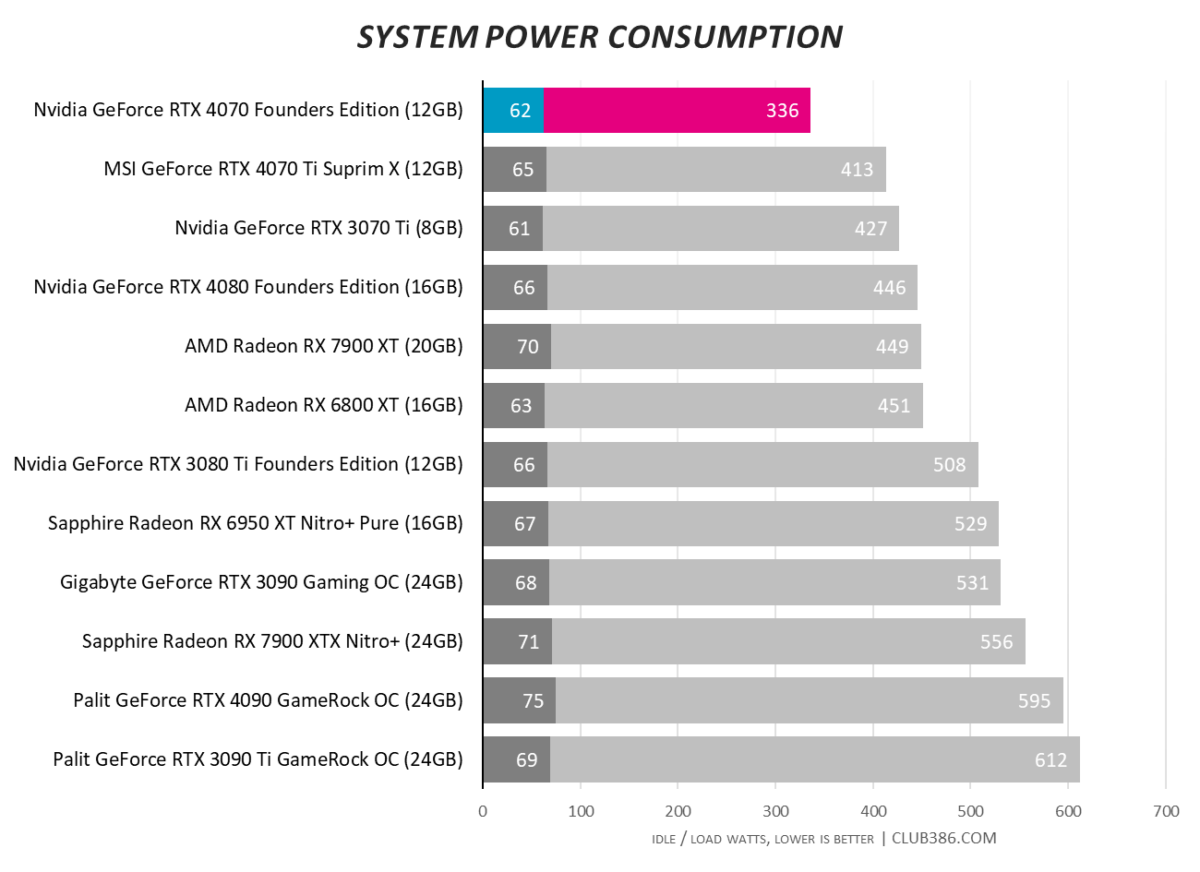

RTX 4070 can be deemed a sideward step with regards to in-game performance, yet make no mistake, it excels in efficiency. System-wide power consumption of 336 watts, with 16-core Ryzen processor in tow, is astonishing. Nvidia officially recommends a minimum 650W PSU, but even that seems overkill. Excellent news for the ‘leccy bill.

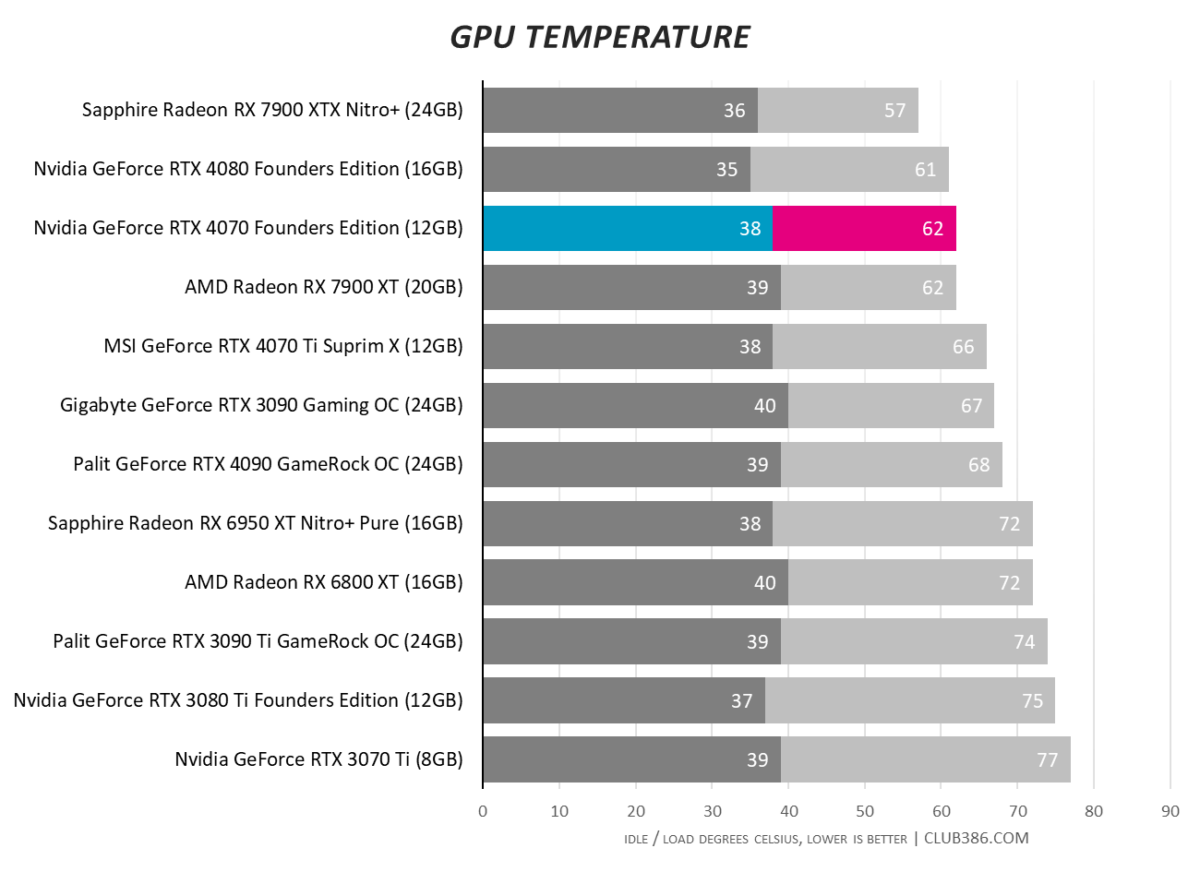

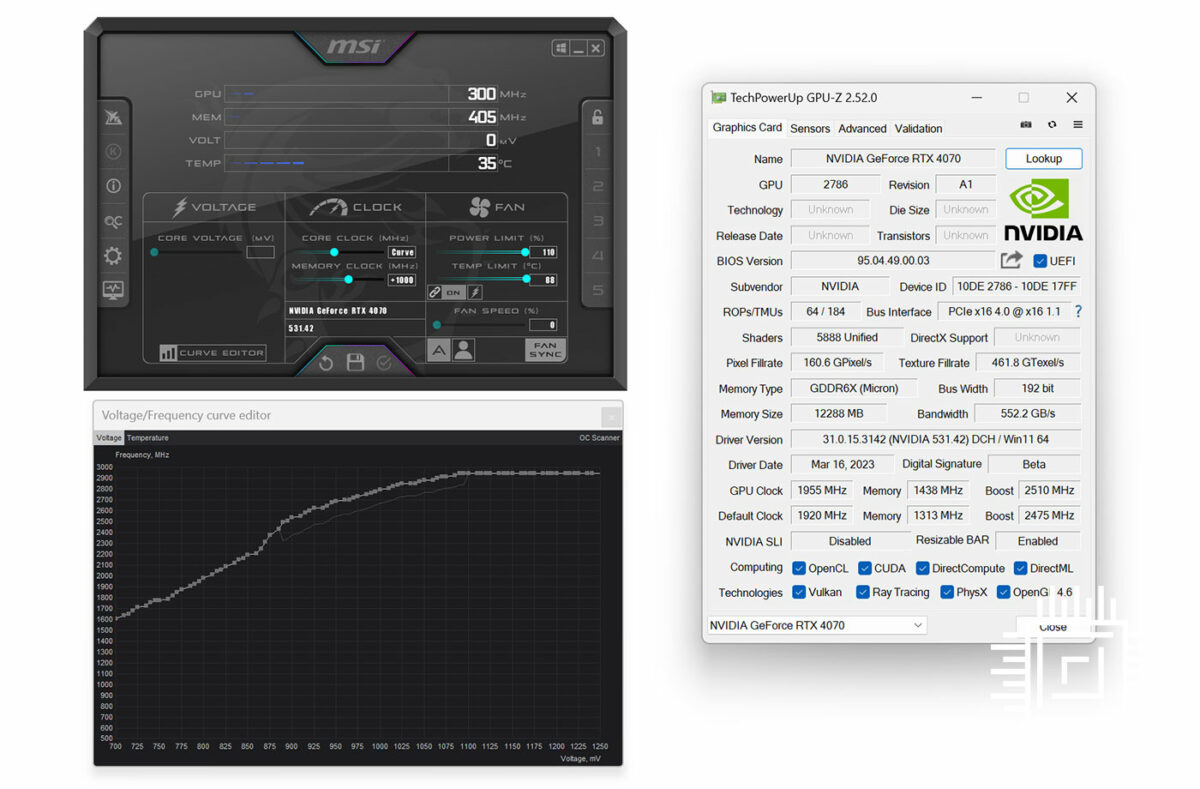

Modest power naturally translates to low temps. Our sample breezes past the official 2,475MHz boost clock, averaging 2,805MHz while gaming, and core temperature never exceeds 62°C.

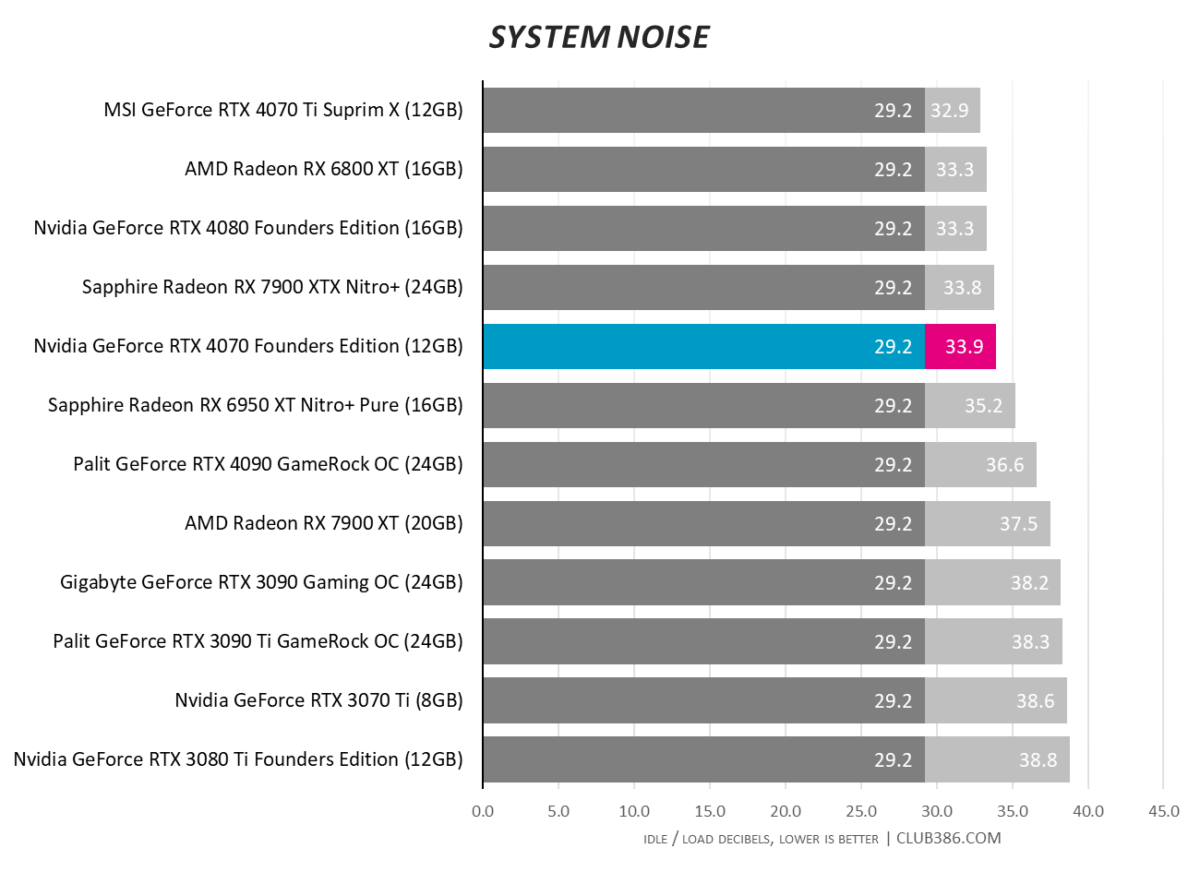

Better still, fan speed tops out at a mere 30 per cent (~1,400RPM) inside our real-world test system. The card is quiet at all times, and though it’s hard to judge from a sample of one, coil whine appears much improved over last-gen equivalents.

Relative Performance, Efficiency and Value

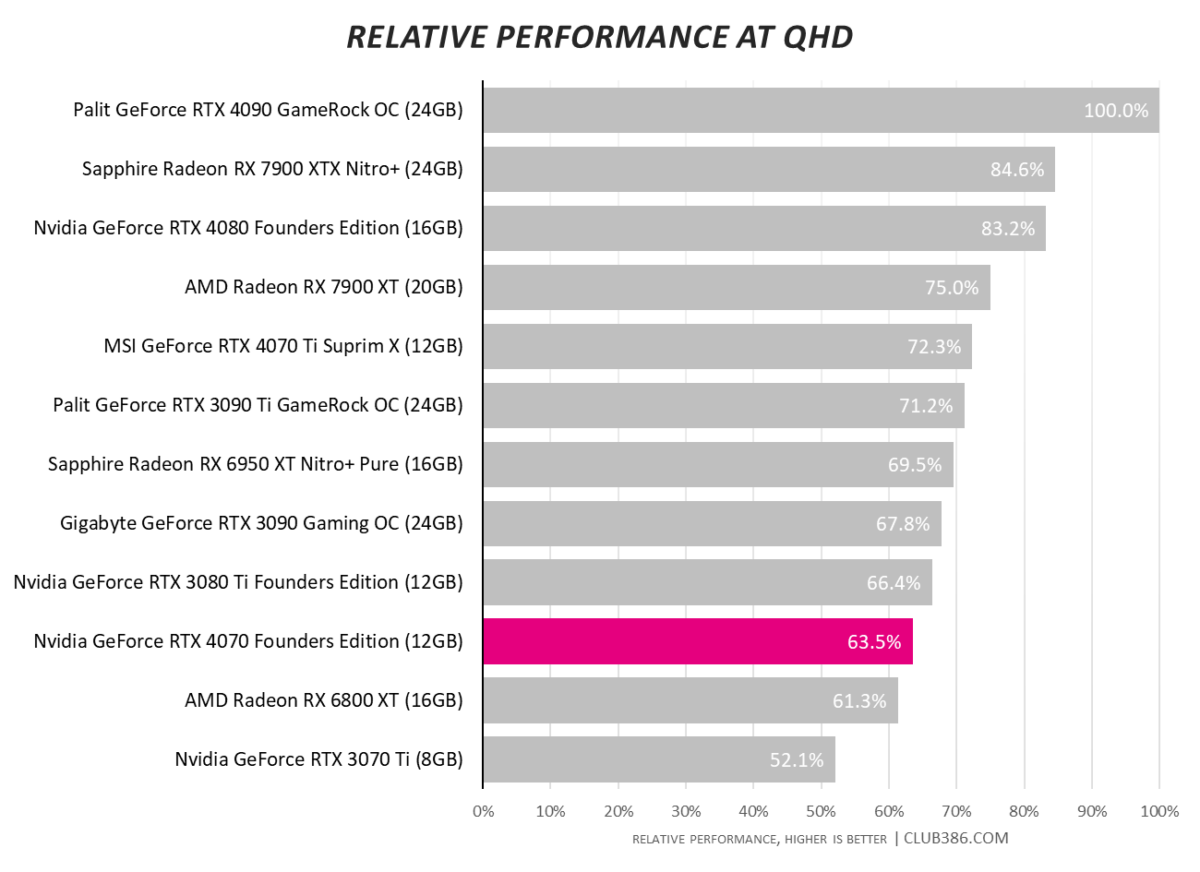

Evaluating relative performance across all benchmarked games reveals Nvidia is doing a fine job of segmentation. RTX 4080 offers 83 per cent of blistering RTX 4090 performance, RTX 4070 Ti lowers the bar to 71 per cent, and now RTX 4070 regular brings up the rear at 64 per cent. It would be logical to assume the first RTX 4060 part will deliver roughly half the top dog’s performance.
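Because every card is normalised against the same RTX 4090 baseline, pairwise comparisons fall out by division. A short sketch using the percentages quoted above; the result is exact if the chart normalises average framerates, and approximate if it averages per-game ratios:

```python
# Share of RTX 4090 performance across all benchmarked games, as quoted above.
share_of_4090 = {"RTX 4080": 0.83, "RTX 4070 Ti": 0.71, "RTX 4070": 0.64}

def relative(card: str, reference: str) -> float:
    """Performance of `card` as a fraction of `reference`, via the common 4090 baseline."""
    return share_of_4090[card] / share_of_4090[reference]

print(f"RTX 4070 vs RTX 4070 Ti: {relative('RTX 4070', 'RTX 4070 Ti'):.0%}")  # 90%
print(f"RTX 4070 vs RTX 4080:    {relative('RTX 4070', 'RTX 4080'):.0%}")     # 77%
```

Around 90 per cent of RTX 4070 Ti across games is a narrower gap than the near-20 per cent deficit seen in the synthetic 3DMark tests.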

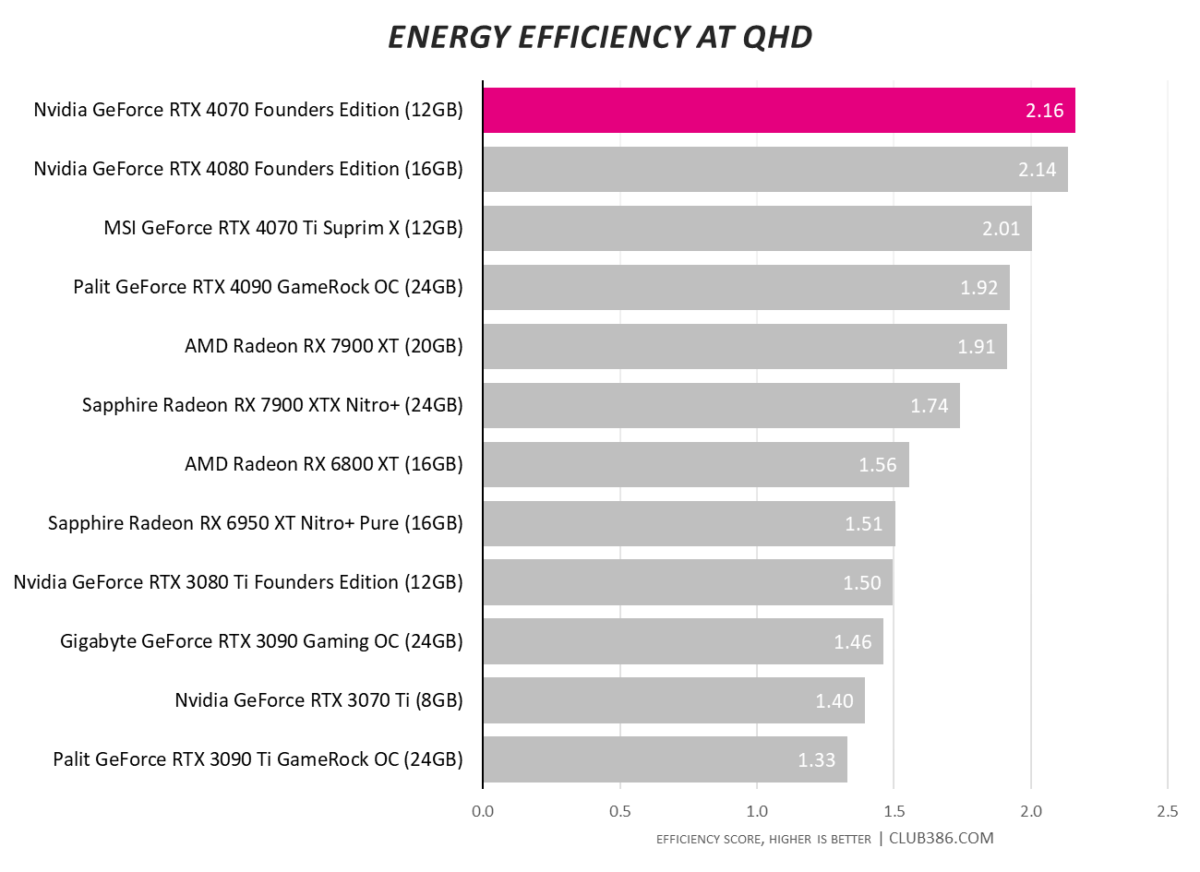

We alluded to energy efficiency being a strong point, and here’s the proof. Dividing average framerate by system-wide power consumption confirms our rig runs optimally with GeForce RTX 4070 at the helm. Note the chart-topping score only takes into account ‘real’ frames; Nvidia’s lead would extend further with AI-generated frames added to the equation.

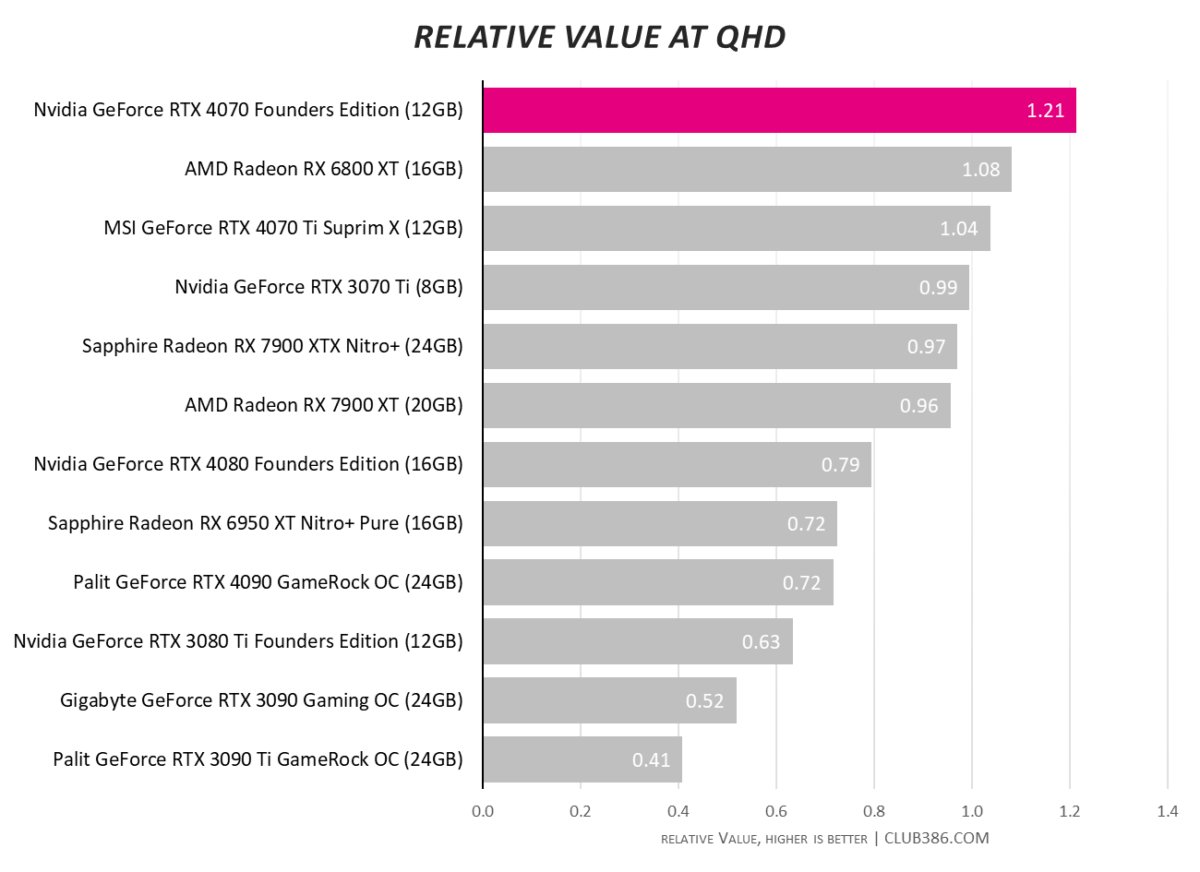

Dividing average framerate by each GPU’s MSRP helps shed light on how much RTX 4070 ought to cost. In a market muddled by the events of recent years, Nvidia easily tops the chart at $599. Dropping that figure to $499 would return a score of 1.46, eclipsing everything else out there; Team Green evidently doesn’t feel the need to go that low just yet.
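Since the value metric divides average framerate by MSRP, a card’s score scales inversely with its price. Working backwards from the $499 figure, assuming the same framerate at both prices and bearing in mind that 1.46 is itself rounded, gives RTX 4070’s approximate score at its actual $599 MSRP:

```python
def rescale_for_price(score: float, old_price: float, new_price: float) -> float:
    """Value score = average fps / price (times any fixed chart constant), so for
    the same card and framerate, the score scales with old_price / new_price."""
    return score * old_price / new_price

implied_at_599 = rescale_for_price(1.46, old_price=499, new_price=599)
print(f"Implied RTX 4070 score at $599: {implied_at_599:.2f}")  # 1.22
print(f"Score gain from a $100 cut: {599 / 499 - 1:.0%}")       # 20%
```

In other words, a 17 per cent price cut (100/599) would lift the score by about 20 per cent.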

Overclocking

Seeing as RTX 4070 is effectively a diminished and downclocked RTX 4070 Ti, there’s likely to be a decent amount of headroom to play with. Using the automated scanner built into MSI’s Afterburner utility, in-game boost clock climbs to 2.9GHz with ease. Extreme tinkerers can approach the 3GHz mark, while memory has no trouble scaling to an effective 23Gbps.
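Putting those overclocking numbers in context is simple arithmetic. The sketch takes 2.9GHz as the achieved core clock and compares it with both the official boost clock and the 2,805MHz our sample averaged at stock, and applies the standard bus-width formula to the 23Gbps memory:

```python
def uplift_pct(new: float, old: float) -> float:
    """Percentage increase of `new` over `old`."""
    return (new / old - 1) * 100

core_vs_official = uplift_pct(2900, 2475)  # vs official boost clock
core_vs_observed = uplift_pct(2900, 2805)  # vs average clock our sample already hit
memory = uplift_pct(23, 21)                # effective memory data rate
overclocked_bandwidth = 192 * 23 / 8       # GB/s on the 192-bit bus

print(f"core vs official boost: +{core_vs_official:.1f}%")
print(f"core vs observed stock: +{core_vs_observed:.1f}%")
print(f"memory: +{memory:.1f}% ({overclocked_bandwidth:.0f}GB/s)")
```

Measured against the clocks the card already sustains out of the box, the core overclock is worth only a few per cent, which suggests the practical gain in games may be modest.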

There’s extra mileage in the tank, but pushing such an efficient GPU to the limit somewhat defeats the purpose. In this day and age, there are alternative methods to boost framerate.

DLSS 3

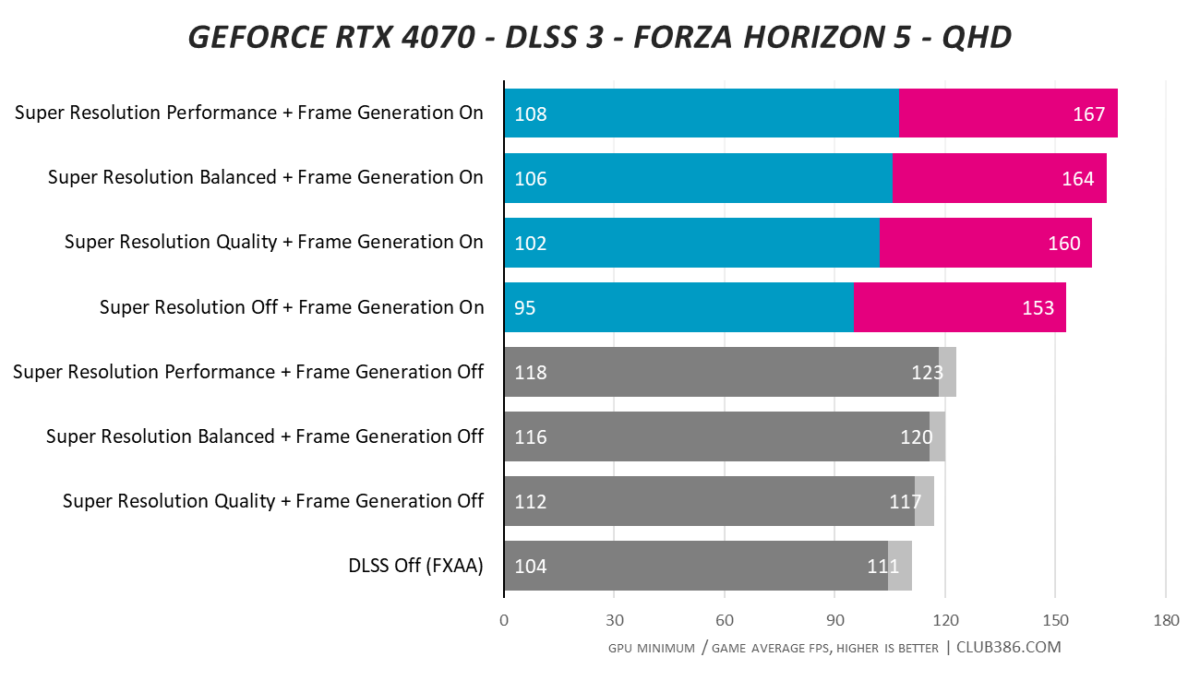

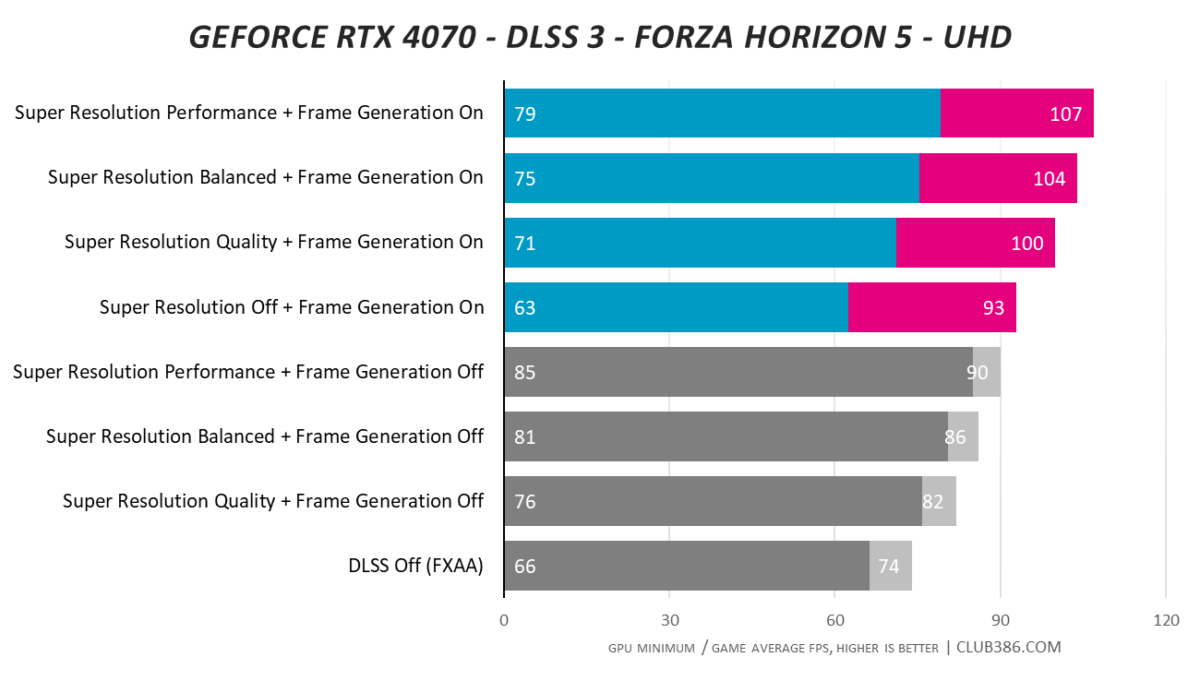

Rejogging the memory banks after all those benchmarks, remember developers now have the option to offer individual controls for Super Resolution and/or Frame Generation. The former, as you’re no doubt aware, upscales from a lower internal render resolution determined by the chosen setting; at a 4K UHD output, Ultra Performance works its magic on a 1280×720 render, Performance on 1920×1080, Balanced on 2227×1253, and Quality upscales from 2560×1440.
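Those render resolutions correspond to a 3840×2160 output and fixed per-axis scale factors. A sketch, assuming the commonly cited default ratios (individual games may use others):

```python
from fractions import Fraction

# Default per-axis render scale for each DLSS Super Resolution mode.
# Assumption: commonly cited defaults; individual games may use other ratios.
RENDER_SCALE = {
    "Quality": Fraction(2, 3),
    "Balanced": Fraction(58, 100),
    "Performance": Fraction(1, 2),
    "Ultra Performance": Fraction(1, 3),
}

def render_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    scale = RENDER_SCALE[mode]
    return round(out_w * scale), round(out_h * scale)

for mode in RENDER_SCALE:
    w, h = render_resolution(3840, 2160, mode)
    print(f"{mode:>17}: {w}x{h}")
```

At the 1440p output RTX 4070 is aimed at, the same ratios mean Quality renders at 1707×960 and Performance at 1280×720.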

On top of that, Frame Generation inserts a synthesised frame between two rendered frames, resulting in multiple configuration options. Want the absolute maximum framerate? Switch Super Resolution to Ultra Performance and Frame Generation On, leading to a 720p upscale from which whole frames are also synthesised.

Want to avoid any upscaling but willing to live with frames generated from full-resolution renders? Then turn Super Resolution off and Frame Generation On. Note that enabling the latter automatically invokes Reflex; the latency-reducing tech is mandatory, once again reaffirming the fact that additional processing risks performance in other areas.

DLSS 3’s initial success is reflected in industry adoption. Rival GPU manufacturers are scrambling to introduce their own variants, while triple-A games such as Forza Horizon 5 (pictured above) and Diablo IV are choosing to add full support for AI-powered frame generation. It is quickly becoming a sought-after feature with good reason, as results have been impressive thus far.

Forza Horizon 5 is one of the better implementations to date. Enabling frame generation alongside super resolution at maximum quality results in a dramatic 44 per cent increase in framerate. 160fps bodes well for a fast QHD panel, and whatever your feelings on ‘fake’ frames, during actual gameplay, you’d be hard pushed to notice any meaningful difference in image quality.
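The quoted figures also let us back out what is happening underneath. This sketch assumes the 44 per cent uplift is measured against the same settings with DLSS 3 off, and that Frame Generation inserts exactly one frame per rendered frame:

```python
boosted_fps = 160   # Forza Horizon 5 at QHD with DLSS 3, from the text
uplift = 0.44       # quoted increase over the non-DLSS 3 result

baseline_fps = boosted_fps / (1 + uplift)
# If Frame Generation supplies every other displayed frame, only half are rendered.
rendered_fps = boosted_fps / 2

print(f"implied baseline: {baseline_fps:.0f}fps")      # 111fps
print(f"rendered with DLSS 3: {rendered_fps:.0f}fps")  # 80fps
```

The second figure is a reminder that game logic and input are still processed at the rendered rate rather than the displayed one.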

The performance bump is less pronounced at 4K UHD, yet while RTX 4070 is a 1440p solution first and foremost, the addition of DLSS 3 does leave the door ajar for 2160p gaming. It is too soon to label DLSS 3 a must-have feature, however frame generation is without doubt an important feather in the 40 Series’ cap.

Conclusion

A dearth of competition in mid-range PC graphics has given Nvidia free rein with GeForce RTX 4070. Repurposing an existing GPU to hit new price points, this latest adaptation of AD104 positions the diminutive 294.5mm² die as a champion of 1440p gaming at high framerates.

Rasterisation performance has in fact taken a sideward step – RTX 4070 is barely any quicker than RTX 3080 or RX 6800 XT – yet the overall package and feature set elevates the newest GeForce above its closest competitors. Raytracing performance is best-in-class, DLSS 3 frame generation continues to impress, and no other GPU delivers fluid QHD gameplay while sipping so little power.

Petite dimensions and beautiful construction make Nvidia’s Founders Edition an attractive upgrade from older generations, but the ease with which spartan RTX 4070 transcends its rivals is indicative of a weak market. It’ll take a competent mid-range Radeon or Arc to ruffle Nvidia’s feathers; until then, GeForce RTX 4070 goes unchallenged as the best GPU available for under 600 bucks.

Verdict: a safe bet at $599, GeForce RTX 4070 is the go-to graphics card for 1440p gaming.