There’s a feeling of excitement at Club386 HQ that only new graphics launches can provide. Sam’s smitten with Intel Arc B580, Fahd can’t wait to see if Radeon RX 9070 XT is a worthwhile upgrade from his current card, and Damien already has plans for an SFF build featuring GeForce RTX 5080.

Truth is that appreciation of all those cards comes alongside a common caveat: GeForce RTX 5090 is the one they really want.

Nvidia GeForce RTX 5090 Founders Edition

£1,939 / $1,999

Pros

- DLSS gets better and better

- The fastest graphics card ever

- Outstanding build quality

- Dual-slot form factor

- 32GB GDDR7 memory

Cons

- Prohibitive pricing

- Power hungry


Nvidia’s latest flagship has elicited its fair share of ooohs and aaahs in our labs, and only a lofty £1,939 price tag prevents our collective credit cards from coming out. Such a figure represents an insurmountable hurdle for the majority of gamers.

Nevertheless, we PC enthusiasts love a halo product, and specifically a GPU that paints a picture of what’s to come in subsequent years. It’s a deep-rooted feeling that has persisted for me since my first graphics card acquisition as a youth – a Diamond Monster 3D complete with 4MB of memory – from the local computer market at Wolverhampton racecourse.

Three decades later, I get the privilege and thrill of powering up a GeForce RTX 5090 for the first time. Only, this is a launch that feels different. The landscape has shifted and the goalposts have moved as we transition from brute-force muscle to a new generation of innovative neural rendering.

The ramifications for the future of computer graphics are significant, especially so with an industry leader laser-focussed on all things AI. Nvidia’s supremacy in the high-end space is such that I’m confident in stating you’ll see no faster solution than RTX 5090 in 2025. Rivals Intel and AMD have unofficially conceded defeat to focus on the mid-range, and with nobody to challenge Nvidia’s might at the top of the stack, there’s no reason to assume an RTX 5090 Ti is waiting in the wings. This is as good as it gets.

So without further ado, let’s break out the Club386 Table of Doom as we embark on this journey of discovery together.

Specifications

| GeForce RTX | 5090 | 4090 | 3090 |
|---|---|---|---|
| Launch date | Jan 2025 | Oct 2022 | Sep 2020 |
| Codename | GB202 | AD102 | GA102 |
| Architecture | Blackwell | Ada Lovelace | Ampere |
| Process | TSMC 4N | TSMC 4N | Samsung 8N |
| Transistors (bn) | 92.2 | 76.3 | 28.3 |
| Die size (mm²) | 750 | 608.5 | 628.4 |
| SMs | 170 of 192 | 128 of 144 | 82 of 84 |
| CUDA cores | 21,760 | 16,384 | 10,496 |
| Boost clock (MHz) | 2,407 | 2,520 | 1,695 |
| Peak FP32 TFLOPS | 104.8 | 82.6 | 35.6 |
| RT cores | 170 (4th Gen) | 128 (3rd Gen) | 82 (2nd Gen) |
| RT TFLOPS | 317.5 | 191 | 69.5 |
| Tensor cores | 680 (5th Gen) | 512 (4th Gen) | 328 (3rd Gen) |
| Peak FP16 TFLOPS | 419 | 330.3 | 142.3 |
| Peak FP4 TFLOPS | 3,352 | – | – |
| ROPs | 176 | 176 | 112 |
| Texture units | 680 | 512 | 328 |
| Memory size (GB) | 32 | 24 | 24 |
| Memory type | GDDR7 | GDDR6X | GDDR6X |
| Memory bus (bits) | 512 | 384 | 384 |
| Memory speed (Gb/s) | 28 | 21 | 19.5 |
| Bandwidth (GB/s) | 1,792 | 1,008 | 936 |
| L2 cache (KB) | 98,304 | 73,728 | 6,144 |
| PCIe interface | Gen 5 | Gen 4 | Gen 4 |
| Video engines | 3 x NVENC (9th Gen), 2 x NVDEC (6th Gen) | 2 x NVENC (8th Gen), 1 x NVDEC (5th Gen) | 1 x NVENC (7th Gen), 1 x NVDEC (5th Gen) |
| Power (watts) | 575 | 450 | 350 |
| MSRP ($) | 1,999 | 1,599 | 1,499 |

Going by high-level specifications, RTX 5090 represents a scaled-up version of RTX 4090. What we have in retail form is a potent flagship that enables 11 of 12 maximum graphics processing clusters (GPCs). This translates to a mighty 21,760 CUDA cores, which itself represents a 33% uptick over RTX 4090. Look back even further to RTX 3090 and note the number of cores more than doubles.
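The headline FP32 figures in the table follow a simple rule of thumb: each CUDA core retires one fused multiply-add per clock, conventionally counted as two floating-point operations. Here’s a minimal Python sketch, assuming that convention and the boost clocks listed above, that reproduces the table’s peak TFLOPS and the core-count ratios quoted in this paragraph. It’s a back-of-envelope check, not how Nvidia necessarily derives its numbers.

```python
# Peak FP32 throughput = CUDA cores x 2 FLOPs per clock (one FMA) x boost clock.
# Core counts and boost clocks are taken from the specification table above.
SPECS = {
    "RTX 5090": {"cores": 21_760, "boost_mhz": 2_407},
    "RTX 4090": {"cores": 16_384, "boost_mhz": 2_520},
    "RTX 3090": {"cores": 10_496, "boost_mhz": 1_695},
}

def peak_fp32_tflops(cores: int, boost_mhz: int) -> float:
    """Theoretical FP32 TFLOPS, assuming one FMA (2 FLOPs) per core per clock."""
    return cores * 2 * boost_mhz * 1e6 / 1e12

for name, spec in SPECS.items():
    print(f"{name}: {peak_fp32_tflops(**spec):.1f} TFLOPS")  # 104.8, 82.6, 35.6

# Generational core-count ratios quoted in the text.
core_ratio_vs_4090 = SPECS["RTX 5090"]["cores"] / SPECS["RTX 4090"]["cores"]
core_ratio_vs_3090 = SPECS["RTX 5090"]["cores"] / SPECS["RTX 3090"]["cores"]
print(f"vs RTX 4090: +{(core_ratio_vs_4090 - 1) * 100:.0f}% cores")  # +33%
print(f"vs RTX 3090: {core_ratio_vs_3090:.2f}x cores")               # 2.07x
```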

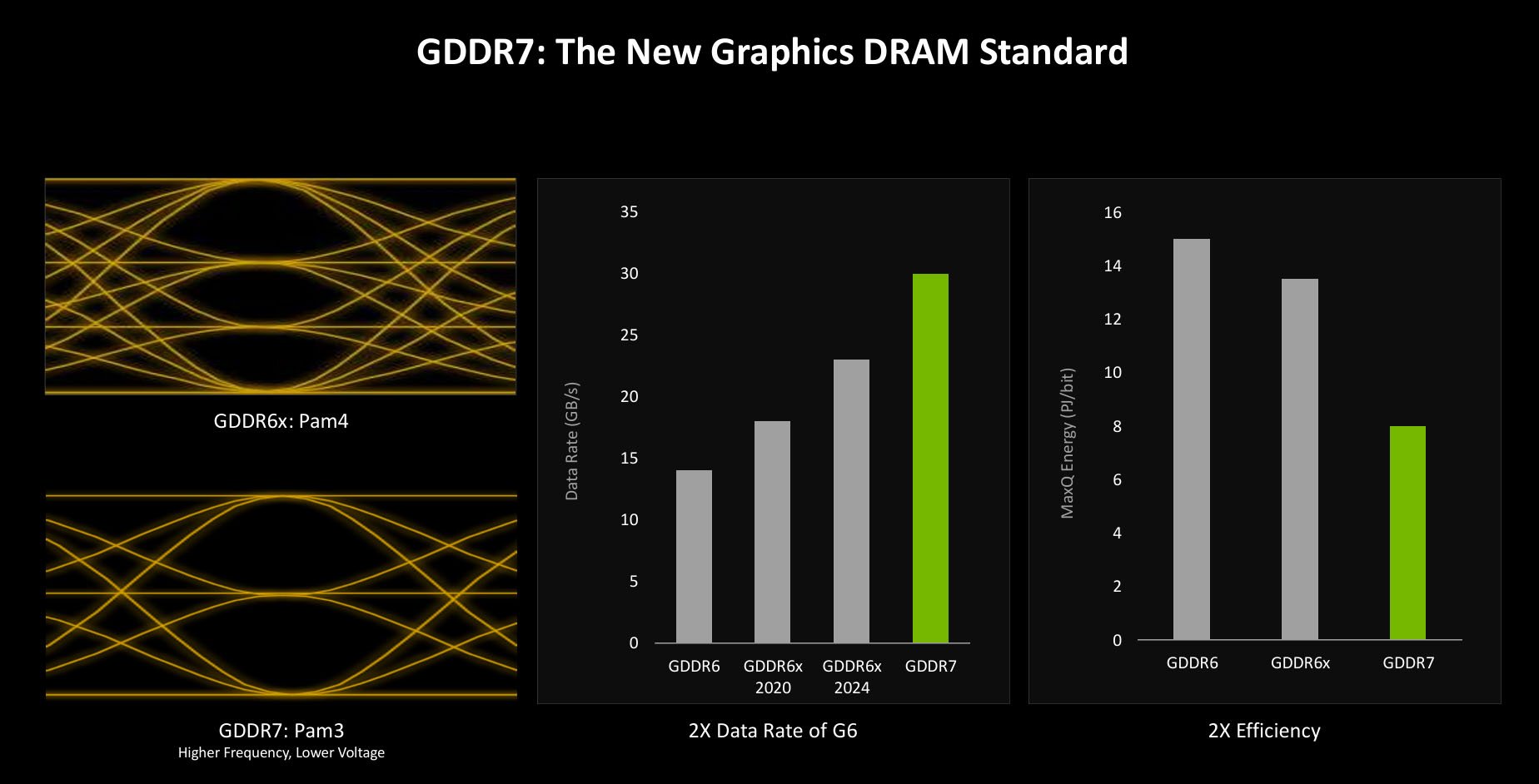

That firepower is allied to gains at the back end, too, where memory is bolstered significantly. The available pool climbs 33%, from 24GB to 32GB, memory bus widens from 384 bits to 512, and more importantly, the introduction of high-speed GDDR7 at a swift 28Gb/s sees total bandwidth balloon to 1,792GB/s. Yes, you’re reading that right, memory bandwidth improves by almost 80% over RTX 4090.
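Where does 1,792GB/s come from? Peak memory bandwidth is simply bus width multiplied by the per-pin data rate, divided by eight to turn bits into bytes. A quick sketch using the table’s figures:

```python
# Peak memory bandwidth (GB/s) = bus width (bits) x per-pin data rate (Gb/s) / 8 bits per byte.
def bandwidth_gb_s(bus_bits: int, gbps_per_pin: float) -> float:
    return bus_bits * gbps_per_pin / 8

rtx_5090 = bandwidth_gb_s(512, 28)    # GDDR7
rtx_4090 = bandwidth_gb_s(384, 21)    # GDDR6X
rtx_3090 = bandwidth_gb_s(384, 19.5)  # GDDR6X

print(rtx_5090, rtx_4090, rtx_3090)                               # 1792.0 1008.0 936.0
print(f"5090 vs 4090: +{(rtx_5090 / rtx_4090 - 1) * 100:.0f}%")   # +78%
```

Both levers matter: the wider bus alone would add 33%, and the faster GDDR7 multiplies that gain again.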

Welcome gains, no doubt, and the underlying Blackwell architecture carries a few tricks up its sleeve not illustrated above.

Architecture

Built on the same custom TSMC 4N process as the previous generation, the new champ packs in more of everything that matters. Nevertheless, even mighty RTX 5090 is not a full implementation of the GB202 die, which carries over 11 times as many transistors as there are humans on this planet.

Like RTX 4090 before it, there’s room for a Titan-esque RTX 50 Series card packing all 192 SMs and 24,576 CUDA cores, instead of 170 SMs and 21,760 cores available here. I very much doubt you’ll witness the Titanic beast because Nvidia has no reason to allocate precious full-die GPUs to the consumer gaming space.

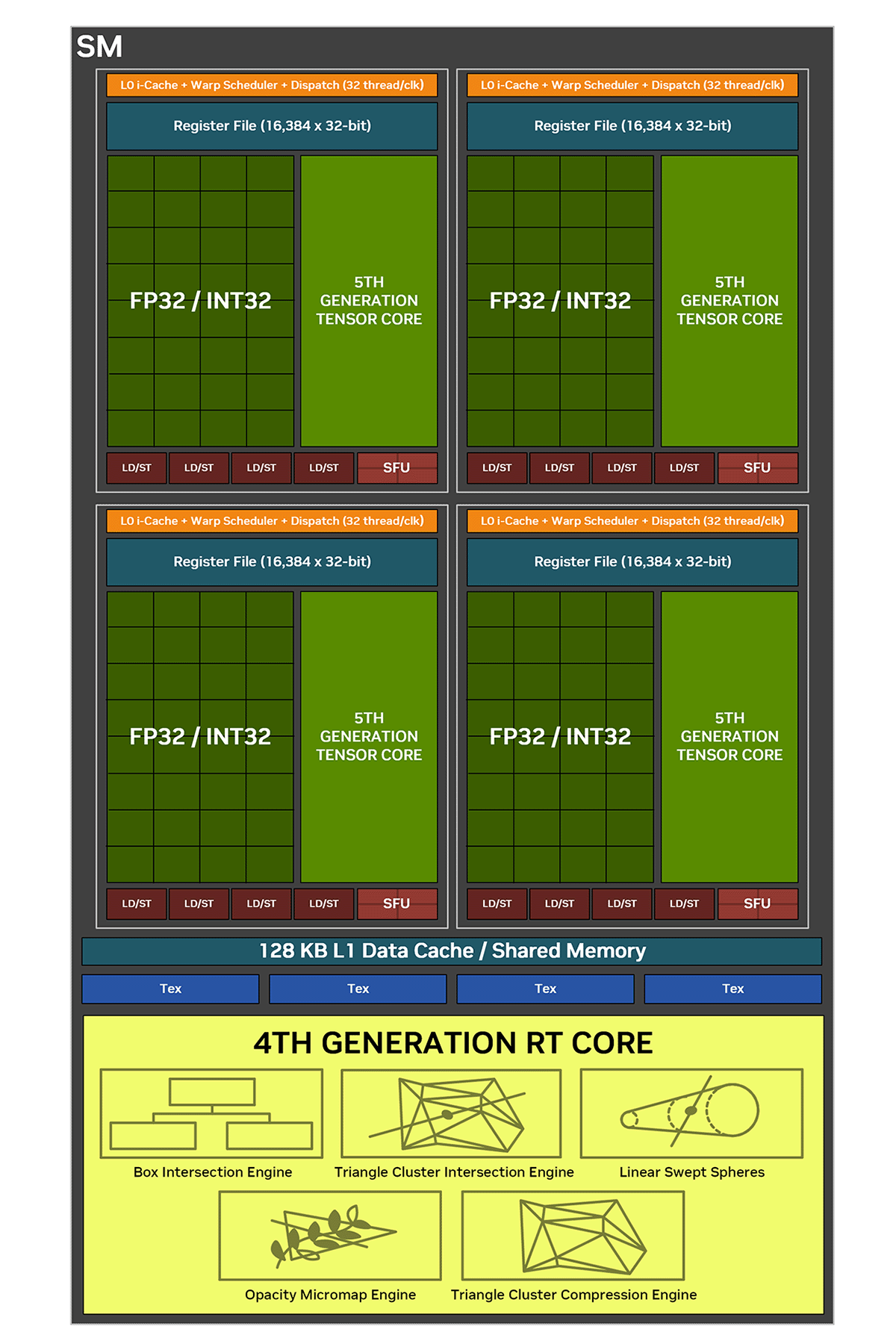

Zoom in on one of the 170 SMs resident on RTX 5090 and the Blackwell design continues tradition, running with 128 FP32 cores, one RT Core, four Tensor Cores, and four Texture Units, as found on RTX 40 Series.

Approaching shaders first, you may remember that RTX 40 Series, aka Ada, split each SM’s cores into 64 dedicated to FP32 and a further 64 capable of FP32 or INT32. RTX 50 Series carries the same number per SM, but all 128 can process floating point or integer, though not at the same time. The distinction is important insofar as games do use INT32, so core flexibility helps maximise performance by keeping the engines full to the brim with work.

Bigger changes are afoot for the other SM constituents. Let me explain how by way of the table below.

| | RTX 4090 | RTX 5090 | Increase | Gain per SM |
|---|---|---|---|---|
| CUDA FP32 TFLOPS (iso-clock) | 81.92 | 108.8 | 32.8% | Linear |
| RT TFLOPS | 191 | 317 | 66% | 2x |
| Tensor FP16 (FP32 Acc) | 330.3 | 419 | 26.9% | Linear* |
| Tensor FP8 | 1,321 | 1,676 | 26.9% | Linear* |
| Tensor FP4 | 1,321** | 3,352 | 153.7% | 4.7x |

** RTX 40 Series can run FP4, though it uses FP8 Tensor Units to do so

Assuming an identical frequency, RTX 5090 provides a linear increase in the CUDA FP32 throughput most closely associated with rasterised rendering. The actual gain is a little less as the new GPU is clocked a smidge lower.
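The first row of the table holds both chips at the same clock. Our inference is that 2.5GHz was used, as that value reproduces the 81.92 and 108.8 TFLOPS figures exactly; Nvidia doesn’t state it here, so treat it as an assumption. The sketch below recomputes that row and the percentage increases for the remaining rows from the table’s own numbers.

```python
# Iso-clock FP32: hold both GPUs at the same clock so only core counts differ.
ISO_CLOCK_GHZ = 2.5  # assumption: this clock reproduces the table's 81.92 and 108.8 TFLOPS

def fp32_tflops(cores: int, clock_ghz: float) -> float:
    return cores * 2 * clock_ghz / 1000  # 2 FLOPs (one FMA) per core per clock

def pct_increase(old: float, new: float) -> float:
    return (new / old - 1) * 100

iso_4090 = fp32_tflops(16_384, ISO_CLOCK_GHZ)
iso_5090 = fp32_tflops(21_760, ISO_CLOCK_GHZ)
print(f"Iso-clock FP32: {iso_4090:.2f} -> {iso_5090:.1f} TFLOPS "
      f"(+{pct_increase(iso_4090, iso_5090):.1f}%)")  # 81.92 -> 108.8 (+32.8%)

# Remaining rows of the table as (RTX 4090, RTX 5090) throughput figures.
rows = {
    "RT TFLOPS": (191, 317),
    "Tensor FP16 (FP32 acc)": (330.3, 419),
    "Tensor FP8": (1_321, 1_676),
    "Tensor FP4": (1_321, 3_352),
}
for label, (old, new) in rows.items():
    print(f"{label}: +{pct_increase(old, new):.1f}%")
```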

You’d expect the same to be true of the RT cores – a 33% increase when run at the same frequency – but this is not the case. Nvidia claims double the ray-triangle intersection testing ability, thus making RTX 50 Series more efficient at ray tracing. From an engineering point of view, this is Nvidia saying ray tracing is very much here to stay.

Those with a keen eye will realise the Triangle Cluster Intersection Engine and Linear Swept Spheres support are new RT Core additions. Their purpose is to hardware-accelerate a new RTX feature called Mega Geometry – available on all RTX 20 Series and above – and efficiently run the math behind calculating complicated structures such as hair. Put simply, baked into hardware, it’s an invitation for developers to speed up certain processing for more realistic-looking imagery.

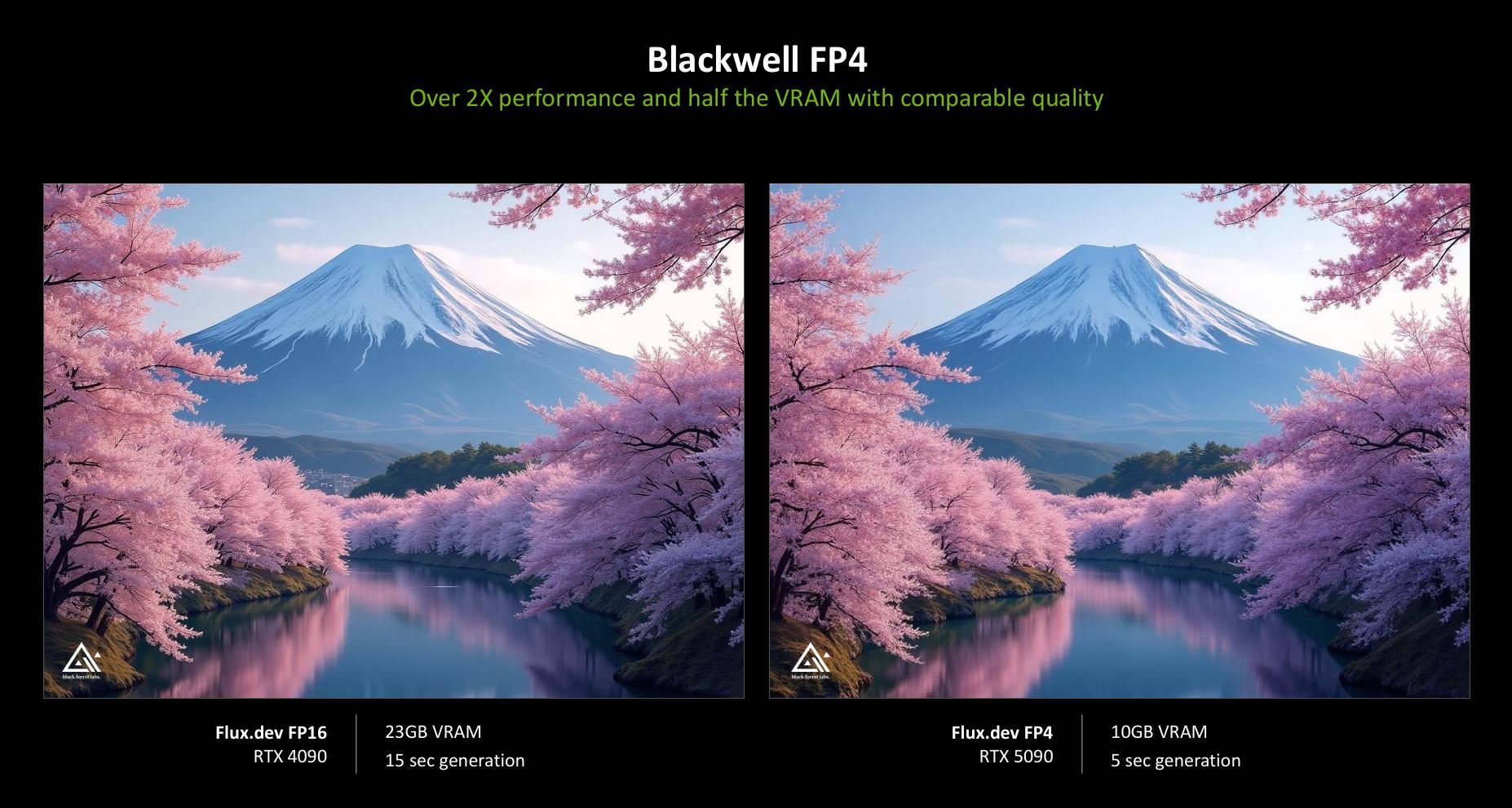

A key callout is RTX 50 Series’ ability to chomp through AI calculations. Part and parcel of the DLSS technology underpinning performance, they’re a big deal. Blackwell also introduces specific hardware support for FP4 (AI TOPS) operations, and this is how Nvidia claims startling increases over previous generations. Aimed toward generative AI, running FP4 typically halves the VRAM requirement and offers easily twice the speed of FP16, with little loss in fidelity.
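Why does a narrower number format save memory? A back-of-envelope sketch, assuming a hypothetical 8-billion-parameter model and counting weights only: storage scales with bits per parameter, so FP4 weights need a quarter of the FP16 footprint on paper. Models rarely run entirely in FP4, and activations and caches still need room, which is one plausible reason real-world savings quoted for FP4 sit closer to a halving.

```python
# Weights-only memory footprint: parameters x bits per parameter / 8 bits per byte.
# The 8-billion-parameter model is a hypothetical example, not a benchmark figure.
BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP4": 4}

def weight_footprint_gb(params: float, bits: int) -> float:
    """Decimal gigabytes needed just to store the weights (ignores activations, caches, etc.)."""
    return params * bits / 8 / 1e9

params = 8e9  # hypothetical 8B-parameter model
for fmt, bits in BITS_PER_PARAM.items():
    print(f"{fmt}: {weight_footprint_gb(params, bits):.0f} GB")
# FP16: 16 GB, FP8: 8 GB, FP4: 4 GB
```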

“Neural shaders are set to become the predominant form of shaders in games, and in the future, all gaming will use AI technology for rendering.”

Nvidia, January 2025

To be even-handed, if your Tensor Core (AI) workload runs at INT8, FP8 or FP16 precision, the gain from RTX 4090 to RTX 5090 is a far more modest 26.9%, which is less than the increase in card-wide cores. A probable explanation is that RTX 5090 runs its more numerous cores at a lower frequency to save power.

Eagle-eyed purveyors of GPU architecture will doubtless appreciate that the only meaningful place where RTX 5090 cedes ground to RTX 4090 is pixel fill-rate, determined by multiplying ROPs by clock frequency. Doing so gives the 40 Series card a slight advantage of 443.5 vs. 423.6 Gigapixels per second.
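The fill-rate sums are easy to check. This sketch assumes the usual convention of one pixel per ROP per clock and uses the boost clocks from the specification table; with ROP counts identical, the 4090’s higher clock decides it.

```python
# Pixel fill-rate (Gpixels/s) = ROPs x clock (GHz), assuming one pixel per ROP per clock.
def fill_rate_gpix(rops: int, boost_mhz: int) -> float:
    return rops * boost_mhz / 1000

print(f"RTX 4090: {fill_rate_gpix(176, 2_520):.1f} Gpix/s")  # 443.5
print(f"RTX 5090: {fill_rate_gpix(176, 2_407):.1f} Gpix/s")  # 423.6
```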

Multi-frame Generation

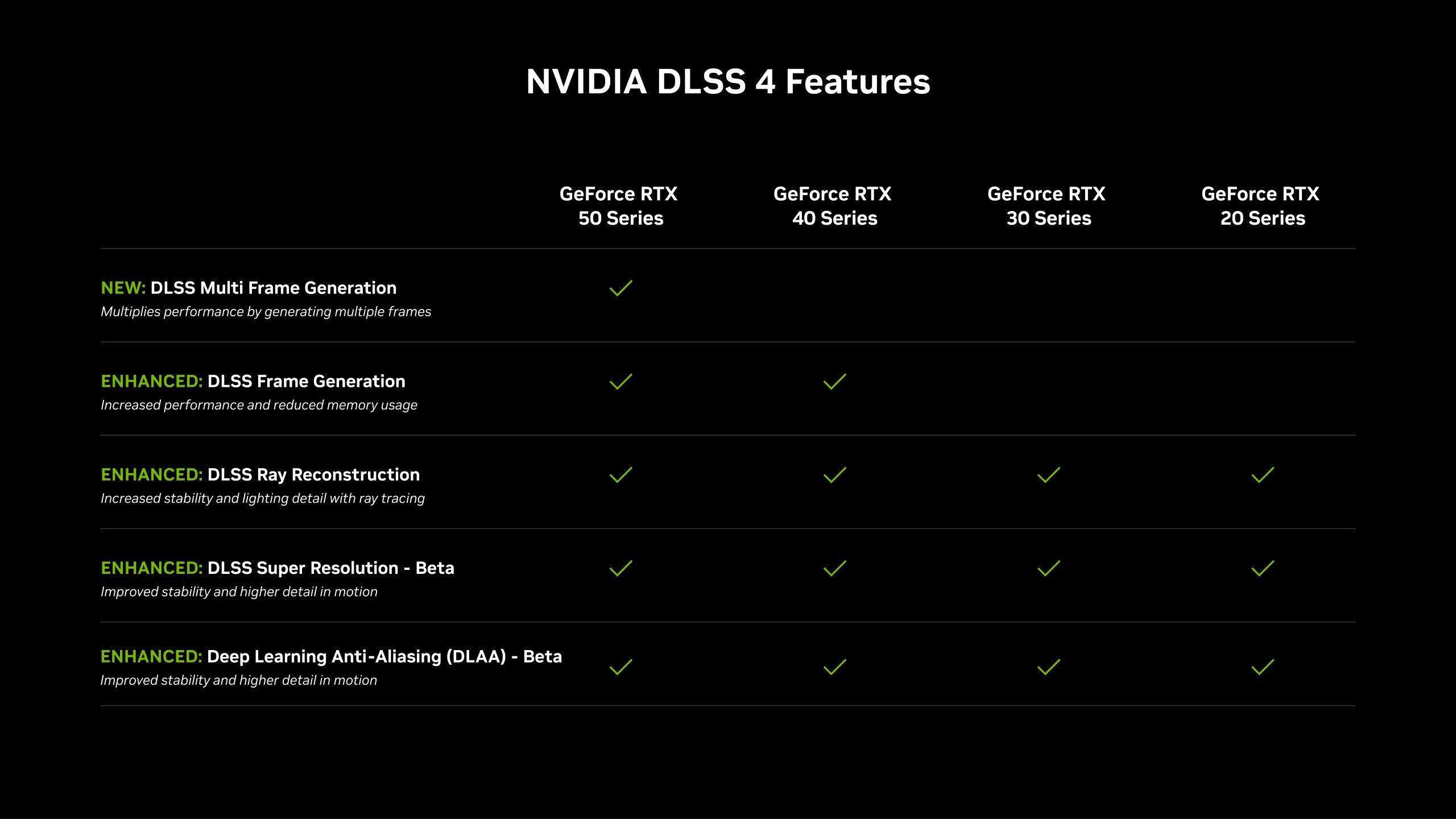

Nvidia’s Frame Generation technology is a key RTX 40 Series asset for boosting performance. Requiring developer support, it works by inserting an AI-created frame between traditionally rendered frames. Tensor Cores do most of the heavy lifting, working quickly enough to ensure seamless transition from raster to AI and back.

Knowing this area is ripe for further exploration, RTX 50 Series duly builds on the technology with Multi Frame Generation (MFG). This time around, though, up to three AI-generated frames are inserted after a regular raster frame, up from a single frame on the previous gen.

Part of DLSS 4, MFG is made possible by changes in the name of simplification. The hardware-based Optical Flow Accelerator used by RTX 40 Series Frame Generation is replaced by a deploy-once AI model capable of generating multiple frames. It’s 40% faster and requires 30% less VRAM, too. Going from dedicated hardware to Tensor Core-run processing opens up the tantalising opportunity of broadening MFG’s appeal in the future.

In its most performant form, MFG is run alongside DLSS (upscaling) and Ray Reconstruction (AI-generated pixels in complex ray-tracing environments) to output 15 out of every 16 pixels you see on the screen. Think about that for a second. One raster, 15 AI.
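The 15-in-16 figure falls out of two multiplications. A minimal sketch, assuming DLSS Super Resolution in Performance mode (which renders at half the output resolution on each axis, e.g. 1080p for a 4K display) stacked with 4x MFG:

```python
from fractions import Fraction

# Assumption: DLSS Performance mode renders at half width and half height (1/4 of output pixels).
rendered_pixels_per_frame = Fraction(1, 2) * Fraction(1, 2)
# 4x Multi Frame Generation: one rendered frame for every four displayed frames.
rendered_frames = Fraction(1, 4)

traditionally_rendered = rendered_pixels_per_frame * rendered_frames
ai_generated = 1 - traditionally_rendered
print(traditionally_rendered, ai_generated)  # 1/16 15/16
```

Using `Fraction` keeps the arithmetic exact, so the result reads as the same ratio Nvidia quotes rather than a rounded decimal.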

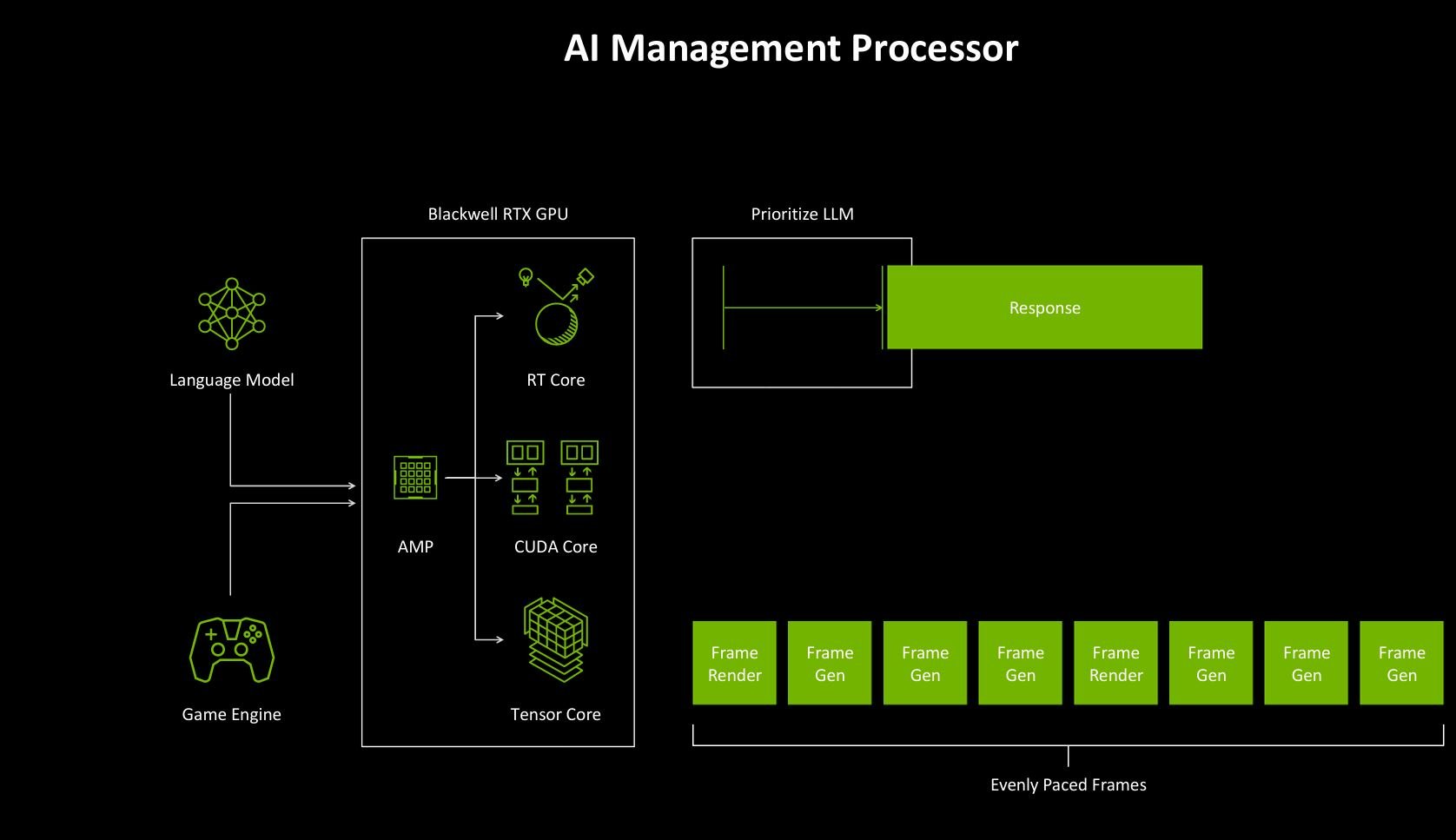

Chatting to engineers at a press event, I learned that Nvidia wanted to implement MFG on RTX 40 Series cards but couldn’t do so for technical reasons, the most pressing of which was the inability to pace MFG frames properly between rasterised ones. This makes sense, as the hardware needs to keep all this AI-generated traffic running smoothly and evenly, and any poorly timed frame delivery is immediately apparent to the gamer. Getting around this, RTX 50 Series has improved Flip Metering, which shifts frame-pacing duties from the CPU to dedicated hardware in the display engine.

There’s also a new AI Management Processor (AMP) whose job it is to schedule disparate RT, CUDA, and Tensor Core workloads smoothly. Consider the following scenario. You’re playing a game with multiple AI models running concurrently, tasked with everything from generating multiple frames through to running large language models (LLMs) powering unique dialogue from non-player characters. Then add in a sprinkling of ray tracing on top whilst taxing traditional CUDA cores to the limit.

Juggling these facets is no mean feat. The spinner of gaming plates, AMP is intended to keep everything in check, ensuring your NPC’s dialogue doesn’t interfere with Frame Generation or Ray Reconstruction.

Transformer Model

Up until today, DLSS used a pixel-generating methodology based on Convolutional Neural Networks (CNNs). Optimised over the previous six years, it’s as refined as the technology allows. Now, however, helping the DLSS cause is a more modern technique known as the Transformer Model. Developed by Google in 2017, this deep learning model is the gold standard for AI, adopted in popular applications such as ChatGPT and Gemini.

DLSS 4 Transformer requires more innate horsepower to run because it’s more complex. Nvidia cites twice the parameters and four times the compute when compared to CNN. Set against the backdrop of computer graphics, the new model carries noticeable image-quality benefits for DLSS Ray Reconstruction, Super Resolution, and DLAA.

Here’s an illustration of how Ray Reconstruction improves temporal stability and increases detail. I saw this demonstration firsthand at the same event. The effect is subtle enough that you don’t really notice it until it’s pointed out. But once it is, you immediately see the flaws in even the well-optimised CNN model.

The absolute kicker is that Nvidia isn’t tying Transformer Model’s IQ gains to RTX 50 Series alone. The company certainly could have, incentivising upgrades to the latest and greatest hardware, yet it’ll be available to all RTX cards. I know it’ll run well on recent GeForces such as the RTX 4080 Super, but I’m genuinely curious to see how it fares on older cards.

Of course, DLSS refinements like MFG and Transformer need developer implementation on a game-by-game basis. One simply cannot run them on every title. Key to success is how quickly Nvidia manages to get its host of technologies into all the leading games.

Other than a larger and more transistor-rich slab of silicon than has come before, my key takeaway from GeForce RTX 5090 is increased reliance on AI for smart rendering. The brute force approach only gets you so far – the GPU is as brutish as can be – so here’s Nvidia saying that subsequent gaming architectures will double down on Tensor cores and technologies akin to MFG.

I strongly feel this approach is the only sane way of instigating fundamental step changes in gaming performance – wait until you see MFG in action on Alan Wake 2 and Cyberpunk 2077 – alongside upscaling IQ improvements emanating from using better models.

Memory and Titan

Nvidia’s well aware that more powerful and numerous SM units require significantly more memory bandwidth than is available to even RTX 4090. Two key changes are enacted. Compared to its predecessor, bus width increases from 384 bits to 512 bits and memory speed from 21Gb/s to 28Gb/s – the latter made possible by the move from GDDR6X to GDDR7. Combining the two lends RTX 5090 a 78% on-paper bandwidth advantage, together with a frame buffer that at 32GB is 33% larger.

Adding more of everything inevitably leads to higher power consumption, too, notwithstanding GDDR7’s better energy efficiency at any given frequency over its predecessor. Accordingly, Nvidia states a lofty 575W TGP, representing a 28% increase over the already hot and bothered RTX 4090. I wonder what it would have been if GDDR6X were still in service?

Though I’ve dismissed talk of an RTX 50 Series Titan card carrying the full GB202 die, I can’t help but conjecture. Run at the same frequencies as RTX 5090, such a card would, I hazard, need higher voltage to drive all 192 SMs. A Titan pulling 750W certainly isn’t out of the question. This is all academic until, and very much if, Nvidia pulls one out of the hat for no other reason than it can.

Other Titbits

Video encoding and decoding ability varies across the RTX 50 Series. Top dog RTX 5090 is equipped with three encoders and two decoders. RTX 5080 has two of each, RTX 5070 Ti uses two encoders and one decoder, while RTX 5070 slims it all the way down to one of each. They all support the 4:2:2 format, which carries twice the colour data of 4:2:0, making it a good fit for videos that feature lots of hues and contrasting imagery. Furthermore, Nvidia says GeForce RTX 5090 can export video 60% faster than GeForce RTX 4090 and at 4x the speed of GeForce RTX 3090.
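“Twice the colour data” refers to chroma samples; brightness (luma) detail is identical in both formats. In 4:2:0, chroma is stored at half resolution horizontally and vertically, whereas 4:2:2 halves it horizontally only. A small sketch counting samples in a 4K UHD frame:

```python
# Samples per frame for a given chroma subsampling scheme.
# 4:2:0 halves chroma resolution horizontally and vertically; 4:2:2 halves it horizontally only.
def samples_per_frame(width: int, height: int, scheme: str) -> dict:
    luma = width * height
    chroma_fraction = {"4:4:4": 1, "4:2:2": 1 / 2, "4:2:0": 1 / 4}[scheme]
    chroma = 2 * int(luma * chroma_fraction)  # two chroma planes (Cb and Cr)
    return {"luma": luma, "chroma": chroma}

uhd_420 = samples_per_frame(3840, 2160, "4:2:0")
uhd_422 = samples_per_frame(3840, 2160, "4:2:2")
print(uhd_422["chroma"] / uhd_420["chroma"])  # 2.0
```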

A natural bump in specification and throughput means PCIe 5.0 is the conduit between card and system. I don’t imagine much performance improvement over PCIe 4.0 because the older standard has plenty of bandwidth.

Founders Edition

Before I get to the all-important benchmarks, it’s absolutely worth taking a moment to reflect on the beautiful engineering of RTX 5090 Founders Edition. This, for me, supplants RTX 4070 Super as the prettiest card Nvidia has ever produced.

And make no mistake, this isn’t a case of form over function. Rather, this is a design years in the making, and one that rectifies multiple shortcomings of unwieldy, gargantuan RTX 4090.

Measuring 304mm x 137mm, RTX 5090 FE is exactly the same length and height as its predecessor but slims from a three-slot to two-slot form factor. The result is dramatic: a far sleeker product better suited to a wider range of cases. I didn’t find the chunky RTX 4090 particularly offensive, but it looks comically large by comparison.

The ability to increase TGP and decrease overall thickness is a neat trick, made possible by a wholly new cooling setup based around a dual-blowthrough configuration. Both fans showcase ultra-tight tolerances and funnel air through fin stacks whose surface is subtly curved to ensure the longest fins are where airflow is greatest, while angled vents on the card’s thinner sides help prevent hot air from re-entering from below.


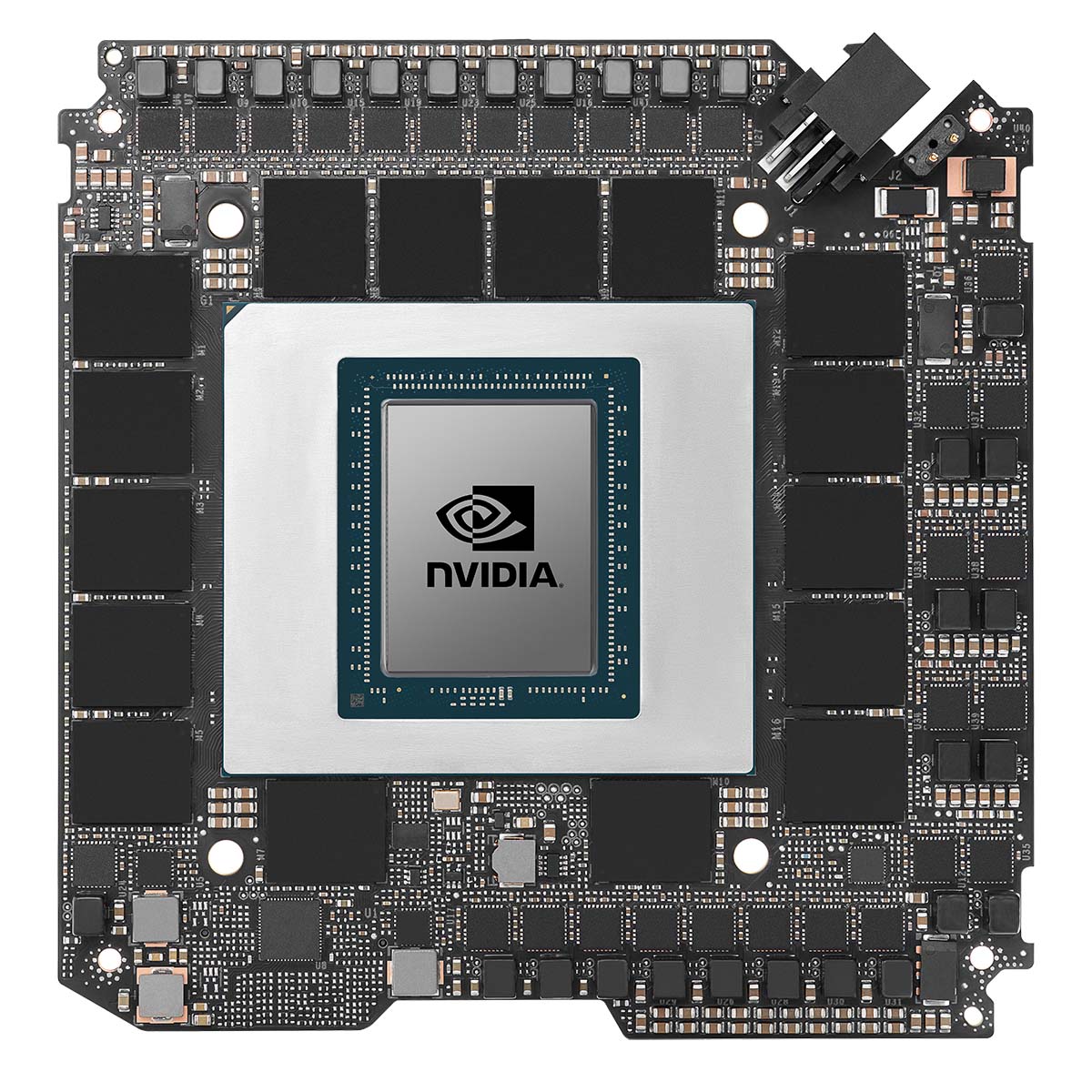

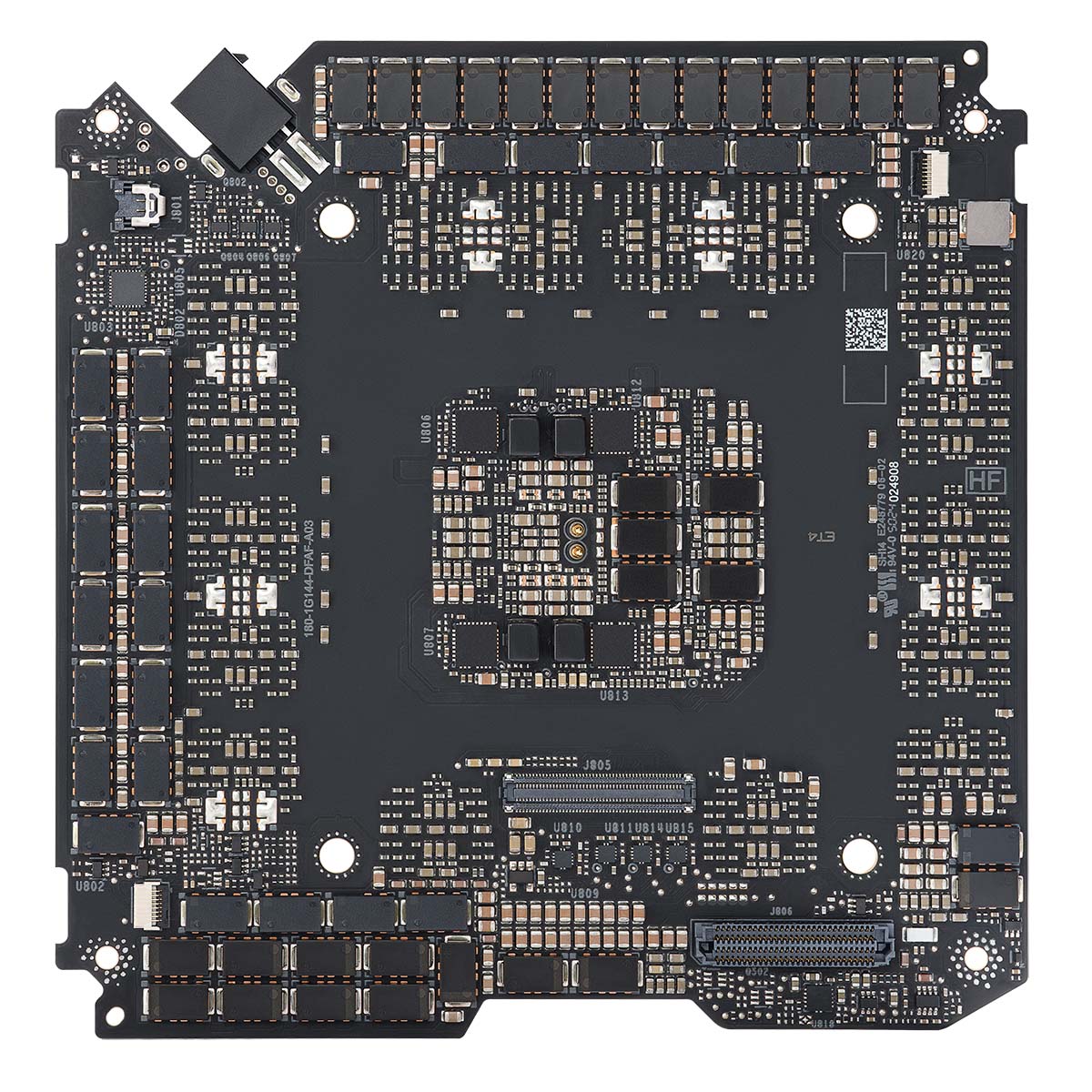

Airflow is but one part of the puzzle. Beneath that shiny, curvy, all-metal shell is a central PCB, behind the X pattern as you look at it. Densely packed with 32GB of memory, this is Nvidia’s smallest range-topping PCB to date, and unique insofar as its central position means connectivity to IO and PCIe takes an unusual approach.

This time around, a proprietary internal cable attaches PCB to PCIe connector, which is clamped in place using a series of screws. Similarly, the IO ports connect to the PCB via a ribbon cable tasked with driving three DisplayPort 2.1b and a single HDMI 2.1b. All told, the card, in tandem with display stream compression (DSC), can drive up to 4K 12-bit HDR at 480Hz or 8K 12-bit HDR at 165Hz.
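Why is display stream compression needed at all? A rough sketch of the uncompressed pixel payload, assuming full RGB (three 12-bit channels) and ignoring blanking intervals and link-encoding overhead, so real requirements would be higher still. For comparison it uses 80Gb/s, DisplayPort 2.1’s top UHBR20 raw link rate (four lanes at 20Gb/s).

```python
# Uncompressed video payload in Gb/s: width x height x refresh rate x bits per pixel.
# Ignores blanking intervals and link-encoding overhead, so real requirements are higher still.
def uncompressed_gbps(width: int, height: int, hz: int, bits_per_channel: int) -> float:
    bits_per_pixel = 3 * bits_per_channel  # RGB / 4:4:4, three channels
    return width * height * hz * bits_per_pixel / 1e9

DP21_UHBR20_RAW_GBPS = 80  # four lanes x 20 Gb/s

modes = {"4K 12-bit 480Hz": (3840, 2160, 480, 12),
         "8K 12-bit 165Hz": (7680, 4320, 165, 12)}
for label, mode in modes.items():
    need = uncompressed_gbps(*mode)
    print(f"{label}: {need:.1f} Gb/s, {need / DP21_UHBR20_RAW_GBPS:.1f}x the UHBR20 link rate")
```

Both modes need well over the raw link rate (roughly 143Gb/s and 197Gb/s respectively), which is why DSC is part of the equation.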

The intrigue doesn’t stop there. Nvidia’s 750mm² GPU is now coated with a thin layer of liquid metal as opposed to conventional thermal interface material (TIM). In an effort to maximise performance and longevity, the GPU is surrounded by a slim rubber ring to prevent leakage, and the contact plate is nickel-plated to eliminate any unwanted corrosion and subsequent drying out.

Speaking of the contact plate, the customary copper block is now a purpose-built vapour chamber whose heatpipes extend horizontally through the fin stacks either side of the central PCB. It’s an intricate setup that shows the lengths Nvidia has gone to in order to shoehorn a 575W chip into a dual-slot design. The drawback is that add-in-board partners with simpler triple- or quad-fan configurations are going to be humongous by comparison. Expect custom 5090s to occupy four or even five slots.

Power is sourced via a tweaked 12VHPWR connector that feels a tad more secure. Nvidia includes a four-way, eight-pin splitter as part of the bundle, and even that is upgraded, with a solid reinforcement block doing a better job of preventing flex than the old piece of tape. Handy to have, though you’ll want a modern PSU with an available 12VHPWR header; a single cable is so much tidier, and less likely to obstruct the backlit GeForce RTX logo.

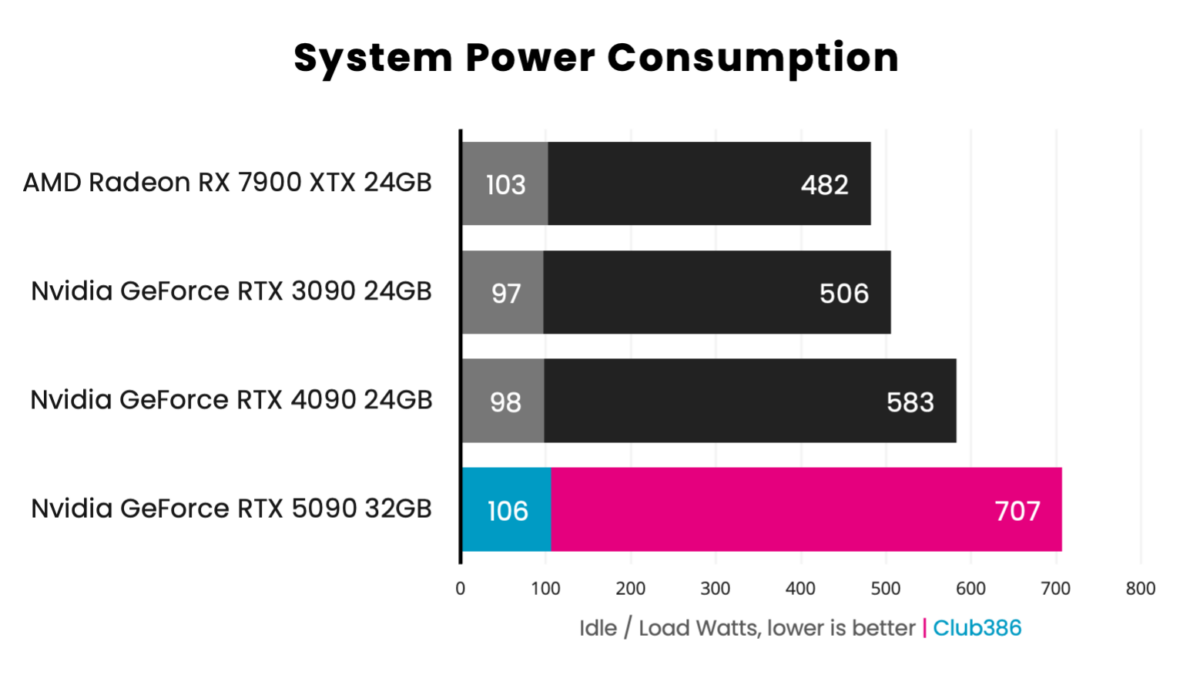

It’s impressive to see a 575W chip tamed by such a sleek cooler, yet there are ramifications. To my memory this is the most power-hungry GeForce graphics card ever produced, and in an era of high energy bills, it’s worth knowing the true cost of ownership.

Installed in a high-end test platform, system-wide power consumption balloons to 707W when gaming. Putting that into perspective, at the current UK energy price cap rate of £0.25 per kWh, a single six-hour gaming session adds £1.05 to your bill. Play a conservative six hours a week and you’ll pay £54.85 in electricity for the year. Stay glued to the screen three hours a day, every day, and your annual ‘leccy cost will hit £191.93 for the PC alone.
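The running-cost maths is simple enough to check at home. This sketch makes two assumptions of its own: an unrounded unit rate of 24.86p per kWh sitting behind the quoted £0.25, and a 364-day year of 52 whole weeks. With those, the results land within a penny of the figures above; plug in your own tariff to see your bill.

```python
# Running-cost estimate: kWh = watts x hours / 1000; cost = kWh x unit rate.
SYSTEM_WATTS = 707          # measured system draw while gaming (from the text)
RATE_GBP_PER_KWH = 0.2486   # assumption: unrounded rate behind the quoted £0.25
WEEKS_PER_YEAR = 52         # assumption: a 364-day year of whole weeks

def cost_gbp(hours: float, watts: float = SYSTEM_WATTS, rate: float = RATE_GBP_PER_KWH) -> float:
    return watts * hours / 1000 * rate

session = cost_gbp(6)                           # one six-hour session
weekly_habit = cost_gbp(6 * WEEKS_PER_YEAR)     # six hours a week for a year
daily_habit = cost_gbp(3 * 7 * WEEKS_PER_YEAR)  # three hours a day for a year
print(f"£{session:.2f}  £{weekly_habit:.2f}  £{daily_habit:.2f}")  # £1.05  £54.84  £191.93
```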

Extreme power usage is reflected in Nvidia recommending at least a 1,000W PSU, yet also serves to showcase the robustness of Founders Edition cooling. In-game temperature peaked at a balmy 73°C throughout our testing, and fan noise remained below 40dB. Mighty impressive results for a dual-slot card.

I’m of the opinion no consumer graphics card should fetch £1,939 – it alone costs more than my current rig! – yet if you are able to afford an RTX 5090 Founders Edition, I will concede this feels like an ultra-premium product. From unboxing to installation, it’s an exquisite piece of kit and a joy to handle.

The bad news? Well, you’ll do well to ever lay hands on one. This isn’t just the fastest, most beautiful graphics card on the market, it’s also a 32GB AI and content creation accelerator, making it highly sought after by a vast audience. News of stock shortages has already spread, but I’d go as far as to say RTX 5090 Founders Edition will be hard to come by for the entirety of 2025.

Performance

Our 7950X3D Test PCs

Club386 carefully chooses each component in a test bench to best suit the review at hand. When you view our benchmarks, you’re not just getting an opinion, but the results of rigorous testing carried out using hardware we trust.

Test platform components:

CPU: AMD Ryzen 9 7950X3D

Motherboard: MSI MEG X670E ACE

Cooler: Arctic Liquid Freezer III 420 A-RGB

GPU: Sapphire Nitro+ Radeon RX 7800 XT

Memory: 64GB Kingston Fury Beast DDR5

Storage: 2TB WD_Black SN850X NVMe SSD

PSU: be quiet! Dark Power Pro 13 1,300W

Chassis: Fractal Design Torrent Grey

New year, new start. I’ve tested RTX 5090, 4090 and 3090 all from scratch for your benchmark perusal, and I’ve also re-tested the best Radeon of today, RX 7900 XTX. This is a heavyweight showdown fit for the modern era, today’s equivalent of the fight of the century. Let’s get ready to rumble.

Application & AI

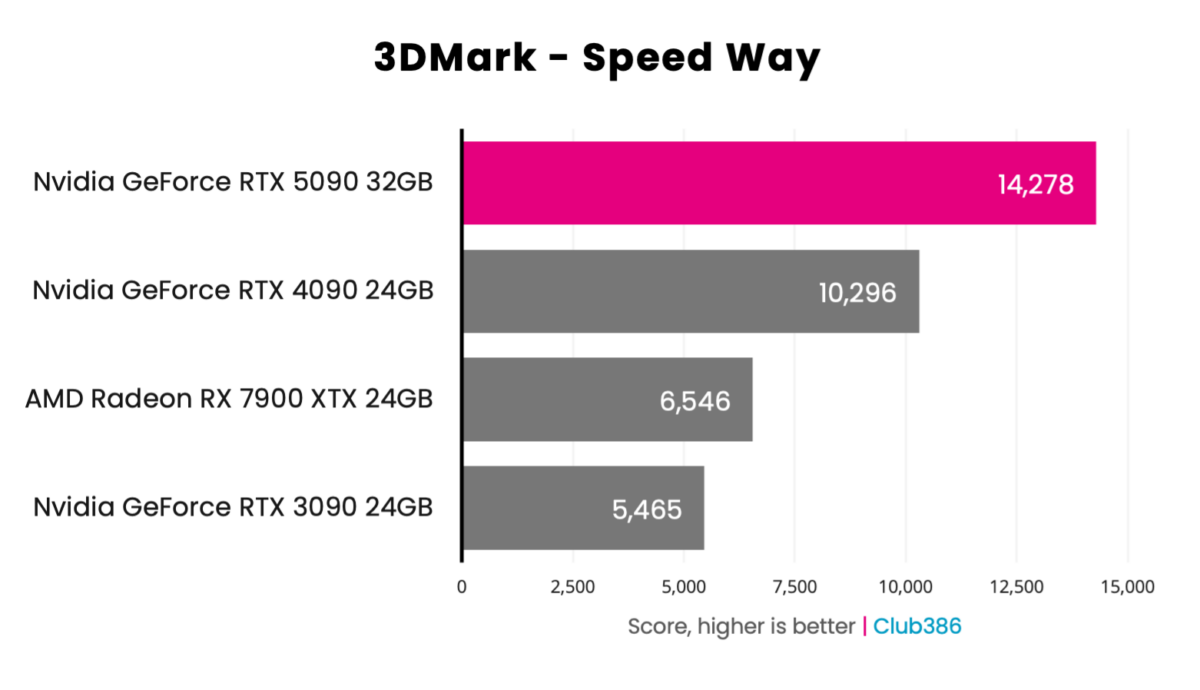

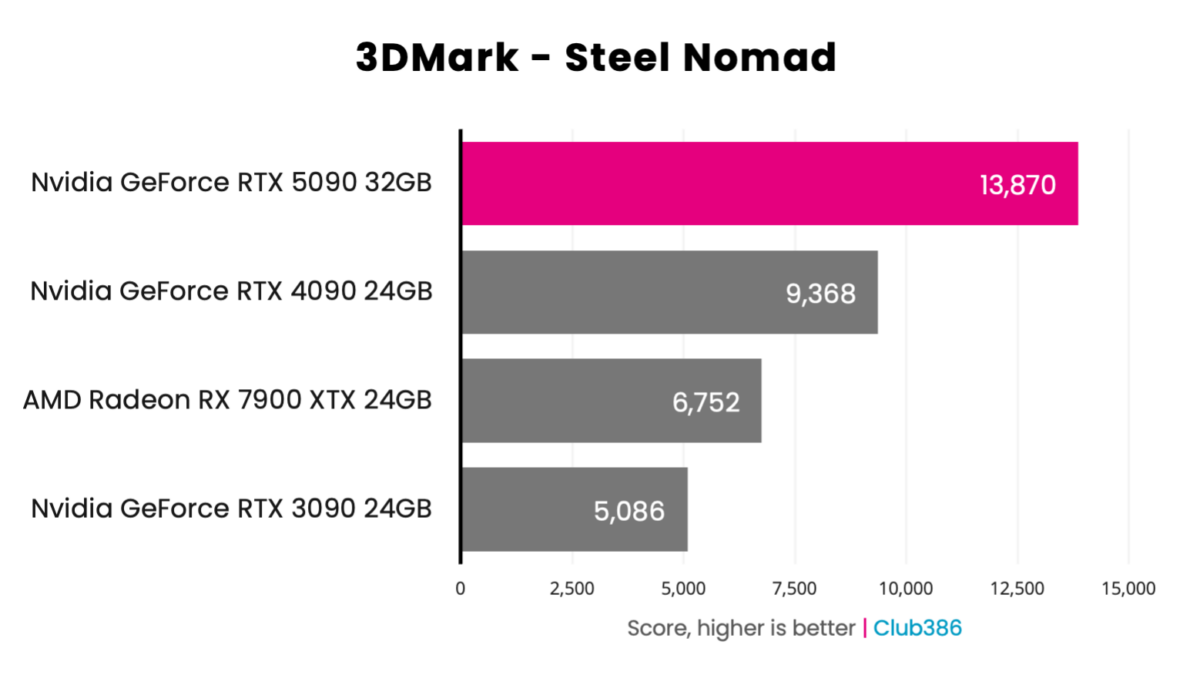

What better place to start than industry-standard 3DMark? A 39% uptick over already-blistering RTX 4090 is eye-opening, while the likes of RX 7900 XTX and RTX 3090 cower in the corner.

High-end Speed Way or non-raytraced Steel Nomad? Whichever medicine you take, RTX 5090 administers a whole new level of dosage. 173% faster than RTX 3090, 105% faster than RX 7900 XTX, and 48% faster than RTX 4090.

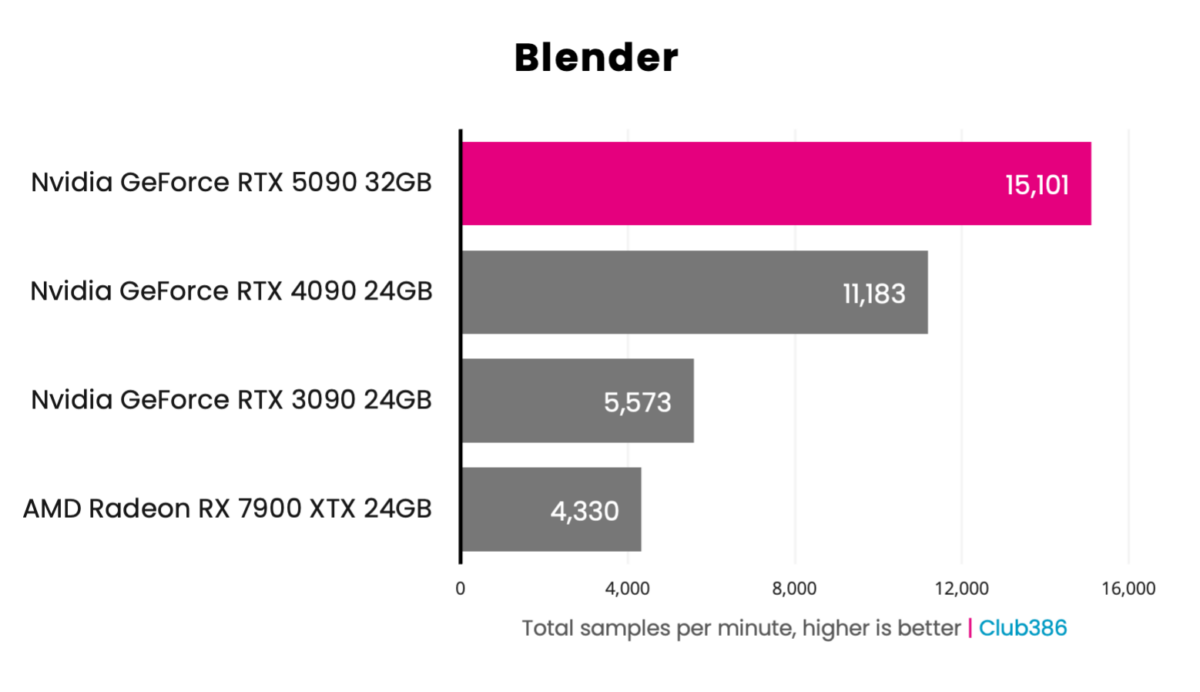

Don’t want to alarm you, fellow gamers, but you’ll be vying for a place in the queue alongside content creators. The previous best do-it-all graphics card is soundly beaten to the tune of 35% in Blender.

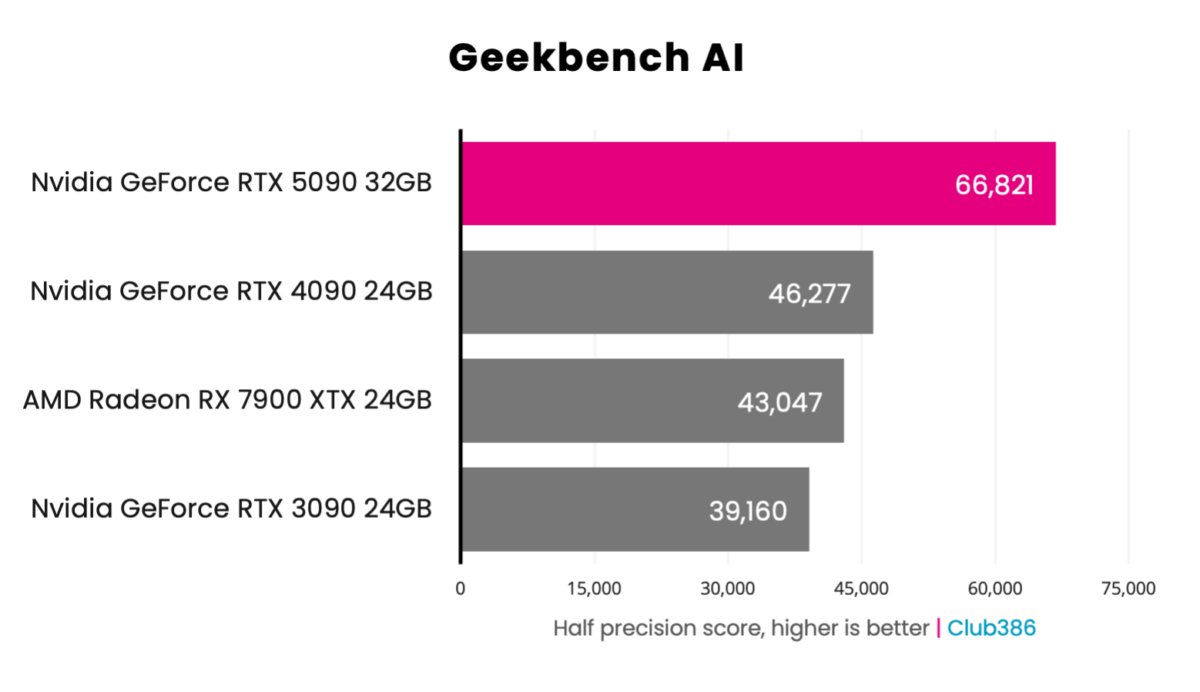

Ever heard of that thing called AI? Well, yep, everyone who’s anyone wanting to get a jumpstart on artificial intelligence will also be in the market for RTX 5090. It is the new standard bearer.

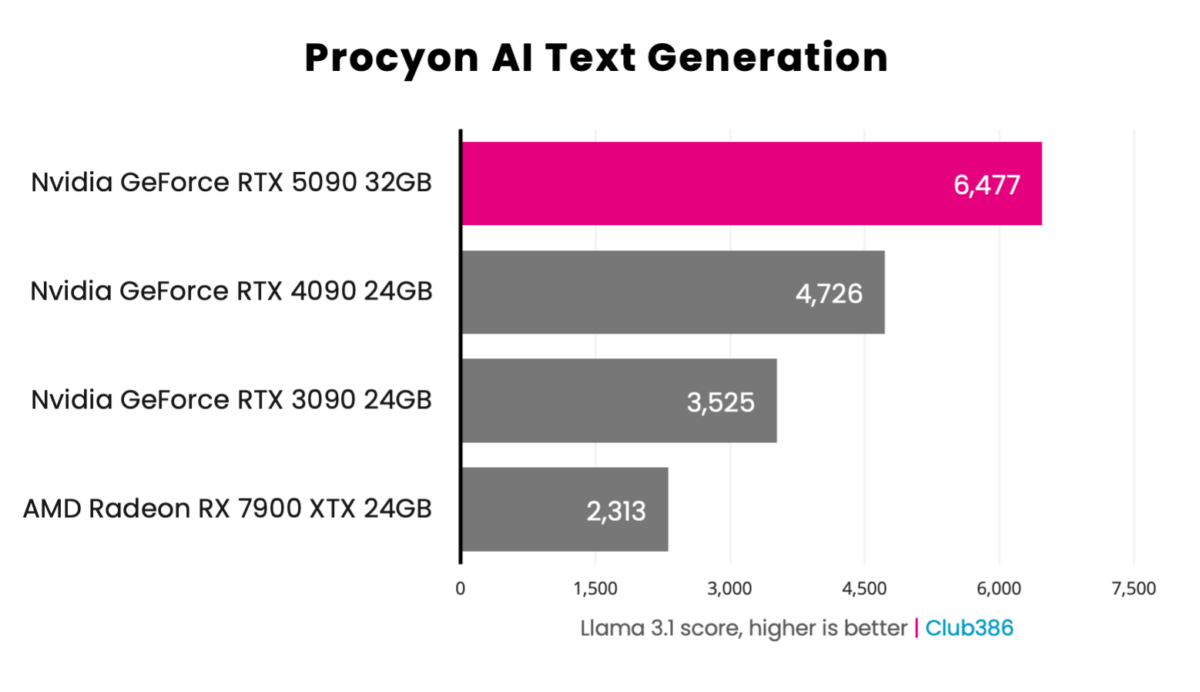

Signalling real-world implications of the card’s onboard AI chops, Procyon’s AI Text Generation benchmark tests multiple large language models for measuring the inference performance of on-device accelerators. I’m charting the newest Llama 3.1 model and seeing a near-40% uplift over RTX 4090.

Game Rasterisation

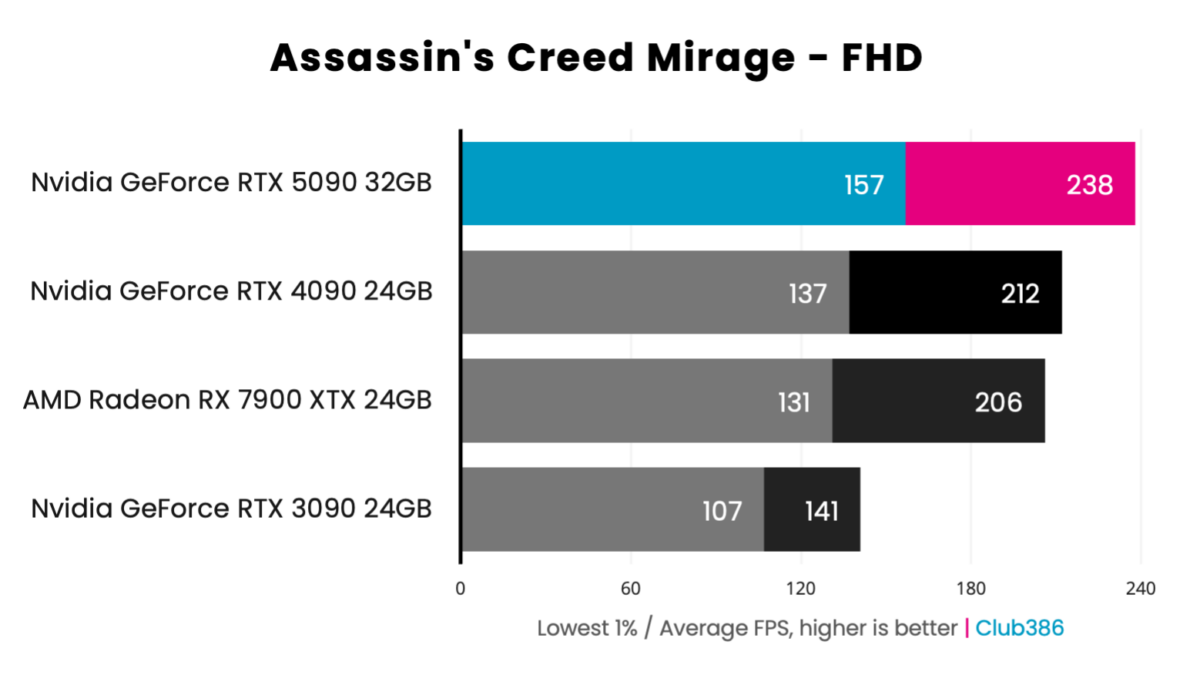

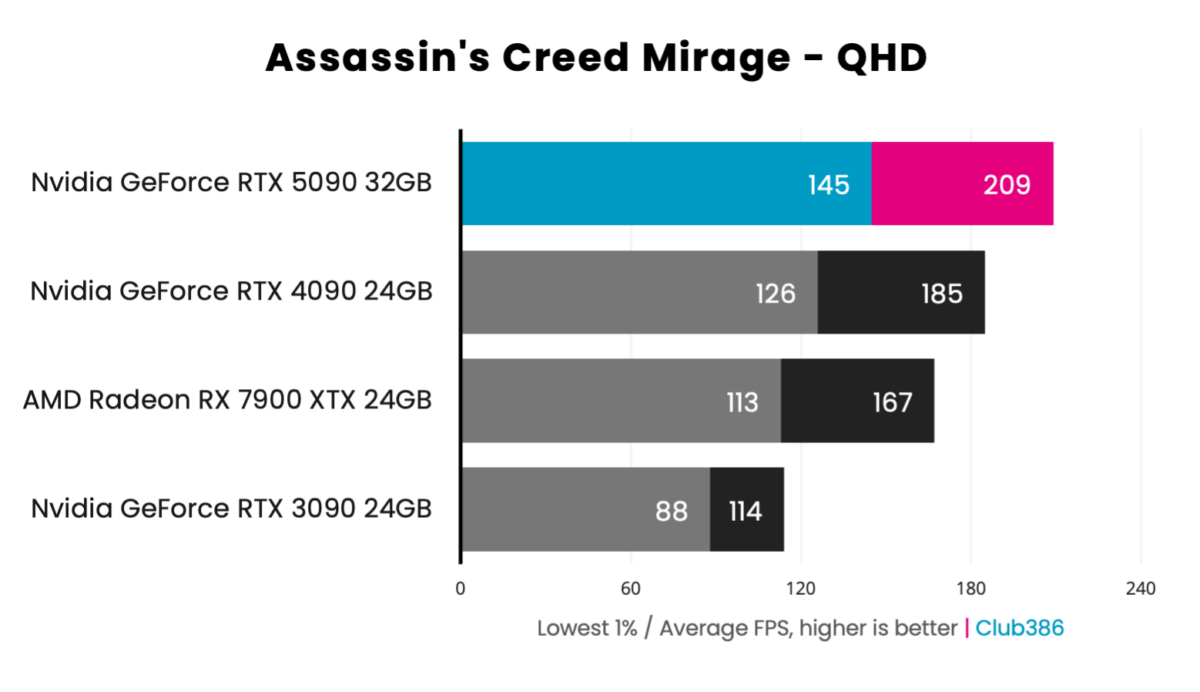

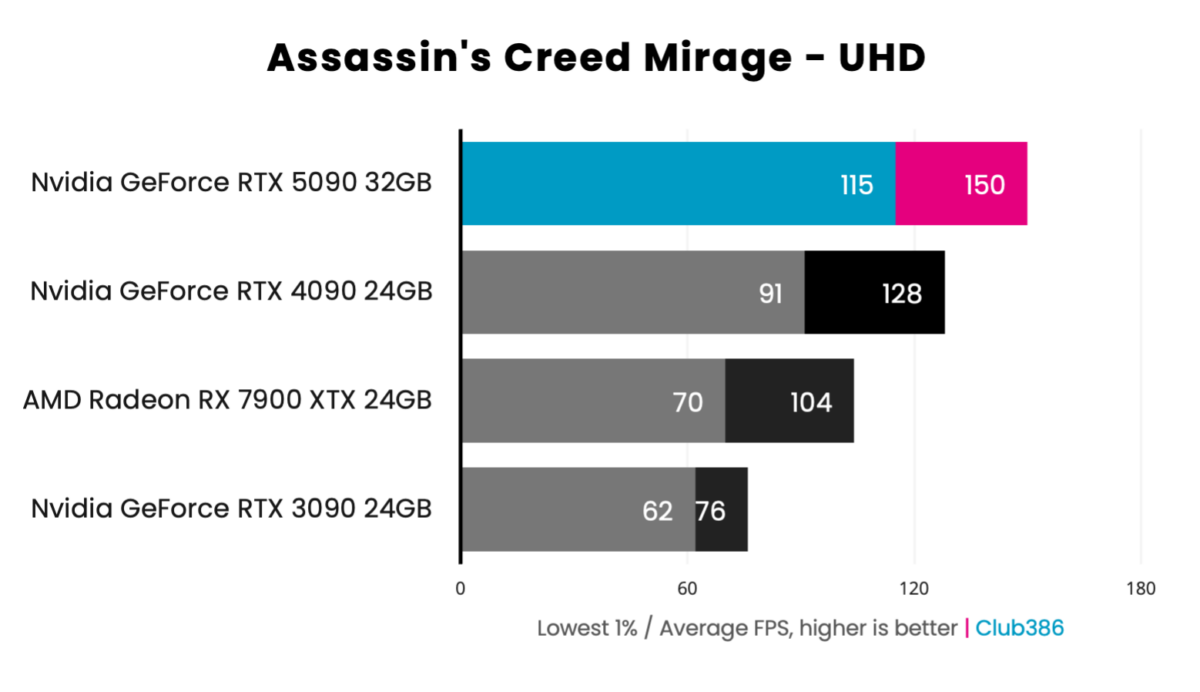

I hear you, it’s games you care about. There’s plenty to this tale, and I’ll begin with good ol’ fashioned rasterisation and some light ray tracing across a slew of modern titles at three popular resolutions – 1920×1080 (FHD), 2560×1440 (QHD) and 3840×2160 (UHD).

Expecting a little more frame rate for your buck? A 33% hike in both cores and memory translates to only a 17% performance improvement in Assassin’s Creed Mirage at 4K UHD.

It’s hard to quibble with 150 frames per second at the highest resolution with image quality dialled up to maximum, yet for simple gaming’s sake, a Radeon RX 7900 XTX can muster over 100fps and costs half as much.

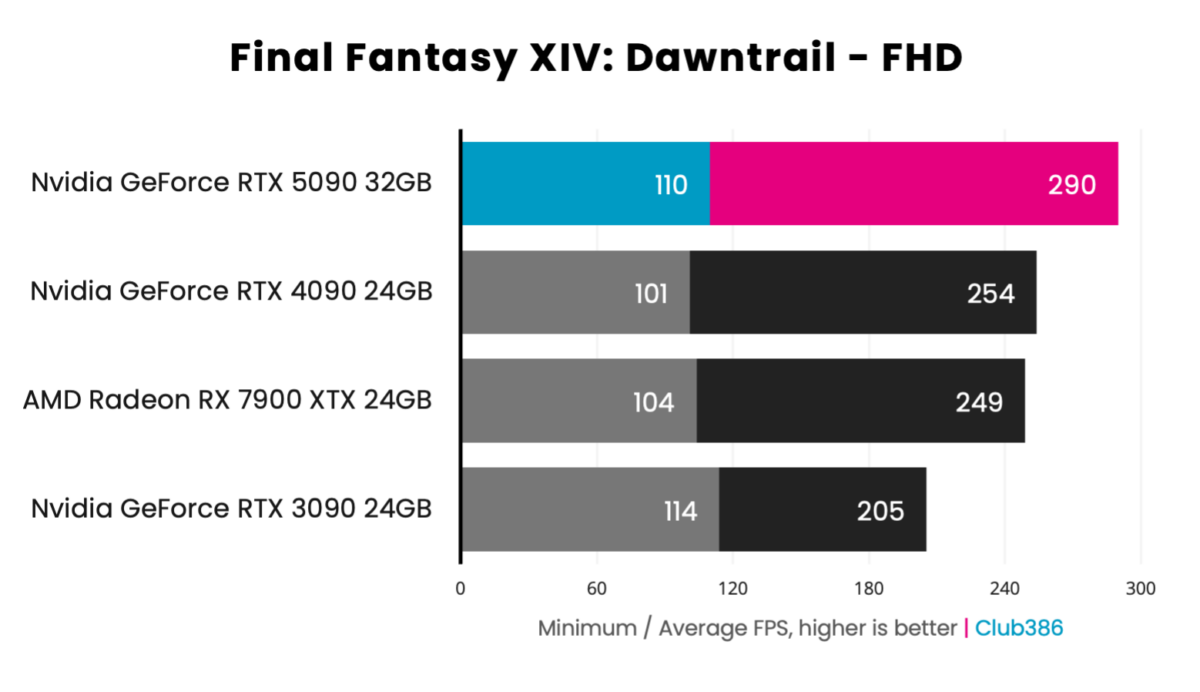

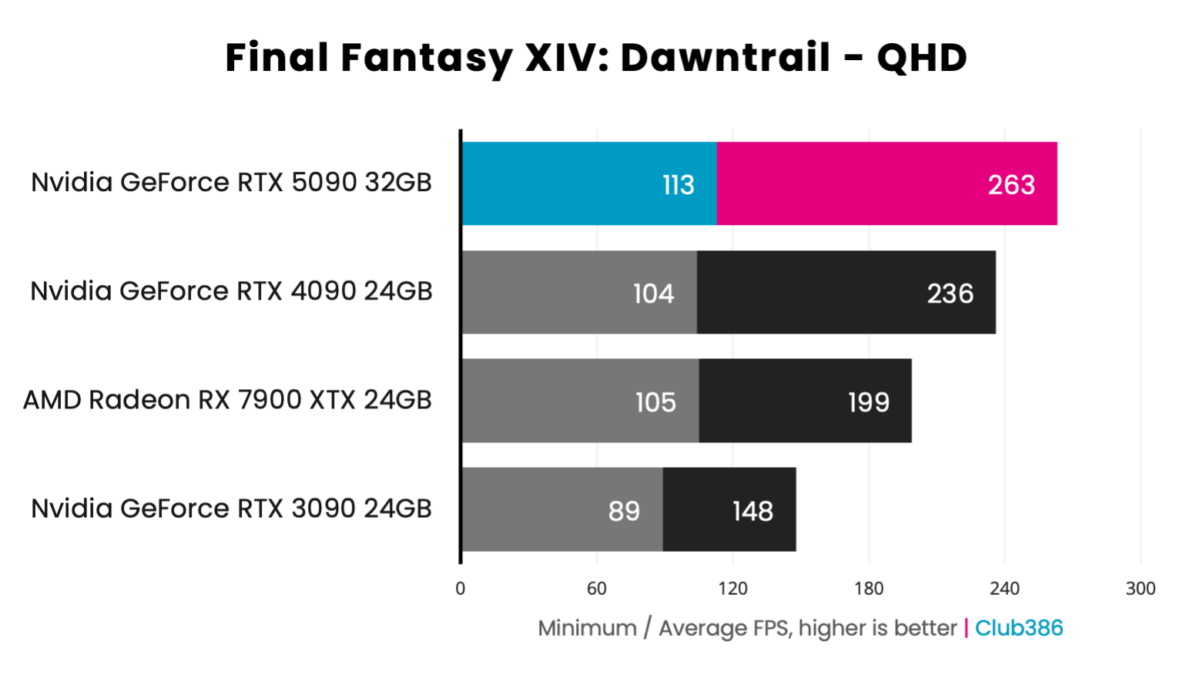

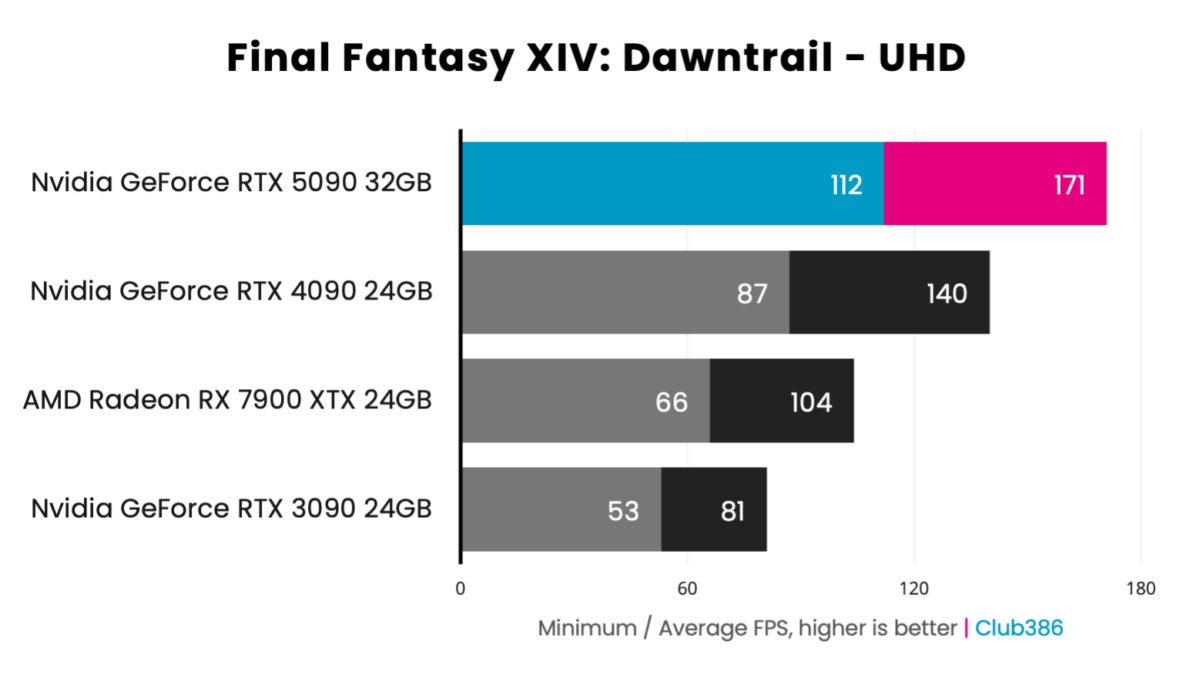

Final Fantasy XIV: Dawntrail delivers a similar in-game performance bump. RTX 5090 naturally takes its place at the top of the chart, but pure rasterisation isn’t a giant leap from 2022’s RTX 4090.

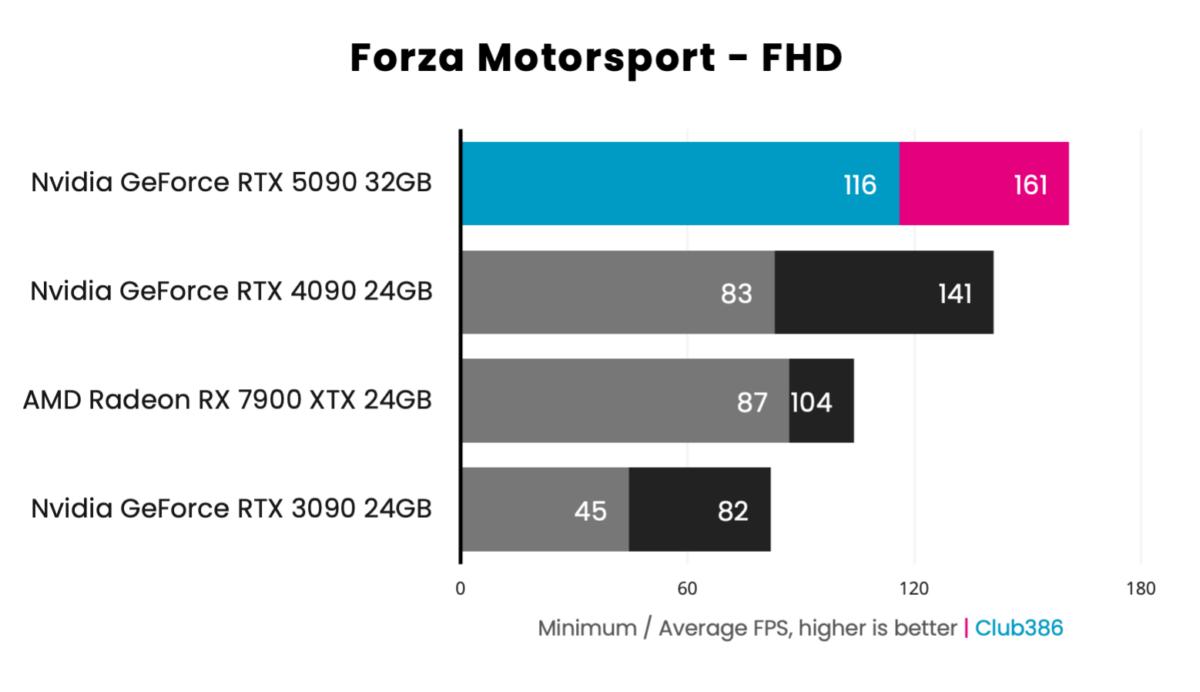

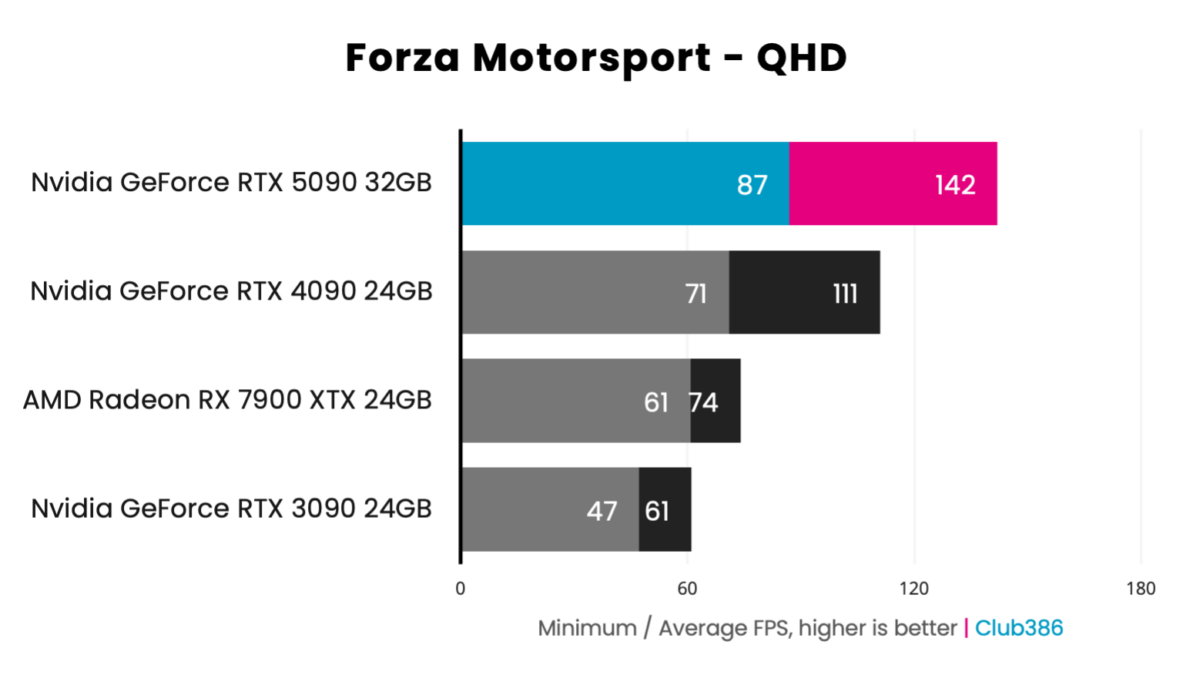

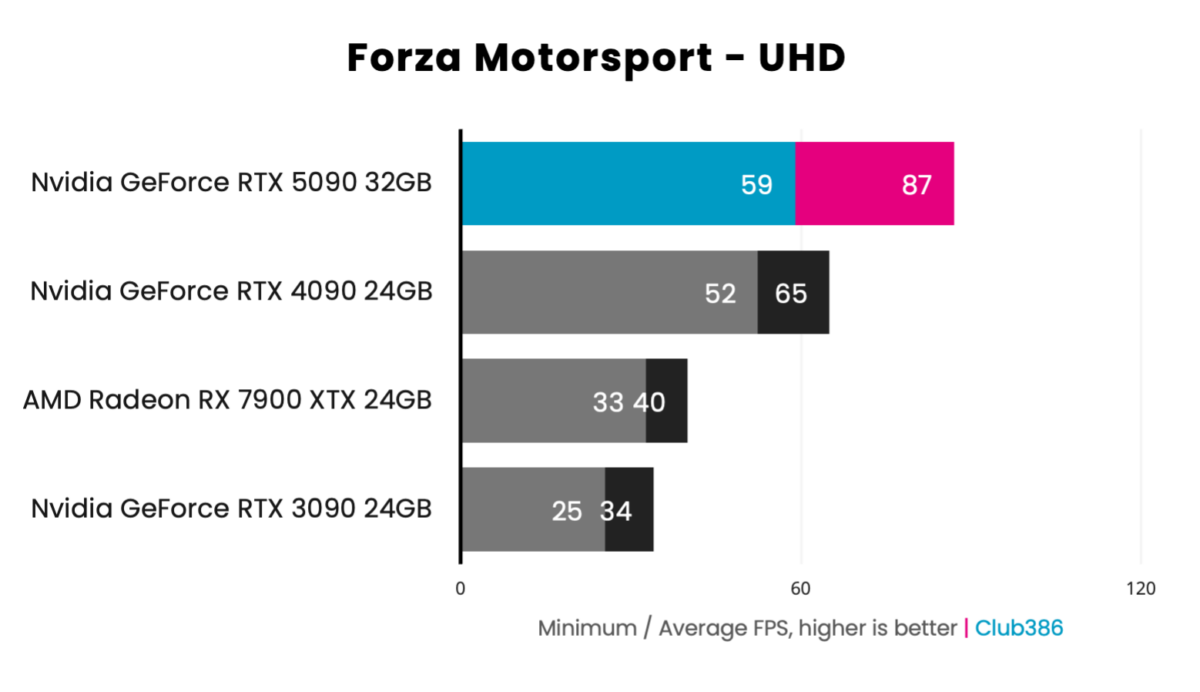

As a console-first title, Forza Motorsport is surprisingly punishing on high-end GPUs. With image quality cranked right up, even RTX 5090 can’t break the 100fps barrier at 4K UHD. Still, if you’re wondering where to go from your RTX 3090, Nvidia’s latest delivers meaningful gain; the game goes from stuttery to buttery.

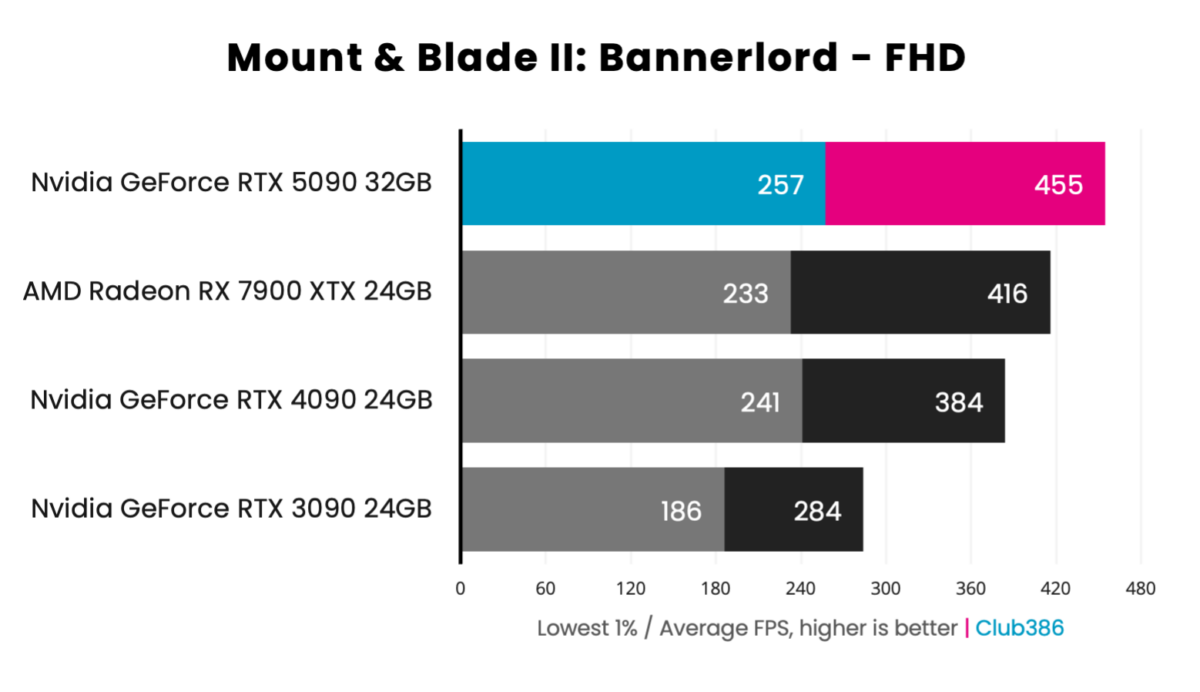

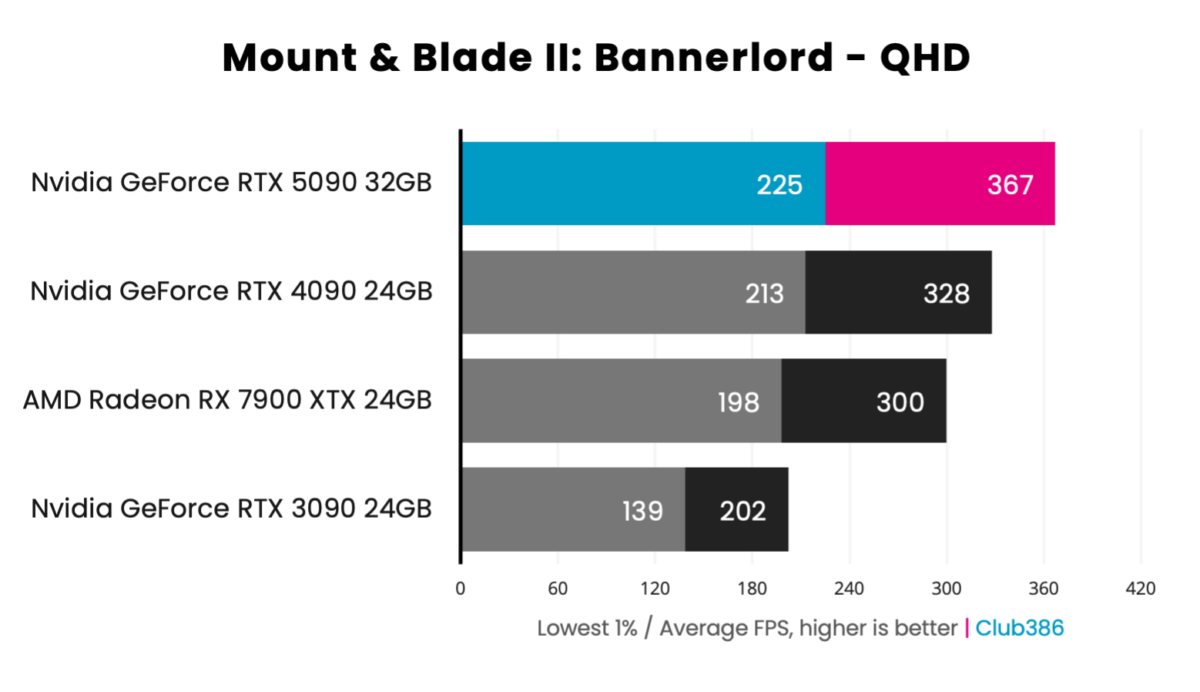

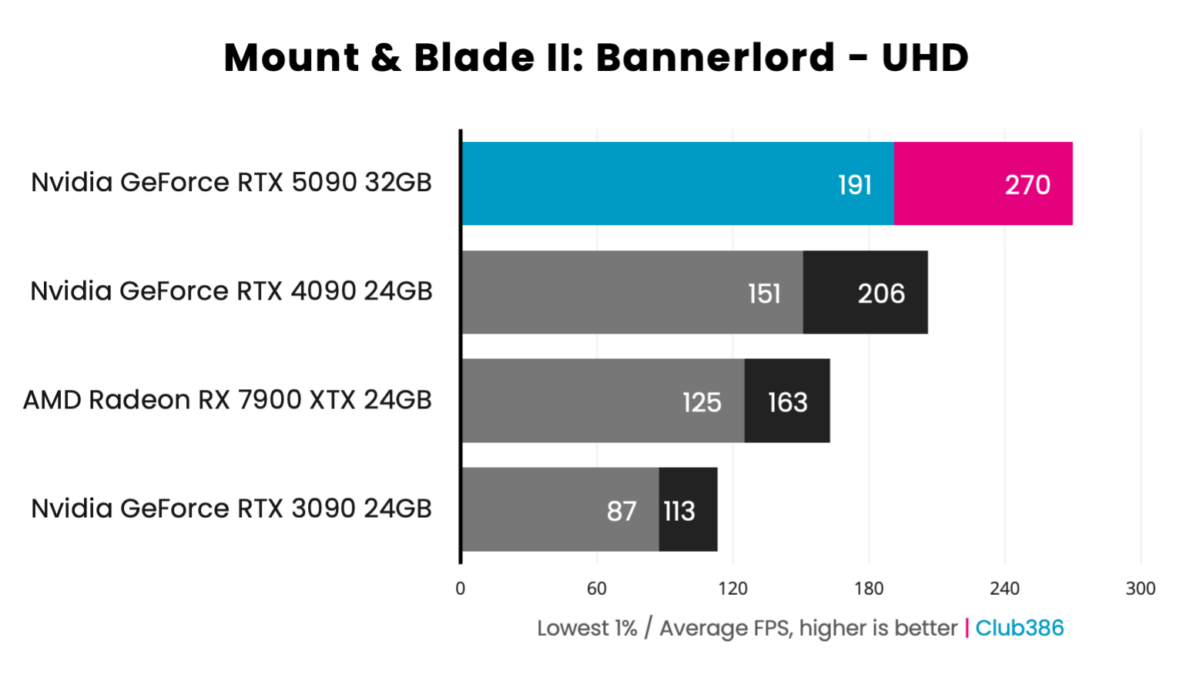

A 31% performance bump at 4K UHD in Mount & Blade II: Bannerlord is more in keeping with the card’s underlying specifications.

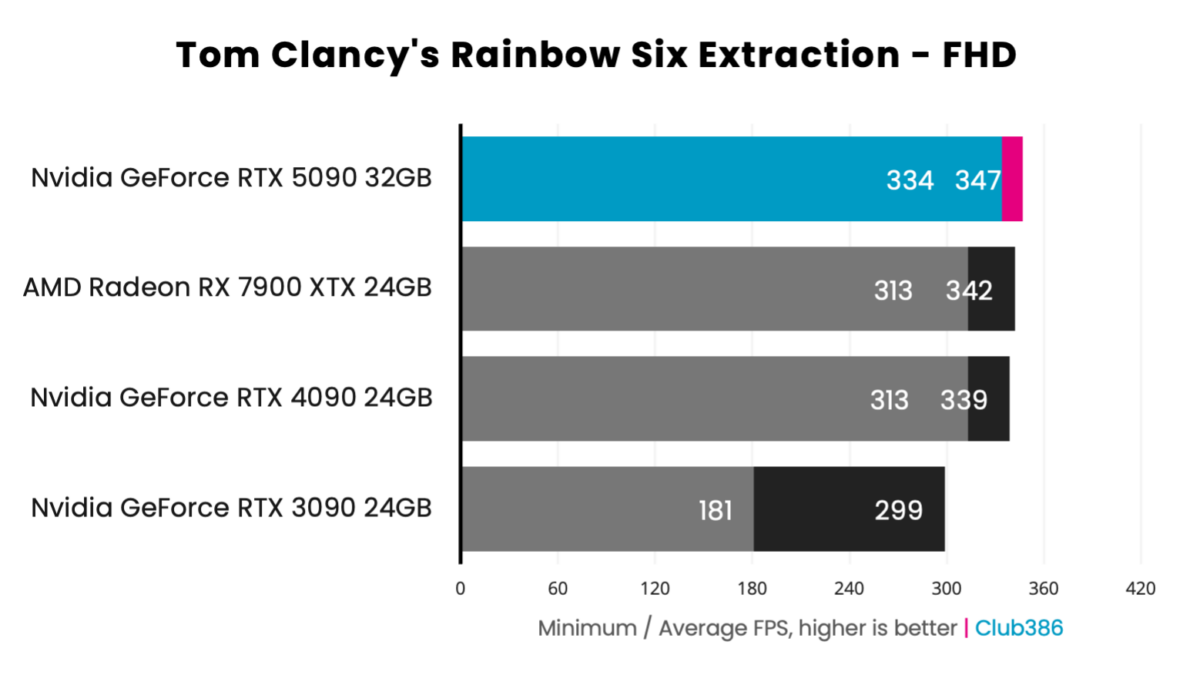

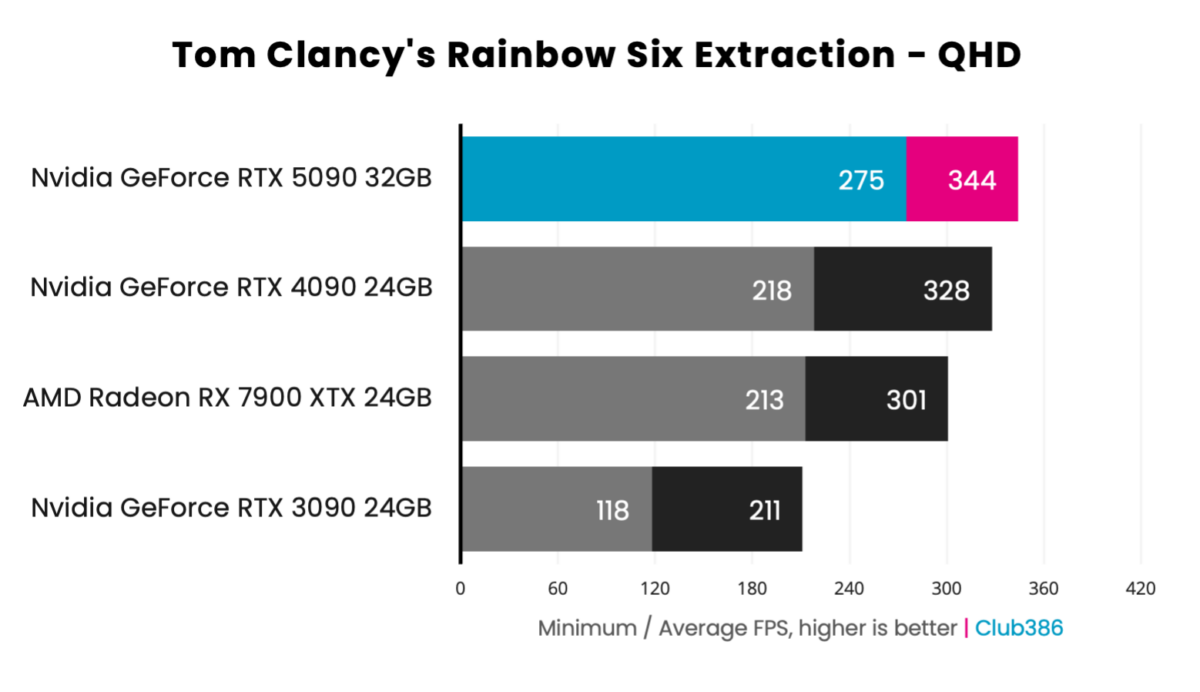

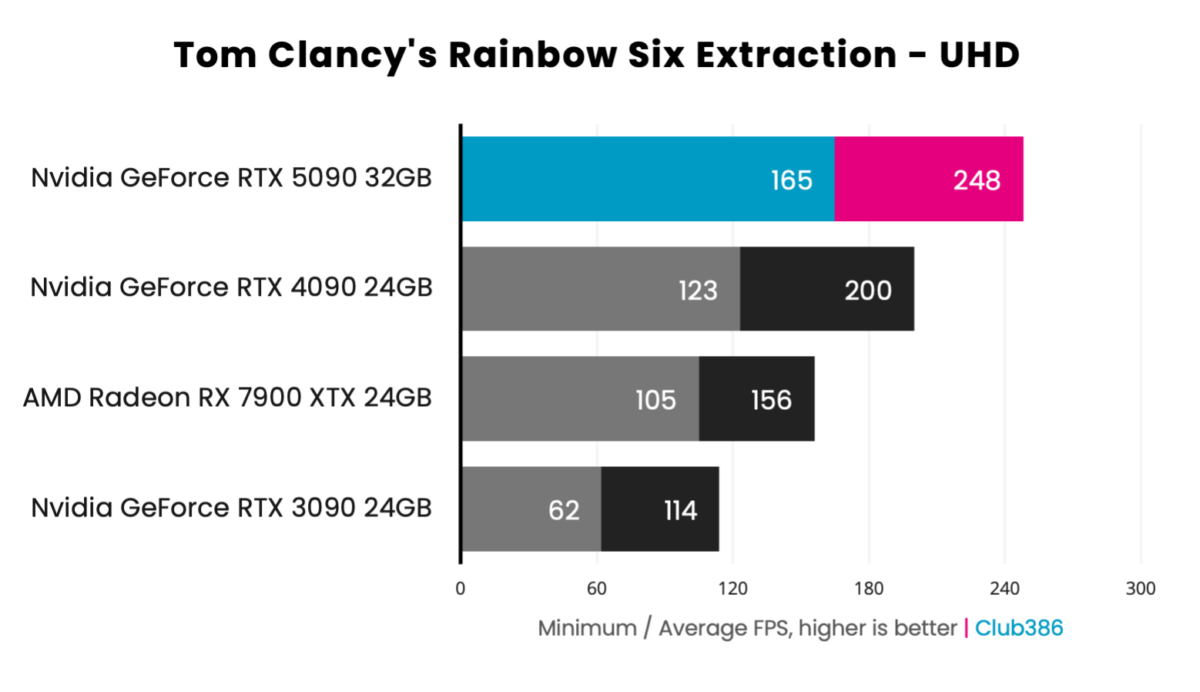

As expected, the common pattern of 32GB RTX 5090 extending its lead at 4K UHD continues in Tom Clancy’s Rainbow Six Extraction.

The tale of the tape thus far is that RTX 5090 is up to around 30% quicker than RTX 4090 in the rasterisation stakes. A healthy step forward, yet that alone isn’t enough to warrant an upgrade. In fact, the generational uplift is markedly smaller than the jump from RTX 3090 to RTX 4090.

| Between generations | Average improvement at 4K |
|---|---|
| RTX 3090 to RTX 4090 | 78.02% |
| RTX 4090 to RTX 5090 | 24.84% |
| RX 7900 XTX to RTX 5090 | 70.14% |

This table takes the mainly rasterised games, calculates the 4K performance delta for each selected pairing of cards, then averages the results.

Let me not beat around the bush. Gains from RTX 3090 to RTX 4090 are immense, averaging over 78% and peaking at 91% in Forza Motorsport, likely due to the heavy lifting required for ray tracing. A real performance step-change in the two years between launches. RTX 4090 to RTX 5090, meanwhile, is nowhere near this uplift. 25% or so isn’t bad, per se, but it’s abundantly clear focus is not on rasterisation for this generation.

Nvidia’s latest architecture is geared differently, and adding new-and-improved frame generation to the mix is where the magic begins.

Frame Generation

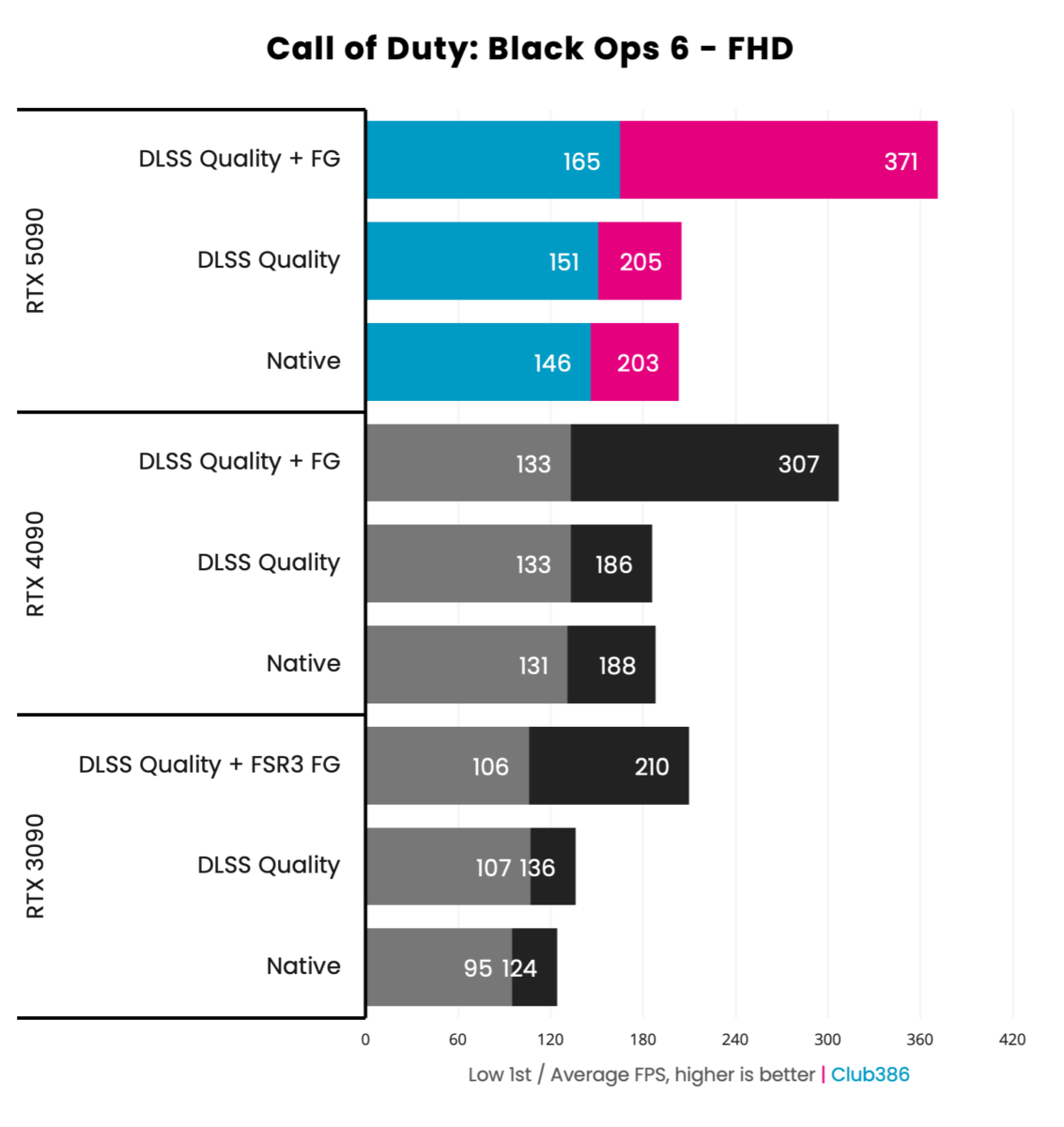

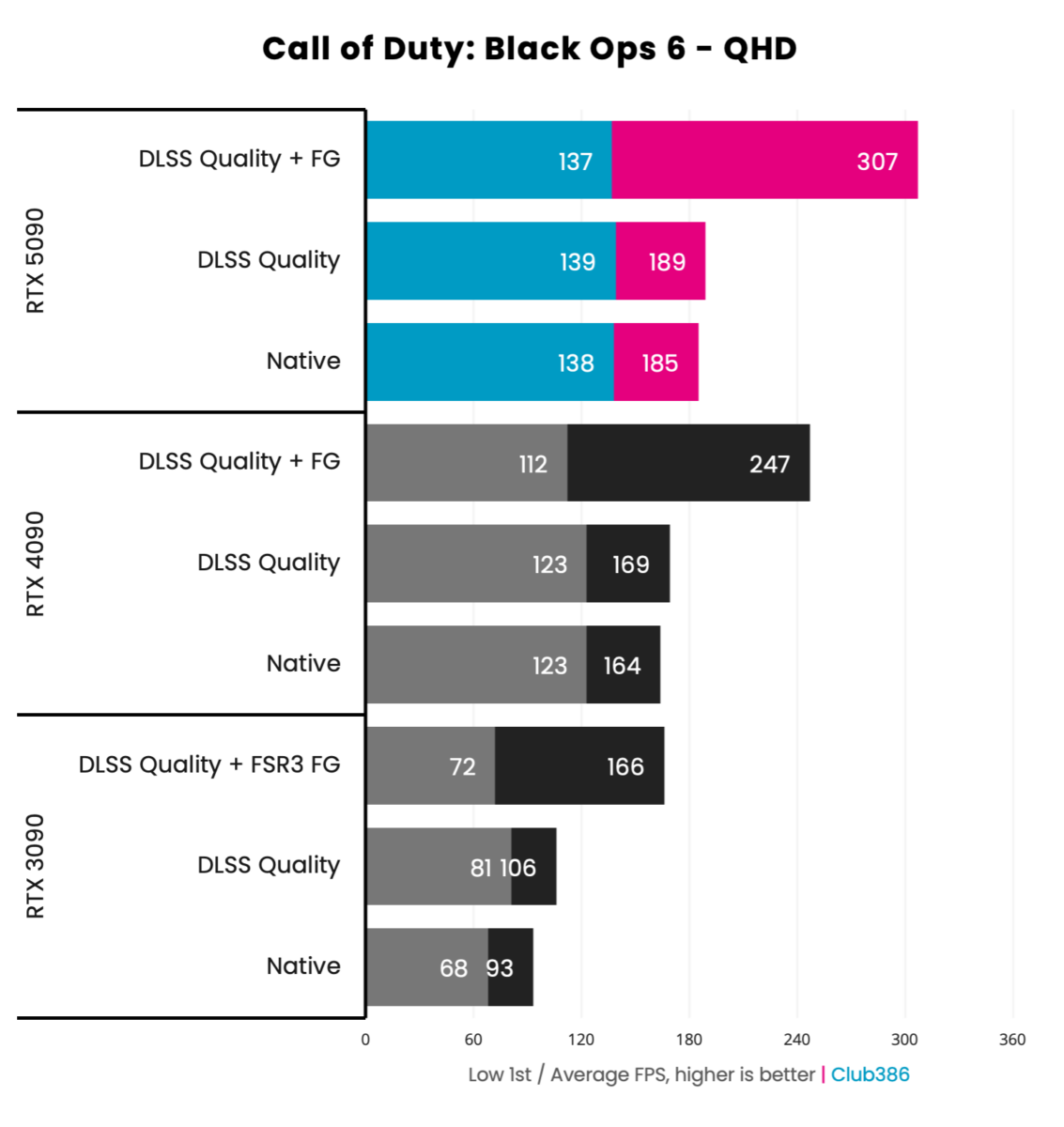

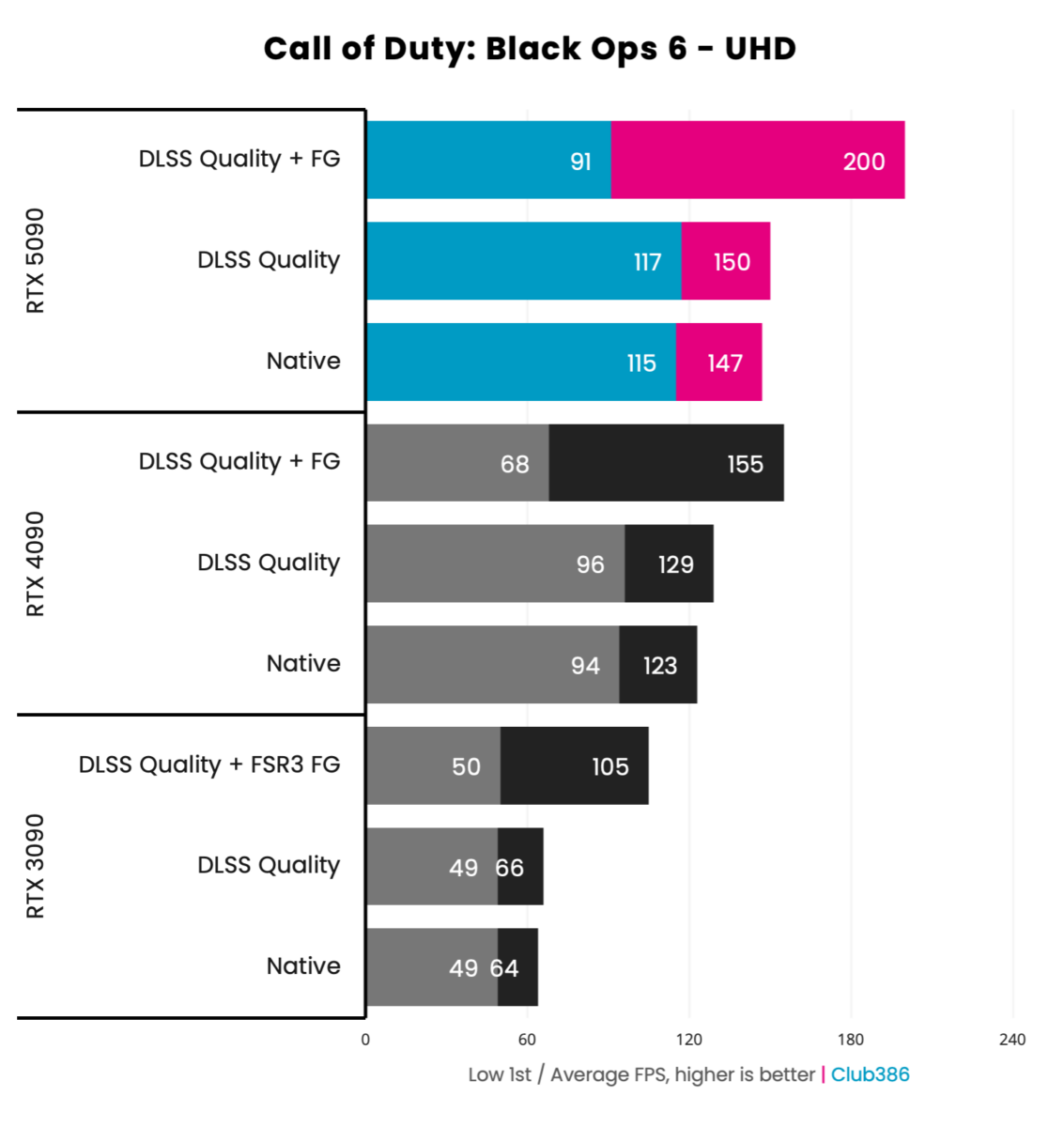

AI-generated frames now come in many forms. One of last year’s most popular games, Call of Duty: Black Ops 6, supports DLSS Frame Generation on RTX 40 and 50 Series cards, while older 30 Series cards have to rely on AMD FSR3.

This is frame generation as we’ve come to know it ’til now. Applying DLSS upscaling provides a minor uplift in performance, but it’s those ‘artificial’ frames that make an impact. Turns out that was but the tip of the iceberg.

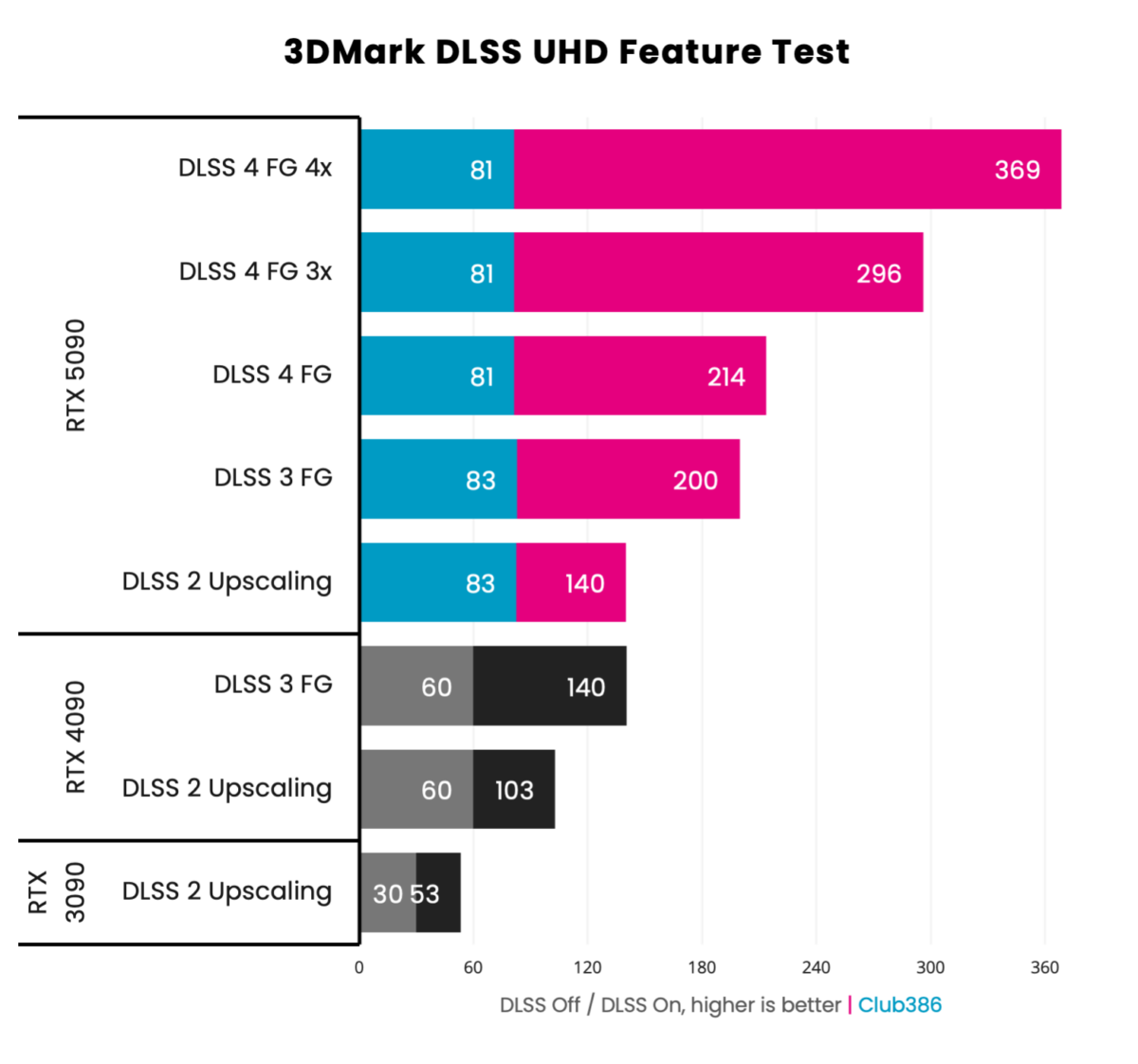

I alluded to Multi Frame Generation being RTX 5090’s secret weapon, and 3DMark’s dedicated DLSS test provides evidence. This helpful benchmark is able to measure all of the available DLSS modes that now exist, from basic DLSS 2 upscaling right through to DLSS 4 with 4x Multi Frame Generation.

It’s worth looking at the chart once more. RTX 4090 can scale to 140fps with DLSS 3 frame generation, which gets it to the base level of RTX 5090. Then things get wild. RTX 5090 frame generation ups frame rate to 200, DLSS 4 is a bit better still, but it’s 3x and 4x Multi Frame Generation that really sends performance soaring up to 296 and 369fps, respectively.

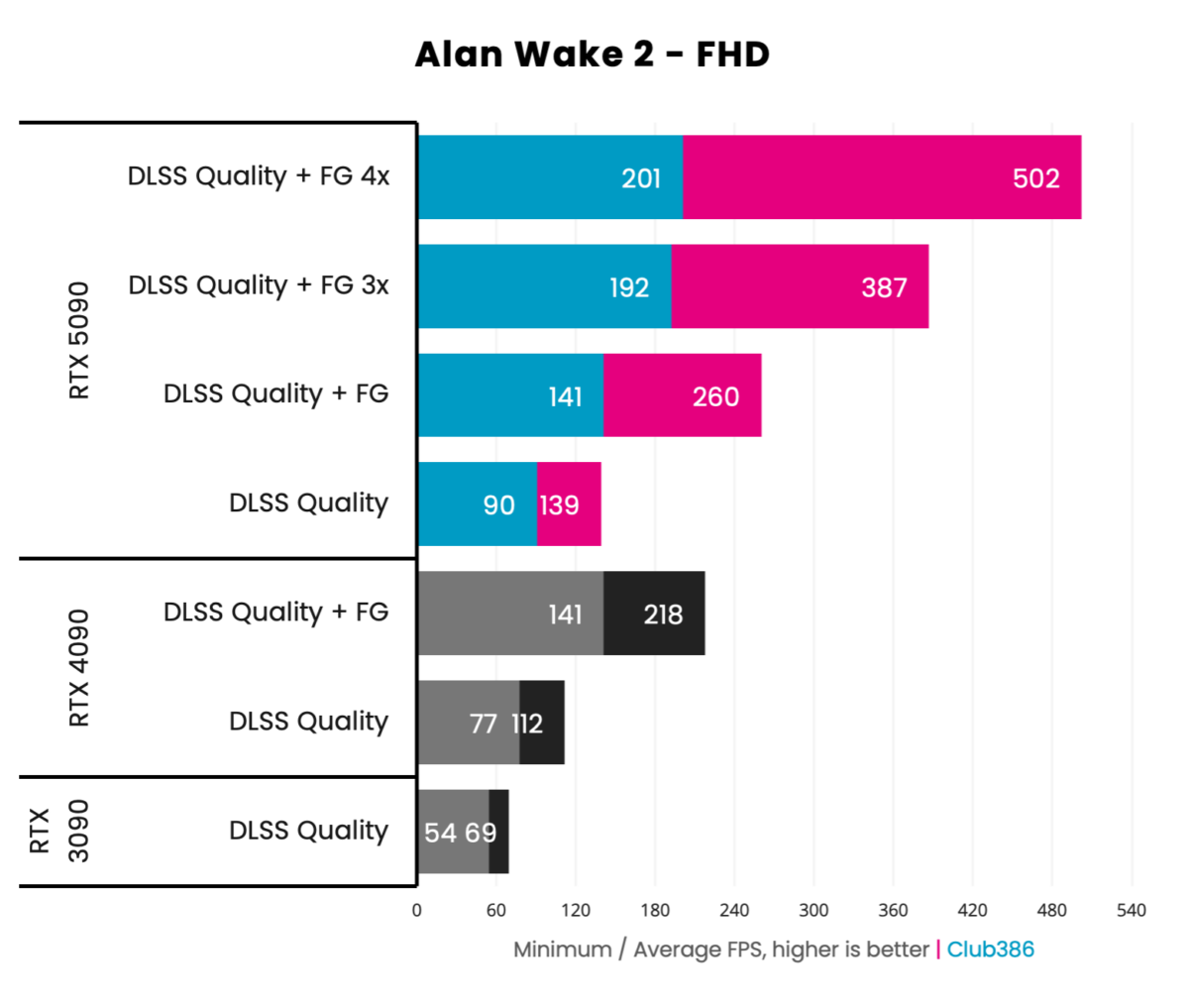

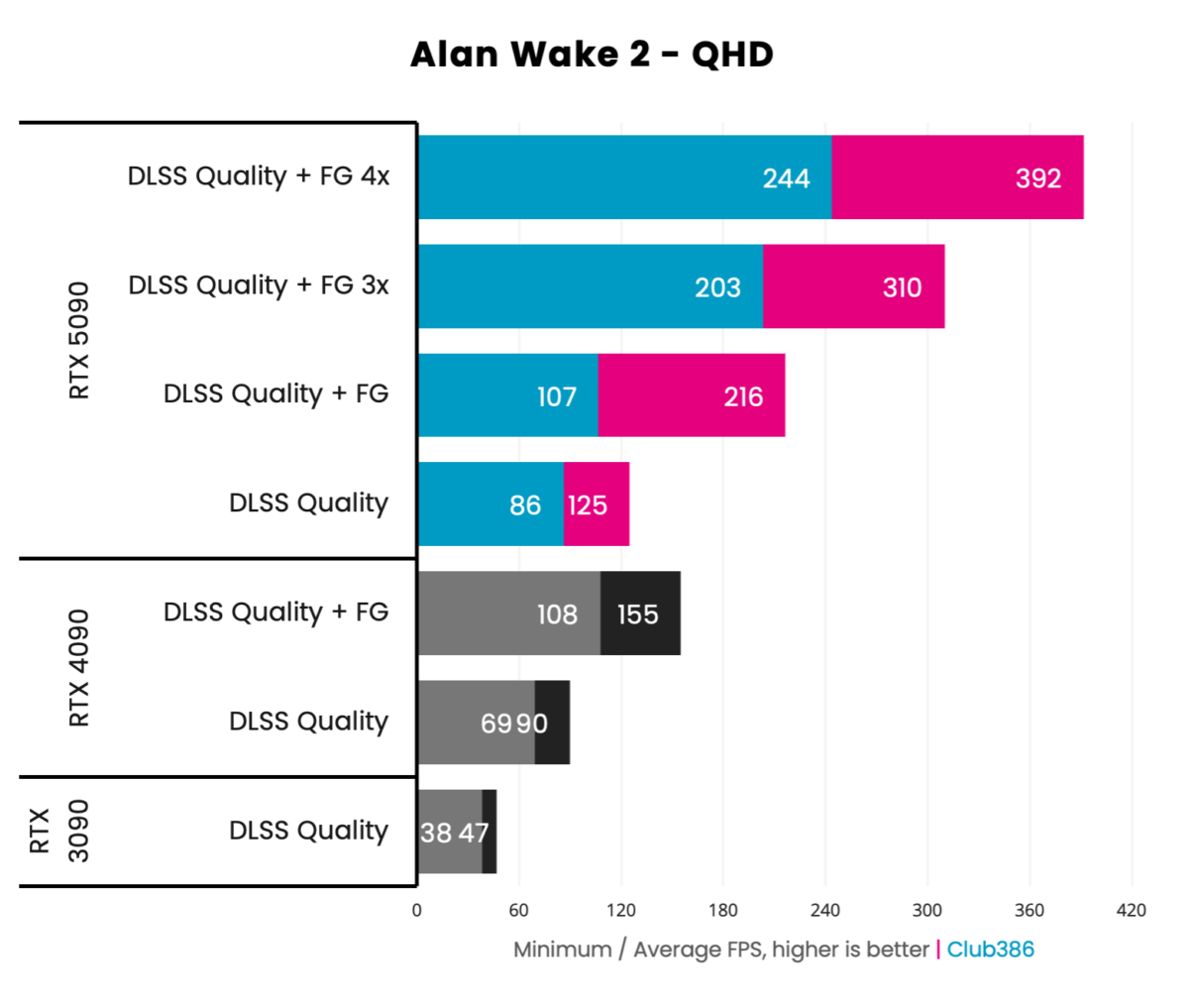

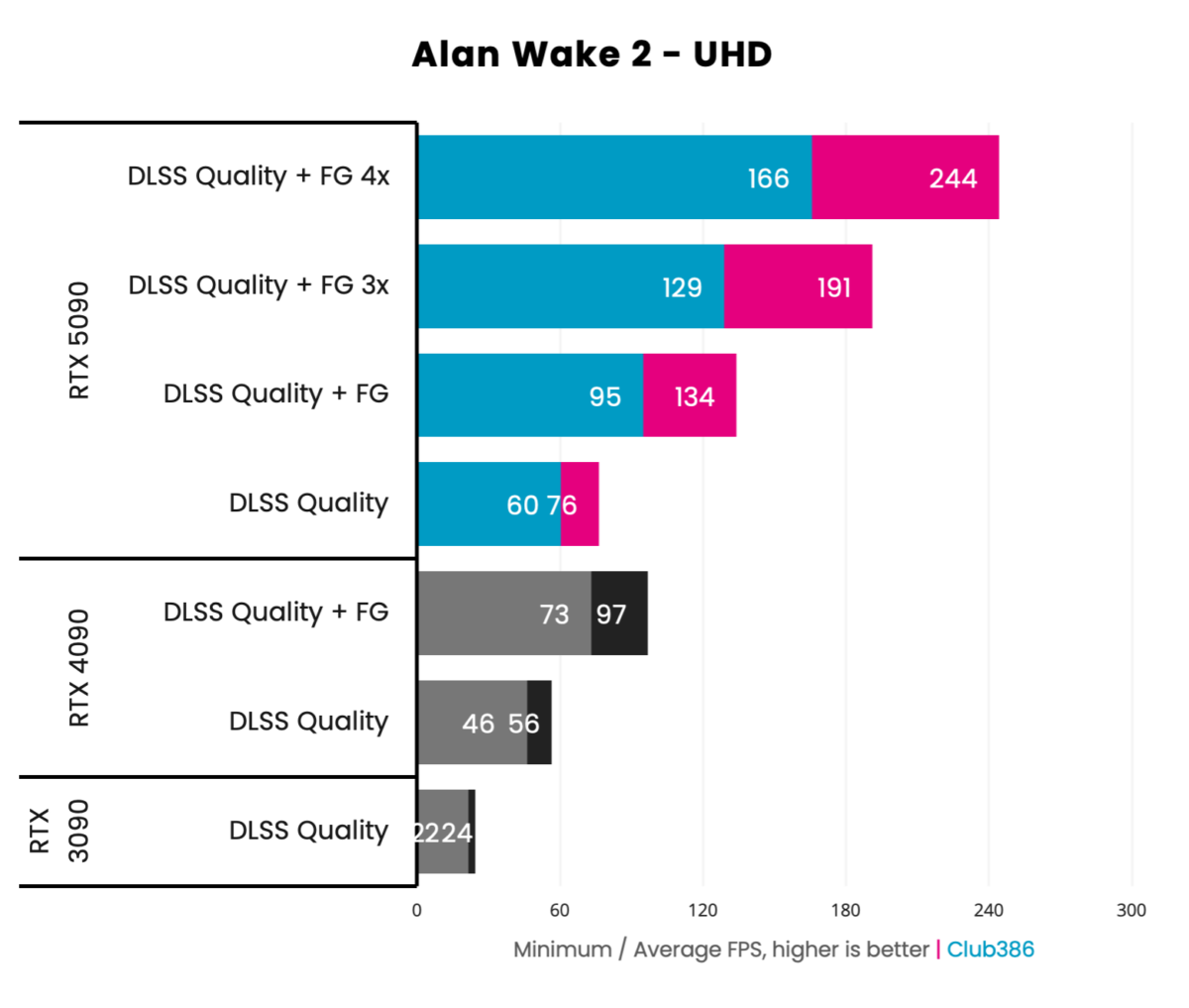

Ready for more bonkers graphs? I had access to a pre-release patch for Alan Wake 2 which adds MFG support, and for a game running ultra-demanding path tracing, the results are nothing short of outstanding. Furthermore, GeForce RTX 5090’s intrinsic power means a solid base frame rate enables MFG to really strut its stuff.

Yet there are words of caution. Higher frame rates availed by MFG don’t necessarily translate into better gamer performance – fps and perceived performance are not exactly analogous in a game dominated by AI rendering. A true 244fps feels better than the outputted 244fps here, underscored by lower latency. The old qualitative vs quantitative debate rages on.
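One way to see why: with N-times frame generation, only one in every N displayed frames is traditionally rendered, so the game reacts to input at roughly output/N frames per second. This sketch assumes the 244fps result comes from 4x MFG and ignores upscaling and generation overheads; frame interval is only one component of total latency, so treat it as an illustration rather than a latency measurement.

```python
# With N-times frame generation, one in N displayed frames is traditionally rendered,
# so the gap between rendered frames is N times the displayed frame interval.
def frame_intervals_ms(output_fps: float, mfg_factor: int) -> tuple:
    displayed = 1000 / output_fps                 # time between frames on screen
    rendered = 1000 / (output_fps / mfg_factor)   # time between traditionally rendered frames
    return displayed, rendered

native = frame_intervals_ms(244, 1)  # a "true" 244fps
mfg_4x = frame_intervals_ms(244, 4)  # 244fps output, assumed to be via 4x MFG
print(f"native: {native[1]:.1f} ms between rendered frames")  # 4.1 ms
print(f"4x MFG: {mfg_4x[1]:.1f} ms between rendered frames")  # 16.4 ms
```

Both present a new image every 4.1ms, but the MFG path only samples fresh game state roughly every 16.4ms, which is why the two don’t feel the same.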

I mentioned my old Diamond Monster 3D graphics card earlier in the review. That was based on the legendary Voodoo graphics chip, and I’ve come full circle as RTX 5090 is performing voodoo of its own. There’s surely no other way to explain going from 76fps to 244fps at the flick of a switch.

Of course, there’s much more to frame generation than first meets the eye. Look ever so closely and you will see the odd instance of ghosting or shimmering, and these unwanted artefacts affect some titles more than others. In an ideal world you wouldn’t want to have to make that trade-off, yet when the frame rate boost is as dramatic as this, it’s an evil I suspect a growing number of gamers will be willing to accept. And let’s be clear, in real-time, a lot of those artefacts are hard to spot.

There’s also the question of latency. Nvidia has a growing number of tools in its arsenal to prevent latency from spiralling out of control as more frames are inserted, and the tech appears to be working well.

| Latency (ms) | Native | FG | FG 3x | FG 4x |
|---|---|---|---|---|
| 5090 @ UHD | 40.9 | 49.9 | 52.1 | 53.4 |
| 5090 @ QHD | 25.4 | 32.3 | 34.8 | 36.1 |
| 5090 @ FHD | 21.2 | 25.5 | 26.0 | 27.1 |
| 4090 @ UHD | 51.3 | 63.6 | – | – |
| 4090 @ QHD | 34.9 | 42.4 | – | – |
| 4090 @ FHD | 26.9 | 31.9 | – | – |

RTX 5090’s inherent horsepower ensures latency across resolutions is lower than RTX 4090, but what’s interesting is that the impact when adding MFG to the equation isn’t as dramatic as one might think.

Is an increase in latency from 41ms to 53ms worth it when a dramatically higher frame rate is on the table? That’s a personal choice gamers are going to have to make, but frankly, it’s nice to have the option.
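To put numbers on that trade-off, here’s a short sketch that works through the RTX 5090 rows of the latency table, comparing native against 4x MFG and standard frame generation against 4x MFG.

```python
# Latency figures (ms) for RTX 5090, taken from the table above.
latency_ms = {
    "UHD": {"native": 40.9, "FG": 49.9, "FG 3x": 52.1, "FG 4x": 53.4},
    "QHD": {"native": 25.4, "FG": 32.3, "FG 3x": 34.8, "FG 4x": 36.1},
    "FHD": {"native": 21.2, "FG": 25.5, "FG 3x": 26.0, "FG 4x": 27.1},
}

for res, row in latency_ms.items():
    total_added = row["FG 4x"] - row["native"]  # native -> 4x MFG
    mfg_step = row["FG 4x"] - row["FG"]          # standard FG -> 4x MFG
    pct = total_added / row["native"] * 100
    print(f"{res}: +{total_added:.1f} ms vs native (+{pct:.0f}%), "
          f"+{mfg_step:.1f} ms going from FG to 4x MFG")
# UHD: +12.5 ms vs native (+31%), +3.5 ms going from FG to 4x MFG
```

Put another way, most of the latency cost arrives with the first generated frame; stepping up from standard frame generation to 4x MFG adds only a few milliseconds at each resolution.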

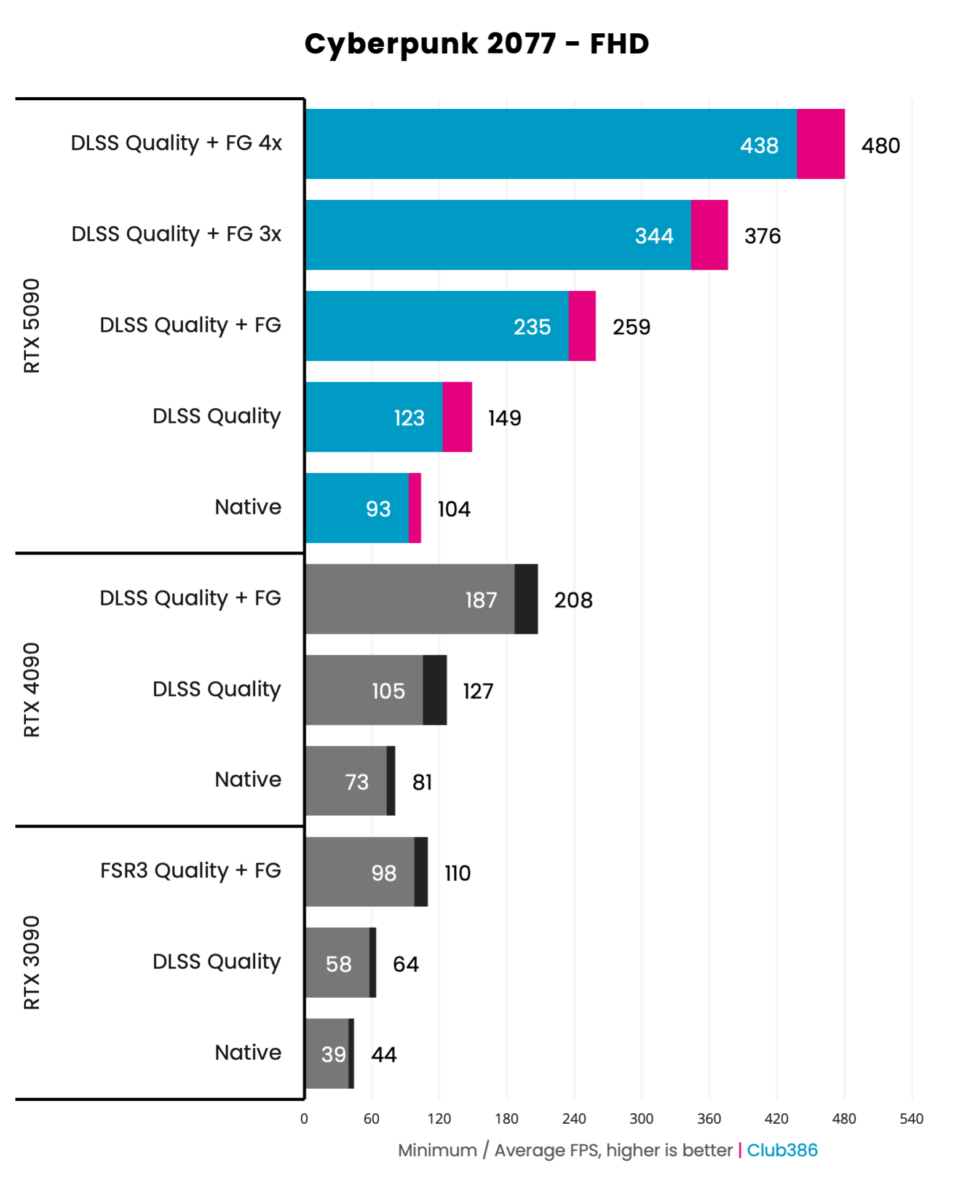

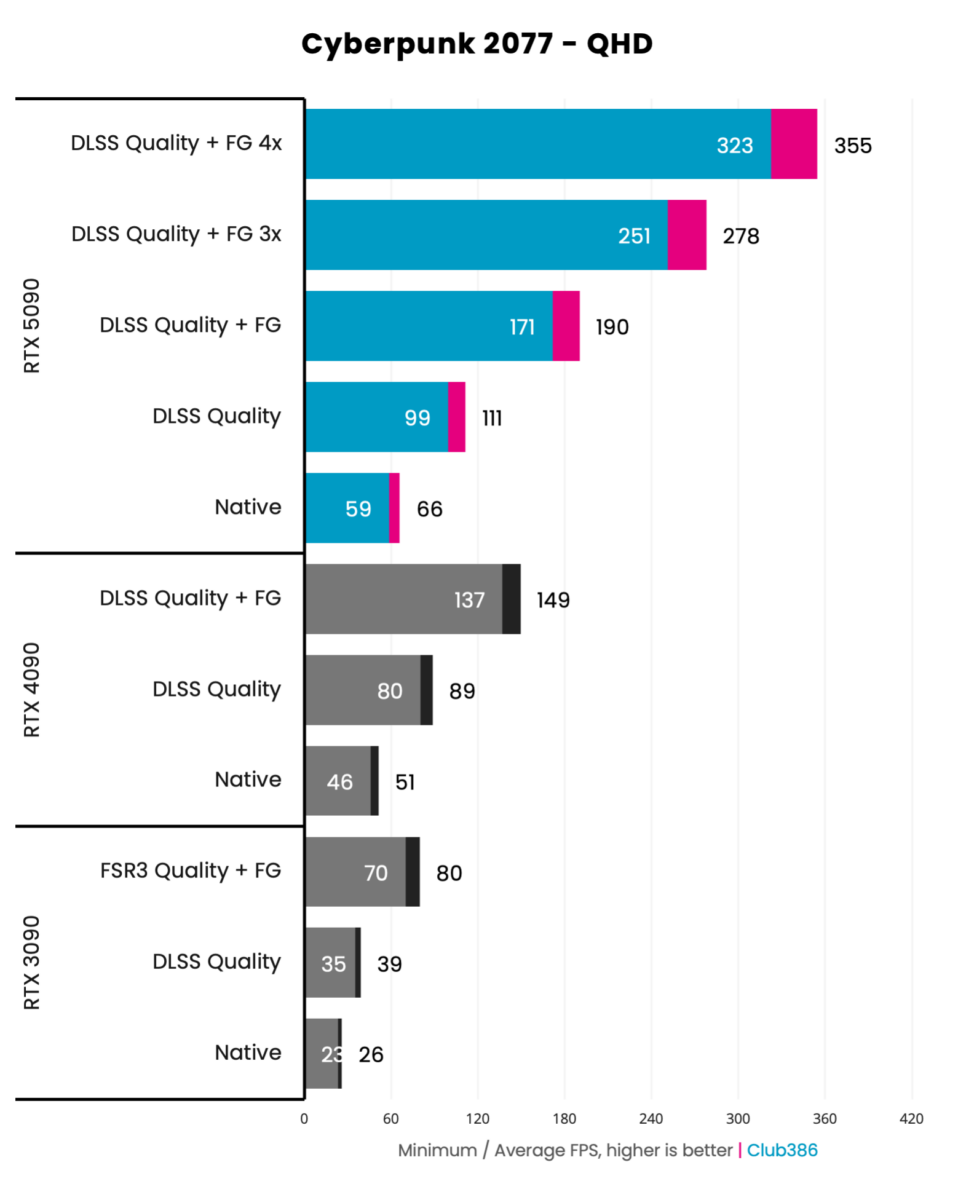

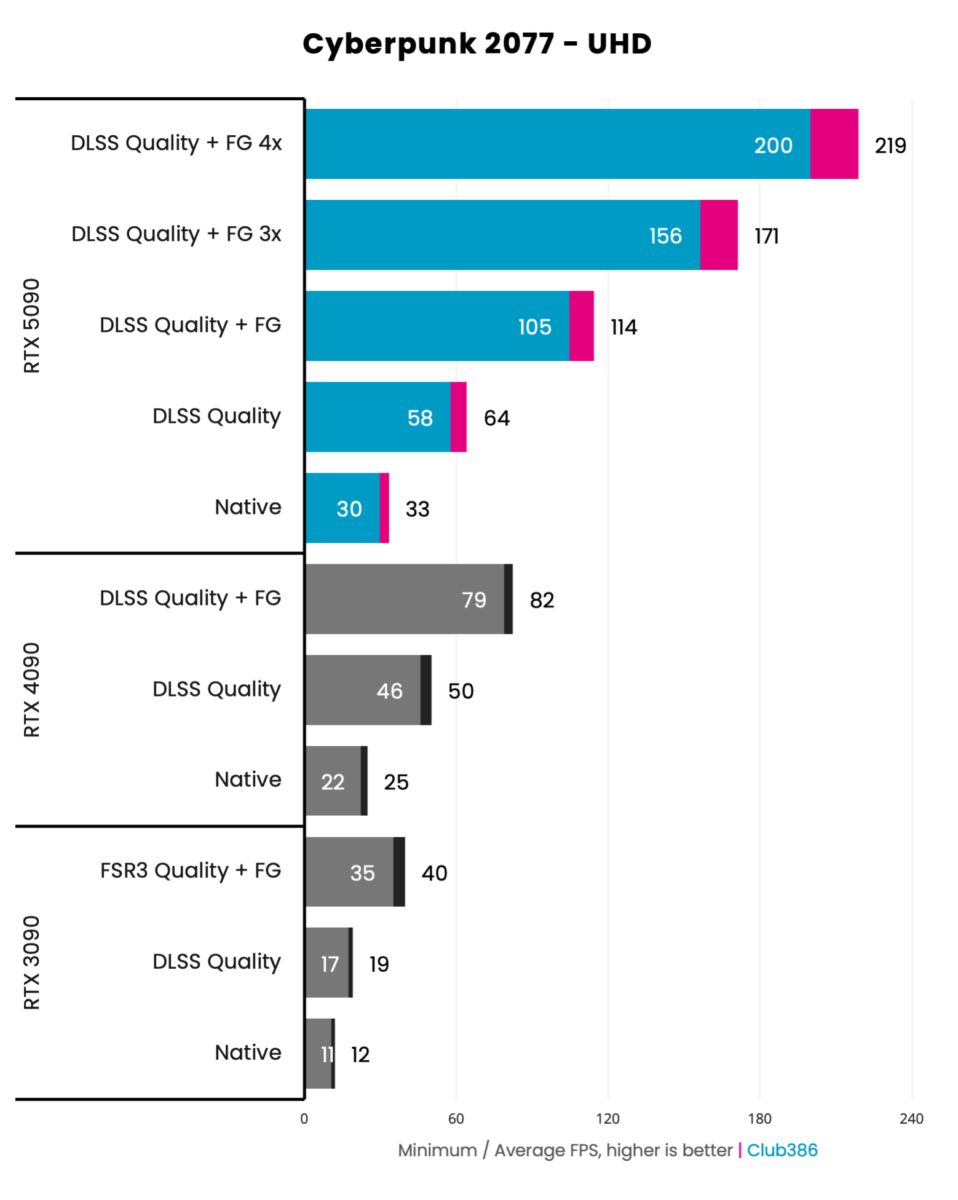

Cyberpunk 2077’s upcoming implementation of MFG is equally impressive, but I must caveat these results by stating the benchmarks are carried out on pre-release versions of the game. I expect the final release to deliver similar numbers, if not better, but MFG is a new tool for developers to get to grips with, and it’ll be a while before we know how well it works across a much broader spectrum of titles.

Still, I can’t help but marvel at what’s already being achieved. Playing using Cyberpunk’s notorious Overdrive preset is brutal for any and every graphics card. Even RTX 5090 can muster only 33fps natively. I’d prefer this to be higher, as MFG works best with a base 60fps, but flick the switch for 4x frame generation and that lowly number is propelled to 219.

Looking at it all this way, it’s only fair to tabulate the percentage increase as I’ve done before.

| Between generations | Improvement at 4K |
|---|---|
| RTX 3090 to RTX 4090 | 105% |
| RTX 4090 to RTX 5090 | 167% |
| RX 7900 XTX to RTX 5090 | 492% |

Comparing best versus best – 50 Series MFG to 40 Series and 7900 FG – there’s a truly staggering increase, and one has to wonder, if not for innovative frame generation, how else would developers get the best-looking games to scale to over 200fps? Even quad SLI wouldn’t get the job done.

It’s hard to disagree with Nvidia’s prediction that one day all games will use AI technology for rendering, and that claim lends excitement to other categories. Seeing frame generation at its very best on high-end desktop makes me eager to explore what the technology could do for gaming handhelds, VR headsets, and beyond.

Conclusion

GeForce RTX 4090 has stood undiminished as the PC gaming weapon of choice since its arrival in October 2022. Embodying a heady mix of brute power and novel frame rate-boosting technologies such as Frame Generation, it’s rightfully been the go-to GPU for gaming at 4K with eye candy turned all the way up. Marching in with size 12s today, GeForce RTX 5090 rips the erstwhile champion’s performance crown right off and gets comfy on the throne.

Nvidia looks to the future rather than peer over its shoulder. Significant generational gains in traditional rasterisation are deliberately compromised in favour of what AI can do for a more immersive gaming experience, running from stratospheric frame rates through to smarter NPCs. Nvidia has built the infrastructure; now it’s up to developers to grasp the nettle and release better games.

Up until then, no matter how I dice it up, GeForce RTX 5090 is the absolute pinnacle of PC gaming.