Nvidia has been on a PC gaming image-quality crusade for a while, and two recent hardware-based technologies exemplify the direction Team Green wants to take.

Baked into RTX cards since 2018 and refined over time, ray tracing and DLSS offer, respectively, improved in-game lighting and better performance without unduly sacrificing image quality.

Both Nvidia and AMD offer hardware ray tracing technology for their latest cards. Nvidia, however, has a solid advantage with respect to the number of titles supporting ray tracing and, through more mature technology, a performance lead when compared on an apples-to-apples basis.

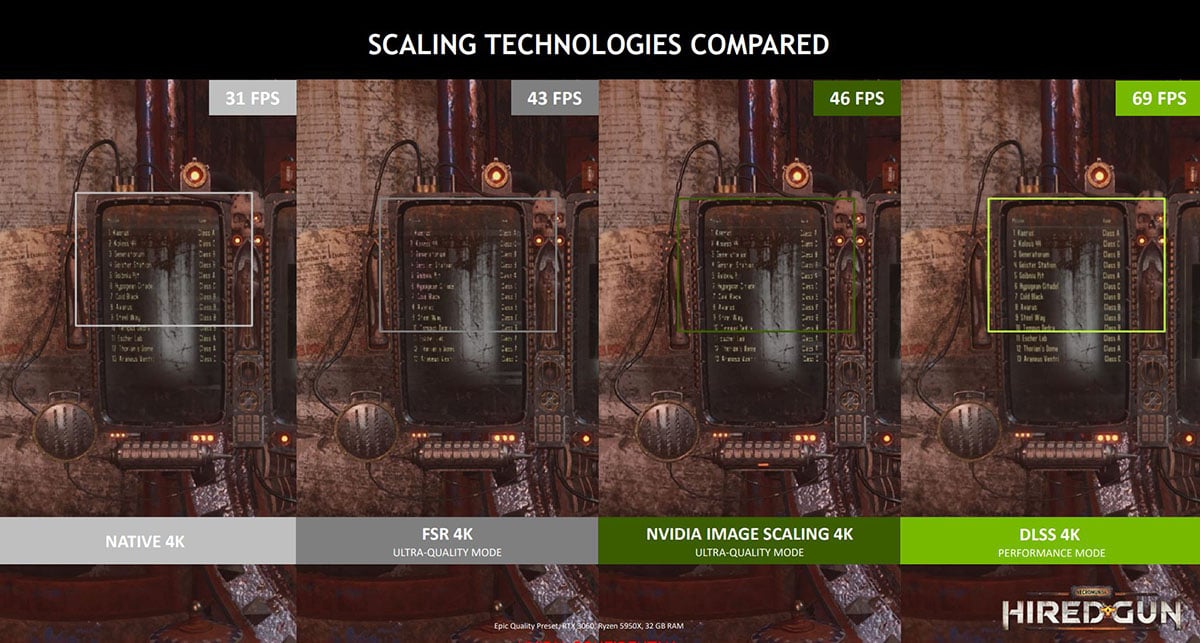

Rival AMD is later to the table on the image-upscaling side, too, with its FidelityFX Super Resolution (FSR) technology using spatial upscaling rather than the temporal approach employed by Nvidia’s preferred DLSS solution.

Nvidia Image Scaling Makes a Comeback

This brief introduction paves the way for a couple of announcements from Nvidia today. The first is a renewed focus on the company’s own software-based spatial upscaler, Nvidia Image Scaling (NIS).

NIS originally launched in 2019 yet gained little traction because, understandably, most of Nvidia’s upscaling efforts rested on DLSS’ shoulders. Now, though, Nvidia says NIS has been improved with a new algorithm that uses a six-tap filter with four-directional scaling. It pairs this with a user-configurable sharpening algorithm, which makes it readily comparable to AMD’s FSR.
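Nvidia doesn’t detail the NIS kernel here, so the following Python is only a rough illustration of what ‘six-tap filter plus sharpening’ means, under stated assumptions. It swaps in a Lanczos-3 kernel (which happens to weigh six source pixels per output pixel) working along a single row of greyscale values, then applies a basic unsharp mask. The four-directional part of NIS is not modelled, and every function name is hypothetical.

```python
import math


def lanczos3(x: float) -> float:
    """Lanczos kernel with a = 3; non-zero only for |x| < 3 (stand-in, not the NIS kernel)."""
    if x == 0.0:
        return 1.0
    if abs(x) >= 3.0:
        return 0.0
    px = math.pi * x
    return 3.0 * math.sin(px) * math.sin(px / 3.0) / (px * px)


def upscale_row(row: list[float], out_len: int) -> list[float]:
    """Resample one row to out_len pixels, weighting six source taps per output."""
    scale = len(row) / out_len
    out = []
    for i in range(out_len):
        # Map the centre of output pixel i back into source coordinates.
        src = (i + 0.5) * scale - 0.5
        base = math.floor(src)
        acc = weight_sum = 0.0
        for k in range(base - 2, base + 4):  # the six nearest source pixels
            w = lanczos3(src - k)
            acc += w * row[min(max(k, 0), len(row) - 1)]  # clamp at the edges
            weight_sum += w
        out.append(acc / weight_sum)  # normalise so flat areas stay flat
    return out


def sharpen(row: list[float], amount: float) -> list[float]:
    """Basic unsharp mask: push each pixel away from its three-pixel local mean."""
    n = len(row)
    out = []
    for i in range(n):
        local_mean = (row[max(i - 1, 0)] + row[i] + row[min(i + 1, n - 1)]) / 3.0
        out.append(row[i] + amount * (row[i] - local_mean))
    return out


if __name__ == "__main__":
    low_res = [0.0, 0.0, 1.0, 1.0]
    print(sharpen(upscale_row(low_res, 8), amount=0.5))
```

Because the filter weights are normalised, flat areas pass through unchanged, while the sharpening step deliberately overshoots either side of an edge; push `amount` too far and that overshoot becomes the familiar over-sharpening halo.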

The similarities don’t end there. NIS, Nvidia says, is more efficient than FSR because it does its magic in a single shader pass, placed between tone mapping and post-processing. NIS works in every game without any implementation work from the developer, but studios that want deeper integration can use an SDK, available from today, to add native support on an engine-by-engine basis. A developer-integrated NIS removes the need for gamers to tweak settings manually, which novice users may not want to do, and replaces trial and error with familiar in-game menu options.

DLSS Trumps NIS/FSR Every Time?

NIS is activated through the Nvidia Control Panel starting with Game Ready Driver 496.70, also released today. An easier option is to use GeForce Experience: select the desired render resolution as a percentage of native and then click ‘optimize.’ NIS adopts the scaling percentages common to DLSS and AMD’s FSR, which is handy for comparisons.
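As a quick sanity check on what those percentages mean, here is a minimal sketch that assumes the percentage applies to each axis, so the number of shaded pixels falls with its square. The helper names are hypothetical and not part of any Nvidia tool.

```python
def render_resolution(native_w: int, native_h: int, percent: float) -> tuple[int, int]:
    """Internal render size when each axis is scaled to `percent` of native (assumption)."""
    scale = percent / 100.0
    return round(native_w * scale), round(native_h * scale)


def pixel_fraction(percent: float) -> float:
    """Share of native pixels shaded, since both axes shrink by the same factor."""
    return (percent / 100.0) ** 2


if __name__ == "__main__":
    w, h = render_resolution(3840, 2160, 50)
    print(w, h, pixel_fraction(50))
```

At 4K, a 50 per cent setting works out to a 1920x1080 render, only a quarter of native pixels, which is why the lower presets deliver such large framerate gains.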

To be abundantly clear, Nvidia is not advocating NIS as a replacement for DLSS. Quite the contrary, in fact, as DLSS is, in the company’s own words, ‘far superior upscaling technology.’ Let’s take a moment to understand why.

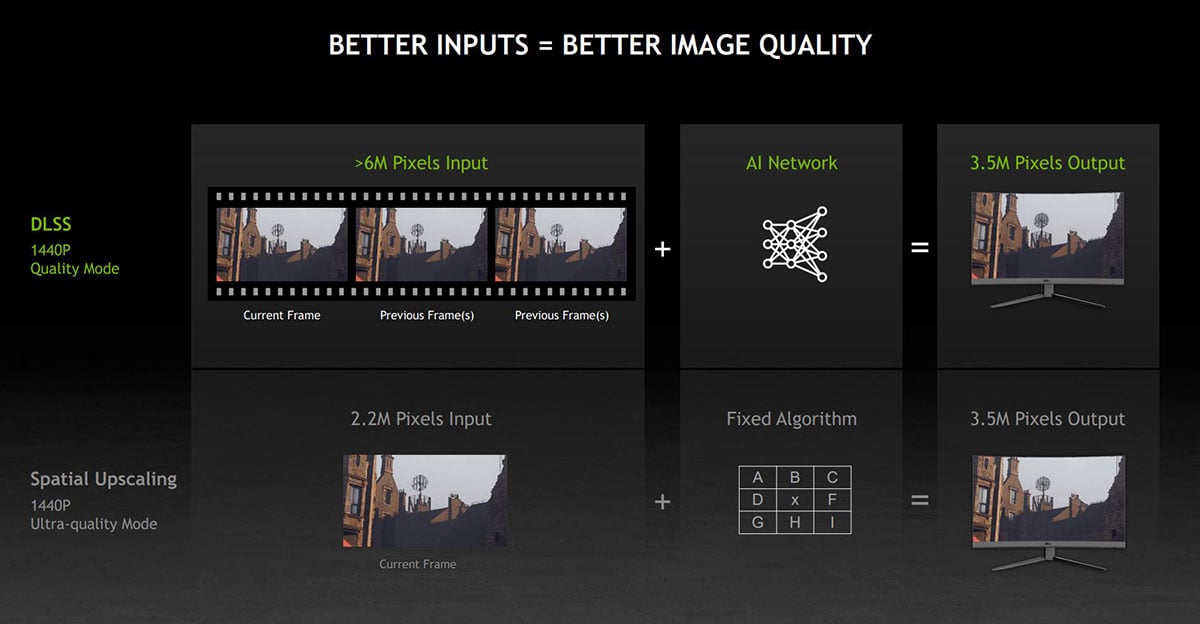

The differences between FSR/NIS and DLSS – initialism overload! – come down to two factors: DLSS uses data from multiple frames as its input, and it derives the final output via an AI network rather than a fixed algorithm. Both points need further exposition.

Temporal vs. Spatial Considerations

DLSS is referred to as a temporal (time-based) upscaler because it calculates each final pixel by combining information from the current frame and multiple previous frames. Motion vectors inform the AI network how best to amalgamate those multiple-frame results into the best possible high-resolution image from lower-resolution inputs. Nvidia adds that image-enhancing anti-aliasing is also applied after the AI network, minimising GPU workload by allowing simpler, non-aliased detail to be used.
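The temporal idea can be sketched in a few lines of Python. This is not DLSS: a fixed exponential blend, as used by conventional temporal anti-aliasing, stands in for Nvidia’s AI network, the output stays at the input resolution, and motion is simplified to whole-pixel shifts along one row. The sketch only shows how motion vectors let a pixel reuse its own history from the previous frame; all names are hypothetical.

```python
def reproject(history: list[float], motion: list[int]) -> list[float]:
    """Look up where each current pixel's content was in the previous frame."""
    n = len(history)
    warped = []
    for i in range(n):
        # motion[i] = whole pixels the content at i moved since the last frame,
        # so it previously sat at i - motion[i]; clamp to stay inside the row.
        prev = min(max(i - motion[i], 0), n - 1)
        warped.append(history[prev])
    return warped


def accumulate(current: list[float], history: list[float],
               motion: list[int], alpha: float = 0.1) -> list[float]:
    """Blend the new frame into the motion-compensated history (fixed weight, not AI)."""
    warped = reproject(history, motion)
    return [alpha * c + (1.0 - alpha) * h for c, h in zip(current, warped)]


if __name__ == "__main__":
    # A bright pixel at index 1 moves one pixel right between frames.
    previous_output = [0.0, 1.0, 0.0, 0.0]
    current_frame = [0.0, 0.0, 1.0, 0.0]
    print(accumulate(current_frame, previous_output, [1, 1, 1, 1]))  # with motion vectors
    print(accumulate(current_frame, previous_output, [0, 0, 0, 0]))  # without them
```

With correct motion vectors the history lines up and the bright pixel stays sharp; with zero motion the old position keeps a 0.9 ghost. A fixed `alpha` trades stability against ghosting, which is the trade-off Nvidia says its network handles better than a fixed rule.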

The point is that, given the same lower-resolution input, Nvidia says DLSS’ inherent technological strengths mean it will always outclass spatial upscalers, NIS or FSR, at reconstructing a higher-resolution image. Why? More inputs, temporal understanding, and AI-led AA, for starters.

This is why DLSS’ Quality mode, for example, is reckoned to produce a better upscaled image than spatial solutions at their best settings. In fact, Nvidia is so bullish on DLSS image upscaling that it opines DLSS Performance mode is a good match for FSR/NIS’ ultra-quality setting. Even then, the spatial upscalers’ lack of temporal awareness can hamper real-world motion by adding unwanted shimmering and related artefacts.

To be even-handed, DLSS needs to be implemented by the developer and is therefore not available in all games. The first iteration, DLSS 1.0, suffered from relatively limited adoption. DLSS 2.0 improves matters through higher developer uptake and refined technology: the AI network no longer requires per-game training and is faster to run and to implement on a studio’s side.

Ever-improving Technology?

Updates to the underlying technology keep improving how DLSS functions. Version 2.3, for example, makes smarter use of motion vectors to improve object detail in motion, particle reconstruction, ghosting, and temporal stability, according to Nvidia. Games such as Doom use the latest update to accurately render embers that had previously been interpreted incorrectly. Sixteen games currently support DLSS 2.3, with Cyberpunk 2077 joining the list from today.

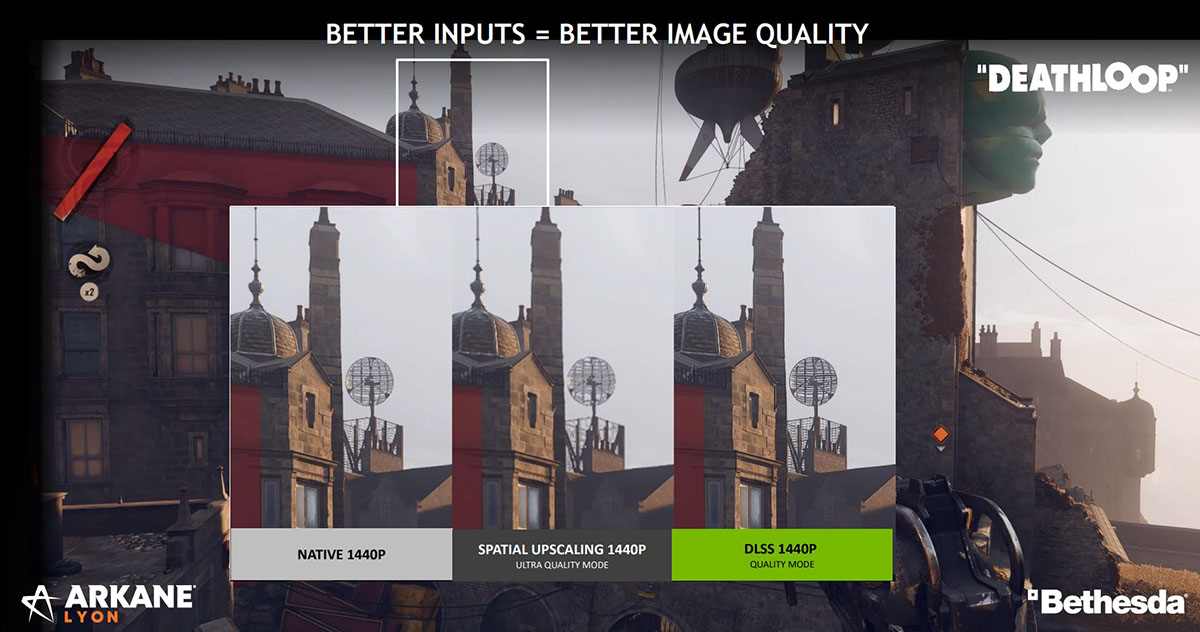

The question of how to evaluate these image-upscaling technologies easily – through either screenshots or, preferably, video – has been bugging Nvidia. So much so that part two of today’s announcement centres on a handy tool for comparing the efforts.

Enter Nvidia ICAT

Typically, tech websites like Club386 compare images by linking out to the outputs produced by, say, native rendering and framerate-boosting DLSS, then pointing out any meaningful differences in commentary. ICAT (Image Comparison & Analysis Tool) lets us load up to four screenshots or videos and compare two side by side or with a nifty slider.
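ICAT is a point-and-click application, so the following is merely a hypothetical Python sketch of what a slider and zoom view do conceptually, treating images as small grids of pixel values. Columns left of the slider come from one capture and the rest from the other; in this sketch, zoom uses nearest-neighbour sampling so that enlarging a region adds no blur of its own. None of these names reflect ICAT’s internals.

```python
Image = list[list[int]]  # rows of greyscale pixel values (illustrative only)


def slider_composite(left: Image, right: Image, split_x: int) -> Image:
    """Show `left` to the left of the slider column and `right` from it onwards."""
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("captures must share the same resolution")
    return [row_l[:split_x] + row_r[split_x:] for row_l, row_r in zip(left, right)]


def zoom(img: Image, x0: int, y0: int, w: int, h: int, factor: int) -> Image:
    """Crop a w-by-h window at (x0, y0) and enlarge it by repeating pixels."""
    out: Image = []
    for y in range(y0, y0 + h):
        row: list[int] = []
        for x in range(x0, x0 + w):
            row.extend([img[y][x]] * factor)  # widen each pixel
        for _ in range(factor):               # then repeat the widened row
            out.append(list(row))
    return out


if __name__ == "__main__":
    nis = [[10, 10, 10, 10], [10, 10, 10, 10]]
    dlss = [[20, 20, 20, 20], [20, 20, 20, 20]]
    view = slider_composite(nis, dlss, split_x=2)
    for r in zoom(view, x0=1, y0=0, w=2, h=1, factor=2):
        print(r)
```

Zooming in around the split column is the programmatic equivalent of the ‘zoom right into the slider line’ approach used in the comparisons that follow.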

The purpose is to make differences in image and motion quality easier to discern when using any form of non-native rendering. The closer the spatial or temporal output is to native rendering (or, indeed, the more it improves on it), the better for the gamer. In an ideal world you’d lose a minimal amount of image fidelity whilst receiving a solid uptick in framerate.

Nvidia shows such an example in Deathloop by comparing native 1440p output to ultra-quality spatial upscaling (FSR or NIS) and temporal upscaling via DLSS Quality mode. Of course, this is a cherry-picked example used to highlight how well DLSS works compared to ‘inferior’ upscaling methods, and we can put it to the test in any compatible game of our choice.

To that end, we fired up DLSS-optimised Control and Death Stranding and took ICAT for a spin.

What you’re looking at is a side-by-side comparison of Control running with NIS and DLSS, both set to Balanced mode, at 4K resolution. Framerates are approximately 65 per cent higher than with native rendering, which is helpful as the game goes from smooth to lush on a GeForce RTX 3080.

A quick glance at the two sides doesn’t tell you much; they look rather similar, and you’d be forgiven for thinking the rendering is exactly the same. As always, the devil is in the details, and we can tease out the Horned One by zooming right into the slider line.

More Detail Retained Within DLSS

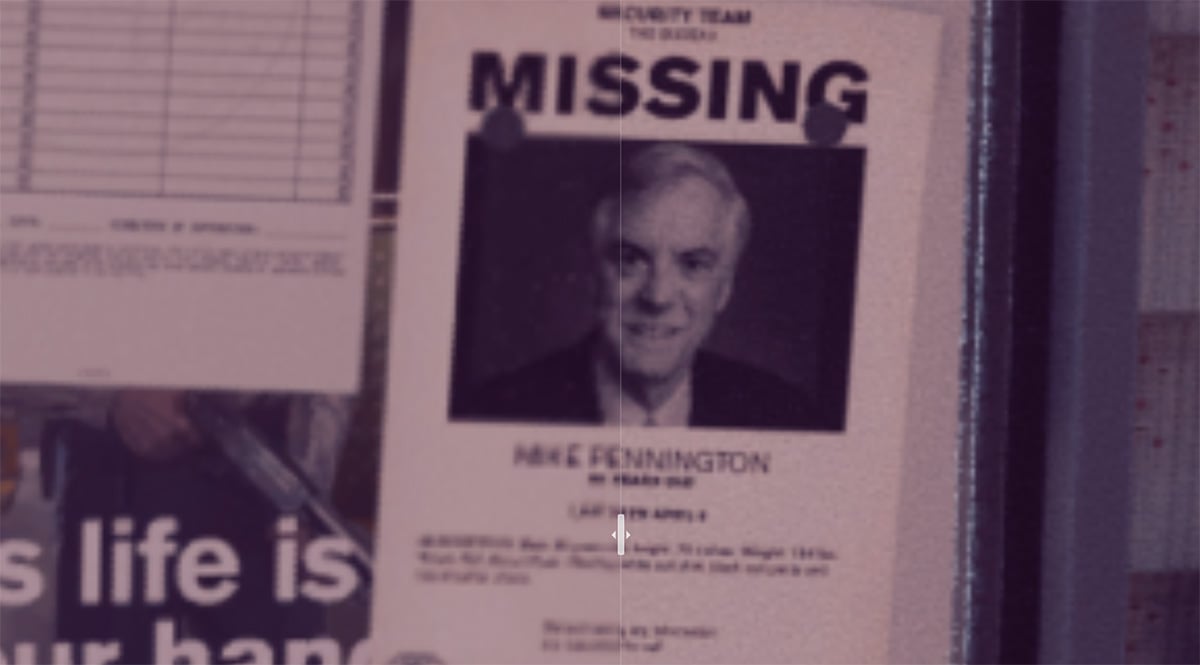

Here the difference between NIS (left) and DLSS (right) is more readily apparent. Notice how the writing is clearer in the DLSS-upscaled image, so much so that it’s straightforward to discern the missing person’s surname. The image still isn’t as crisp as native, especially as we’re using Balanced mode for a solid framerate, but the trade-off is acceptable with DLSS and not so much with a spatial upscaler such as NIS.

Now we have Death Stranding split between DLSS and NIS Performance modes.

A cursory glance doesn’t reveal obvious differences between the screenshots, so we need to zoom into areas of interest to delineate image-quality (IQ) changes.

For reference, native 4K performance is around 95fps here; DLSS Performance lifts it to 175fps or so, and NIS Performance cranks it up further, to 200fps. That’s a healthy boost from both image-upscaling technologies.
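Percentages and frame times put those figures in context. Using the approximate numbers above, this small Python helper shows DLSS Performance delivering roughly an 84 per cent uplift and NIS Performance roughly 111 per cent, yet in frame-time terms NIS saves only about 0.7ms per frame over DLSS (5.0ms versus about 5.7ms). The function names are illustrative.

```python
def uplift_percent(base_fps: float, new_fps: float) -> float:
    """Framerate gain relative to a baseline, in per cent."""
    return (new_fps / base_fps - 1.0) * 100.0


def frame_time_ms(fps: float) -> float:
    """Time spent on each frame, in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    native = 95.0  # approximate native 4K framerate quoted above
    for label, fps in [("DLSS Performance", 175.0), ("NIS Performance", 200.0)]:
        print(f"{label}: +{uplift_percent(native, fps):.1f}% "
              f"({frame_time_ms(fps):.1f} ms/frame vs {frame_time_ms(native):.1f} ms native)")
```

Frame time is the fairer yardstick here: beyond a certain framerate, each extra frame per second buys a smaller saving per frame, so NIS’s lead over DLSS is smaller than the raw fps gap suggests.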

Zooming in is what reveals the differences, of course: the NIS image is softer and less defined than DLSS.

DLSS still isn’t some IQ silver bullet, though. Running in Performance mode still hurts absolute image quality compared to native; in the hyperlinked example, detail in the railings is lost. It gets much closer to native as we step up to Balanced and then Quality, albeit with those modes offering a smaller framerate increase.

The elephant in the room is that DLSS has to be added to games by the developer, and it’s very likely that certain AAA titles more closely aligned with AMD and FSR will miss out. This is exactly why NIS exists.

The Wrap

Our first foray into Nvidia’s ICAT software shows it’s useful, offering a good foundation on which to illustrate image-quality differences between competing spatial and temporal upscalers. Poring over screenshots is a start, but ICAT’s raison d’être is to highlight the limitations certain upscalers show in motion, shimmering being the most obvious example, because gamers play games, not screenshots.

Nvidia is making ICAT available for public use, opening up testing for your own games, and we’ll add a link as soon as it becomes available.