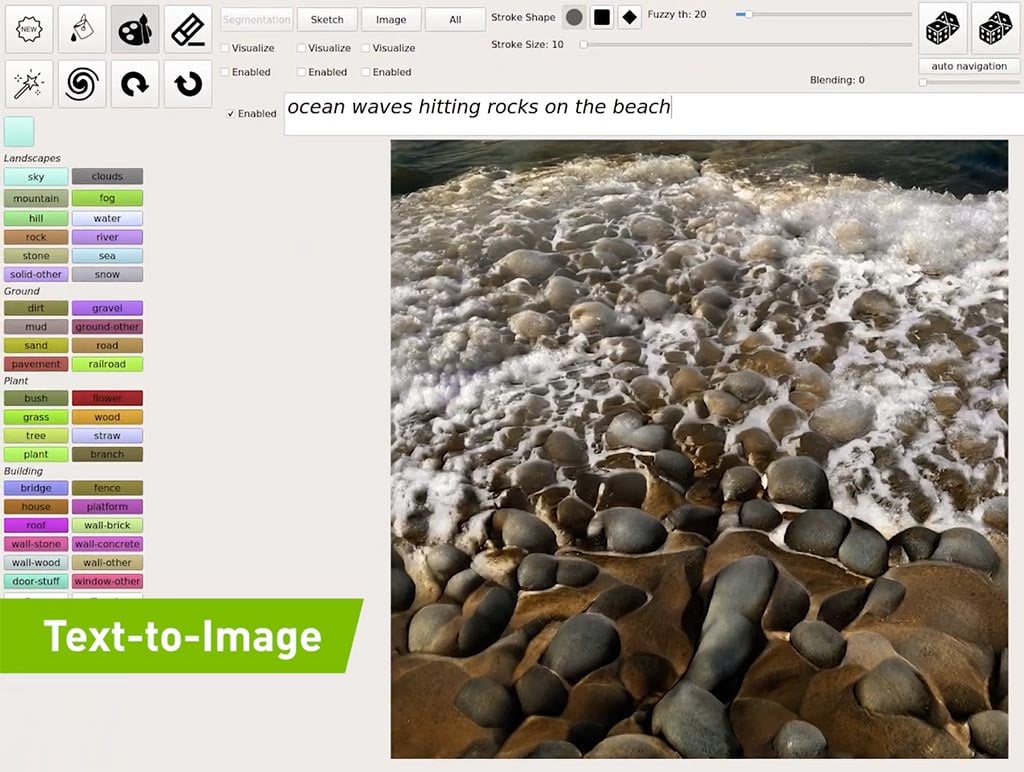

Nvidia has demonstrated the latest iteration of its GauGAN AI art application. GauGAN2 takes a few words or a short phrase as input and, from this tiny seed, can generate a “photorealistic masterpiece.” It also leaves room for flair and creativity: users can then tweak the image with a “smart paintbrush” using descriptors such as sky, tree, rock or river.

We are all familiar with the saying that a picture is worth a thousand words, but thanks to modern AI we can almost turn that phrase on its head. With GauGAN2, a simple phrase like “sunset at a beach” generates an attractive scene in real time. The scene can then be customised further by adding an adjective, e.g. “sunset at a rocky beach,” and the change appears in real time.

GauGAN2 doesn’t limit your creativity to a canned phrase. Once your basic scene is set, a single click generates a segmentation map that provides a high-level outline of the scene’s constituents. From then on, users can switch to drawing and tweak the rough but realistic sketches using labels like sky, clouds, mountain, hill, fog, water, sea, snow, road, bridge, wall and many more.

How does this all work? The “GAN” in GauGAN2 is short for Generative Adversarial Network, a type of network that can create new images from existing ones. Here the “old” source material is 10 million high-quality landscape images that were described and processed by Nvidia’s Selene supercomputer, an Nvidia DGX SuperPOD-based system.

Once the computer has learned how to describe images, the process can be flipped around so that text strings generate images. Nvidia adds further refinements to GauGAN2 in the form of segmentation maps and modifications via brushstrokes or styles.

Anyone with an Nvidia RTX GPU can download and test GauGAN technology using the Nvidia Canvas app. GauGAN2 looks like another great tool with which Nvidia and its users can rapidly create and populate Omniverse and metaverse scenes.